
Configurable Low Latency Streaming


This article was originally published on July 21, 2022.

Editor’s Note: This content is contributed by

- Rob Green, Senior Manager - Pro AV, Broadcast, and Consumer at AMD

- Gordon Lau, Systems Architect - Pro AV, Broadcast, and Consumer at AMD

Quality of experience and the need for low latency encoding & decoding

Various multimedia applications need very low end-to-end latency so that the quality of experience (QoE) is kept high and the application remains useful. You’ll probably have been in a video conference where there is a noticeable delay between you speaking and the receiver hearing it – it creates a poor experience and makes it difficult to hold a conversation. Or imagine a live football broadcast where you hear your neighbors cheering for a goal while they watch the game on over-the-air TV, and then you frustratingly see the same goal 30 seconds later because you’re watching an Internet stream. For live coverage or interactivity, keeping latency as low as possible is a must. Similar low latency streaming is required during live events, news gathering, e-sports/game streaming, and KVM (keyboard, video, mouse) control systems.

Defining latency

In this environment, latency is a system metric that describes the time taken to capture, encode, transport, decode, and display a media stream (Figure 1). Modern professional media systems can chain multiple encode/decode cycles, each of which adds to the overall end-to-end latency. For example, a TV camera's output may be encoded for wireless transmission to an outside broadcast truck, where it is received, decoded, and re-encoded for transmission back to the studio. At the studio it is decoded again, and it will likely go through one more encode/decode cycle for distribution to viewers at home. Because these delays accumulate, keeping latency as low as possible at each stage is key to high QoE.
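Because the hops in such a chain run in series, their delays simply add. The minimal sketch below illustrates that arithmetic for the camera, truck, studio, and home chain just described. The `Hop` class, its fields, and every millisecond value are hypothetical placeholders chosen for illustration, not measured or published figures.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One encode/decode cycle in the chain (all values in milliseconds)."""
    name: str
    codec_ms: float    # capture + encode + decode + display for this hop
    network_ms: float  # transport delay between this hop's encoder and decoder


def end_to_end_ms(hops: list[Hop]) -> float:
    """Hops run in series, so their latencies simply add up."""
    return sum(h.codec_ms + h.network_ms for h in hops)


# Hypothetical numbers, used only to illustrate how the delays accumulate.
chain = [
    Hop("camera -> OB truck", codec_ms=35.0, network_ms=5.0),
    Hop("OB truck -> studio", codec_ms=35.0, network_ms=20.0),
    Hop("studio -> viewers", codec_ms=133.0, network_ms=50.0),
]

for hop in chain:
    print(f"{hop.name}: {hop.codec_ms + hop.network_ms:.0f} ms")
print(f"end to end: {end_to_end_ms(chain):.0f} ms")  # prints "end to end: 278 ms"
```

Even with a fast first hop, a single slow stage later in the chain dominates the total, which is why every stage needs to be kept low latency.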

The Zynq® UltraScale+™ MPSoC EV family from AMD-Xilinx includes an integrated H.264/H.265 video codec unit (VCU) and offers simultaneous encode and decode of 4K60 4:2:2 10-bit video (or 4K30 4:4:4 10-bit) or an equivalent number of SD/HD streams that aggregate to 4K60. We define our latency metrics as the time taken to capture, encode, decode and display through the Zynq UltraScale+ MPSoC EV devices. We don’t include the IP network delay as this is highly dependent on network configuration and many factors outside our control, so it’s up to the user to ensure that this link is also as low latency as possible!
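To put the VCU's workload in perspective, the following rough calculation gives the uncompressed bitrate of 4K60 4:2:2 10-bit video. It assumes 4K means 3840×2160, counts active pixels only (no blanking, audio, or ancillary data), and uses two compression ratios that are purely illustrative; `raw_bitrate_bps` is a hypothetical helper, not part of any AMD-Xilinx tool.

```python
def raw_bitrate_bps(width: int, height: int, fps: float,
                    bit_depth: int, chroma: str) -> float:
    """Uncompressed active-video bitrate in bits per second.

    Ignores blanking intervals, audio, and ancillary data.
    """
    # Average number of samples per pixel for common chroma formats.
    samples_per_pixel = {"4:2:0": 1.5, "4:2:2": 2.0, "4:4:4": 3.0}[chroma]
    return width * height * fps * bit_depth * samples_per_pixel


raw = raw_bitrate_bps(3840, 2160, 60, 10, "4:2:2")
print(f"raw 4K60 4:2:2 10-bit: {raw / 1e9:.2f} Gb/s")  # 9.95 Gb/s

# Illustrative ratios only: a light mezzanine-style ratio vs. a heavy H.26x-style one.
for ratio in (10, 200):
    print(f"at {ratio}:1 -> {raw / ratio / 1e6:.1f} Mb/s")
```

With these illustrative ratios, the lighter compression still needs twenty times the link capacity of the heavier one, which is the trade-off behind using H.264/H.265 on bandwidth-constrained links.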

Figure 1 End-to-end latency

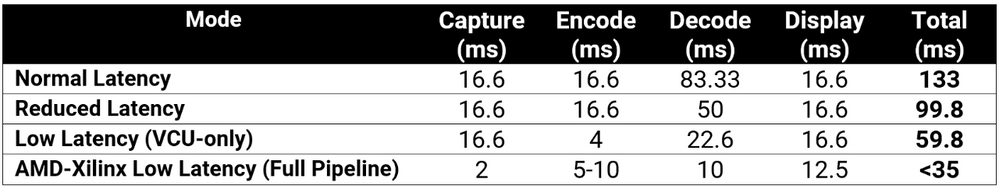

AMD-Xilinx has four latency modes that can be configured on the device. “Normal latency” of 133ms is the typical use case of frame-based capture and frame-based display, and it supports all the features available for the VCU: different GOP structures, picture types, buffer settings, etc. “Reduced latency” of <100ms is essentially encoding with no frame re-ordering, that is, without B-frames (bidirectionally predicted frames that depend on information from both previous and subsequent frames). This lets the decoder use a smaller buffer, which reduces decode latency. “Low latency (VCU-only)” still uses frame-based capture and display, but operates the VCU encoder and decoder in slice mode. In slice mode, a Sync IP block in the programmable logic (PL) tells the VCU when a complete slice (assuming 8 slices per frame) is available, so the VCU can start encoding or decoding immediately instead of waiting for a full frame; this reduces overall latency to <60ms. The final mode, “AMD-Xilinx Low Latency (full pipeline),” uses slices across the entire capture, encode, decode, and display pipeline, bringing overall latency down to <35ms with H.264/AVC (and with H.265/HEVC, <30ms is possible!). This mode makes the Zynq UltraScale+ VCU one of the lowest-latency professional H.264/H.265 codecs in the industry.
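The arithmetic behind slice mode can be sketched as follows. At 60 frames/sec a frame period is about 16.7ms, so with the 8 equal slices per frame assumed above, a new slice becomes available roughly every 2.1ms. The sketch also records the quoted mode figures in a small table and picks a mode for a given latency budget. `LatencyMode`, `pick_mode`, `input_wait_ms`, and the ordering of modes from least to most constrained are our own illustrative assumptions, not an AMD-Xilinx API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LatencyMode:
    name: str
    max_latency_ms: float  # figure quoted in the article at 60 fps
    slice_stages: str      # pipeline stages that work on slices rather than frames


# ASSUMPTION: ordered from least to most constrained configuration.
# The full-pipeline figure is the H.264/AVC one (H.265/HEVC can reach <30 ms).
MODES = [
    LatencyMode("Normal latency", 133.0, "none"),
    LatencyMode("Reduced latency", 100.0, "none"),
    LatencyMode("Low latency (VCU-only)", 60.0, "encode, decode"),
    LatencyMode("AMD-Xilinx Low Latency (full pipeline)", 35.0,
                "capture, encode, decode, display"),
]


def pick_mode(budget_ms: float) -> Optional[LatencyMode]:
    """Return the least constrained mode whose quoted latency fits the budget."""
    for mode in MODES:
        if mode.max_latency_ms <= budget_ms:
            return mode
    return None


def input_wait_ms(fps: float, units_per_frame: int) -> float:
    """Time until one unit (a whole frame, or one of N equal slices) is captured."""
    return 1000.0 / fps / units_per_frame


print(f"wait for a full frame: {input_wait_ms(60, 1):.2f} ms")  # 16.67 ms
print(f"wait for one slice:    {input_wait_ms(60, 8):.2f} ms")  # 2.08 ms
print(pick_mode(50.0).name)  # AMD-Xilinx Low Latency (full pipeline)
```

Treating the quoted figures as upper bounds keeps the selection conservative: a mode is only chosen if its worst-case figure fits inside the budget.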

It’s important to note that the figures stated are for 60 frames/sec and that the latency numbers would need to be recalculated for lower frame rates.
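One rough way to estimate figures at other frame rates is to assume the latency stays constant when measured in frame periods, so it scales by 60 divided by the new frame rate. This is a simplifying assumption of ours, not AMD-Xilinx's recalculation method: real pipelines include stages that do not scale this way, so actual numbers should be measured on hardware. The function names are hypothetical.

```python
def frames_of_latency(latency_ms: float, fps: float) -> float:
    """Express a latency as a number of frame periods at the given frame rate."""
    return latency_ms * fps / 1000.0


def rescale_latency_ms(latency_ms_at_60: float, target_fps: float) -> float:
    """First-order estimate of a 60 fps latency figure at another frame rate.

    ASSUMPTION: every stage's delay is proportional to the frame period,
    so the latency stays constant in frames. Real systems only approximate this.
    """
    return latency_ms_at_60 * 60.0 / target_fps


print(f"{frames_of_latency(35.0, 60.0):.1f} frame periods at 60 fps")  # 2.1
for fps in (60, 50, 30, 24):
    print(f"{fps} fps: ~{rescale_latency_ms(35.0, fps):.1f} ms")
```

Under this assumption, a <35ms figure at 60 frames/sec would become roughly 70ms at 30 frames/sec, which illustrates why the published numbers cannot simply be reused at lower frame rates.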

Table 1 AMD-Xilinx VCU latency modes

Want to get started with configurable low-latency streaming?

AMD-Xilinx has a comprehensive suite of VCU targeted reference designs (TRDs) that provide all the sources, project files, and recipes required to recreate system-level designs for evaluating connectivity, processing, and codec components together. Several design modules support our low latency modes, and all of them can be implemented quickly on the Zynq UltraScale+ MPSoC ZCU106 Evaluation Kit.

| Design Module | Description |
|---|---|
| Low Latency PS DDR NV12 HDMI audio video capture and display | HDMI audio video design to showcase ultra-low latency support using Sync IP, encoding and decoding with PS DDR for NV12 format |
| Low latency PL DDR HDMI video capture and display | HDMI design to showcase ultra-low latency support using Sync IP, encoding with PS DDR and decoding with PL DDR |
| Low latency PL DDR HLG SDI audio video capture and display | HLG/Non-HLG video + 2/8 channels audio capture and display through SDI, encoding from PS DDR and decoding from PL DDR. It also showcases ultra-low latency support using Sync IP |

Table 2 TRD design modules supporting VCU low latency modes

Whilst codecs often require a balance between video quality, compression ratio, bitrate, power consumption, cost, and latency, we’ve shown that low latency is vital for a variety of use cases to keep the user’s QoE as high as possible. Mezzanine video codecs such as JPEG XS, intoPIX Tico, VC-2, and Audinate Colibri offer much lower latency, providing visually lossless compression with just a few lines of video delay. However, they don’t offer the same high compression ratios as H.264/H.265, so they are aimed at different use cases where much more bitrate is available. Fortunately, all of these lightweight mezzanine codecs can also be supported in the PL of the Zynq UltraScale+ MPSoC alongside the VCU if needed.

Even so, the highly configurable nature of the VCU means that it can be adapted to suit a wide range of professional multimedia workflows. Our TRD can quickly demonstrate the low latency modes in action, with a variety of design modules supporting different video formats (HDR, 4:4:4), interfaces (HDMI, PCIe, Ethernet), and codec configurations. You can start your next streaming design with much of the hard work already done, leaving you free to focus on tailoring the platform to suit your needs. The Zynq UltraScale+ MPSoC EV family is unique in the industry in offering a professional H.264/H.265 codec integrated with programmable hardware, and whilst trade-offs always need to be made with any codec, it offers a superb balance that makes it the ideal choice for your next streaming design.
