This article was originally published on June 20, 2022.

Editor’s Note: This content is contributed by Rob Green, Senior Manager - Pro AV, Broadcast, and Consumer.

Multimedia streaming is one of the most complex system designs you’ll face. You have to integrate a vast array of audio/video (AV) connectivity standards with compression codecs, audio and video processing, synchronization, and IP network streaming, all while making the embedded software work seamlessly and reliably. That often means a team of people working on different aspects of the design, with plenty of collaboration to make everything work as expected.

Whether you’re new to streaming designs or experienced in multimedia but still coming to grips with FPGA and embedded SoC designs, AMD-Xilinx has invested heavily in the building blocks and example designs you’ll need to get a head start. The Zynq® UltraScale+™ MPSoC EV family includes an integrated 4K60 4:2:2 10-bit H.264/H.265 video codec unit (VCU) alongside the adaptive compute programmable logic (PL) available for AV interfacing and AV processing, as well as a multicore Arm® PS (processor subsystem) that handles the operating system, drivers, and high-performance peripherals. For prototyping most professional AV and broadcast applications, targeting the ZCU106 Evaluation Kit makes good sense because it has the most flexible connectivity options and the largest PL resources for your development work. So that’s the hardware sorted!
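
As a rough illustration of why an integrated codec matters, the sketch below estimates the uncompressed bitrate of 4K60 4:2:2 10-bit video. It is a back-of-the-envelope calculation under simplifying assumptions: it counts active pixels only (no blanking intervals, stride padding, or audio) and uses 2 samples per pixel for 4:2:2 and 1.5 for 4:2:0. The function name `raw_bitrate_gbps` is hypothetical and not part of any AMD-Xilinx tool.

```python
# Back-of-the-envelope uncompressed video bitrate.
# Assumption: active pixels only, no blanking, padding, or audio.

# Average samples per pixel for common chroma subsampling schemes.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def raw_bitrate_gbps(width: int, height: int, fps: float,
                     subsampling: str, bit_depth: int) -> float:
    """Return the uncompressed active-video bitrate in Gb/s."""
    bits_per_pixel = SAMPLES_PER_PIXEL[subsampling] * bit_depth
    return width * height * fps * bits_per_pixel / 1e9


if __name__ == "__main__":
    rate = raw_bitrate_gbps(3840, 2160, 60, "4:2:2", 10)
    print(f"4K60 4:2:2 10-bit: {rate:.2f} Gb/s")  # prints 9.95 Gb/s
```

At roughly 10 Gb/s of raw active video, a single 4K60 4:2:2 10-bit stream would nearly saturate a 10 Gb Ethernet link on its own, which is why compressing it with the VCU before IP streaming is so valuable.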

Introducing our Targeted Reference Design for streaming

For the design itself, AMD-Xilinx provides a targeted reference design (TRD) specifically for the VCU and the ZCU106 on our Wiki (Figure 1).

Figure 1. AMD-Xilinx VCU TRD

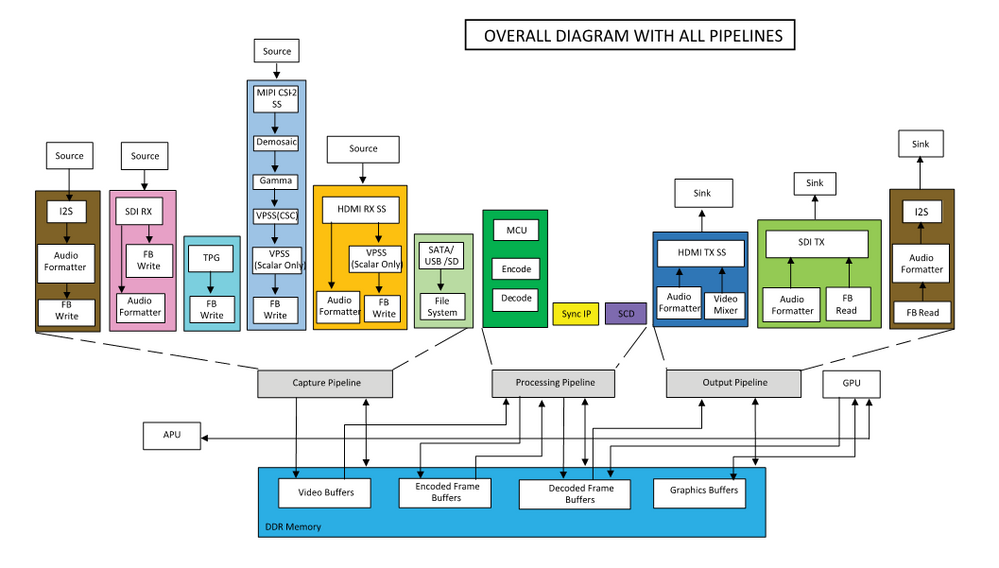

The TRD is built in a modular fashion: the individual IP and system infrastructure blocks are integrated into different, fully validated design modules that represent most of the use cases we see in the market. These include complete capture, encode, decode, and display pipelines with a choice of AV connectivity, different DDR configurations (PS DDR and PL DDR), and different video formats. The aim is to provide a system that covers all, or most, of your requirements, with plenty of room to innovate and differentiate. The TRD also documents how we built these pipelines so that you can understand how the system works and then adapt it to your application’s needs.

| Design Module | Description |
| --- | --- |
| Multi Stream Video Capture and Display | Multi-stream design supporting HDMI-Rx, TPG, MIPI, HDMI-Tx, and DP, showcasing the capabilities of the VCU |
| Multi Stream Audio Video Capture and Display | I2S and HDMI audio with HDMI-Rx/MIPI-Rx video capture, showcasing the capabilities of the VCU |
| PL DDR HDR10 HDMI Video Capture and Display | HDMI design showcasing encoding with PS DDR and decoding with PL DDR; supports HDR10 static metadata for HDMI, including DCI4K |
| Low Latency PS DDR NV12 HDMI Audio Video Capture and Display | HDMI audio/video design showcasing ultra-low latency support using the Sync IP, with encoding and decoding from PS DDR in NV12 format |
| Low Latency PL DDR HDMI Video Capture and Display | HDMI design showcasing ultra-low latency support using the Sync IP, with encoding from PS DDR and decoding from PL DDR |
| Low Latency PL DDR HLG SDI Audio Video Capture and Display | HLG/non-HLG video plus 2- or 8-channel audio capture and display over SDI, encoding from PS DDR and decoding from PL DDR; also showcases ultra-low latency support using the Sync IP |
| YUV444 Full Chroma Video Capture and Display | Beta release demonstrating capture and display of YUV444 8-bit and 10-bit video through the VCU, up to 4Kp30, in YU24 and XV30 formats |
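
Because these design modules move video through PS DDR or PL DDR, frame-buffer size is a useful number to keep in mind. The sketch below computes an idealized per-frame size, plus the DDR write rate of a single capture stream, for NV12 (4:2:0, 8-bit) and YU24 (read here as 8-bit 4:4:4, an assumption based on the name). It assumes tightly packed samples with no stride alignment; real buffers, and especially packed 10-bit formats such as XV30, will generally be larger. The helper names are hypothetical.

```python
# Idealized frame-buffer sizes.
# Assumption: tightly packed samples, no stride alignment or line padding.
FORMATS = {
    # format name: (samples per pixel, bits per sample)
    "NV12": (1.5, 8),  # 4:2:0 chroma subsampling, 8-bit
    "YU24": (3.0, 8),  # 4:4:4 full chroma; 8-bit assumed from the name
}


def frame_bytes(width: int, height: int, fmt: str) -> int:
    """Bytes needed to store one frame in the given format."""
    samples_per_pixel, bits_per_sample = FORMATS[fmt]
    return int(width * height * samples_per_pixel * bits_per_sample / 8)


def write_rate_bytes_per_s(width: int, height: int, fmt: str, fps: int) -> int:
    """Bytes per second written to DDR by one capture stream.

    Counts only the capture write (one write per frame), not encoder reads.
    """
    return frame_bytes(width, height, fmt) * fps


if __name__ == "__main__":
    for fmt, fps in (("NV12", 60), ("YU24", 30)):
        size_mb = frame_bytes(3840, 2160, fmt) / 1e6
        rate_gb = write_rate_bytes_per_s(3840, 2160, fmt, fps) / 1e9
        print(f"{fmt} 4Kp{fps}: {size_mb:.1f} MB/frame, {rate_gb:.2f} GB/s write")
```

At 3840×2160, an NV12 frame comes to about 12.4 MB and an 8-bit 4:4:4 frame to about 24.9 MB, so capturing 4Kp30 at 4:4:4 writes the same number of bytes per second as capturing 4Kp60 in NV12.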

These are all provided as pre-built images that can be loaded directly onto the ZCU106 Evaluation Kit so that you can…well, evaluate them! The TRD also provides validated IP cores, source code, project files, BSP, drivers, and build flows so that you can tune the VCU performance parameters and arrive at an optimal encoder and decoder configuration for your specific use case. The TRD uses the Vivado® IP integrator (IPI) flow for building the hardware design and Xilinx PetaLinux tools for software design.

Besides offering access to the control software layer, the VCU TRD leverages the widely used GStreamer open source multimedia framework to assemble audio, video, and data elements into highly flexible multimedia pipelines. GStreamer abstracts away much of the complexity of the multimedia pipeline, so the TRDs can be run with just a few lines of GStreamer commands. It also lets you bring other GStreamer plug-ins from the open source community into the pipeline, and it provides a well-defined way to connect the TRD with your own AV design components.
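
To illustrate how compact a GStreamer description can be, here is a minimal Python sketch that assembles a capture, encode, and stream pipeline as a `gst-launch-1.0` style string. This is not the TRD’s own pipeline, and several details are assumptions: the VCU H.265 encoder is exposed as the gst-omx element `omxh265enc`, the capture device appears at `/dev/video0`, `target-bitrate` is interpreted in kbps (as in Xilinx VCU examples; confirm against your release), and the host address, port, and bitrate are placeholder values. The function name `vcu_stream_pipeline` is hypothetical. Check the TRD documentation for the exact elements and properties each design module uses.

```python
def vcu_stream_pipeline(device: str = "/dev/video0",
                        width: int = 3840, height: int = 2160, fps: int = 60,
                        target_bitrate: int = 60000,  # assumed kbps
                        host: str = "192.168.0.2",    # placeholder address
                        port: int = 5004) -> str:
    """Build a pipeline description: capture -> H.265 encode -> MPEG-TS over RTP/UDP."""
    elements = [
        # Capture raw NV12 frames from a V4L2 device.
        f"v4l2src device={device}",
        f"video/x-raw,format=NV12,width={width},height={height},framerate={fps}/1",
        # Hardware H.265 encode (element name assumed for a VCU-enabled image).
        f"omxh265enc control-rate=constant target-bitrate={target_bitrate}",
        "video/x-h265,profile=main,alignment=au",
        "h265parse",
        # Multiplex into MPEG transport stream and send over RTP/UDP.
        "mpegtsmux",
        "rtpmp2tpay",
        f"udpsink host={host} port={port}",
    ]
    # GStreamer links consecutive elements with " ! ".
    return " ! ".join(elements)


if __name__ == "__main__":
    print("gst-launch-1.0 " + vcu_stream_pipeline())
```

On the target, the same description string could be handed to `gst-launch-1.0` on the command line or to `Gst.parse_launch()` through PyGObject; swapping the source, encoder, or sink is a matter of changing one entry in the list.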

The VCU TRD is the result of a massive, multi-year investment by AMD-Xilinx in hardware and software to support the main professional compression use cases we see in broadcast, pro AV, and other markets. Highlights include the industry’s lowest latency for a professional H.264/H.265 codec, with a glass-to-glass delay (capture, encode, decode, and display) of under 35 ms. They also include the ability to handle scene changes effectively, to work under challenging network and bandwidth constraints, and to use machine learning for region-of-interest encoding, as well as new modes supporting HDR and 4:4:4 streaming. With comprehensive documentation and training available, the ZCU106 and the VCU TRD take much of the pain out of complex multimedia streaming designs.
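
To put the sub-35 ms glass-to-glass figure in context, it helps to express it in frame periods. The helper below is a simple arithmetic sketch (hypothetical function names), not a latency model of the VCU or the TRD.

```python
def frame_period_ms(fps: float) -> float:
    """Duration of one frame in milliseconds."""
    return 1000.0 / fps


def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Express an end-to-end latency as a number of frame periods."""
    return latency_ms / frame_period_ms(fps)


if __name__ == "__main__":
    for fps in (60, 30):
        frames = latency_in_frames(35.0, fps)
        print(f"35 ms at {fps} fps = {frames:.2f} frame periods")
```

At 60 fps one frame lasts about 16.7 ms, so 35 ms is roughly 2.1 frame periods: the entire capture, encode, decode, and display chain completes in just over two frame times.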
