4:4:4 Full Chroma Streaming for Video with Desktop Content

This article was originally published on July 14, 2022

Editor’s Note: This content is contributed by Rob Green, Senior Manager - Pro AV, Broadcast, and Consumer.

Seeing the world in full colour

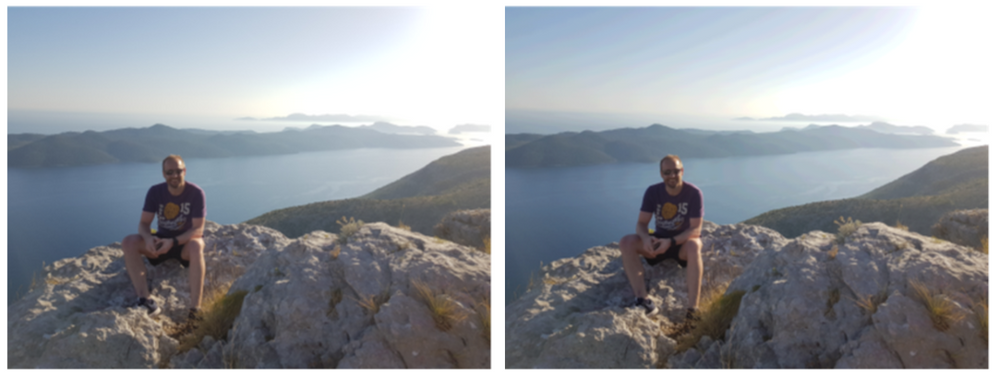

Video folks often refer to terms such as “4:2:2 10-bit”, “full 4:4:4” or “8-bit 4:2:0”, but what do these numbers mean? The 8 and 10 refer to the number of bits per colour component (bpcc): in 10-bit video, for example, 10 bits represent each of the red, green, and blue values of a pixel. That gives 1,024 levels per component instead of 256, so 10-bit has a vastly bigger palette to choose from than 8-bit (about 1.07 billion colours versus about 16.7 million). More bits per colour not only represent the scene more accurately in the digital domain, but also affect video quality in smooth transitions: fewer bits can introduce banding in gradients across the sky, as shown in Figure 1:

Figure 1 - (L) 10-bit image, (R) 8-bit image with "banding" artifacts

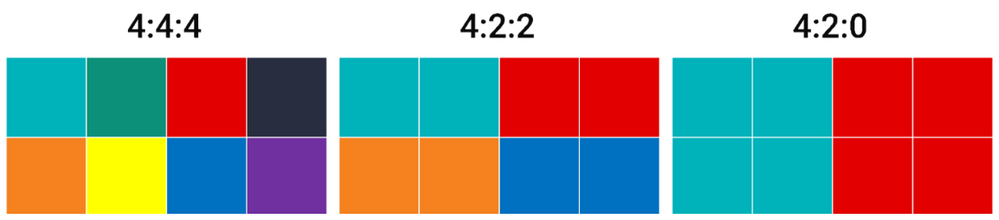
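The arithmetic behind bit depth and banding can be sketched in a few lines of Python. This is a minimal illustration, not part of any AMD-Xilinx tooling: the helper names are hypothetical, and the “sky” is assumed to be a gentle gradient rising from 60% to 70% intensity across a 3840-pixel-wide frame. Counting the distinct code values along that gradient shows how wide each flat band becomes at 8-bit versus 10-bit.

```python
def levels(bits: int) -> int:
    """Number of distinct code values per colour component."""
    return 2 ** bits


def palette_size(bits: int) -> int:
    """Total RGB colours when each of R, G and B uses `bits` bits."""
    return levels(bits) ** 3


def quantize(value: float, bits: int) -> int:
    """Map a normalized intensity in [0.0, 1.0] to an integer code value."""
    max_code = levels(bits) - 1
    return round(value * max_code)


def distinct_steps(width: int, start: float, end: float, bits: int) -> int:
    """Count distinct code values across a horizontal gradient `width` pixels wide.

    Fewer distinct values over the same width means wider flat bands,
    which the eye perceives as banding.
    """
    codes = {
        quantize(start + (end - start) * x / (width - 1), bits)
        for x in range(width)
    }
    return len(codes)


for bits in (8, 10):
    print(f"{bits}-bit: {levels(bits)} levels/component, "
          f"{palette_size(bits):,} colours")

# Hypothetical subtle sky gradient: 0.60 -> 0.70 intensity across 3840 pixels.
for bits in (8, 10):
    steps = distinct_steps(3840, 0.60, 0.70, bits)
    print(f"{bits}-bit gradient: {steps} steps, ~{3840 / steps:.0f} px per band")
```

With roughly four times as many steps across the same width, each 10-bit band is about a quarter as wide, which is why the transition looks smooth rather than stepped.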

OK, so that’s bit depth sorted, but what about 4:4:4, 4:2:2, and 4:2:0? These refer to chroma subsampling, another way to exploit human visual perception to discard information and reduce bandwidth. Images and video can be represented either as mixtures of the primary colours Red, Green, and Blue (RGB), or as brightness (known as luma or luminance) and colour (chroma) components. Our eyes are more sensitive to luma than to chroma, so we keep all the luma samples but subsample the colour, reducing bandwidth without a huge loss in quality (Figure 2). In 4:2:2, chroma resolution is halved horizontally; in 4:2:0, it is halved both horizontally and vertically.
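The J:a:b numbers translate directly into how many samples each pixel costs. The sketch below assumes the common reading of the notation: a reference region J pixels wide and two rows tall, with a chroma samples per component in the first row and b additional chroma samples in the second row (b = 0 means the second row reuses the first row’s chroma). The function names are illustrative only.

```python
from fractions import Fraction


def samples_per_pixel(j: int, a: int, b: int) -> Fraction:
    """Average samples per pixel for J:a:b chroma subsampling.

    j - luma samples per row (every pixel keeps its own luma sample)
    a - chroma samples per component (Cb, Cr) in the first row
    b - additional chroma samples per component in the second row
    """
    luma = 2 * j          # two rows of full-resolution luma
    chroma = 2 * (a + b)  # Cb and Cr, each carrying a + b samples
    return Fraction(luma + chroma, 2 * j)


def bits_per_pixel(j: int, a: int, b: int, bit_depth: int) -> Fraction:
    """Average bits per pixel at the given bit depth per sample."""
    return samples_per_pixel(j, a, b) * bit_depth


schemes = {"4:4:4": (4, 4, 4), "4:2:2": (4, 2, 2), "4:2:0": (4, 2, 0)}
for name, jab in schemes.items():
    print(f"{name}: {float(samples_per_pixel(*jab))} samples/pixel, "
          f"{float(bits_per_pixel(*jab, 10))} bits/pixel at 10-bit")
```

At the same bit depth, 4:2:2 carries two thirds of the data of 4:4:4, and 4:2:0 carries half.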

Saving bandwidth is a good thing though, so why do we need 4:4:4 at all if our eyes and brains don’t really care about those extra chroma samples?

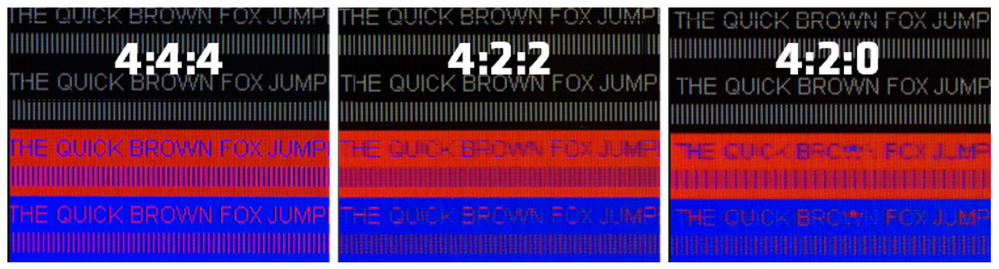

Well, natural video tolerates this data loss well, because our eyes and brains have evolved to perceive natural scenes. Viewers will be hard-pressed to notice any difference between 4K 4:4:4 and 4K 4:2:0 versions of the same scene, so discarding data through chroma subsampling has minimal impact there. The same is not true for graphics, and particularly for text-based desktop content such as spreadsheets. Text strokes are often only one or two pixels wide, so under 4:2:0 their colour is averaged with the background, and certain combinations of font and background colour are badly degraded. Look at the test images below (Figure 3), and you’ll see that chroma subsampling can make text virtually unreadable for certain colour combinations:

So being able to transmit and receive 4:4:4 is highly desirable in applications where desktop content and graphics are prominent, whether sent separately from, or along with, “natural” video content. Example applications include: KVM systems, which extend keyboard, video, and mouse signals between user terminals and remote or multiple PCs; control rooms that monitor surveillance footage with clocks and other graphical overlays; and collaboration systems that combine video headshots of users with shared office documents.

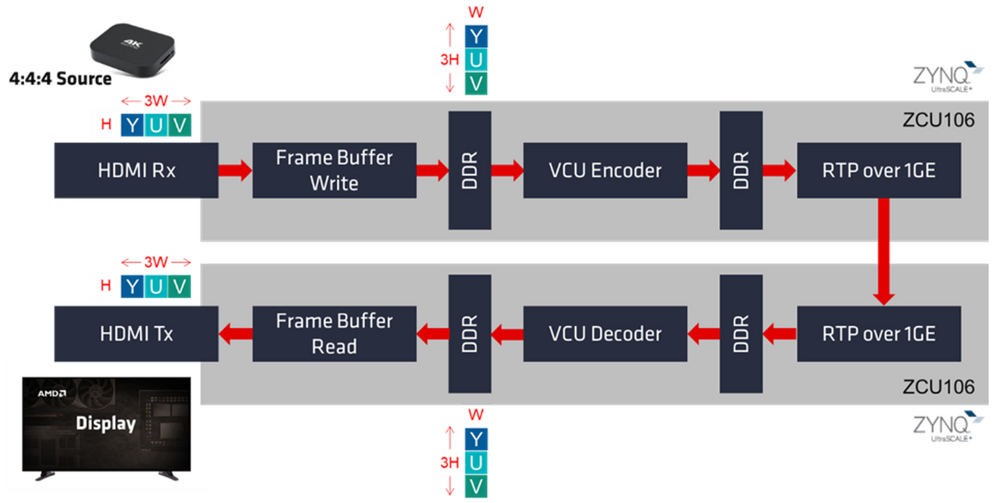
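Why thin coloured strokes suffer can be reproduced numerically. The sketch below is a simplified model, not how the VCU or any particular encoder works: it uses full-range BT.709 luma weights with no offsets or integer quantization, averages Cb/Cr over each 2x2 block, and reuses that average for all four pixels on reconstruction (real systems use various filters and chroma siting). The test image, a one-pixel-wide red stroke on a blue background, and all function names are hypothetical.

```python
# Illustrative BT.709 luma weights; real pipelines also apply offsets,
# limited-range scaling and integer quantization, which are omitted here.
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def rgb_to_ycbcr(r, g, b):
    """Split an RGB pixel into luma (Y) and two colour-difference values."""
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2 * (1 - KB))
    cr = (r - y) / (2 * (1 - KR))
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Invert rgb_to_ycbcr, rounding and clamping to 8-bit RGB."""
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return tuple(max(0, min(255, round(c))) for c in (r, g, b))


def roundtrip_420(image):
    """Simulate 4:2:0: keep every luma sample, but share one averaged Cb/Cr
    pair across each 2x2 block. `image` is a list of rows of (R, G, B)
    tuples with even width and height."""
    h, w = len(image), len(image[0])
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    out = [[None] * w for _ in range(h)]
    for by in range(0, h, 2):
        for bx in range(0, w, 2):
            block = [ycc[y][x] for y in (by, by + 1) for x in (bx, bx + 1)]
            cb = sum(p[1] for p in block) / 4
            cr = sum(p[2] for p in block) / 4
            for y in (by, by + 1):
                for x in (bx, bx + 1):
                    # Full-resolution luma, block-averaged chroma
                    out[y][x] = ycbcr_to_rgb(ycc[y][x][0], cb, cr)
    return out


RED, BLUE = (255, 0, 0), (0, 0, 255)
# Blue background with a 1-pixel-wide red vertical stroke in column 1,
# standing in for the stem of a red character on a blue background.
image = [[BLUE, RED, BLUE, BLUE] for _ in range(2)]
result = roundtrip_420(image)
print("stroke:", result[0][1], "neighbour:", result[0][0], "far background:", result[0][3])
```

In this model the red stroke comes back as roughly (145, 18, 145) and the blue pixel beside it as roughly (110, 0, 110): both drift towards the same purple, so the stroke loses most of its colour contrast. The block with no edge in it (columns 2 and 3) is unchanged, which is why flat natural-scene regions survive 4:2:0 while text does not.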

AMD-Xilinx has a long-established streaming device, the Zynq® UltraScale+™ MPSoC, which combines a multi-core Arm®-based processing subsystem; programmable logic for implementing AV and Ethernet interfaces and real-time video processing; and, in the EV family, an integrated, hardened H.264/H.265 codec for video compression. The codec offers professional-quality streaming of up to 4K60 at 4:2:2 10-bit, making it well suited to broadcasting the natural video scenes discussed earlier. We have now extended the capabilities of the device and its codec to support 4:4:4 streaming: with the release of a new 4:4:4 full chroma mode for the integrated H.264/H.265 video codec unit (VCU), we can now support transmission and reception of full chroma content, as shown in Figure 4.

An uncompressed 4K stream is received over HDMI 2.0 and written into memory. The YUV planes are then transposed to enable 4:4:4 encoding in the VCU, before RTP packetization and delivery over 1Gb Ethernet. This technique has some minor drawbacks. Transposing the YUV planes makes the streaming technique proprietary, so the stream can’t be decoded by a standard H.264/H.265 decoder: the AMD-Xilinx VCU with full chroma mode is needed at each end of the link. In pro AV, many systems and networks are closed, so requiring specific equipment at both Tx and Rx nodes is not unusual. The technique also limits the maximum resolution and frame rate to 4K30, rather than the full 4K60 supported in 4:2:2 mode.
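A quick bandwidth estimate shows why the 4K stream must be compressed before it can travel over 1Gb Ethernet. This sketch assumes “4K” means 3840x2160, counts active pixels only (no blanking), and ignores RTP/UDP/IP overhead, so real links need somewhat more headroom than these figures suggest. The helper name and the format list are illustrative.

```python
def raw_bit_rate(width, height, fps, samples_per_pixel, bit_depth):
    """Uncompressed video payload in bits per second (active pixels only,
    ignoring blanking, packet headers and any padding)."""
    return width * height * fps * samples_per_pixel * bit_depth


LINK_BPS = 1_000_000_000  # nominal 1 Gb Ethernet line rate

# 4:4:4 carries 3 samples per pixel, 4:2:2 carries 2.
formats = {
    "4K30 4:4:4 8-bit": raw_bit_rate(3840, 2160, 30, 3, 8),
    "4K30 4:4:4 10-bit": raw_bit_rate(3840, 2160, 30, 3, 10),
    "4K60 4:2:2 10-bit": raw_bit_rate(3840, 2160, 60, 2, 10),
}

for name, bps in formats.items():
    print(f"{name}: {bps / 1e9:.2f} Gb/s raw, "
          f"needs at least {bps / LINK_BPS:.1f}:1 compression for 1GbE")
```

Even the lightest of these, 4K30 4:4:4 8-bit at about 5.97 Gb/s, is roughly six times the nominal 1GbE line rate before any protocol overhead.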

AMD-Xilinx has created a collection of VCU targeted reference designs (TRDs) that provide all the required sources, project files, and recipes to recreate system-level designs, so you can evaluate connectivity, processing, and codec components together and get a jump-start on tailoring them to your specific application requirements. One of these TRD modules supports the new 4:4:4 full chroma mode on the Zynq UltraScale+ MPSoC ZCU106 Evaluation Kit. You can download it and start working with it straight away!

| Design Module | Description |
|---|---|
| | VCU-based HDMI/DisplayPort video design to highlight YUV444 8-bit and 10-bit functionality |

* Linked to Vivado 2022.1. Check out the Zynq UltraScale+ MPSoC TRD home page for the latest versions.

The ability to stream 4:4:4 content through the VCU makes it attractive for KVM, control room, and other applications that use desktop graphics. Of course, H.264/H.265 isn’t the only way to stream such content: lightweight mezzanine codecs such as JPEG XS and Colibri offer 4:4:4 at higher quality and lower latency. However, they don’t offer the same compression ratios, so they can’t reduce bandwidth as much. The wonderful thing about building on adaptive computing platforms from AMD-Xilinx is that you can easily evaluate and choose the right codec, or run both H.264/H.265 and mezzanine compression in parallel for local and remote streaming. It really depends on your use case, but we’re here to help you decide!
