- AMD Community

General Discussions


To help people game better, I'll share how I set up my Ryzen, Windows 10, RX 5700 XT system for great gaming with a Samsung QLED and Dolby Atmos speakers, with no black screens. Also a response to Nvidia's "efficiency claims" and FreeSync.

I have a 2017 Samsung QLED Q7FN, a Ryzen 2700X, and some G.Skill Trident Z RAM.


Thank you very much for this.

I have the exact same TV as you and a 5700XT Gaming X and would like to know if you are experiencing the same issue as I am.

Are you able to select 10-bit 4:2:2 at 1440p 120 Hz in the AMD settings? I can only select 8-bit 4:4:4 RGB at 1440p 120 Hz. I was able to trick the TV into displaying HDR at 1440p 120 Hz by enabling HDR in The Division 2 and Alt+Tabbing a few times until, boom, the colors popped like they should.

This is not a link speed issue. Am I missing something? Is the TV supposed to govern this for me, so that I only select it manually in games?

In the post https://community.amd.com/message/2953854, another user reported the same issue with a Vega GPU.

Your feedback will be highly appreciated.


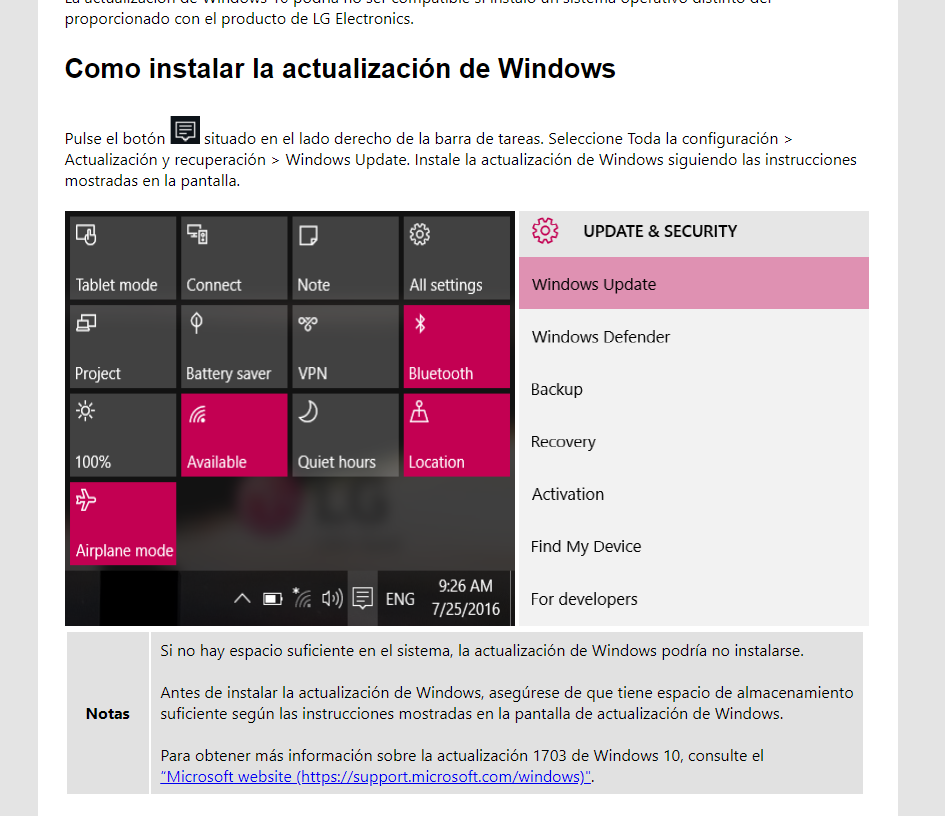

Hi, it isn't an issue with Vega or AMD or anything like that; it's an issue with the bandwidth limits of HDMI and DisplayPort ports and cables, and with what FreeSync is designed for. If you wish to game in HDR, you must first go to the Windows 10 display settings and toggle HDR and WCG colour on! Your display must be set to 4:2:0 or 4:2:2 10-bit in the AMD control panel; then start your game. Personally, I've tried enabling HDR mode in Windows settings and then setting my display to 10-bit colour, but it looks worse than my usual setup: I set my TV's colour space to "Native" so it auto-enhances the colour of all my content, set the TV source input to "Game Console", adjust the TV's picture settings however I like, and then set colour saturation in the Adrenalin software to 120% (for some games or Netflix I raise the saturation slider even higher). The only downside to playing an HDR game like this with HDR off is that some very dark details may be slightly crushed in the shadows. When you adjust your in-game gamma settings, you may find the darkest images nigh impossible to see. But it looks so much better, and it's almost never make-or-break for a game: you can raise the brightness in your display settings, or adjust the in-game brightness/gamma, to compensate.
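The exact math behind Adrenalin's saturation slider isn't described here, so treat the following only as a generic illustration of what a "120% saturation" boost does: each RGB channel is pushed away from the pixel's grey level (computed with BT.709 luma weights) by a factor, then clipped to the displayable range. The function name `boost_saturation` is hypothetical and is not AMD's implementation.

```python
def boost_saturation(rgb, factor=1.2):
    """Scale a colour's distance from its grey (luma) level.

    rgb: tuple of three floats in 0..1 (gamma-encoded, as sent to the display).
    factor: 1.0 leaves the colour unchanged; 1.2 is roughly "120% saturation".
    """
    r, g, b = rgb
    # BT.709 luma weights give the grey level the colour is pushed away from
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b
    out = []
    for c in (r, g, b):
        boosted = luma + (c - luma) * factor
        out.append(min(1.0, max(0.0, boosted)))  # clip to the displayable range
    return tuple(out)


if __name__ == "__main__":
    muted_teal = (0.30, 0.55, 0.55)
    print(boost_saturation(muted_teal))
```

Greys stay grey (their distance from luma is zero), while already-saturated colours hit the clip limits first, which is one reason heavy saturation boosts can flatten detail in bright, vivid areas.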

When I game, I disable every overlay I can, including the Uplay in-game overlay. Uplay complains at launch that some features may be missing, but screw Ubisoft, it works fine so far. I also disable the Adrenalin overlay.

From what I've seen, most TVs and displays are limited to either 4K 30 Hz or 4K 60 Hz at 4:4:4 8-bit by HDMI 2.0a or HDMI 2.0b.

You can set them to 4:2:0 compression mode, but you lose a little quality on some colours such as magentas and navy blues, and text becomes hard to read and possibly straining on the eyes, unless it's huge movie subtitles that are always thick yellow or white. You shouldn't use your PC desktop in 4:2:0 mode. Gaming in it is OK, on consoles and in general, because games are designed with big fonts and colours that don't strain your eyes, you sit far enough back, and it's not a high-quality output anyway.

Internet chat rooms and anything with purple or navy blue usernames, text, or small fonts will appear fuzzy and hard to read with 4:2:0 chroma subsampling, which is why you should use 8-bit 4:4:4 for the regular desktop instead. If your video player (Daum PotPlayer, MPC-HC, Kodi, or whatever) needs to output 10-bit HDR video, it will change its output colour space for you; you can enable fullscreen exclusive mode for video playback and use the D3D11 renderer.
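To see why thin coloured text smears under chroma subsampling, here is a minimal Python sketch on a single row of pixels: each pixel is converted to BT.709 Y'CbCr, each horizontal pair of pixels shares one averaged Cb/Cr value (the 4:2:2 pattern; 4:2:0 additionally shares chroma vertically), and the row is converted back and clipped. Full-range values and plain averaging are simplifying assumptions; real hardware uses its own filters.

```python
# BT.709 luma coefficients
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def rgb_to_ycbcr(r, g, b):
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2 * (1 - KB))
    cr = (r - y) / (2 * (1 - KR))
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


def subsample_row(pixels):
    """Keep full-resolution luma but share chroma between horizontal pixel pairs."""
    ycc = [rgb_to_ycbcr(*p) for p in pixels]
    out = []
    for i in range(0, len(ycc), 2):
        pair = ycc[i:i + 2]
        cb = sum(p[1] for p in pair) / len(pair)
        cr = sum(p[2] for p in pair) / len(pair)
        for y, _, _ in pair:
            rgb = ycbcr_to_rgb(y, cb, cr)
            out.append(tuple(min(1.0, max(0.0, c)) for c in rgb))  # clip to 0..1
    return out


if __name__ == "__main__":
    white, navy = (1.0, 1.0, 1.0), (0.0, 0.0, 0.5)
    row = [white, navy, white, white]  # a one-pixel navy stroke on white
    for before, after in zip(row, subsample_row(row)):
        print(before, "->", tuple(round(c, 3) for c in after))
```

The navy pixel loses much of its blue, and the white pixel next to it picks up a blue tint, which is the fuzzy coloured fringe seen on small text.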

I just recently got The Division 2 and haven't played it much. Like most games nowadays, such as Frostbite engine EA titles like BFV or SWBF2, it has been designed with forced anti-aliasing to cover up terrible, messy textures with join seams, artifacts everywhere, and shimmering where peak luminance clips. Basically the morons designed the whole game with AA on, and turning it off reveals a nightmare. That's a shame, because having AA on cripples performance and latency; even when I go to great pains to force it off, it looks so ugly that I must turn it back on.

Try gaming in borderless windowed mode like I do; maybe that will help fix your issues. Basically, with FreeSync I believe it's meant for RGB 4:4:4; if you want 10- or 12-bit, you must use YCbCr 4:2:0. There's not enough bandwidth for full 4:4:4 at 1440p 120 Hz with 12-bit. If you go down to 1080p, far less bandwidth is used, so you can squeeze in full 12-bit 4:4:4 RGB at 1080p 120 Hz. But it doesn't work at 1440p because there's not enough room in the bandwidth of the ports and cables. With HDMI 2.1 it should work, or perhaps with a DisplayPort 1.4 display, a DisplayPort 1.4 cable, and your 5700 XT's DisplayPort output (which goes up to 5K) there would be enough room, I'd guess, since it does 4K 120 Hz over DisplayPort. But our TVs don't have DisplayPort, and the converter adaptors cripple the bandwidth because they're cheap, old, slow HDMI 2.0b without FreeSync. An adaptor in between also breaks the chain: FreeSync needs a direct connection to the display with low latency, because it has to feed frames to the display differently than it would to an adaptor or another device like an audio receiver.
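The bandwidth argument can be checked with a little arithmetic. This Python sketch is a rough HDMI 2.0 budget check under the usual rules: a 600 MHz maximum TMDS clock; deep colour scales the TMDS clock by bits-per-component/8 for RGB/4:4:4; YCbCr 4:2:2 is carried at the 8-bit clock for up to 12 bits; and 4:2:0 halves the clock before deep-colour scaling. The pixel clocks are assumptions (594 MHz for 4K 60 Hz, 297 MHz for 1080p 120 Hz, and about 497.75 MHz for 1440p 120 Hz with CVT reduced blanking); a particular TV may use different timings, HDMI only defines 4:2:0 for certain modes, and the TV's EDID must still advertise a mode before the driver will offer it.

```python
HDMI20_MAX_TMDS_MHZ = 600.0  # HDMI 2.0 maximum TMDS clock


def tmds_clock_mhz(pixel_clock_mhz, bpc, sampling):
    """Approximate TMDS clock an HDMI 2.0 link needs for a video mode."""
    if sampling == "422":
        # 4:2:2 is packed into the same clock as 8-bit RGB (up to 12 bpc)
        return pixel_clock_mhz
    deep_colour = bpc / 8.0  # 10 bpc -> 1.25x, 12 bpc -> 1.5x
    if sampling == "444":
        return pixel_clock_mhz * deep_colour
    if sampling == "420":
        return pixel_clock_mhz / 2.0 * deep_colour
    raise ValueError(f"unknown sampling {sampling!r}")


def fits_hdmi20(pixel_clock_mhz, bpc, sampling):
    return tmds_clock_mhz(pixel_clock_mhz, bpc, sampling) <= HDMI20_MAX_TMDS_MHZ


# Assumed pixel clocks in MHz; a given TV's timings may differ
MODES = {
    "2160p60": 594.0,
    "1440p120": 497.75,  # CVT reduced-blanking estimate
    "1080p120": 297.0,
}

if __name__ == "__main__":
    for name, clk in MODES.items():
        for bpc, sampling in [(8, "444"), (10, "444"), (12, "444"), (10, "422"), (10, "420")]:
            verdict = "fits" if fits_hdmi20(clk, bpc, sampling) else "too fast"
            print(f"{name} {bpc}-bit {sampling}: "
                  f"{tmds_clock_mhz(clk, bpc, sampling):.1f} MHz, {verdict}")
```

Under these assumptions the numbers agree with the 1080p 12-bit 4:4:4 claim and show 10-bit RGB at 1440p 120 Hz exceeding HDMI 2.0; they also suggest 10-bit 4:2:2 at 1440p 120 Hz fits the link, which is the point debated next in this thread.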

Rather than waste time telling you my settings, I just snapped a few photos. Keep in mind I haven't optimized my in-game graphics settings very well yet, as I only recently got the game and didn't want to waste much time. If you want to improve speed, maybe lower the world/environment quality (I left it at High because I like games to look good), disable parallax, or lower vegetation, which may also help you spot enemies, though I like having at least Medium. Or you could try forcing TAA off by editing the game's settings .cfg file in your My Documents folder and maybe setting it to read-only, but that looks like barf.
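If you do edit a game's settings file and want to stop the game rewriting it, marking the file read-only is one way. A minimal Python sketch; the path is hypothetical (the actual file name and the TAA setting inside it aren't given here, so none are shown), so point it at wherever your game really keeps its settings.

```python
import os
import stat
from pathlib import Path


def make_read_only(path):
    """Clear every write bit on a file (on Windows this sets the read-only attribute)."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))


def make_writable(path):
    """Restore owner write permission so the file can be edited again."""
    os.chmod(path, os.stat(path).st_mode | stat.S_IWUSR)


if __name__ == "__main__":
    # Hypothetical location; check where your game actually stores its settings
    cfg = Path.home() / "Documents" / "My Games" / "ExampleGame" / "settings.cfg"
    if cfg.exists():
        make_read_only(cfg)
        print(f"{cfg} is now read-only")
```

Some games refuse to start or silently ignore a read-only settings file, so keep `make_writable` handy for undoing it.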

http://imgur.com/a/OW7aTpR

The reason HDR is good is that it gives you "subtle details": more shades in dark objects in shadow, and when a bright light such as a lamp or light bulb is shining, instead of a glowing white ball you can see details within the brightest parts of the light. In video content, a dark shadow or a black dog used to be a black object with no fine detail; now with HDR we can see subtle strands of black hair because the information isn't lost. But HDR makes video games look a little dull or less vibrant, and I'm an old man who loves old-school, highly saturated, vibrant, popping colours in my video games and video content. So for Netflix with the Windows 10 Store app, I play HDR content back in SDR mode and use my TV's settings and the AMD Adrenalin saturation to make things look super colourful and pretty. In my opinion it looks better than HDR, though it's less lifelike and maybe not as the director intended, but eh, the choice is yours.
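The "more shades" point is simple arithmetic: each extra bit per channel doubles the number of levels. A quick Python check of the 8-bit (SDR) versus 10-bit (HDR10) numbers that come up in this thread:

```python
def levels(bits_per_channel):
    """Distinct code values per colour channel."""
    return 2 ** bits_per_channel


def total_colours(bits_per_channel):
    """Distinct RGB combinations with three channels."""
    return levels(bits_per_channel) ** 3


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(f"{bits}-bit: {levels(bits)} levels per channel, "
              f"{total_colours(bits):,} colours")
```

That's 256 levels and about 16.7 million colours at 8-bit versus 1024 levels and about 1.07 billion colours at 10-bit, so 10-bit fits four steps between every pair of neighbouring 8-bit shades.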


Thanks for the response and attached images.

I do not believe that this is a bandwidth issue.

I do not want to use RGB 4:4:4 at 1440p 120 Hz, as I know this is not possible over HDMI due to its limited bandwidth. I want to use YCbCr 4:2:2, or even 4:2:0 if I can, while running 10 bpc at 1440p 120 Hz. This must be possible, as it uses less bandwidth than 4K 10-bit YCbCr 4:2:2 at 60 Hz (which works fine on my setup, but is limited to 60 Hz), as per Wikipedia: HDMI - Wikipedia
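The comparison above can be sanity-checked by counting active pixels per second and multiplying by the average bits per pixel for each chroma format. This Python sketch ignores blanking intervals (which add overhead to both modes), so it estimates the video payload only, not the exact HDMI link rate. It uses 20 bits per pixel for 10-bit 4:2:2 (10 for luma plus, on average, 10 for Cb and Cr at half horizontal resolution) and 15 for 10-bit 4:2:0.

```python
BITS_PER_PIXEL = {
    # (bits per component, chroma format) -> average bits per pixel
    (8, "444"): 24,
    (10, "444"): 30,
    (10, "422"): 20,  # full-resolution luma, chroma shared by pixel pairs
    (10, "420"): 15,  # chroma shared by 2x2 blocks
}


def active_pixel_rate(width, height, refresh_hz):
    return width * height * refresh_hz


def payload_gbps(width, height, refresh_hz, bpc, chroma):
    bpp = BITS_PER_PIXEL[(bpc, chroma)]
    return active_pixel_rate(width, height, refresh_hz) * bpp / 1e9


if __name__ == "__main__":
    qhd120 = payload_gbps(2560, 1440, 120, 10, "422")
    uhd60 = payload_gbps(3840, 2160, 60, 10, "422")
    print(f"1440p120 10-bit 4:2:2: {qhd120:.2f} Gbit/s")
    print(f"2160p60  10-bit 4:2:2: {uhd60:.2f} Gbit/s")
```

With these simplifications, 1440p 120 Hz carries about 442 million active pixels per second against about 498 million for 4K 60 Hz, roughly 11% fewer.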

As you can see in your screenshots, The Division 2 does not detect that it is connected to an HDR display (mine does if I Alt+Tab a few times). I managed to get it to run in HDR at 1440p 120 Hz, but that required some Alt+Tabbing (the AMD driver still only offered 8 bpc here, so the TV must have overridden the driver somehow). I can confirm the game colors looked way better. It was definitely running in HDR, and my TV's info screen showed UHD HDR. I could also feel that it was running at 120 Hz. It is as if the AMD software has no way to manually set the pixel format to anything other than 8 bpc when running at 1440p 120 Hz.

Have you been able to set your display to 10-bit 4:2:2 or 4:2:0 at 1440p 120 Hz?

I see you mentioned that you put Windows into HDR mode and then change the AMD control panel settings. Is this at 4K resolution?

"If you wish to game in HDR you must go to windows 10 display settings and toggle HDR and WCG colour enabled on first! you must have your display in 420 or 422 10bit in AMD control panel then start your game up"


Hello, I have an LG 34GL750 monitor. I recently activated HDR and it looks great in games, except when taking screenshots. Could it be the AMD drivers?

HDR ON

HDR OFF

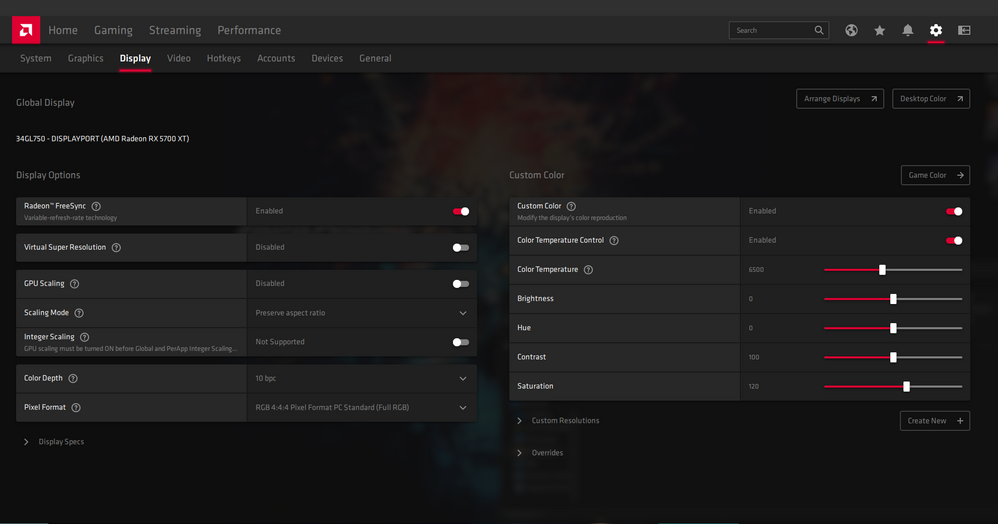

AMD DISPLAY

The screenshot shortcut (Win + Alt + Print Screen) generates two screenshots, one in JXR format and one in PNG, so it does recognize HDR.
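One reason an HDR capture can look wrong as an ordinary SDR image: an HDR frame holds brightness levels far above SDR white, and something has to squeeze them down. This Python sketch shows two assumed approaches for a single linear-light value in nits: plain clipping at an assumed 100-nit SDR white, and the extended Reinhard operator with an assumed 1000-nit peak. Neither is necessarily what Windows or Adrenalin does when saving a screenshot; both are illustrations, and the outputs are linear values before any gamma encoding.

```python
SDR_WHITE_NITS = 100.0  # assumed reference white for the SDR image


def clip_to_sdr(nits):
    """Naive: everything brighter than SDR white becomes pure white."""
    return min(nits / SDR_WHITE_NITS, 1.0)


def reinhard_to_sdr(nits, peak_nits=1000.0):
    """Extended Reinhard: compresses highlights so peak_nits maps to 1.0."""
    x = nits / SDR_WHITE_NITS
    w = peak_nits / SDR_WHITE_NITS
    return min(x * (1.0 + x / (w * w)) / (1.0 + x), 1.0)


if __name__ == "__main__":
    for nits in (5, 50, 100, 300, 600, 1000):
        print(f"{nits:>5} nits -> clip {clip_to_sdr(nits):.3f}, "
              f"reinhard {reinhard_to_sdr(nits):.3f}")
```

Clipping flattens every highlight between 100 and 1000 nits into the same white, which is one way a capture can end up looking blown out and overly contrasty; a tone curve keeps those highlights distinguishable at the cost of dimming them.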

Excuse my level of English...


Hi.

Sorry to disappoint you, but the monitor you're using is not very good at all.

You see, the LG 34GL750 says it's HDR10 compatible, but it only has about 300 cd/m² of brightness, which is 300 nits. For AMD gaming, I believe HDR10 needs DisplayHDR 400 at minimum, and those monitors are an overpriced waste of time: they have so little HDR that it's not visually impressive or worth the extra cost. You can often get a better-looking SDR monitor for the same price as a 400-nit HDR display, so you should save your money or get SDR. HDR adds cost to the display, so a cheap HDR monitor, though still expensive, is not a good buy at all, as it's usually less impressive or lower-specced than an SDR monitor of the same price.

Most 4K Blu-ray discs are mastered at 1000 nits. So if you want an HDR display, it's best to go all in and get HDR that's visibly better, with a full 1000 nits. Or buy a large quantum dot TV for a similar price: it may have 1500 or 2000 nits of HDR, support 200 Hz or 240 Hz at 1080p and 120 Hz at 4K, have FreeSync Ultimate/VRR, and be 55 inches instead of, say, a 38- or 32-inch 4K HDR 400-nit monitor. Here in Australia, you could have bought a Hisense TV in the Black Friday sales for the same price as a 4K monitor, with so much better specs and size it's absurd. Monitors are often overpriced garbage.

You are using an Nvidia G-Sync monitor with an AMD graphics card, so even if FreeSync works at all, it probably won't work very well. Your display has a max resolution of 2560x1080, so I'm assuming its claimed 144 Hz may require running at something like 1920x720 to achieve it? Or does it really do 144 Hz at 2560x1080, I wonder? If you are using AMD ReLive and the overlay to capture the screenshots or record video, you may need to contact AMD. If you are using Windows 10 to capture the images, you should contact Microsoft support. My guess is that if it's saving two images for HDR, that's like how HDR photography works in Photoshop: FALSE HDR made by layering multiple images, which creates blurry, oversaturated garbage (though some of it can be very pretty), and not exactly how HDR monitors work. HDR monitors do show additional colours, but that information is sent as metadata during playback or at the start of playback, and it instructs your display how to output the additional colours or brightness for the movie or game, so it can look as the director intended, hopefully. Perhaps in HDR desktop mode, the programs you use to take or view the screenshots do not support HDR capture. Try capturing screenshots and viewing them with different software.

Truth is, for 10-bit HDR video you must capture in a LOG format, then use colour grading software to add the colours back into the footage at the settings and levels you want, adjust the levels/luminance, and raise the peak brightness ceiling to 1000 nits in the editing software. Ideally you'd capture with a camera that has a high-quality 10/12/16-bit sensor, usually an expensive one like an 8K RED Monstro cinema camera costing hundreds of thousands of dollars for a full setup. In video games, most game engines actually support 16-bit colour internally, which is why most video games look very colourful and vibrant compared to other content on your PC, and using HDR in video games just means the brightness and colour levels have been adjusted for however many nits the display supports. More HDR means things can look more lifelike. If a painter making a black-and-white painting or a pencil drawing can only use 8 different blacks/greys on his brush or pencil, his picture won't look lifelike; but if he can mix paints, whites, and blacks to create hundreds of shades and tones, with subtle blacks, dark greys, and lighter greys, it looks more lifelike. HDR adds subtle details that make things more lifelike, but it often makes things look dull and unimpressive compared to highly saturated anime or video games that don't look lifelike at all. So it depends on what you are using it with. Having my Samsung TV and AMD GPU set well for SDR makes SDR with HDR disabled look better when playing back HDR movies on Netflix or playing HDR games like Mass Effect Andromeda, Divinity 2, and so on.
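The "peak brightness ceiling" in HDR10 sits on the SMPTE ST 2084 perceptual quantizer (PQ) curve, which maps absolute luminance up to 10,000 nits onto the signal range. Here is a small Python implementation of the PQ encoding (inverse EOTF) and decoding with the constants from that standard, plus a helper mapping the signal to 10-bit code values; full range is an assumption here, since video is often stored in limited range.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def nits_to_pq(nits):
    """Inverse EOTF: absolute luminance (cd/m2) -> PQ signal in 0..1."""
    y = max(0.0, min(nits, PQ_PEAK_NITS)) / PQ_PEAK_NITS
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_to_nits(signal):
    """EOTF: PQ signal in 0..1 -> absolute luminance (cd/m2)."""
    e = max(0.0, min(signal, 1.0)) ** (1.0 / M2)
    return PQ_PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def to_10bit_full_range(signal):
    return round(signal * 1023)


if __name__ == "__main__":
    for nits in (100, 300, 1000, 4000, 10000):
        s = nits_to_pq(nits)
        print(f"{nits:>6} nits -> PQ {s:.3f} (10-bit code {to_10bit_full_range(s)})")
```

The curve spends about half the signal range below 100 nits and roughly three quarters below 1000 nits, which is why 10-bit PQ can describe subtle shadow detail and bright highlights in the same frame.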

The colour gamut your display can reproduce and the HDR it supports are, I'm sorry to say, not impressive or good. I'm guessing it's maybe below the worst AMD will tolerate in their FreeSync HDR standards, as I'm fairly certain 300 cd/m² translates to the same number of nits of peak luminance.

Maybe next time buy a quality quantum dot HDR TV with HDR10, Dolby Vision, Dolby Atmos, and 200 Hz or more at 1080p. It will also be full-resolution 16:9. Those gaming monitors cut the height, call it "wide", give you a handful more Hz/FPS in return, and charge more for the word "gaming" on the box. It's a bad deal, man. Research displays before purchasing. If you're on a budget, try to grab a second-hand or refurbished/repaired TV or display, or get one from an auction: people die and their stuff gets auctioned, and businesses get liquidated all the time. Seized criminal goods get sold at police auctions all the time too, so if you want to own, for dirt cheap, some stupid criminal's stuff that he went to prison to get his hands on, keep an eye out.


Hello. To be honest, it is the first monitor I have bought, and it cost me a lot. As for brightness, yes, there are monitors with more than 350 nits (cd/m²); even so, this monitor looks very good. I will keep those recommendations in mind for later.

I use a resolution of 2560 x 1080 (WFHD) at 144 Hz. In games I noticed the refresh rate varying, so I deactivated Radeon FreeSync, and now it always stays at 144 Hz. Despite not reaching 400 nits (cd/m²), the monitor looks excellent.

My only problem is when I take screenshots with the Snipping Tool, or when I stream with AMD Adrenalin: the result basically looks like the photos I attached.


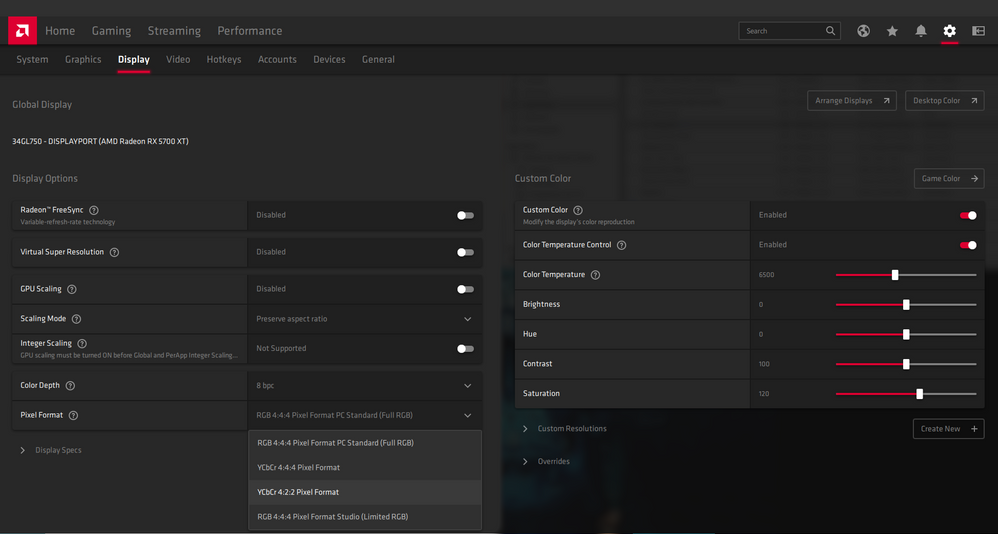

If you are using RGB, try changing to YCbCr 4:2:0 10-bit.

But recording in 10-bit HDR may also require different software, as I said. Plus, if you record in HDR, you must use HEVC or VP9 Profile 2 (or whatever they're up to now) as the video codec. A regular MP4 with Xvid/DivX/AVC will not capture HDR video. Special AVC formats from Sony, or the AVC on Blu-ray discs, maybe have a chance of 10-bit HDR, though I'm not certain. Try using a different video codec when capturing/streaming. You may also want to stream in regular 8-bit, because not all video sites support 10-bit HDR and not everybody's monitor does either. To turn any video into 10-bit HDR, you save it first and then master it with colour grading software for best results. I don't mean you shouldn't have an HDR stream; you just need a second, regular stream too so everybody can see it.
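Outside AMD's recorder, one common way to produce a 10-bit HEVC file with HDR10 signalling is ffmpeg with the libx265 encoder. This is a swapped-in technique, not what the thread uses, and it assumes your ffmpeg build includes libx265. The Python sketch only assembles the command line (the file names are hypothetical) so you can inspect it before running it yourself; `colorprim`, `transfer`, and `colormatrix` are x265 options. As the post says, tagging a stream this way does not turn SDR footage into HDR; the source must already be HDR-graded.

```python
import shlex


def hevc_hdr10_command(src, dst, crf=20):
    """Build an ffmpeg command for 10-bit HEVC with BT.2020 / PQ tags.

    Only tags the stream; it does not grade or convert SDR footage into HDR.
    """
    x265_params = ":".join([
        "colorprim=bt2020",      # BT.2020 primaries
        "transfer=smpte2084",    # PQ transfer function (HDR10)
        "colormatrix=bt2020nc",  # BT.2020 non-constant luminance matrix
    ])
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx265",
        "-pix_fmt", "yuv420p10le",  # 10-bit 4:2:0, the usual HDR10 delivery format
        "-crf", str(crf),
        "-x265-params", x265_params,
        "-c:a", "copy",
        dst,
    ]


if __name__ == "__main__":
    cmd = hevc_hdr10_command("capture.mkv", "capture_hevc10.mkv")
    # Run with subprocess.run(cmd, check=True) once you're happy with it
    print(shlex.join(cmd))
```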


Hello, Thank you very much for all the responses.

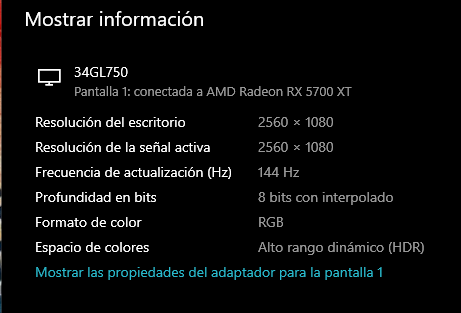

As you can see in the image, I only get the 4:2:2 pixel format.

For streaming I use AMD's own tools, and for captures, including in the browser, I use the Print Screen key. It could be a compatibility problem, with AMD not differentiating when HDR is active.

Thank you...


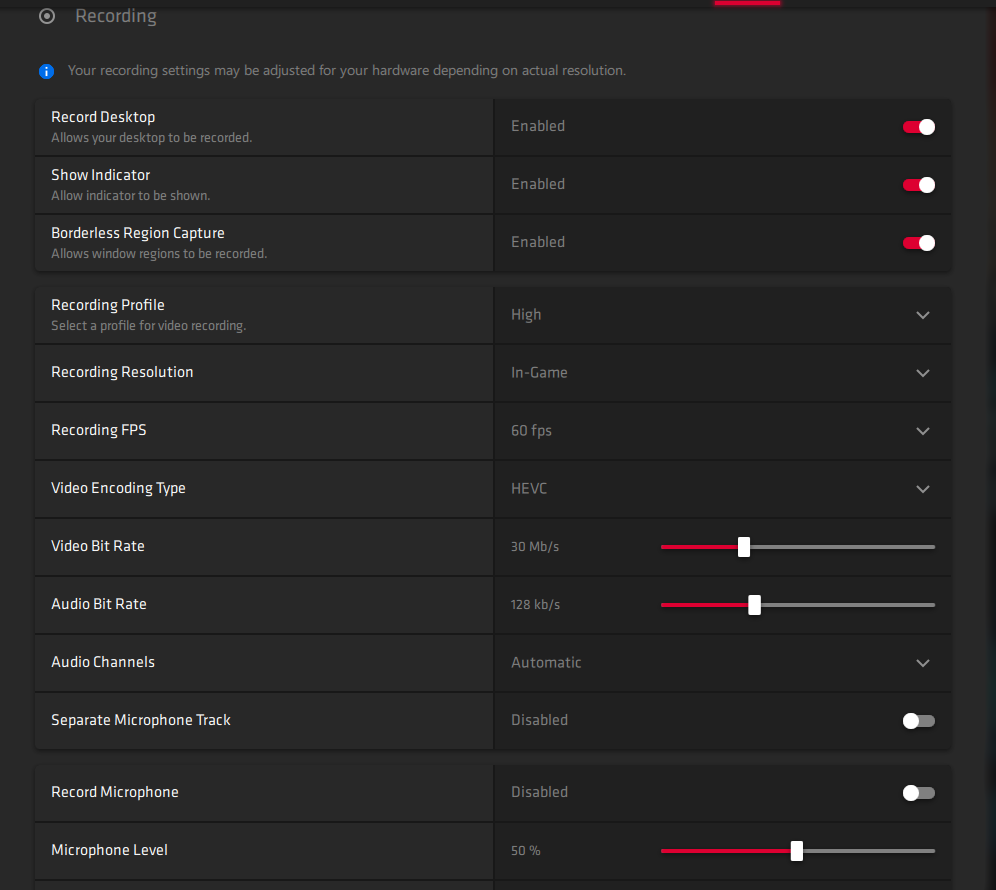

In the AMD Adrenalin software, try HEVC for streaming/recording with the tools AMD provides.

Click the settings gear icon in the top right of the screen, then click "General".

Then find "Recording", look at the video encoding type, and select HEVC. HEVC produces about half the file size at equal or slightly better quality, so it's often best to use it. HEVC is also one of the few formats that support 10-bit video.
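To put the "half the file size" rule of thumb into numbers: a recording's size is roughly bitrate times duration. This Python sketch assumes a constant bitrate and takes the 50% HEVC saving as the poster's rule of thumb rather than a guarantee; the 50 Mbit/s figure is just an example setting, not an Adrenalin default.

```python
def recording_size_gb(bitrate_mbps, minutes):
    """Approximate file size in gigabytes (10**9 bytes) at a constant bitrate."""
    bits = bitrate_mbps * 1_000_000 * minutes * 60
    return bits / 8 / 1e9


HEVC_SAVING = 0.5  # rule of thumb from the post: similar quality at about half the bitrate

if __name__ == "__main__":
    avc_bitrate = 50  # Mbit/s, example setting
    hevc_bitrate = avc_bitrate * HEVC_SAVING
    for name, rate in (("AVC", avc_bitrate), ("HEVC", hevc_bitrate)):
        print(f"{name} at {rate:g} Mbit/s for 10 min: {recording_size_gb(rate, 10):.2f} GB")
```

At 50 Mbit/s, ten minutes is about 3.75 GB; at half the bitrate it is about 1.9 GB.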

However, according to some Nvidia-sponsored YouTubers, if you make money with HEVC video files you must license it: since they use it for their jobs/business to earn money, they have to purchase the HEVC codec first. As far as I know, since it's included free with the AMD driver software, it's free to use; if it cost money, it would be sold separately and you'd have to buy it to unlock it. So go ahead and use it, just don't try to sell it or profit from it and there should be no issues. I believe AMD probably already licensed it, or they couldn't use it in their software. But whatever.


Hello, I just changed the configuration to the HEVC video type as you indicated. I only stream for fun; I am not in the least interested in generating income.

I still need to do a streaming test to see how it is displayed. I took a capture from AMD Adrenalin, and it still looks too bright and contrasty. The display configuration is set to 4:4:4 full RGB, 8-bit.


That's because your DESKTOP or WINDOWS 10 ISN'T HDR MASTERED. You see, HDR content needs to be created for HDR colours and brightness levels; otherwise you could just enable HDR and every game and everything on your entire PC would be HDR. It doesn't work like that, because different cameras and displays have different levels of HDR, and the creators of movies and games set the correct brightness and colour levels, which requires a different colour space. Instead of plain RGB (it sucks; it's not even close to what we see, maybe less than 30% of visible colours), there's sRGB, which by coincidence shares the BT.709 colour space of 1080p Blu-ray discs; then there's DCI-P3, which is what 4K Blu-ray discs use, which is different again. However, the latest TVs and 4K discs can use the BT.2020 colour space, which is even larger and covers more than 50% of the visible spectrum, so it's actually starting to become startlingly lifelike. Even the expensive TVs and displays only reproduce about 80 to 90% of the Rec. 2020 colour space, and cheap monitors like yours probably reproduce considerably less. Anyway, HDR makes things more lifelike with "brightness" levels, colour saturation levels, and billions of colours from 10-bit colour instead of 16 million; but things will look dull, washed out, and flat if they weren't created to use billions of colours. Imagine your Windows 10 desktop is a painting: when you spread the paint out more, it becomes thin and diluted, and then somebody cranks up the brightness by shining a light behind the painting. Pictures created to have more light and made with more paint of course look perfect, but everything else kinda sucks.
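One rough way to compare colour spaces is the area of the triangle their red, green, and blue primaries make on the CIE 1931 xy chromaticity diagram. The Python sketch below uses the published BT.709, DCI-P3, and BT.2020 primaries plus the primaries the LG 34GL750 reports in the DxDiag output posted in this thread. Area ratio is a simplification: it is not the same as gamut coverage (which needs the overlap of the triangles), and xy area is not perceptually uniform.

```python
def triangle_area(primaries):
    """Shoelace formula for the xy triangle spanned by R, G, B primaries."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


GAMUTS = {
    "BT.709 / sRGB": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "BT.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
    # Primaries reported by DxDiag for the LG 34GL750 in this thread
    "34GL750 (DxDiag)": [(0.651367, 0.332031), (0.306641, 0.630859), (0.150391, 0.059570)],
}

if __name__ == "__main__":
    ref = triangle_area(GAMUTS["BT.2020"])
    for name, prims in GAMUTS.items():
        area = triangle_area(prims)
        print(f"{name:<18} area {area:.4f} ({area / ref:.0%} of BT.2020 area)")
```

By this crude measure the monitor's triangle is slightly larger than BT.709's but only about 57% of the BT.2020 triangle.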

So yeah, unless a creator made it specifically to use adjusted brightness and billions of colours, it can look awful for all regular content. Which is why, as I said, you should leave your desktop in 8-bit 4:4:4 unless you are running an HDR game; then toggle HDR on in Windows settings and play. If you're normally on an 8-bit 4:4:4 RGB desktop and go to play a movie with player software that supports HDR, like Daum PotPlayer or Kodi, and it's a proper 10-bit HEVC video file, the player will usually output 10-bit for you automatically as long as it's set up correctly. So you won't need to switch to HDR mode to play back video if you use fullscreen exclusive mode or the D3D11 renderer, as I believe HDR video playback is now natively supported on the Windows desktop.

Try taking screenshots and videos of an HDR GAME or an HDR VIDEO.

Sorry about my misunderstanding; I thought you were just showing me wrongly coloured images as quick and easy examples. I'm on medication and mentally ill, so I have communication difficulties and can talk with people for the longest time with major miscommunications or misunderstandings; it frustrates me just as much as it does you. Sorry, I assume things a lot because I know them, as if everybody else should have realised this obvious fact, when only specific titles support HDR.


Hello, I hope you get better...

Look, the display settings say the color space is high dynamic range (HDR).

And I exported the information from DxDiag, and it says the following:

---------------

Display Devices

---------------

Card name: AMD Radeon RX 5700 XT

Manufacturer: Advanced Micro Devices, Inc.

Chip type: AMD Radeon Graphics Processor (0x731F)

DAC type: Internal DAC(400MHz)

Device Type: Full Device (POST)

Device Key: Enum\PCI\VEN_1002&DEV_731F&SUBSYS_57011682&REV_C1

Device Status: 0180200A [DN_DRIVER_LOADED|DN_STARTED|DN_DISABLEABLE|DN_NT_ENUMERATOR|DN_NT_DRIVER]

Device Problem Code: No Problem

Driver Problem Code: Unknown

Display Memory: 16315 MB

Dedicated Memory: 8151 MB

Shared Memory: 8164 MB

Current Mode: 2560 x 1080 (32 bit) (144Hz)

HDR Support: Supported

Display Topology: Internal

Display Color Space: DXGI_COLOR_SPACE_RGB_FULL_G2084_NONE_P2020

Color Primaries: Red(0.651367,0.332031), Green(0.306641,0.630859), Blue(0.150391,0.059570), White Point(0.313477,0.329102)

Display Luminance: Min Luminance = 0.304500, Max Luminance = 301.000000, MaxFullFrameLuminance = 243.000000

Monitor Name: Generic PnP Monitor

Monitor Model: 34GL750

Monitor Id: GSM773B

Native Mode: 2560 x 1080(p) (144.001Hz)

Output Type: Displayport External

Monitor Capabilities: HDR Supported (BT2020RGB BT2020YCC Eotf2084Supported )

Display Pixel Format: DISPLAYCONFIG_PIXELFORMAT_NONGDI

Advanced Color: AdvancedColorSupported AdvancedColorEnabled

Driver Name: C:\WINDOWS\System32\DriverStore\FileRepository\u0354308.inf_amd64_48534036afa0f0d8\B354265\aticfx64.dll,C:\WINDOWS\System32\DriverStore\FileRepository\u0354308.inf_amd64_48534036afa0f0d8\B354265\aticfx64.dll,C:\WINDOWS\System32\DriverStore\FileRepository\u0354308.inf_amd64_48534036afa0f0d8\B354265\aticfx64.dll,C:\WINDOWS\System32\DriverStore\FileRepository\u0354308.inf_amd64_48534036afa0f0d8\B354265\amdxc64.dll

Driver File Version: 26.20.15029.27016 (English)

Driver Version: 26.20.15029.27016

DDI Version: 12

Feature Levels: 12_1,12_0,11_1,11_0,10_1,10_0,9_3,9_2,9_1

Driver Model: WDDM 2.6

Graphics Preemption: Primitive

Compute Preemption: DMA

Miracast: Not Supported

Detachable GPU: No

Hybrid Graphics GPU: Not Supported

Power P-states: Not Supported

Virtualization: Paravirtualization

Block List: No Blocks

Catalog Attributes: Universal:False Declarative:False

Driver Attributes: Final Retail

Driver Date/Size: 20/04/2020 7:00:00 p. m., 1945712 bytes

WHQL Logo'd: Yes

WHQL Date Stamp: Unknown

Device Identifier: {D7B71EE2-305F-11CF-BA1C-B44D7AC2D735}

Vendor ID: 0x1002

Device ID: 0x731F

SubSys ID: 0x57011682

Revision ID: 0x00C1

Driver Strong Name: oem72.inf:cb0ae414e89a1ab6:ati2mtag_Navi10:26.20.15029.27016:PCI\VEN_1002&DEV_731F&REV_C1

Rank Of Driver: 00CF2000

Video Accel: Unknown

DXVA2 Modes: DXVA2_ModeMPEG2_VLD DXVA2_ModeMPEG2_IDCT DXVA2_ModeH264_VLD_NoFGT DXVA2_ModeHEVC_VLD_Main DXVA2_ModeH264_VLD_Stereo_Progressive_NoFGT DXVA2_ModeH264_VLD_Stereo_NoFGT DXVA2_ModeVC1_VLD DXVA2_ModeMPEG4pt2_VLD_AdvSimple_NoGMC DXVA2_ModeHEVC_VLD_Main10 DXVA2_ModeVP9_VLD_Profile0 DXVA2_ModeVP9_VLD_10bit_Profile2

Deinterlace Caps: n/a

D3D9 Overlay: Not Supported

DXVA-HD: Not Supported

DDraw Status: Enabled

D3D Status: Enabled

AGP Status: Enabled

MPO MaxPlanes: 2

MPO Caps: ROTATION,VERTICAL_FLIP,HORIZONTAL_FLIP,YUV,BILINEAR,STRETCH_YUV,HDR (MPO3)

MPO Stretch: 16.000X - 0.250X

MPO Media Hints: rotation, resizing, colorspace Conversion

MPO Formats:

PanelFitter Caps: ROTATION,VERTICAL_FLIP,HORIZONTAL_FLIP,YUV,BILINEAR,STRETCH_YUV,HDR (MPO3)

PanelFitter Stretch: 16.000X - 0.250X

Everything I have found says HDR can be used without problems; however, it takes ugly screenshots, except with the Windows Game DVR (Win + G).
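Since the DxDiag export above is plain "Key: Value" text, a few lines of Python can pull out the HDR-relevant fields. This sketch uses the field names exactly as they appear above and a short sample copied from that report. The parts of the DXGI colour-space name are standard: `RGB_FULL` is full-range RGB, `G2084` is the ST 2084 (PQ) transfer, and `P2020` is BT.2020 primaries. The DisplayHDR peak figures (400/600/1000 cd/m²) are VESA tier names used only as a rough comparison, since certification also involves other tests.

```python
import re


def parse_dxdiag(text):
    """Collect 'Key: Value' lines from a DxDiag report (first occurrence wins)."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() and key.strip() not in fields:
            fields[key.strip()] = value.strip()
    return fields


def max_luminance(fields):
    """Extract 'Max Luminance = N' from the Display Luminance field."""
    match = re.search(r"Max Luminance = ([\d.]+)", fields.get("Display Luminance", ""))
    return float(match.group(1)) if match else None


DISPLAYHDR_PEAKS = (400, 600, 1000)  # VESA DisplayHDR tier names (peak cd/m2)


def describe(fields):
    peak = max_luminance(fields)
    colour_space = fields.get("Display Color Space", "")
    return {
        "hdr_enabled": "AdvancedColorEnabled" in fields.get("Advanced Color", ""),
        "pq_bt2020": "G2084" in colour_space and "P2020" in colour_space,
        "peak_nits": peak,
        "meets_peak_of": [t for t in DISPLAYHDR_PEAKS if peak is not None and peak >= t],
        "hevc_main10_decode": "HEVC_VLD_Main10" in fields.get("DXVA2 Modes", ""),
    }


SAMPLE = """\
Display Color Space: DXGI_COLOR_SPACE_RGB_FULL_G2084_NONE_P2020
Display Luminance: Min Luminance = 0.304500, Max Luminance = 301.000000, MaxFullFrameLuminance = 243.000000
Advanced Color: AdvancedColorSupported AdvancedColorEnabled
DXVA2 Modes: DXVA2_ModeHEVC_VLD_Main DXVA2_ModeHEVC_VLD_Main10 DXVA2_ModeVP9_VLD_10bit_Profile2
"""

if __name__ == "__main__":
    print(describe(parse_dxdiag(SAMPLE)))
```

For this report the sketch shows HDR enabled with PQ/BT.2020 output and 10-bit HEVC decode available, but a reported peak of about 301 nits, below the lowest DisplayHDR tier name.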


Think of an MP3 player versus a Blu-ray player. You can play back lots of audio files and they work, but a Blu-ray player supports Dolby Atmos audio. If you take your free internet MP3 and play it back on a Dolby Atmos sound system, it sounds ugly, like a regular MP3. Why doesn't it sound amazing, in Dolby Atmos surround sound?! You can use the Windows 10 Store Dolby Access app and set your Windows "Spatial Audio" setting to DOLBY ATMOS, but then every movie, song, and video game you play still doesn't do Dolby Atmos; it sounds regular, lame, and boring. Of the latest games, maybe 3 or 4 have a DOLBY ATMOS option in their in-game settings. You play Shadow of the Tomb Raider, Battlefield V, Star Wars Battlefront 2, Borderlands 3, or other titles that support Dolby Atmos, enable DOLBY ATMOS spatial audio in the in-game settings, and you see your Atmos receiver light up with the Dolby Atmos symbol saying it's in Dolby Atmos. So it should sound amazing, like your Mad Max 4 Blu-ray, the Game of Thrones season 8 4K Blu-rays, or the regular 1080p Blu-rays of seasons 7 or 6, which was the first Blu-ray release ever to support Dolby Atmos. The Blu-ray discs sound amazingly good in Dolby Atmos over lossless Dolby TrueHD; the TrueHD tracks maybe have 9 channels of discrete audio, but Atmos object-oriented audio lets it drive extra ceiling height speakers, maybe up to 13, 19, or even 21 speakers, through its software processing. The 4K discs are recorded in high quality and specially mixed and mastered for Dolby Atmos; it takes days or weeks of work, then a long time encoding and formatting. It's like how you FILM and RECORD 10-bit HDR video: without any colour grading it's dull and flat, and you capture as much detail as possible with LOGARITHMIC or PQ CURVE COMPRESSION to keep more subtle shades, so a dark shadow doesn't look solid black and you can see dirt or rocks in it.

Then the person making a 10-bit HDR movie uses video editing software and spends hours, days, or weeks adjusting colour scene by scene for dynamic-metadata HDR, or just applies as general a setting as possible for the whole movie at the start with static-metadata HDR. They literally add colour into footage they filmed without colour, so it can be seen with the colours they want. For an action movie they use orange and teal so the heroes' skin tones stand out and pop vividly while the background is less present. For a horror movie in the woods they maybe use some extra cyan to make it feel chilling, dark, and spooky. They literally create and control every aspect of it, including how bright it is on your display, so if the sun is shining on a car mirror or car headlights are on high beam, it will look very bright at that point; that's how they adjust peak luminance levels. In a game, if you make it HDR you must decide: I want the lasers to be this bright, and I want the carvings on the walls in the dark Tomb Raider tomb to be visible and not just hidden in black, so there must be this much light and this much brightness. You cannot simply open a photo and have it work as HDR just because your computer and monitor are set to 10-bit HDR; it doesn't work like that. Your MP3s aren't Dolby Atmos, and your games that are Dolby Atmos aren't very good at it yet and are maybe doing it wrong, but it'll get better.
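For HDR10, the static metadata mentioned above includes two content light levels defined in CTA-861.3: MaxCLL (the brightest single pixel anywhere in the programme) and MaxFALL (the highest frame-average light level). Here is a minimal Python sketch computing both from frames of per-pixel linear light levels in nits, following those definitions (each pixel's level is the maximum of its R, G, and B values); the tiny frames are made up purely to show the calculation.

```python
def max_cll_fall(frames):
    """frames: list of frames, each a list of (r, g, b) linear light levels in nits.

    Returns (MaxCLL, MaxFALL):
    MaxCLL  = brightest pixel level (max of R, G, B) in any frame
    MaxFALL = highest per-frame average of those per-pixel levels
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        pixel_levels = [max(rgb) for rgb in frame]
        max_cll = max(max_cll, max(pixel_levels))
        max_fall = max(max_fall, sum(pixel_levels) / len(pixel_levels))
    return max_cll, max_fall


if __name__ == "__main__":
    dark_tomb = [(2, 1, 1), (5, 4, 3), (1, 1, 1), (900, 600, 200)]  # one torch flame
    sunny_street = [(300, 300, 280), (250, 260, 240), (400, 380, 350), (200, 210, 220)]
    print(max_cll_fall([dark_tomb, sunny_street]))
```

The dark tomb frame sets MaxCLL (its 900-nit torch), while the evenly bright street frame sets MaxFALL, which is why the two numbers describe different things about the content.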

Please understand there's false HDR, where in Photoshop you take an overexposed white photo and an underexposed dark photo, layer the two images together, and adjust saturation, which makes a picture with more visible detail that looks pretty and sort of blurry because it's two photos in one. But that's FAKE HDR, photo-editing HDR. If you want to take multiple pictures of your desktop with different gamma settings, make a blurry, oversaturated fake HDR photo of your desktop, and show it off, go ahead; it will look vaguely like what you're seeing, with the colours wrong. But it doesn't work that way for real-time video. There's video software that lets you re-encode to get the false HDR look, or photo editing software like the Aurora HDR photo application. If you want your games to LOOK like false HDR, use RESHADE FILTERS!
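The "false HDR" photo trick described above is usually called exposure fusion or exposure bracketing. As a minimal illustration (not Photoshop's or Aurora HDR's actual algorithm), this Python sketch blends an underexposed and an overexposed version of the same greyscale pixels, weighting each value by how close it is to mid-grey, in the spirit of Mertens-style "well-exposedness" weights; the 0.2 width is an assumed parameter.

```python
import math


def well_exposedness(v, sigma=0.2):
    """Weight near 1 for mid-tones, near 0 for crushed blacks or blown whites."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))


def fuse(exposures, sigma=0.2):
    """Blend several exposures of the same pixels (values 0..1) into one."""
    fused = []
    for values in zip(*exposures):
        weights = [well_exposedness(v, sigma) for v in values]
        total = sum(weights) or 1.0
        fused.append(sum(w * v for w, v in zip(weights, values)) / total)
    return fused


if __name__ == "__main__":
    under = [0.02, 0.10, 0.45, 0.70]  # shadows crushed, highlights fine
    over = [0.30, 0.55, 0.95, 1.00]   # shadows visible, highlights blown
    print([round(v, 3) for v in fuse([under, over])])
```

Each output pixel leans toward whichever exposure captured it best, so both shadow and highlight detail survive in one image. Real tools also weight contrast and saturation and blend at multiple scales to avoid halos, but the result is still a photo-editing effect, not HDR signal output.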

HDR isn't applied to everything the monitor shows; content must be graded in editing software by the creator, or it doesn't work. Even if your monitor is set to SHOW HDR CONTENT, it needs HDR-MASTERED CONTENT. If your Windows is set to Dolby Atmos output, it won't do Dolby Atmos with MP3s; you MUST HAVE MASTERED ATMOS CONTENT. Do you understand? An MP3 is not a TrueHD file. H.264/Xvid/DivX is not the 10-bit H.265 HDR video format, and even H.265 files usually hold regular 8-bit video unless you use something like DaVinci Resolve to master them into 10-bit HDR, film with special cameras and software, or rip from a 4K Blu-ray, Netflix, or another source that's already mastered to 10-bit HDR. It's a different thing: different files, different software. MP3 audio isn't Blu-ray audio, but you can still play an MP3 in your Blu-ray player. Can you understand?


I previously had my display in FreeSync game mode, which allows me to select 120 Hz, but once FreeSync at 1440p 120 Hz is enabled there is no mode to select 10-bit YCbCr. To get HDR, you go to the Windows 10 display settings, turn HDR on with the toggle there, then start your game with its HDR setting on Auto or On. When I toggle HDR on, my Windows 10 display settings say it's still 1440p 120 Hz. Does this mean FreeSync doesn't work with 10-bit HDR? No. You see, the latest FreeSync in our Samsung TVs, FreeSync Ultimate, automatically sets the display's HDR levels and HDR settings correctly for you, so the display is calibrated and adjusted by the GPU for a more lifelike image. (FREESYNC ULTIMATE is literally basic FreeSync with HDR settings built in and slightly better minimum performance specs. Original FreeSync was developed long ago, when monitors were really not good and had high latency, so the minimum bar was low. Hardware vendors in the present era still pushed out cheap garbage monitors with terrible latency that barely met the minimum for FreeSync certification, and sold them for years, so people mistakenly believed FreeSync wasn't good. Meanwhile Nvidia made people spend thousands on their garbage G-Sync monitors, even the cheapest ones, so those were manufactured to better minimum standards. Imagine paying twice as much for a monitor for Nvidia, it somehow ending up with ever so slightly better specs in general than the cheapest FreeSync monitors on the market bought for peanuts, and then people saying FreeSync isn't good when really the hardware vendors were lazy or not good enough at implementing VRR/FreeSync.)

I have played the game with both HDR on and HDR off, and with my settings I like how it looks with HDR OFF much better than with HDR ON. I simply compared how it looks on my Samsung TV with my colour/picture settings with and without HDR, and decided it looks a bit better with HDR off, so I leave HDR off intentionally: if it looks worse to me, why turn it on? I prefer the vibrant colours my TV and GPU produce with HDR off. I watch Netflix HDR with HDR disabled: I use my Adrenalin saturation levels, set my TV's source input to "Game Console" for my PC, adjust the TV's picture settings how I like, set the colour space to "Native", and crank the saturation slider. I have no issues getting HDR to work; it's just that when it does work, it looks less colourful and more washed out (HDR means more lifelike and subtle colours, with more detail in extreme dark blacks and extreme bright whites). So for lots of content, like video games or movies, I prefer a more colourful look rather than a duller, dimmer one.

However, to play back HDR video that was recorded at high bit depth on a very, very expensive camera (hundreds of thousands of dollars, probably an 8K camera or higher, in 12- or 16-bit colour, captured in LOG format and then graded in software like DaVinci Resolve), like the "Travel with my pet" HDR demo video from 4kmedia.org, or to play back 4K Blu-ray discs with a 4K Blu-ray player, the HDR colours look better because it's TRUE 10-bit HDR-CAPTURED CONTENT. So then I set my TV's colour space to AUTO and enable HDR mode in Windows to play it back, or use fullscreen exclusive mode so my video player app can play it back. I have no problem enabling HDR.

If you want to say that gaming in borderless windowed mode makes all your games run faster and look better, then yeah, it does for some reason. Particularly if you have a different program window active and the game runs in the background, its FPS soars super high and it runs properly for once, but clicking on the game window to play slows it down again. When you Alt+Tab or press Alt+Enter, you may be switching it to windowed mode.