Threadripper 1950x slow with Anaconda Numpy

Hi,

I built a new quad-GPU system with an AMD Threadripper 1950X. To my surprise, numpy linear algebra performance is extremely slow (about 2x slower than even an i7-6700K), not to mention the i9 series. It is not just numpy: PyTorch uses MAGMA, and the SVD operation in MAGMA uses the CPU too. This Threadripper CPU has now become a huge bottleneck in our server.

I ran some benchmarks with the Python 2 that ships with the Anaconda distribution. With the prebuilt numpy (linked to rt_mkl), the performance is shockingly bad, as mentioned. With the nomkl package, OpenBLAS performs slightly better with more threads, but it is still pretty bad. I have heard a lot of good things about Threadripper, but maybe scientific computing is not what it is for.
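Before comparing MKL and OpenBLAS builds, it helps to confirm which library numpy is actually linked against. A minimal sketch, assuming only that numpy is installed. `blas_build_info` is a hypothetical helper name, and the text printed by `np.show_config()` differs between numpy versions, so the keyword check at the end is a rough heuristic:

```python
import contextlib
import io

import numpy as np


def blas_build_info():
    """Return numpy's build configuration report as a string.

    np.show_config() prints the libraries numpy was built against
    (for example MKL or OpenBLAS); the wording varies across versions.
    """
    buf = io.StringIO()
    with contextlib.redirect_stdout(buf):
        np.show_config()
    return buf.getvalue()


report = blas_build_info()
print(report)
# Crude keyword check (an assumption, not an official API).
for name in ("mkl", "openblas"):
    print(name, "mentioned:", name in report.lower())
```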

Since this is only our experimental server with an AMD CPU, we can switch to an Intel i9 or dual-Xeon platform without too much trouble. But please let me know if there is a way to get okay numpy performance out of Threadripper. As long as it reaches 80% of an i9-7900X's linear algebra performance, we will keep this CPU. Any advice would be appreciated!

So the linear algebra operation is done on the CPU? It's highly likely that numpy invokes MKL, which is not friendly to AMD CPUs.

The solution is to replace MKL with OpenBLAS (if OpenBLAS provides all the functions you need and numpy agrees) and to set the environment variables OPENBLAS_CORETYPE=ZEN (or EXCAVATOR if ZEN is not supported) and OPENBLAS_NUM_THREADS=16 (if SMT is disabled).
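One way to apply these settings from Python is to set them in the process environment before numpy is first imported, since OpenBLAS reads them when the library is loaded. A minimal sketch: `configure_openblas` is a hypothetical helper, and as far as I know OPENBLAS_CORETYPE is only honored by OpenBLAS builds compiled with DYNAMIC_ARCH, so treat that part as an assumption about the build in use:

```python
import os
import sys
import warnings


def configure_openblas(coretype="ZEN", num_threads=16):
    """Set OpenBLAS environment variables for the current process.

    The variables are read when the OpenBLAS library is loaded, so this
    must run before numpy is imported for the settings to take effect.
    """
    if "numpy" in sys.modules:
        warnings.warn("numpy is already imported; OpenBLAS may ignore these settings")
    os.environ["OPENBLAS_CORETYPE"] = coretype
    os.environ["OPENBLAS_NUM_THREADS"] = str(num_threads)
    return {name: os.environ[name]
            for name in ("OPENBLAS_CORETYPE", "OPENBLAS_NUM_THREADS")}


# 16 threads matches the 16 physical cores of the 1950X with SMT disabled.
print(configure_openblas("ZEN", 16))
# import numpy as np  # import numpy only after the variables are set
```

The same variables can instead be exported in the shell before starting Python, which avoids any import-order concerns.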

A properly configured OpenBLAS is much faster than MKL on the TR 1950X.

The performance of the TR 1950X is sensitive to memory bandwidth. Use at least 2400 MHz memory in quad-channel mode.

The linear algebra involves operations like SVD. The Threadripper 1950X uses slower memory and has slower single-thread speed, but I was still hoping for better linear algebra performance. If the linear algebra is this slow, we cannot use this CPU, since the whole system costs about $8.5K for parts alone.

I actually used the nomkl option provided by Anaconda, which is compiled with OpenBLAS 0.2.20. Maybe it did not have the Zen architecture flag turned on; I'm not sure.

But it uses 32 threads and is still slower than an i7-6700K. The memory I'm using is quad-channel DDR4-2666, 128 GB, so memory should not be the problem.

I will compile OpenBLAS for this CPU, but I really don't expect much from it given the discussion on the OpenBLAS GitHub: performance on AMD Ryzen and Threadripper · Issue #1461 · xianyi/OpenBLAS · GitHub

I will probably switch to an Intel i9-7960X setup now. Threadripper does not seem to be well supported for scientific computing.

Maybe you have to set the environment variable OPENBLAS_CORETYPE=ZEN (or EXCAVATOR) manually.

I often find that OpenBLAS recognizes HASWELL/ZEN as PRESCOTT.

One way to check is to call the function char* openblas_get_corename().
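From Python, that check can be sketched with ctypes. This assumes an OpenBLAS shared library that the system loader can find and that exports an unprefixed `openblas_get_corename` symbol. The copy bundled inside a particular numpy install may live elsewhere or use prefixed symbol names, in which case the hypothetical helper below simply returns None:

```python
import ctypes
import ctypes.util


def openblas_corename(lib_path=None):
    """Return the core name OpenBLAS selected, or None if unavailable.

    lib_path is an optional explicit path to the OpenBLAS shared library;
    otherwise the system library search is used.
    """
    path = lib_path or ctypes.util.find_library("openblas")
    if path is None:
        return None
    try:
        lib = ctypes.CDLL(path)
        get_corename = lib.openblas_get_corename
    except (OSError, AttributeError):
        # Library could not be loaded, or it does not export the symbol.
        return None
    get_corename.restype = ctypes.c_char_p
    get_corename.argtypes = []
    name = get_corename()
    return name.decode() if name is not None else None


print(openblas_corename())
```

If this reports PRESCOTT on a Zen or Haswell machine, the detection problem described above is the likely cause.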

Since Haswell, Intel's floating-point unit has two ports, each of which can issue one 256-bit FMA per cycle (throughput), i.e., 8 double-precision multiplies plus 8 double-precision adds per cycle, whereas Zen can do only one 256-bit FMA per cycle.

So Zen is roughly half the speed of Intel's CPUs per core at the same clock, and the lower CPU frequency makes it worse. Proper overclocking can improve performance, but at your own risk.
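The per-cycle argument can be written out as a small calculation. This is only a sketch of the reasoning in this post: `peak_gflops` is a hypothetical helper, and the 4.0 GHz clock is an illustrative assumption rather than a measured value:

```python
def peak_gflops(freq_ghz, flops_per_cycle, cores):
    """Theoretical peak = clock (GHz) * FLOPs per cycle per core * cores."""
    return freq_ghz * flops_per_cycle * cores


# Double precision: a 256-bit FMA handles 4 doubles, and each fused
# multiply-add is counted as 1 multiply + 1 add.
FLOPS_PER_FMA_256 = 4 * 2
HASWELL_FLOPS_PER_CYCLE = 2 * FLOPS_PER_FMA_256  # two FMA ports per core
ZEN_FLOPS_PER_CYCLE = 1 * FLOPS_PER_FMA_256      # one 256-bit FMA per cycle

# One core of each at the same (assumed) 4.0 GHz clock.
intel_core = peak_gflops(4.0, HASWELL_FLOPS_PER_CYCLE, 1)
zen_core = peak_gflops(4.0, ZEN_FLOPS_PER_CYCLE, 1)
print(intel_core, zen_core, zen_core / intel_core)
```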

In fact I'm considering a new machine with 8700K...

canyouseeme, thanks for the comments! The numbers reported in the thread are similar to what I saw in my benchmarks of dense matrix multiplication and matrix-vector multiplication. So if I use all 16 cores, it actually outperforms the i7-6700K by a good amount.

But the real problem for me is matrix factorization. Its performance seems unreasonably bad, and unfortunately matrix factorization is a necessary part of my models. PyTorch uses MAGMA, which does not put everything on the GPU when it comes to matrix factorization. I might have done something wrong during the compilation, so I will have to play with it a bit to get a fair number; e.g., on GitHub they suggest compiling it with the architecture set to OPENBLAS_CORETYPE=Haswell. Even if I can get okay performance, I cannot afford to tune every part of my toolchain, since too many things can go wrong in the process. So I will still get an Intel platform to save some time. After I compile OpenBLAS properly, I will report more benchmark numbers. The OpenBLAS team has also been very helpful throughout the process.

To the best of my knowledge, OpenBLAS doesn't implement LAPACK itself. It's netlib's LAPACK inside.

Since I don't know the details of your work, I'm not sure what the real cause is.

In my experience, there are many situations in which BLAS/LAPACK performs poorly.

For example:

1. The matrix is too small. The time-consuming steps are then not only BLAS Level-3 operations; BLAS Level-1 operations are also hot spots, and the program may run faster single-threaded than multi-threaded.

2. LAPACK runs faster with column-major than with row-major layout, but MAGMA won't make this mistake, I think.

3. SMT does no good for compute-intensive programs; you can disable it in the BIOS.

P.S. dgemm() runs faster with OPENBLAS_CORETYPE=EXCAVATOR than with HASWELL on the TR 1950X.
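On the layout point (item 2): numpy arrays are row-major by default, and a column-major copy can be requested explicitly. A minimal sketch of inspecting and converting the layout follows. Whether this changes timings for a given numpy or MAGMA call is not something this thread establishes, since the wrappers may convert internally:

```python
import numpy as np

a = np.arange(12, dtype=np.float64).reshape(3, 4)  # numpy default: row-major (C order)
print(a.flags["C_CONTIGUOUS"], a.flags["F_CONTIGUOUS"])

f = np.asfortranarray(a)  # same values, column-major (Fortran order) memory
print(f.flags["C_CONTIGUOUS"], f.flags["F_CONTIGUOUS"])

# The two arrays are numerically identical; only the memory layout differs.
print(np.array_equal(a, f))
```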

I compiled OpenBLAS on that machine and found that the ZEN, EXCAVATOR, and HASWELL targets perform about the same. I ran the following very simple benchmark, and the result on the 1950X still doesn't look quite right.

Platform 1: i7-6700K @ 4.2 GHz, DDR4-2400 64 GB dual channel

20Kx20K, MM:--- 36.5188958645 seconds ---

10Kx10K, MM:--- 4.55566120148 seconds ---

8Kx8K, MM:--- 2.285572052 seconds ---

4Kx4K, MM: --- 0.314983844757 seconds ---

4Kx4K, Eig:--- 20.8545958996 seconds ---

1Kx1K, MM: --- 0.0073459148407 seconds ---

1Kx1K, Eig:--- 0.335417985916 seconds ---

Platform 2: 1950X @ 3.85 GHz, DDR4-2666 128 GB quad channel

20Kx20K, MM:--- 22.4687049389 seconds ---

10Kx10K, MM:--- 5.40012598038 seconds ---

8Kx8K, MM:--- 2.06533098221 seconds ---

4Kx4K, MM: --- 0.335155010223 seconds ---

4Kx4K, Eig:--- 34.5462789536 seconds ---

1Kx1K, MM: --- 0.0200951099396 seconds ---

1Kx1K, Eig:--- 1.06898498535 seconds ---

The performance on the 1950X is unreasonable. I wonder if you could run this benchmark on your machine and see whether your numbers are much better than mine. The benchmark code is what I posted on GitHub, at the bottom of the discussion: performance on AMD Ryzen and Threadripper · Issue #1461 · xianyi/OpenBLAS · GitHub
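The original script lives in the linked GitHub issue and is not reproduced in this thread. For readers who want to run something comparable, here is a minimal sketch of a benchmark of the same kind. It is an assumption that the original used `np.dot` and `np.linalg.eig` on random float64 matrices, and `time_call` and `bench` are hypothetical names:

```python
import time

import numpy as np


def time_call(fn, *args):
    """Return wall-clock seconds taken by fn(*args)."""
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


def bench(n, with_eig=False, seed=0):
    """Time an n x n float64 matrix multiply and, optionally, np.linalg.eig."""
    rng = np.random.RandomState(seed)
    a = rng.rand(n, n)
    b = rng.rand(n, n)
    results = {"MM": time_call(np.dot, a, b)}
    if with_eig:
        results["Eig"] = time_call(np.linalg.eig, a)
    return results


# Small sizes keep this quick; the thread used sizes from 1K up to 20K.
for n, with_eig in [(256, True), (512, False)]:
    for name, secs in bench(n, with_eig).items():
        print("%dx%d, %s: --- %s seconds ---" % (n, n, name, secs))
```

Timing a single run like this is noisy. Repeating each measurement and taking the minimum would give steadier numbers.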

One thing I found along the way is that the 1950X drops its clock speed to about 2.1 GHz by default. I think this affects performance significantly. I have to change some BIOS settings to avoid this automatic slowdown. Once I get that fixed, I will be able to report fairer numbers.
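A quick way to watch for this kind of clock drop while a benchmark runs is to read the per-core clocks the OS reports. A minimal sketch, assuming a Linux system whose /proc/cpuinfo contains `cpu MHz` lines (the thread does not state the OS). The helper names are hypothetical and the sample text is made up for illustration:

```python
import re


def parse_cpu_mhz(cpuinfo_text):
    """Extract per-core clock speeds (MHz) from /proc/cpuinfo-style text."""
    matches = re.findall(r"^cpu MHz\s*:\s*([\d.]+)", cpuinfo_text, re.M)
    return [float(m) for m in matches]


def current_cpu_mhz(path="/proc/cpuinfo"):
    """Read current clocks on Linux; returns [] if the file is unavailable."""
    try:
        with open(path) as fh:
            return parse_cpu_mhz(fh.read())
    except OSError:
        return []


# Made-up sample in the /proc/cpuinfo format, for illustration only.
sample = "processor\t: 0\ncpu MHz\t\t: 2100.000\nprocessor\t: 1\ncpu MHz\t\t: 3850.125\n"
print(parse_cpu_mhz(sample))
print(current_cpu_mhz())
```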

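1950X: measured 2*20k^3/22.4687 s = 712.1 GFLOPS; theoretical peak 3.85 GHz * 8 ops * 16 cores = 492.8 GFLOPS

i7-6700K: measured 2*20k^3/36.5189 s = 438.1 GFLOPS; theoretical peak 4.2 GHz * 16 ops * 4 cores = 268.8 GFLOPS

If this is double precision, your results exceed the theoretical upper limit. I wonder whether an algorithm like Strassen is used internally.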
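The arithmetic above can be reproduced in a few lines. This sketch takes the timings and clock figures from the thread and assumes "20K" means n = 20000. `achieved_gflops` is a hypothetical helper name:

```python
def achieved_gflops(n, seconds):
    """GFLOPS of an n x n by n x n matrix multiply, counted as 2*n^3 operations."""
    return 2.0 * n ** 3 / seconds / 1e9


# (label, n, seconds, clock in GHz, FLOPs per cycle per core, cores)
runs = [
    ("1950X", 20000, 22.4687049389, 3.85, 8, 16),
    ("i7-6700K", 20000, 36.5188958645, 4.2, 16, 4),
]
for label, n, secs, ghz, flops_per_cycle, cores in runs:
    measured = achieved_gflops(n, secs)
    peak = ghz * flops_per_cycle * cores
    print("%s: measured %.1f GFLOPS, peak %.1f GFLOPS, ratio %.2f"
          % (label, measured, peak, measured / peak))
```

A ratio above 1.0 means at least one input is off: the timing, the assumed precision, the operation count, or the assumed clock and per-cycle throughput.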

My result is 380 GFLOPS (dgemm, 4096x4096), or 0.36 seconds per 4096x4096 multiply, on Windows 10 with a TR 1950X @ 3.75 GHz and quad-channel DDR4-2666.

What's the name of the LAPACKE function you are using to compute the matrix eigendecomposition? I will test it later.

That's an interesting point! I'm not sure why it exceeds that number. But I can confirm that numpy's default on a 64-bit machine is the float64 data type.
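The default precision is easy to confirm directly. A minimal sketch, assuming the benchmark matrices were created with ordinary numpy constructors and no explicit dtype:

```python
import numpy as np

rand_dtype = np.random.rand(2, 2).dtype   # random matrices are float64
ones_dtype = np.ones(3).dtype             # so are arrays from np.ones
list_dtype = np.array([1.0, 2.0]).dtype   # and arrays built from Python floats
print(rand_dtype, ones_dtype, list_dtype)

# Single precision has to be requested explicitly.
single_dtype = np.ones(3, dtype=np.float32).dtype
print(single_dtype)
```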

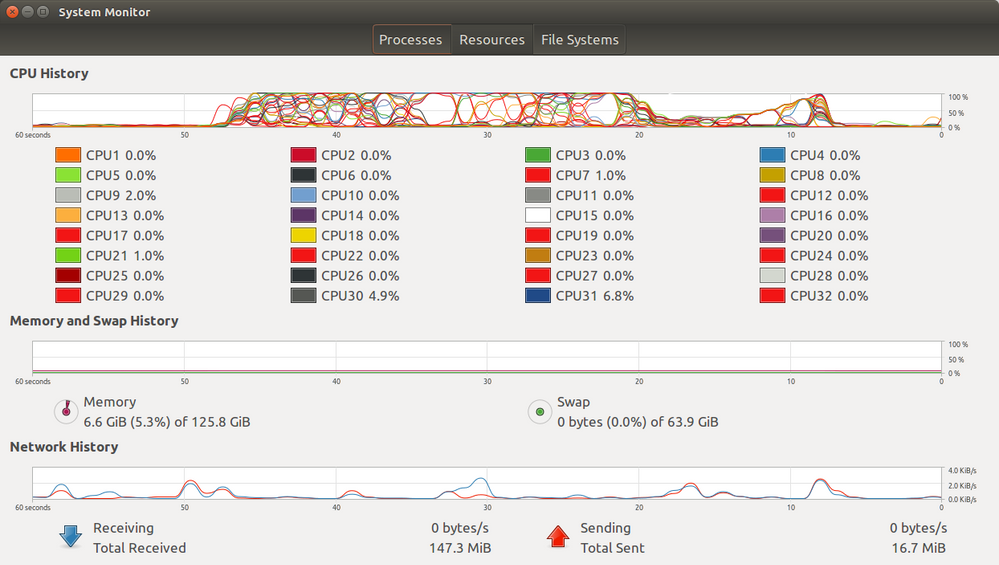

I'm also not sure which LAPACK function numpy calls for eigendecomposition. According to another user's test on GitHub, eigendecomposition does not seem to scale much, even though it uses 16 threads. CPU utilization during eigendecomposition is quite low; the utilization curves below show it dropping very frequently:

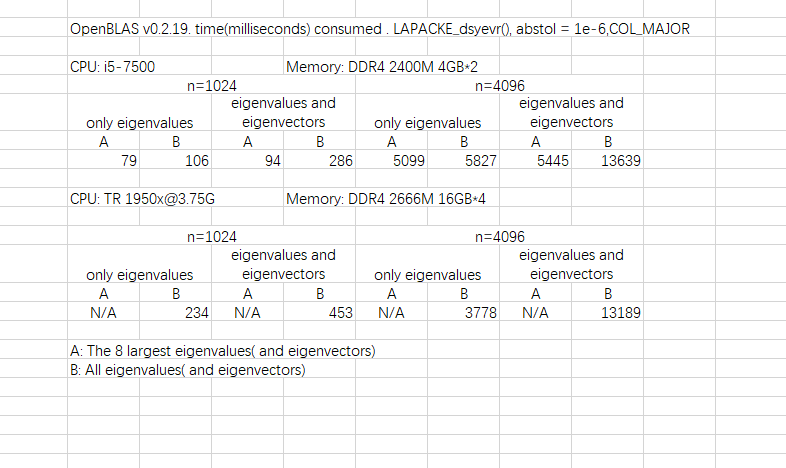

I am not sure which function numpy calls. What I have tested is LAPACKE_dsyevr():

    lapack_int LAPACKE_dsyevr(int matrix_layout, char jobz, char range, char uplo,
                              lapack_int n, double* a, lapack_int lda,
                              double vl, double vu, lapack_int il, lapack_int iu,
                              double abstol, lapack_int* m, double* w,
                              double* z, lapack_int ldz, lapack_int* isuppz);

MKL's reference says this routine computes selected eigenvalues and, optionally, eigenvectors of a real symmetric matrix A. Eigenvalues and eigenvectors can be selected by specifying either a range of values or a range of indices for the desired eigenvalues.

The parameter abstol is set to 1E-6.

CPU utilization is really a big problem. When the routine is set to compute both eigenvalues and eigenvectors, CPU utilization is relatively low; when it is set to compute only eigenvalues, it is a little better. So the bottleneck is not the CPU. That is why dgemm() is used as a benchmark: it can be highly optimized and keeps the CPU at full load.

The routine first reduces the matrix A to tridiagonal form T, so even if we need only a few eigenvalues, it still takes a long time. MKL's reference also says:

"Note that for most cases of real symmetric eigenvalue problems the default choice should be syevr function as its underlying algorithm is faster and uses less workspace. ?syevx is faster for a few selected eigenvalues." Computing the eigenvectors is also very time-consuming, especially when n is large.
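The difference between computing eigenvalues only and computing eigenvalues plus eigenvectors can be tried from numpy as well. A minimal sketch with a caveat: numpy does not expose dsyevr here. According to the numpy documentation, `np.linalg.eigh` and `np.linalg.eigvalsh` use the LAPACK `_syevd`/`_heevd` routines (and `np.linalg.eig` uses `_geev`), so this exercises a different driver from the one tested above. The helper names are hypothetical:

```python
import time

import numpy as np


def symmetric_matrix(n, seed=0):
    """Random real symmetric n x n float64 matrix."""
    m = np.random.RandomState(seed).rand(n, n)
    return (m + m.T) / 2.0


def compare_eig_modes(n):
    """Time eigenvalues-only against eigenvalues plus eigenvectors."""
    a = symmetric_matrix(n)
    t0 = time.perf_counter()
    w_only = np.linalg.eigvalsh(a)   # eigenvalues only
    t1 = time.perf_counter()
    w, v = np.linalg.eigh(a)         # eigenvalues and eigenvectors
    t2 = time.perf_counter()
    return {"a": a, "w_only": w_only, "w": w, "v": v,
            "values_seconds": t1 - t0, "vectors_seconds": t2 - t1}


out = compare_eig_modes(300)
print("values only: %.4f s" % out["values_seconds"])
print("values and vectors: %.4f s" % out["vectors_seconds"])
```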

Anyway, I have to do some other work now...

Thanks a lot for your help; it was really useful! I think the benchmark has already exposed some potential library issues. For this specific case, we will get an Intel platform instead to save some time for now. Overall I think Threadripper is still a good CPU; the software ecosystem just needs a little more time to catch up.

My new result on the TR 1950X is 400 GFLOPS. I now realize that the real problem is heat, or power consumption: the TR 1950X at 3.75 GHz draws more than 200 W in a dgemm() loop. It is said that the i9-7980XE can consume 1000 W at full speed. So I want to collect GFLOPS, frequency, and power consumption data for the i7-8700K and the i9.

What's the performance of your new i9?

Hi, sorry about the delay. I had some issues setting up the new server due to a faulty BIOS. I just posted the benchmark on the same GitHub issue page. The results are as follows:

Platform 4: 7960x 2.8G + MKL 2018.0.2, DDR4-2666 128GB Quad Channel

30Kx30K,MM:--- 34.8145999908 seconds ---

20Kx20K,MM:--- 11.1714441776 seconds ---

10Kx10K,MM:--- 1.65482592583 seconds ---

8Kx8K,MM:--- 0.960442066193 seconds ---

4Kx4K, MM: --- 0.162860155106 seconds ---

4Kx4K, Eig:--- 17.041315794 seconds ---

1Kx1K, MM: --- 0.00704598426819 seconds ---

1Kx1K, Eig:--- 0.410210847855 seconds ---

It seems that Eig itself does not scale well, and that this is not an issue only on the 1950X. But the 7960X does outperform the 1950X quite a bit on this benchmark.
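To put "quite a bit" into numbers, the timings posted in this thread can be divided directly. A minimal sketch, with the caveat that the two platforms ran different libraries (a self-compiled OpenBLAS on the 1950X, MKL 2018.0.2 on the 7960X), so the ratios compare whole setups rather than CPUs alone:

```python
# Timings in seconds, copied from the results posted in this thread.
t_1950x = {
    "20K MM": 22.4687049389, "10K MM": 5.40012598038, "8K MM": 2.06533098221,
    "4K MM": 0.335155010223, "4K Eig": 34.5462789536,
    "1K MM": 0.0200951099396, "1K Eig": 1.06898498535,
}
t_7960x = {
    "20K MM": 11.1714441776, "10K MM": 1.65482592583, "8K MM": 0.960442066193,
    "4K MM": 0.162860155106, "4K Eig": 17.041315794,
    "1K MM": 0.00704598426819, "1K Eig": 0.410210847855,
}

# Ratio > 1 means the 7960X setup finished that test faster.
speedup = {test: t_1950x[test] / t_7960x[test] for test in t_1950x}
for test, ratio in speedup.items():
    print("%s: %.2fx" % (test, ratio))
```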

Thank you!