6800xt FPS drops

Hi,

Current PC: PowerColor Red Devil 6800 XT, Ryzen 5 3600, 16 GB at 3466 MHz, ASUS TUF B450-PLUS GAMING, 750 W power supply.

I received a PowerColor Red Devil 6800 XT two weeks ago, and I noticed something is wrong. When I play COD Warzone, I sometimes get FPS drops. Monitoring with MSI Afterburner shows a maximum GPU temperature of 65 °C, so the problem doesn't come from temperatures. The FPS drops coincide with drops in GPU usage and GPU clock. For example, when the clock is around 2300 MHz, GPU usage is above 95% and I get 160 FPS with normal settings in COD. Then, all of a sudden, the clock drops to some arbitrary value, GPU usage falls to 50%, and my FPS drops to 100-110. A friend of mine bought the exact same card, but he has a Ryzen 5 5600X and 16 GB at 3600 MHz, and he has the exact same problem as me. We tried a lot of things, like DDU, uninstalling the drivers, and reinstalling the games.

I ran the Heaven benchmark, and the same thing happens (clock drops and FPS drops).

This is a problem because performance in games is not consistent, and for me consistency is the key to enjoying a game.
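
To pin down when the drops described above happen, one option is to log clock and usage over time and flag the samples where both fall together. The snippet below is only a rough sketch: the sample values, the `find_drops` helper and the thresholds (2000 MHz, 80%) are made up for illustration and do not come from the poster's log.

```python
# Hypothetical monitoring samples: (seconds, core clock in MHz, GPU usage in %).
# These values are invented for illustration; they are not from the poster's log.
SAMPLES = [
    (0, 2300, 97),
    (1, 2310, 96),
    (2, 1450, 52),
    (3, 1500, 55),
    (4, 2290, 95),
]


def find_drops(samples, min_clock_mhz=2000, min_usage_pct=80):
    """Return the samples where clock AND usage are both below their thresholds."""
    return [
        sample
        for sample in samples
        if sample[1] < min_clock_mhz and sample[2] < min_usage_pct
    ]


drops = find_drops(SAMPLES)
for seconds, clock, usage in drops:
    print(f"t={seconds}s clock={clock} MHz usage={usage}%")
```

Lining the flagged timestamps up against what was happening in the game (loading, menu, cutscene, gameplay) should help tell a real problem from normal load changes.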

Warzone is the problem, not the card. Everyone is complaining about FPS drops and poor performance in general with that and other recent COD titles. The devs need to work on that issue. Even Nvidia's 3000 series cards are not so hot in that game.

Yeah, but I ran the Heaven benchmark to check whether it was only COD Warzone, and the same thing happens. So I know that Warzone has some problems, but I don't think that's the cause here...

Random suggestion, but are you running separate power cables to the card? If you're using a single 8-pin cable with a splitter to 8+8 pin, like the ones that come with most power supplies, this can cause issues. It's best to use two separate 8-pin power cables from the PSU. Just a thought off the top of my head...

At first I actually had one split cable, so I switched to two separate cables, but it didn't change anything...

Have you tried turning Radeon Chill on or off? I believe you can do it in game too, with the default hotkey being F11. Try that out and see.

Yes, it is always turned off

Well, for one, Unigine Heaven is more of a stability tester than an FPS indicator. Secondly, you're using a tool developed in the exact code of Warzone, C++, which is very CPU intensive. You're running a 3600, not the hottest CPU, with a B450 board that is also not optimal for AAA gaming. Running a serious game or test means all the hardware needs to be factored in as a whole when looking at results. It would be like putting that card into an AM3+ FX 8150 system and expecting 200 FPS in any game. It's not happening, because the card exceeds the power/speed of the CPU, bus and RAM. You're also missing the benefit of PCIe 4.0, which that card takes advantage of.

Try running 3DMark Time Spy, Superposition and other tests. Try other games. Are you running the test with background apps or unnecessary Windows services on? Is "enhanced sync" on? Have you tried at least moving the minimum GPU clock to within 100 MHz of the max GPU clock and enabling "fast" VRAM? What monitor specs are we talking about? DP or HDMI connection, refresh rate and GTG response all play a part in the smoothness or perceived FPS drop. Remember, these tests weigh heavily on the CPU as well as the GPU, especially single-threaded functions, where AMD 3000 series CPUs fall behind.

I've read that the PowerColor cards, especially the Red Devils, aren't living up to expectations either. So in the end it could be the brand. It's very hard to say given the hardware attached. The hardware in the build is just "OK", not top of the line like an X570 with matching components, where a performance issue could really be pinpointed to the card or something else.

I've also read posts dating back over a year about Nvidia cards having FPS drops in that test, so definitely try others. Bottom line: if you drop in a high-performance GPU expecting miracles with parts that don't totally match, you're in for disappointment. There's a science to building a gaming PC, and just slapping high-priced parts in doesn't mean the rig will fly. I can virtually guarantee that there's nothing wrong with the card itself in this build. My feeling is that many of the complaints have in common lower-end CPUs and boards, even RAM, and an expectation of 300 FPS because the card cost about $1k.

My build is an X570 board with a 3600X/PBO enabled/10XScalar/motherboard voltage, 16 GB DDR4 3733 custom tuned to 3600, 1800 IF, Gigabyte WindForce RX 6800 Gaming OC, Corsair RM750x, Corsair H110i. So far no issues with FPS, and test scores are very nice. I say this to demonstrate matching parts to parts to achieve a desired result, not to brag.

No problem, I understand, and thank you for helping me. Indeed, the Heaven benchmark is a good indicator of stability; that's why I used it, since the problem is a stability problem. One second I have 150 FPS, and the next I have 110.

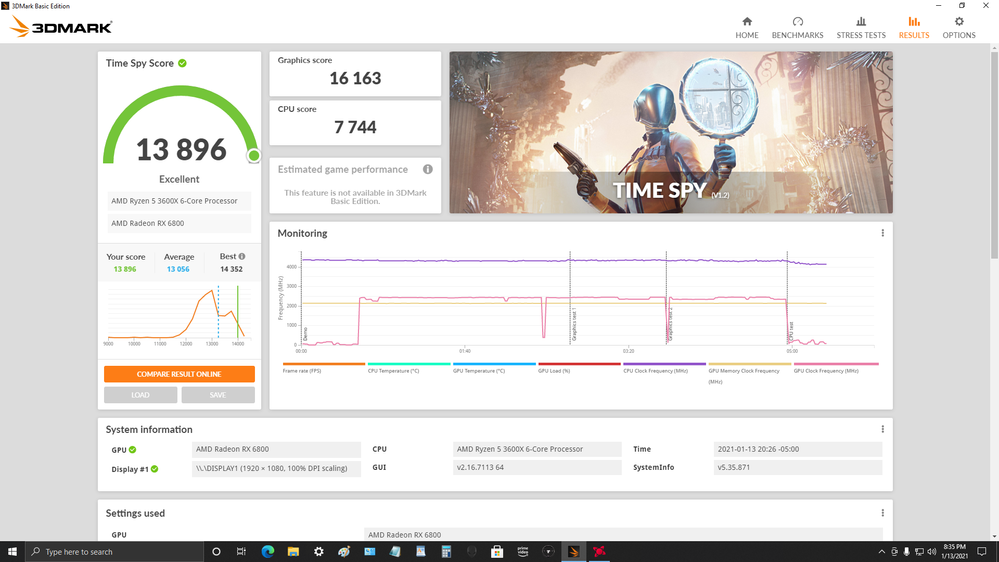

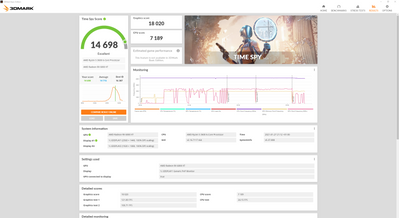

I ran 3DMark Time Spy so you can see my results (but I can't upload it, lol). My total score is 14,698, my graphics score is 18,020 and my CPU score is 7,189.
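
For anyone wondering how those three numbers relate: as far as I understand UL's technical guide, the Time Spy overall score is a weighted harmonic mean of the graphics and CPU scores. The weights below (0.85 and 0.15) are my recollection of that guide, so treat them as an assumption, and `time_spy_overall` is just a name picked for this sketch.

```python
def time_spy_overall(graphics_score, cpu_score, w_graphics=0.85, w_cpu=0.15):
    """Weighted harmonic mean of the two sub-scores (weights are an assumption)."""
    return 1.0 / (w_graphics / graphics_score + w_cpu / cpu_score)


# Scores as posted above.
overall = time_spy_overall(18020, 7189)
print(round(overall))
```

With the posted sub-scores this comes out at about 14,698, which matches the reported total. The graphics score carries most of the weight, so the lower CPU score pulls the total down only moderately.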

The problem could indeed be a CPU bottleneck, but my friend, who bought the same card as me, has the same problem, and he has a Ryzen 5 5600X and 32 GB at 3600 MHz.

And today I also played Jedi: Fallen Order, and the same thing happens. But curiously, during cutscenes, my 6800 XT is at 95% to 100% usage. Then, when it's my turn to play, it drops to 70-80%.

I have a few applications running in the background, but nothing insane (Discord, Steam, Epic Games).

And I forgot to say it, but it is really important: I play at 1440p, so the CPU should not be a problem at this resolution.

I did a lot of research to figure out which GPU I should buy for 1440p, and a lot of reviews showed that my R5 3600 could definitely handle a 6800 XT.

I also set a minimum core clock speed, but it makes no difference: GPU usage still drops.

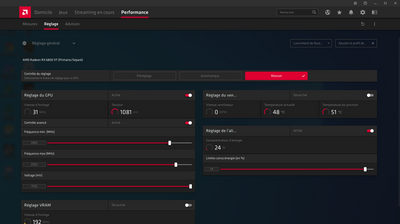

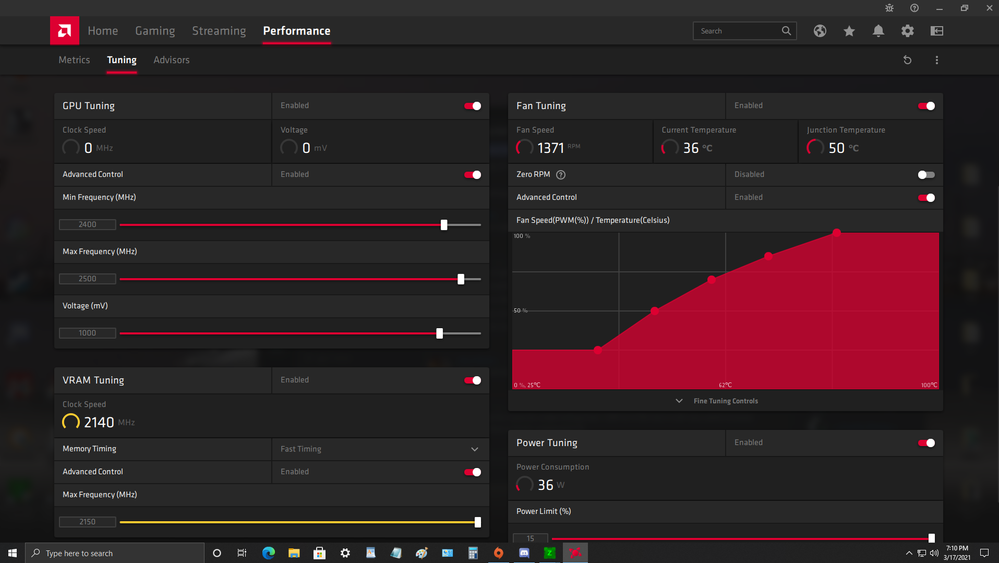

Now it's working

And you can also see the graph of my core clock

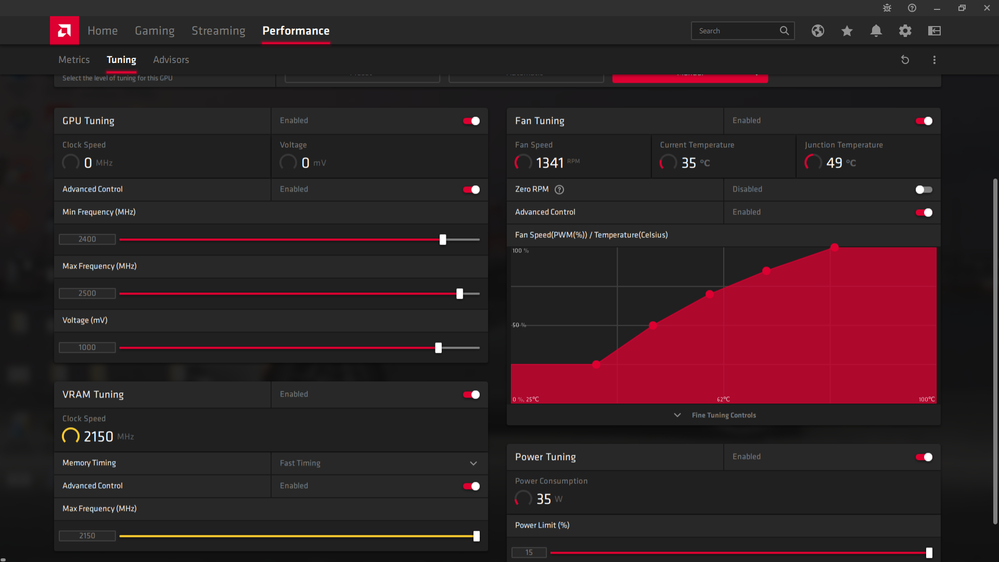

Usage doesn't matter, and 1440p affects the CPU more, if anything, in certain titles. You're drawing more, and smaller, which is why single-threaded usage will go up. All usage tells you is whether the application or game is calling for more or less GPU, not whether it's working properly. Your clock is all over the place, which is why I suggested moving the two clocks to within 100 MHz of each other and setting the VRAM to "fast". Skip the OEM utility for the card and use just the Adrenalin software for best results.

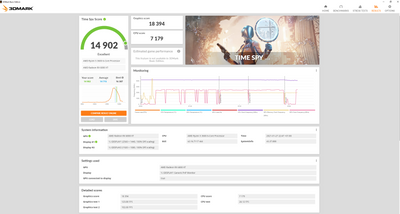

Look at my graph and you'll see near-flatline performance; that's the objective for stability. In my first Time Spy test, new out of the box, my graph looked like yours, with the red line all over the place, and the score was 600 points lower than in the pic I put up.

Your friend's PC is running a 5600X, and I doubt his score is lower or the same. He may get FPS drops in testing; all PCs will, that's part of the test. One cannot compare the performance of a 5600X with that of a 3000 series anything, especially if one is running the X570 chipset and a 6000 series card. AMD CPUs have always been weak in the single-threaded area, and the 5000 series is better but not where it should be.

In any case, glad to see you got it to work better.

I now have these results with these settings. The core clock still drops, and I can't even change the VRAM setting, or the benchmark just crashes...

And I was wondering: when you look at those two graphs, GPU usage goes down as the power consumption goes down as well. I have a 750 W power supply from Aerocool. I didn't know it when I bought it, but those power supplies have a very bad reputation. Could this be the problem?
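
One way to put a number on "usage goes down as power goes down" is a correlation coefficient over the logged samples. The sketch below uses invented values (not read off the graphs in this thread) and a hand-written `pearson` helper. Keep in mind that a high correlation is expected either way, because a GPU that is asked to do less work also draws less power, so on its own this cannot prove the power supply is at fault.

```python
from math import sqrt

# Invented samples for illustration only; not taken from the poster's graphs.
usage_pct = [97, 96, 55, 52, 95, 60]
power_w = [250, 245, 140, 135, 240, 150]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


r = pearson(usage_pct, power_w)
print(f"r = {r:.3f}")
```

A more telling check for the PSU would be whether drops still occur with a different, known-good power supply.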

Hi brother, I have the same issue with an XFX MERC 6800 XT, an X570 mainboard and a 3600XT CPU.

Were you able to solve this problem?

How important is it to use two separate PCIe cables? Can a daisy chain cause performance loss in older titles like Battlefield 4, League of Legends or Apex Legends? Currently I am using a daisy chain, and I would have to get another PSU if I wanted more PCIe cables.

One six-pin cable carries 75 watts; an eight-pin cable carries 150 watts. And do not use Molex adapters for 6- or 8-pin connectors. Given the voracity of modern video cards, the cables simply cannot withstand such a load: they will melt and there will be a short circuit.

Old question, but in any case, the graphs show a drop at a particular timeframe. Does that correspond with a frame drop situation or is it an actual "movie" part of the game where frames often go to ~25-50 FPS?

Another thing is that the Tjmax seems high at 80 °C+. My non-X only hits ~60 °C in games running on max graphics and OC'ed to the hilt. You might want to change the fan profile or get some more airflow in there. Yes, it's in spec, but too high? Absolutely, in my book. AMD cards always seem to have an issue around the 77 °C mark on the VRAM. The "hotspot" seems to be the same way.

Make sure you use two separate 8-pin power connectors, not the split cable. In earlier posts (see pic below), my settings are for a non-XT Gigabyte RX 6800. The XT uses a tad more power, but not enough to call for 84 °C. In Radeon settings under "performance", enable all adjustments, then select "manual", select "under-volt GPU" and write that number down; on the same screen, enable "fan tuning". Make sure to turn off any "zero RPM" option and make your own fan curve, similar to mine, for the coolest results. It's not the least noisy, but far from loud. Cooler is better no matter how you achieve that goal, to a point.

It's not about the games. In any game, disable the frame rate limiter and the card should work at full capacity (unless, again, it is limited by the processor). Your card has a peak consumption of 270 watts with the OC BIOS and 265 watts with the Silent BIOS, fed by two eight-pin cables (150 + 150 watts). Modern power supplies usually have at least two 8-pin cables for powering the video card, but each cable may be split into several connectors (2 x 6-pin, 2 x 8-pin), and the cable as a whole still carries only 150 watts. It may also be that cheap motherboards ship with a BIOS that enforces a power limit on the processor. For example, take a 5800X with a TDP of 105 watts: an expensive motherboard has no TDP limit, so under load the 5800X will run not at 105 watts but at 200 watts, while on a cheap board it will stick to the TDP. That is, if under load it hits the 105-watt TDP at 3.5 GHz, it will not raise the frequency any higher, although it could run at 4.7 GHz or more (that depends on the subsystem, of course).
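
The power arithmetic from the posts above can be written out as a small sketch. The connector ratings and the 270 W peak are the figures quoted in this thread; the 75 W for the PCIe x16 slot is added by me as an assumption based on the PCIe specification, and `power_budget` is just a name chosen for the example.

```python
# Connector ratings as quoted in this thread.
CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}
# Assumption: an x16 PCIe slot can supply up to 75 W (not stated in the thread).
SLOT_WATTS = 75


def power_budget(connectors, include_slot=True):
    """Total rated power in watts for the given list of connector types."""
    total = sum(CONNECTOR_WATTS[name] for name in connectors)
    return total + (SLOT_WATTS if include_slot else 0)


budget = power_budget(["8-pin", "8-pin"])
peak_oc_bios = 270  # W, peak draw with the OC BIOS as stated in the post above
print(f"budget={budget} W, headroom={budget - peak_oc_bios} W")
```

On paper two separate 8-pin cables leave headroom over the quoted peak, whereas a single daisy-chained cable rated at 150 W would not cover a 270 W card even with the slot included (150 + 75 = 225 W).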

Your video card has two BIOSes: one is OC, the other is Silent. There is a small switch on the video card itself. Turn off the PC, move the switch to the other position, turn on the PC and play; then turn it off again and switch back. It helped me, and the frequencies stopped jumping on my RX 6900 XT (I can do it through the utility, though). For you this is the only way, and only with the PC turned off!

AMD FreeSync