AMD Community > Support Forums > PC Drivers & Software


Two NVMe SSDs in RAID 0 are slow

Hi, I recently built a system on an Aorus X470 Gaming 7 with a Ryzen 7 2700X CPU and two NVMe SSDs (Samsung 970 EVO) in RAID 0. After creating the RAID 0 array and installing Windows 10 (loading the RAID driver during setup so the installer can detect the array), I ran a performance test with Samsung Magician and got 74,218 IOPS in random read and 59,326 IOPS in random write. With a single drive, without UEFI and without RAID 0, performance is noticeably higher, and you can feel the difference in boot speed too: an 850 Pro SATA SSD boots faster than this array. It seems absurd. The only thing I can think of is the RAID driver provided by AMD.

Any solution?

Thanks in advance
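To put the random-read and random-write figures above in perspective, IOPS can be converted to throughput by multiplying by the transfer size. This is a minimal sketch that assumes 4 KiB transfers; the thread does not state the block size Samsung Magician used, and `iops_to_mb_per_s` and `BLOCK_SIZE_BYTES` are illustrative names, not part of any tool.

```python
# Convert a random-I/O IOPS figure into approximate throughput.
# ASSUMPTION: each random I/O is 4 KiB (4096 bytes); the thread does not
# say which block size the benchmark used.

BLOCK_SIZE_BYTES = 4096  # hypothetical: 4 KiB per random I/O


def iops_to_mb_per_s(iops: int, block_size: int = BLOCK_SIZE_BYTES) -> float:
    """Return throughput in MB/s (10**6 bytes per second)."""
    return iops * block_size / 1_000_000


# Figures reported by the original poster for the 2x 970 EVO RAID 0 array.
raid0_random_read_iops = 74_218
raid0_random_write_iops = 59_326

print(f"random read:  {iops_to_mb_per_s(raid0_random_read_iops):.1f} MB/s")
print(f"random write: {iops_to_mb_per_s(raid0_random_write_iops):.1f} MB/s")
```

Under that assumption the array's random reads move only about 0.3 GB/s, far below what any PCIe x4 link can carry, so raw link bandwidth is unlikely to be the whole explanation for these numbers.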

---

I know this is a zombie thread, but I wanted to give you folks some more information and confirm your findings. I work with extreme-performance enterprise drives, and you're correct. After digging into why performance is... well, bad on drives that should be running circles around the CPU, I found that the NVMe RAID driver is the cause.

4x Intel Optane on a Ryzen 9 3900X with DDR4-4000: 1.2 million IOPS, 7 GB/s sequential read (AMD RAID disabled, driver not installed).

4x Intel Optane on the same Ryzen 9 3900X: 300,000 IOPS (4K, QD64), 7 GB/s sequential read (AMD RAID enabled, no RAID volume created, driver installed).

That is a 73% loss in random IOPS just from enabling AMD RAID and installing the driver, while sequential read stays the same.

You're better off not using the "onboard" (CPU) RAID and using either a Windows stripe or span instead.
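The size of the drop can be checked from the rounded figures in the post. In this minimal sketch (the function name `percent_drop` is hypothetical), 1.2 million versus 300,000 IOPS works out to 75%; the poster's 73% presumably comes from unrounded measurements, which the thread does not give.

```python
def percent_drop(before: float, after: float) -> float:
    """Percentage decrease going from `before` to `after`."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100


# Rounded figures quoted in the post (4x Intel Optane, Ryzen 9 3900X).
iops_raid_off = 1_200_000  # AMD RAID disabled, driver not installed
iops_raid_on = 300_000     # AMD RAID enabled, driver installed, no volume
seq_gbps_off = 7.0         # sequential read, GB/s, RAID disabled
seq_gbps_on = 7.0          # sequential read, GB/s, RAID enabled

print(f"random IOPS drop:     {percent_drop(iops_raid_off, iops_raid_on):.0f}%")
print(f"sequential read drop: {percent_drop(seq_gbps_off, seq_gbps_on):.0f}%")
```

Sequential read showing no drop while small random I/O falls sharply is consistent with a per-I/O overhead in the driver path rather than a bandwidth limit, though the figures alone cannot prove where that overhead comes from.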

---

After 6 hours of testing I still could not find the problem... it seems the AMD RAID driver doesn't get along with the 970 EVO SSDs in RAID 0.

Testing a single SSD, installing Windows 10 and benchmarking again in Samsung Magician, the performance is very high.

Samsung's site has an NVMe M.2 driver, but (rightly) installing it invalidates the AMD RAID driver, and the result is a crash at operating system startup.

---

It's not your drives; I have the same problem.

---

Hi, I read the various answers. When I had the problem I realized something dumb: it's true that if you put two SSDs in RAID you get more transfer in MB/s, but IOPS fall. My problem was that the M2B NVMe slot was PCIe 2.0, so building a RAID on that restricted bandwidth forced the array's performance down. To this day I remain disappointed with Gigabyte's decision not to wire both NVMe slots for PCIe 3.0. The solution would be a PCIe 3.0 card dedicated solely to the SSD RAID, but from what I read on this forum you would have the same problems. In any case, thanks for the answer.
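A rough way to see why one slower M.2 slot drags down the whole array: in an idealized RAID 0, each large transfer is split evenly across the members, so the array finishes only when the slowest member does. This sketch assumes both slots are x4 (the post only says the M2B slot is PCIe 2.0), ignores controller and driver overhead, and uses approximate link ceilings; all names are illustrative.

```python
# Idealized RAID 0 model: data is split into equal stripes across members,
# so a large sequential transfer completes only when the slowest member does.
# ASSUMPTIONS: equal stripe distribution, no controller/driver overhead, and
# each drive limited only by its PCIe link (approximate usable bandwidth).

LINK_CEILING_GBPS = {
    "PCIe 2.0 x4": 2.0,   # approx. 500 MB/s per lane after 8b/10b encoding
    "PCIe 3.0 x4": 3.94,  # approx. 985 MB/s per lane after 128b/130b encoding
}


def raid0_sequential_ceiling(member_gbps: list[float]) -> float:
    """Upper bound on RAID 0 sequential throughput: N x slowest member."""
    if not member_gbps:
        raise ValueError("array needs at least one member")
    return len(member_gbps) * min(member_gbps)


mixed = [LINK_CEILING_GBPS["PCIe 3.0 x4"], LINK_CEILING_GBPS["PCIe 2.0 x4"]]
matched = [LINK_CEILING_GBPS["PCIe 3.0 x4"]] * 2

print(f"mixed Gen3 + Gen2 slots: {raid0_sequential_ceiling(mixed):.2f} GB/s")
print(f"both slots Gen3 x4:      {raid0_sequential_ceiling(matched):.2f} GB/s")
```

This model only bounds sequential throughput; it says nothing about per-I/O latency, which is what usually limits small random IOPS.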

---

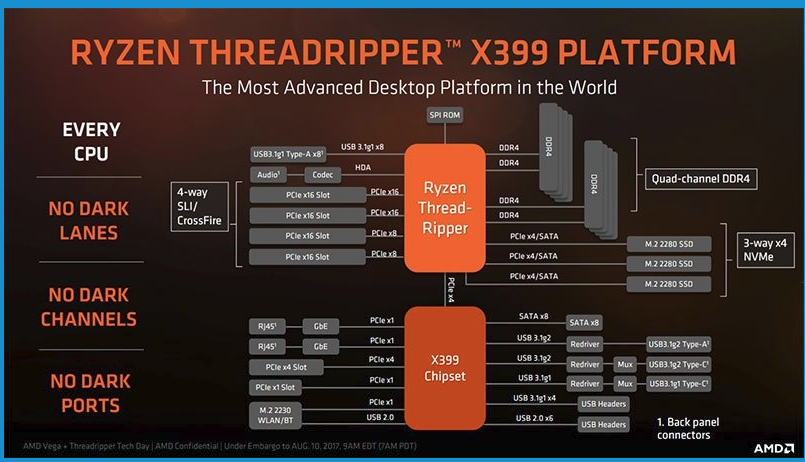

I have a TR4 Threadripper motherboard, an Asus Prime X399-A, which does have PCIe 3.0, and it has made no difference whatsoever.

On my motherboard it's the AMD RAID implementation, not the M.2 NVMe interface or a lack of PCIe lanes, of which I have 64! And I have only one card installed, an AMD RX 580 graphics card.

---

OK, just so I understand: you are using the motherboard's dedicated M.2 PCIe slots, and you have the same problem as me?

If so, then either the AMD RAID drivers can't handle it or the CPU can't handle all this speed... because after these attempts I switched to a single 970 Pro M.2 SSD and I still have IOPS problems (only milder).

I'll leave a link to a post on a forum where we discussed this problem (in Italian):

https://forum.tomshw.it/threads/velocit%C3%A0-bassa-samsung-970-pro-512gb.732247/

---

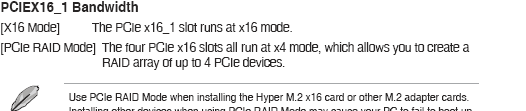

I don't get to allocate PCIe lanes to the M.2 slots. The BIOS manual describes a setting for this, but it says to use it ONLY if you install an M.2 adapter card.

I have tried both X16 mode and PCIe RAID mode with no difference whatsoever, which makes sense, since I have no M.2 adapter card in any of the PCIe slots.

According to AMD, each M.2 slot gets a direct PCIe x4 connection.

A single PCIe 3.0 x4 link should be able to handle 3.94 GB/s. I have two, one for each M.2 drive, and they are connected directly to the Threadripper processor. So what is the problem? The problem is the AMD driver/software. It's rubbish. Maybe you have a similar problem with your Ryzen 7 2700X.
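The 3.94 GB/s figure is the usable bandwidth, per direction, of a full PCIe 3.0 x4 link (four lanes at roughly 0.985 GB/s each). The sketch below derives it from the transfer rate and line encoding and shows the PCIe 2.0 x4 value for comparison; it deliberately ignores protocol overhead, so real drives land somewhat lower. The helper name `link_bandwidth_gb_per_s` is hypothetical.

```python
# Usable PCIe bandwidth per direction = transfer rate x encoding efficiency x lanes.
# Packet/protocol overhead (TLP headers, flow control) is ignored here, so
# real NVMe throughput ends up somewhat below these figures.
from fractions import Fraction

# Generation -> (transfer rate in GT/s, line-encoding efficiency)
PCIE_GENERATIONS = {
    2: (5, Fraction(8, 10)),     # PCIe 2.0: 8b/10b encoding
    3: (8, Fraction(128, 130)),  # PCIe 3.0: 128b/130b encoding
}


def link_bandwidth_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s (10**9 bytes/s)."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    gbit_per_lane = rate_gt_s * efficiency  # payload Gbit/s per lane
    return float(gbit_per_lane * lanes / 8)  # 8 bits per byte


for gen in (2, 3):
    print(f"PCIe {gen}.0 x4: {link_bandwidth_gb_per_s(gen, 4):.2f} GB/s")
```

Using exact fractions keeps the 128/130 ratio from picking up rounding error before the final conversion to a float.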

---

You can check the link speed of your connected M.2 drives using HWiNFO. This works even when they are configured in RAID; it should report Gen 3 x4. There is another post here discussing the performance issues with AMD M.2 RAID, linked below.

MSI MEG x399 Creation - 8 NVME bootable raid 0 - Slow Performance

---

At the time I blamed the driver, but I didn't want to keep going, partly because I needed the machine for work back then. The idea was to buy a PCIe M.2 controller card to confirm my theory; your post confirms it. I don't understand why AMD still hasn't done anything about it. I wanted to ask: which chipset do you work with, X570?

Some time ago I also asked on other forums, but only a few people had run into this problem.

---

staggeredsix said "and doing either a Windows strip or span."

Well, that's just plain embarrassing. The day Windows software RAID outperforms a hardware RAID is a sad day indeed.

I mean embarrassing for AMD of course!!