Crysis 3 low FPS drops & low GPU utilization with RX 480

Hi everyone,

Here are my specs:

Windows 10 Professional 64-bit (up to date)

16GB (2x8GB) HyperX Fury DDR3 1866MHz RAM

AMD FX 8350 stock clocks

MSI Radeon RX 480 Gaming X 8G (19.5.1 drivers)

MSI 990FXA Motherboard (latest BIOS)

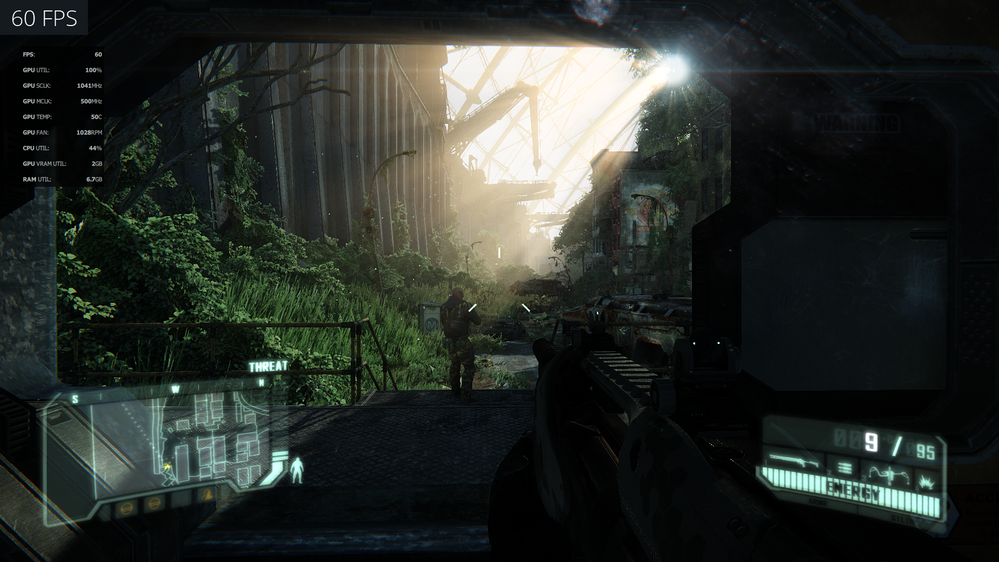

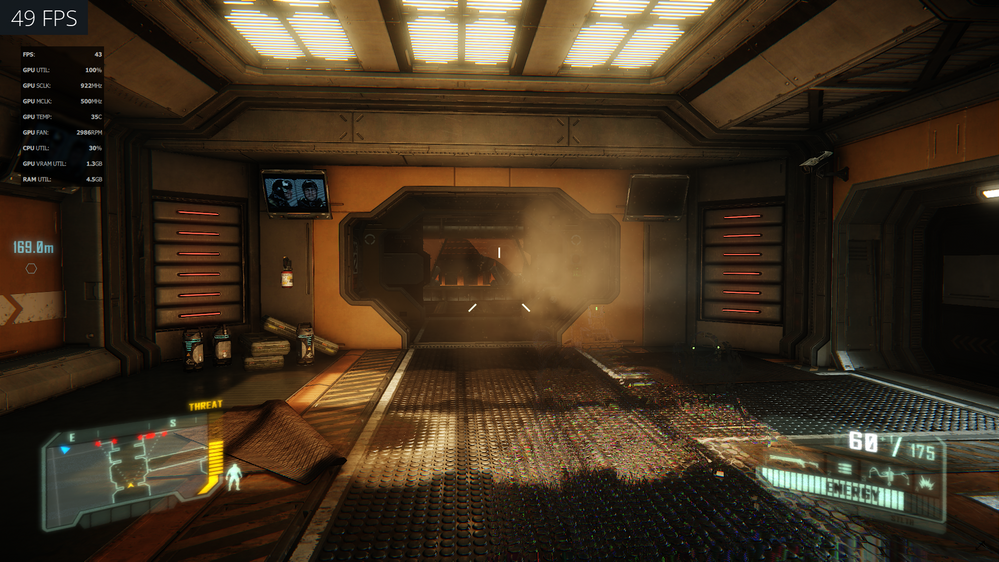

Crysis 3 is still experiencing low GPU utilization, and FPS drops down to 17FPS in certain areas, as you can see in the attached screenshots. These screenshots are from the first mission, part 4 I think, just after you come through the green laser-beam detection room and look through the windows.

Please note that it is not a CPU bottleneck: I have also tested it with my i5 8400 and only achieved 21FPS in this scene. This exact RX 480 achieved 50FPS (100% GPU utilization at its 1303MHz stock clock) in this scene in our old system with Windows 7 Home 64-bit and an i5-4670K, using early Crimson drivers that were installed for our R9 280X but worked with the RX 480, at the exact same Very High preset and 1920x1080 resolution.

In addition, the frame rate drop is still present with Radeon Chill disabled.

Kind regards

Jacques

Can you post some pictures showing the exact Video / Graphics options and the Advanced Graphics options you used in Crysis 3 please?

Also, please send me screenshots of the Radeon Settings Global Settings, i.e. Global Graphics and Global Wattman, and also the Crysis profile settings, i.e. Profile Graphics and Profile Wattman.

Thanks.

Hi colesdav

Thank you for your reply.

I will do so sometime this week when I have time. But given all the RX 480 driver issues so far, and the findings I mentioned in this post, I am convinced it is driver related.

Crysis 3 runs a CPU and GPU benchmark at the first launch of the game, and defaults my configuration to Very High settings. Furthermore, I use stock clocks for all my hardware components, so I barely ever open the Wattman tab.

Radeon Settings and the game profile are at default, but enabling settings like Chill, FPS limit or Power Efficiency does not have any effect on the frame-rate drops.

In the case of Unreal Tournament 3, the DX driver team notified me through the OpenGL team that the performance drop between 16.9.2 and 19.4.3 was related to too many ambient calls between the game and driver.

My best assumption is therefore that somewhere in the driver code, some functions are making too many recursive function calls, which then bottleneck on a small portion of the GPU cores and leave the other thousands of GPU cores, as well as the eight CPU cores, waiting for work.

A bottleneck like that is not visible in utilization monitoring software such as MSI Afterburner, because GPU utilization will show as, for example, 12% if 340/2804 stream processors are working at 100% on recursive calls such as ambient calculations.
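
The averaging argument above can be sketched in a few lines of Python. This only illustrates that reasoning, not how the driver or MSI Afterburner actually derives its utilization figure. The function name is hypothetical, and the 340 and 2804 figures are the illustrative ones from the post (the RX 480's published stream processor count is 2304, for which the same 340 busy units would read as roughly 15%).

```python
def aggregate_utilization(busy_units: int, total_units: int,
                          load_per_busy_unit: float = 1.0) -> float:
    """Average utilization (in percent) when only some units are busy.

    Hypothetical model: every busy unit runs at load_per_busy_unit
    (1.0 means 100%) and every other unit sits idle.
    """
    if total_units <= 0:
        raise ValueError("total_units must be positive")
    if not 0 <= busy_units <= total_units:
        raise ValueError("busy_units must be between 0 and total_units")
    return 100.0 * busy_units * load_per_busy_unit / total_units


# Figures as given in the post (illustrative, not measured).
print(f"340 of 2804 busy: {aggregate_utilization(340, 2804):.1f}%")
# Same busy count against the RX 480's published 2304 stream processors.
print(f"340 of 2304 busy: {aggregate_utilization(340, 2304):.1f}%")
```

In this model a small group of fully loaded units is reported as a low overall percentage, which is the effect the post describes.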

Looking at the screenshots, where utilization is relatively low on all major components, it seems clear to me that this is a software/driver bottleneck.

Kind regards

Jacques

Please keep in mind this happens with an i5 8400 as well, which has a lot more computing power than an i5 4670K (which didn't bottleneck the same RX 480 on Windows 7 with R9 280X drivers).

Had to reinstall the game on an appropriate PC.

So.

This is where I am.

Any further?

Hi colesdav

Thank you for your efforts.

I also get 60FPS in the area shown in your screenshot. You are about one or two sub-chapters past the area where I took my screenshots.

You have to pass through a green laser room just before the area with the FPS drops. This is just after fighting the helicopter in the rain on the bridge.

Sorry I had already sent this message an hour before, but this is the third time this website signs me out while typing and sending.

In addition, I would like to ask which CPU you are benchmarking this with?

Kind regards

Jacques

Hi hitbm47

Thanks for the information.

RE: Sorry I had already sent this message an hour before, but this is the third time this website signs me out while typing and sending.

Understood. It keeps happening to me as well. I frequently lose responses I am typing, or responses get saved before I can correct typing errors.

I frequently get locked out of posts for many hours after submitting and cannot even correct any errors.

I do not know what is happening.

I have tried the latest versions of Firefox and Chrome.

I will go back to the area you mentioned, take a look and then see what happens on a couple of different Intel and AMD processors and various AMD and Nvidia GPUs. On the Intel side I have multiple older i7-4770K/4790K based machines which are all AIO liquid cooled and still competitive today for use in gaming tests versus the Ryzen 2700X. I also have a Ryzen 2700X build that I can try your test case on. I am in the process of building a couple of new Intel based builds using the latest high-end Intel processors, so I may be able to look at this test case using those builds later on.

I can change BIOS settings to turn off cores and/or hyperthreading and modify CPU operating frequencies to mimic the performance of your AMD FX 8350.

A comparison of the CPUs is here: https://cpu.userbenchmark.com/Compare/Intel-Core-i7-4790K-vs-AMD-FX-8350/2384vs1489

I stayed away from AMD CPUs for personal use from the first-gen Intel i7 until my recent Ryzen 2700X build.

Thanks.

And here is the comparison of Ryzen 2700X versus i7-4790K:

https://cpu.userbenchmark.com/Compare/Intel-Core-i7-4790K-vs-AMD-Ryzen-7-2700X/2384vs3958

Thanks.

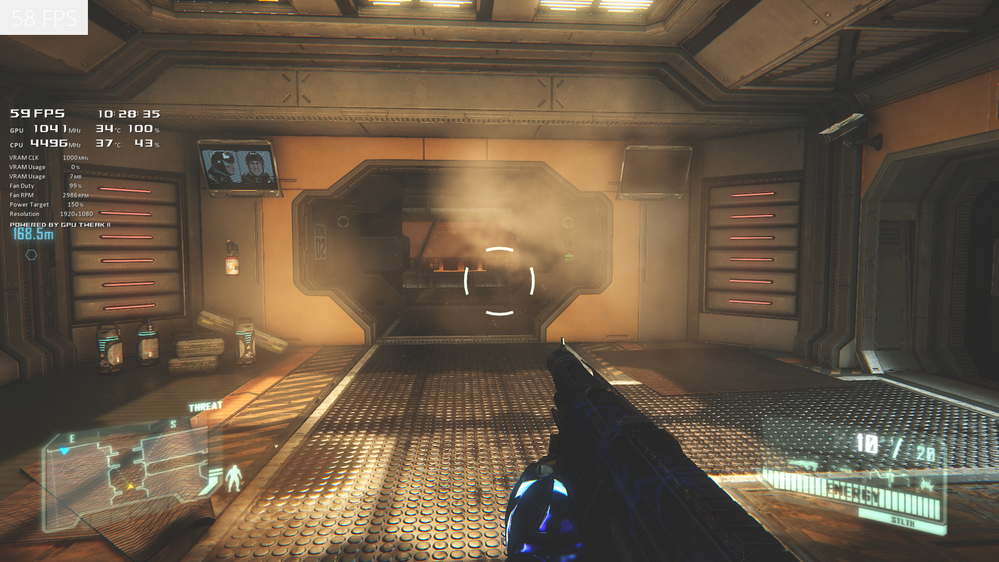

OK I got there.

So I have a few observations to make.

I took a look on the following system:

CPU: i7-4790K on H100i water cooler.

MB: Asus Z97 Deluxe NFC Wireless.

RAM: 32GB Crucial Ballistix Tactical LP DDR3.

GPU 1: R9 Fury X, PCIe 3.0 x8. Primary GPU.

GPU 2: R9 Fury X, PCIe 3.0 x8. Secondary GPU.

GPU 3: R9 Nano, PCIe 2.0 x1.

GPU 4: R9 280X, PCIe 2.0 x1.

You can ignore the last 2 GPUs for these tests, but I mention them because they are shown in Radeon Settings.

I am running Adrenalin 19.6.1

I set Global FRTC to 59 and FreeSync is on

Chill is off for these shots.

I am running Crysis 3 at 1080p full-screen resolution, Very High settings.

I killed all non-essential processes, with fans all maxed out.

So first of all, with GPU 1 active, CrossFire off, Radeon Performance Overlay off and Radeon ReLive video recording off, I see this performance:

When I turn on Radeon Performance Overlay the FPS drops, then when I turn on Radeon ReLive to record what happens I get another drop in performance, to 49 FPS.

That's at least a 17% performance hit just to turn on the Radeon Performance Overlay and record at 1080p, which is pathetic. This must be a bug in ReLive or RPO.
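
As a rough check of that figure, here is how the drop to 49 FPS works out if the baseline was the 59 FPS FRTC cap. That baseline is an assumption, since the baseline screenshot is not reproduced here. The helper names are hypothetical.

```python
def percent_drop(baseline_fps: float, measured_fps: float) -> float:
    """Relative FPS loss, as a percentage of the baseline."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return 100.0 * (baseline_fps - measured_fps) / baseline_fps


def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


baseline = 59.0   # assumed: the Global FRTC cap mentioned in the post
measured = 49.0   # FPS reported with RPO and ReLive recording on

print(f"FPS drop: {percent_drop(baseline, measured):.1f}%")
print(f"Extra time per frame: "
      f"{frame_time_ms(measured) - frame_time_ms(baseline):.2f} ms")
```

Under that assumption the drop comes out just under 17%, or roughly 3.5 ms of extra time per frame.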

Moving on.

By the time I walk down the corridor to look out that window you can see in the distance, the FPS has dropped to 32 FPS:

CPU utilization isn't even at 50%, but I need to show the Task Manager performance charts to give a better picture.

I will post that info later.

I had to kill some enemies, but then I went back to the window shown and looked at how FPS varies with direction. I rotated the character slowly, and then turned back to look out the window.

Even a pair of R9 Fury Xs in CrossFire still cannot maintain 60 FPS at the problem spot when I look out the window at the bottom of the corridor.

I will post a few shots of that next.

Here is a screenshot of a pair of R9 Fury X in DX11 CrossFire.

A couple of screenshots showing the impact of using AMD Adrenalin 2019 19.6.1's own Radeon Performance Overlay (RPO) to show FPS.

ReLive is on, but not recording video.

Asus GPU Tweak II Performance Overlay is also running and it has no impact. Using it does not hurt FPS.

RPO Off.

=======

RPO on.

=======

Submitted an AMD reporting form about this 17% reduction in FPS from RPO and ReLive recording:

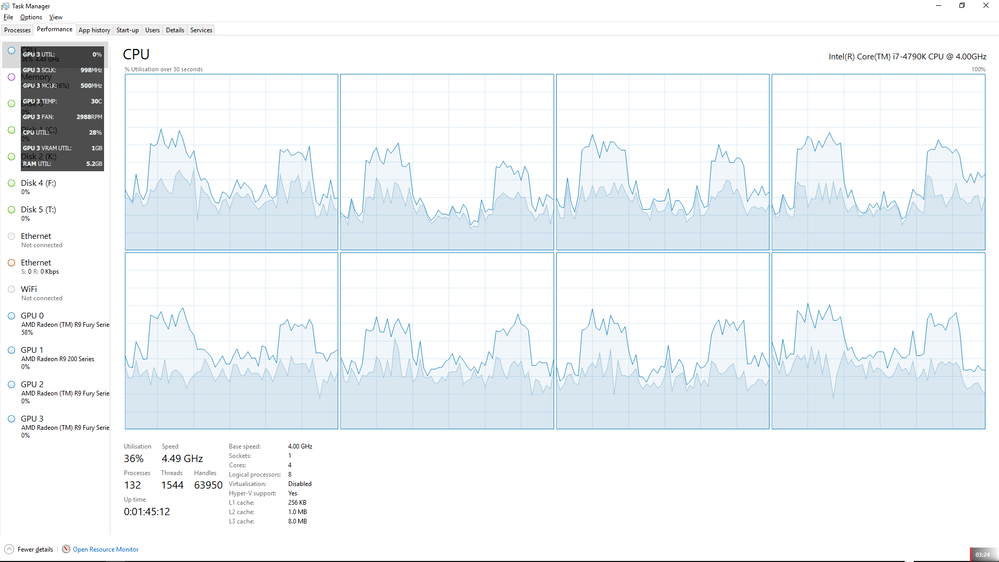

Here is the CPU Utilization:

The utilization peaks start at the top of the corridor and cover walking down to the window with the serious FPS drop, rotating the character, and walking back up the corridor.

The utilization minimums are due to Alt-Tabbing to the desktop to take the Task Manager screenshots.

Looks like the game is running reasonably evenly across 4 cores and 8 threads, and there is enough spare CPU utilization available.
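
One caveat when reading Task Manager charts is that a low total CPU figure can hide a single saturated thread. The following is a minimal sketch of that check, assuming per-thread utilization samples are available. The function name, the sample values and the 95% threshold are made up for illustration and are not measurements from this thread.

```python
from statistics import mean


def likely_limiter(gpu_util: float, per_thread_util: list[float],
                   threshold: float = 95.0) -> str:
    """Very rough classification of what is holding the frame rate back.

    Hypothetical heuristic: a component counts as saturated once its
    utilization reaches the threshold (in percent).
    """
    if gpu_util >= threshold:
        return "gpu"
    if any(u >= threshold for u in per_thread_util):
        return "cpu-thread"
    return "neither saturated"


# Invented samples: load spread evenly over 8 threads, GPU at 40%.
even_load = [48, 52, 45, 50, 47, 51, 44, 49]
# Invented samples: one thread pegged, total CPU still only 25%.
one_hot_thread = [100, 20, 15, 10, 12, 18, 14, 11]

for name, threads in (("even", even_load), ("one hot", one_hot_thread)):
    print(f"{name}: total CPU {mean(threads):.1f}%, "
          f"limiter: {likely_limiter(40.0, threads)}")
```

If neither the GPU nor any single thread is saturated, as in the first sample, the limit is more likely to be somewhere in software than in raw hardware capacity, which is the argument being made in this thread.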

I have a video uploading now showing turning on the desktop recorder and performance overlay, launching Crysis, walking up and down that corridor and then Alt-Tabbing to the desktop to take the Task Manager screenshots, just in case you want to take a look.

I think I have shown enough, but if you really want, I can look at how it runs on a Ryzen 2700X and RX Vega 64 Liquid. I can also show it running on Nvidia GPUs, such as a GTX780Ti to compare to the R9 Fury X, or an RTX2080 to compare to the RX Vega 64 Liquid, which I guess would show whether the problem is a game issue or an AMD driver-specific issue.

I will do no more on this unless you need any more data.

Thanks.

Here is a link to the Test Video.

Crysis 3 poor performance. - YouTube

I will update details on the Video description when I can.

Please let me know if you have any questions.

Thanks.

Hi colesdav

Wow, thank you very much for your major efforts in reproducing the GPU performance drop issue!

About a year ago, after convincing a friend to do the swap, I tested an EVGA Nvidia GTX 1070 in my exact FX 8350 system. The result was higher CPU utilization (about 70% total, versus 50% total with my RX 480) and a constant 50FPS+ compared to my 20FPS with the RX 480, which proves it is an AMD driver issue and not a CPU bottleneck. I then tested my exact RX 480 in our i5-4670K system with Windows 7 Home 64-bit SP2 and R9 280X drivers, and the RX 480 was able to maintain a solid 50-60FPS throughout that whole area with 100% GPU utilization at all times.

Thus your i7-4790K should have no issues at all maintaining 60FPS+ in that area if an i5-4670K was able to do it. Furthermore, I reported this issue numerous times to tech support on amd.com, and they just replied that the issue had already been reported to the driver team and that they weren't able to reproduce it on a similar system.

That is why I decided to join this forum to find some way to speak to the developers more directly, not to be a nuisance but because I am convinced the hardware has a lot of potential which isn't being utilized properly.

Hi dipak, dorisyan, xhuang, I would just like to ask if anyone on the driver teams has seen the efforts colesdav has put in to reproduce the results on a system that is very capable, even by today's gaming standards?

Kind regards

Jacques

Hi,

Thanks for the compliment.

It wasn't a major effort though.

Maybe a couple of hours.

I doubt anyone in the drivers team will look at anything related to R9 Fury X or even RX 480 performance.

It is especially unlikely for an older game like Crysis 3.

I reported an issue with R9 Fury X / Nano performance versus the RX580 4GB in Forza Horizon, and even showed that a GTX780Ti 3GB can run the benchmark, to prove it is not a memory issue. Well, no one cares, and there has been no answer or investigation.

I doubt even reporting an issue on Crysis 3 with the RX Vega 64 Liquid would get looked at.

I think the AMD drivers team only worries about performance issues on their latest GPUs, and once the new one comes out you can forget it.

I think you would have to wait until July 7 and buy the Polaris replacement GPU, the RX5700XT, for a very high price, and then you might be in with a chance if any reviewers out there are including Crysis 3 in their review benchmarks.

Good luck.

Bye.

colesdav, well, apparently they fixed issues in two games which I reported for the RX 480, but these fixes have not been present in any of the 5 drivers released afterwards.

What I do appreciate is the fact that they made Enhanced Sync and Chill backwards compatible with older GCN cards at some point.

But you are right, they do not seem very interested in fixing older driver bugs, nor, in my case, do they necessarily trust the credibility of the performance reports.

Jacques

They miss a real opportunity with Radeon Chill. It needs improving so it is usable and really saves power without killing keyboard only input FPS.

I have many posts about it, and about what I believe needs to change. I also tested the complete set of "supported games" to prove those problems but, again, no one cares to listen to user feedback. I didn't bother to post the complete set of videos because no one listens or fixes it anyhow.

I reported that rapid mouse movement causes excessive power draw, but the "solution" to that in Adrenalin 2019 was to lock it to Chill_Min, and it doesn't always lock. In other words, it is still broken. I would like AMD to focus on fixing and improving Chill at the expense of AMD Link, because it looks like those new RX5700XT GPUs with their blower-style coolers will need to use Chill.

Hi @colesdav

Thus far I am quite impressed with Radeon Chill with my RX 480. It does indeed report much less wattage usage in the overlay and only uses max stock wattage when moving the mouse fast to achieve max FPS and Megahertz possible when GPU utilization does actually reach 100% to achieve the desired frame-rate.

But what I have noticed is that my FRTC barely works when Chill is disabled, thus I have to set Chill Min equal to Chill Max to achieve a locked FPS, and I am uncertain how compatible Chill and FreeSync are, even within the FreeSync range.

Jacques

I am interested in discussing how Chill and Global FRTC are behaving on your RX480, if you would like to look into it in detail. It would be good to compare results.

RE: Thus far I am quite impressed with Radeon Chill with my RX 480. It does indeed report much less wattage usage in the overlay.

There are a number of ways to bring down GPU power, and you need to define the FPS targets, game, game settings, Global and Local settings in Radeon Settings, etc. The best thing to do is to set the FPS limiter in the game engine itself, if it has one.

If you want to do a comparison test of how Chill runs and performs on an RX480, RX580 or RX 590 versus an RX Vega 64 Liquid, I would be very interested to look at that with you, but we should open a new thread on this forum to discuss it so we do not divert this thread too far from your original topic. We should use an officially supported game to test it, and run with the latest version of Adrenalin 2019.

RE: and only uses max stock wattage when moving the mouse fast to achieve max FPS and Megahertz possible when GPU utilization does actually reach 100% to achieve the desired frame-rate.

It shouldn't do that in any Adrenalin 2019 release. With Chill on, the FPS during rapid mouse movement should now be clamped to the value of Chill_Min. Letting rapid mouse movement incur unlimited FPS does two things. It costs more power for no real reason, as the game image will be far too blurry anyhow. And if FPS rises above the top end of your FreeSync range, it can cause screen tearing.
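
The FreeSync point can be illustrated with a small range check. This is only a sketch: the function name is hypothetical, and the 48-75 Hz window is an assumed example range, not a value taken from this thread (only the 75Hz maximum is mentioned elsewhere in the discussion).

```python
def check_against_freesync(fps: float, range_min_hz: float,
                           range_max_hz: float) -> str:
    """Say where a frame rate falls relative to a FreeSync range."""
    if range_min_hz > range_max_hz:
        raise ValueError("range_min_hz must not exceed range_max_hz")
    if fps > range_max_hz:
        return "above range"
    if fps < range_min_hz:
        return "below range"
    return "within range"


# Assumed example window for a 75 Hz monitor (lower bound is a guess).
RANGE_MIN_HZ, RANGE_MAX_HZ = 48.0, 75.0

for fps in (300.0, 70.0, 30.0):
    print(f"{fps:.0f} FPS: "
          f"{check_against_freesync(fps, RANGE_MIN_HZ, RANGE_MAX_HZ)}")
```

A frame rate reported as above range is the case where tearing can appear, which is why an uncapped 300 FPS burst is not desirable on such a monitor.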

RE: But what I have noticed is that my FRTC barely works when Chill is disabled.

That depends on how you set your FRTC, and what you have been doing and using to set your Chill_Min and Chill_Max values.

That one is a more complex discussion, but if you are using the Chill_Max slider in the Radeon Performance Overlay, it can change the value of FRTC.

The Chill_Max slider is not in fact a frame rate target on its own. It is a slider used to scale max FPS performance with keyboard-only input, depending on the value of Chill_Min.

Thanks.

Hi colesdav

Yes maybe we should start a new thread for this topic. I cannot promise much input other than taking a few screenshots since we have limited Internet bandwidth.

I feel quite familiar with Chill at this point, and my understanding is that it currently works in most DX9, 10, 11 and Vulkan games, but unfortunately not in OpenGL. Furthermore, my best experience with it is in multiplayer games, using it together with Enhanced Sync to allow the FPS to go way above the screen refresh rate through some form of triple-buffered VSync, which reduces keyboard and mouse input latency a lot.

I think the main idea of it is to give exceptional input response with reduced power usage when you are, for example, "camping" around a corner in Counter-Strike. In CS:GO I use the default preset of Chill Min = 70 and Chill Max = 300, since this gives the best possible input response. In contrast, it doesn't work well in games with vehicles such as Battlefield 4 (it gives motion sickness), but setting Chill Min = Chill Max = screen refresh rate (75Hz in my case) with Enhanced Sync enabled gives me a great experience in such games, since it still reduces input latency and saves power.

I have not tested setting only FRTC with Power Efficiency enabled as an alternative, to see if it does the same as setting Chill Min = Chill Max, but I suspect it will have more input latency.

I just tested Half-Life 2 with Chill Min = Chill Max = 75FPS with Enhanced Sync and FreeSync enabled, and the experience is 90% smooth with GPU power usage at about 60W or less (where my card has a TDP of 125W).

FreeSync seems to work best with only FRTC set, as compared to setting Chill Min = Chill Max = 30FPS, in a game like theHunter: Call of the Wild, but it has more input lag than Chill.

Kind regards

Jacques

Actually it only uses about 30W, but it has some issues where it sometimes goes way above Chill Max; for instance, looking up at the sky would sometimes cause the FPS to go to 300 instead of staying locked at 75FPS.

This might be due to the driver not being able to downclock the GPU quickly enough to maintain Chill Max in lighter games.

One thing that really bothers me is that the drivers for the RX 480 have never, since launch, reached a point of solid quality.

To me these are issues that really need to be fixed, since I spent a lot of money to upgrade from an R9 280 3GB to this expensive RX 480 (4500 ZAR). This was supposed to be a card that would give me at least 5 years of solid gaming performance, but instead it just dragged old issues along with it, as if it were just some prototype card to them.

I think overall the RX480 was a great GPU and a great improvement versus previous AMD generations, and the launch price was great.

However I think AMD installer problems and Driver Stability (possibly caused by installation issues) are still a problem.

Having said that Adrenalin 2019 19.6.1 seems pretty stable so far versus initial Adrenalin 2019 releases.

Hi colesdav

I still think this RX 480 is a great card, and is theoretically able to do great gaming for years to come. I would give this card an 8/10, but its drivers a 6/10.

The reason for this is that, on average, our R9 280X experiences fewer GPU utilization issues than this card of mine. For example, Unreal Tournament 3 has a much higher minimum frame-rate with our R9 280X than it does with my RX 480 when swapping them out in the same system.

This is also what I meant when I mentioned it feels like a prototype to me. At launch it had perfect performance in games like Doom 4 and Wolfenstein II: The New Colossus, but recently I have started experiencing some performance issues in those games as well with new drivers, where the frame-rate would drop to 50FPS, and looking up to the sky and down again would reset the drop and allow it to reach 70FPS+ again.

Regards

Jacques

Hi Jacques,

I really appreciate both of you for your efforts.

I already informed the driver team about this thread as well as some of your other threads related to the performance drop issue (e.g. Wolfenstein The Old Blood, RX 480 low performance, Standard Elder Scrolls of Skyrim low GPU usage and low FPS issues on RX 480). Today, I've notified them again. As soon as I get any update from them, I'll get back to you.

Thanks.

Thanks,

Maybe you should have asked people in the AMD Community to look at performance of newer game titles that are likely to be benchmarked in RX5700/XT reviews?

Possibly too late now but you never know you might get some useful help.

People running RX480/580/590's and RX Vega 56/64's and even the few who run Radeon VII's might be able to give lots of feedback.

Look at recent Radeon VII reviews from people like Hardware Unboxed, for example. Take their most important game selection, add a few more newer titles.

Perhaps you have already done that with the Vanguard Program if it is still running? However many will not want to join that.

Maybe you could try that next time you do a new GPU release.

Bye.

In addition, colesdav, this happens even when only using RivaTuner Statistics Server for monitoring utilization, or even when disabling all overlays and only playing.

But I would like to know which FPS monitor you were using in addition to Radeon Overlay? I only know Radeon Overlay, FRAPS, MSI Afterburner and, I think, Hardware Monitor.

Kind regards

Jacques

Hi,

The performance overlay I was using is ASUS GPU TWEAK II.

I do not normally run 3rd Party Overclocking Tools because they can cause problems with Wattman and performance of AMD GPU in some games.

But I do use ASUS GPU Tweak II just for Gaming Booster and OSD features on AMD GPUs.

You can download it here for free:

https://rog.asus.com/downloads/

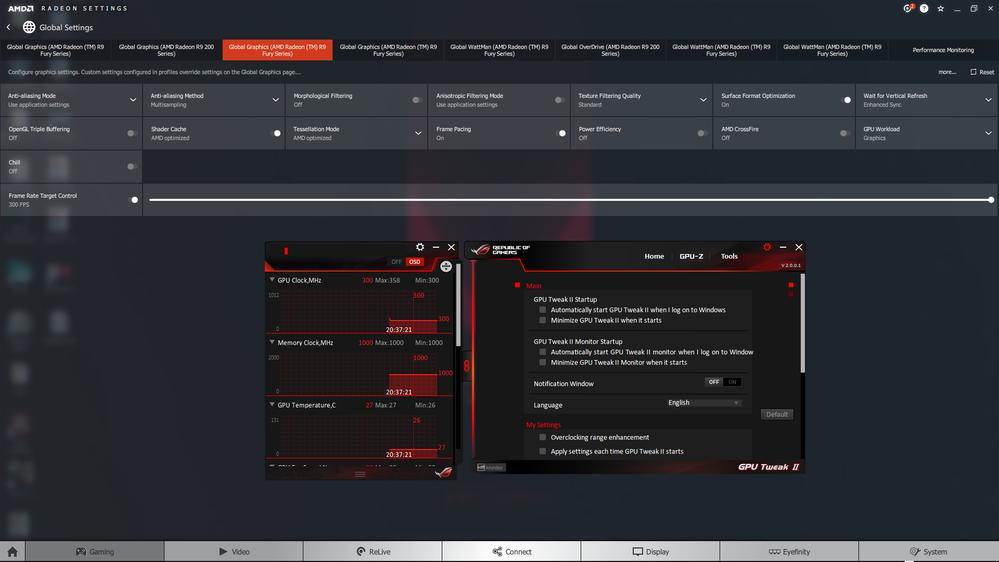

If you do install it to use with AMD Settings Wattman, I advise you to set the following options in GPU Tweak II:

Make sure all of those boxes are unchecked, and only start the application to run the Gaming Booster and use the OSD.

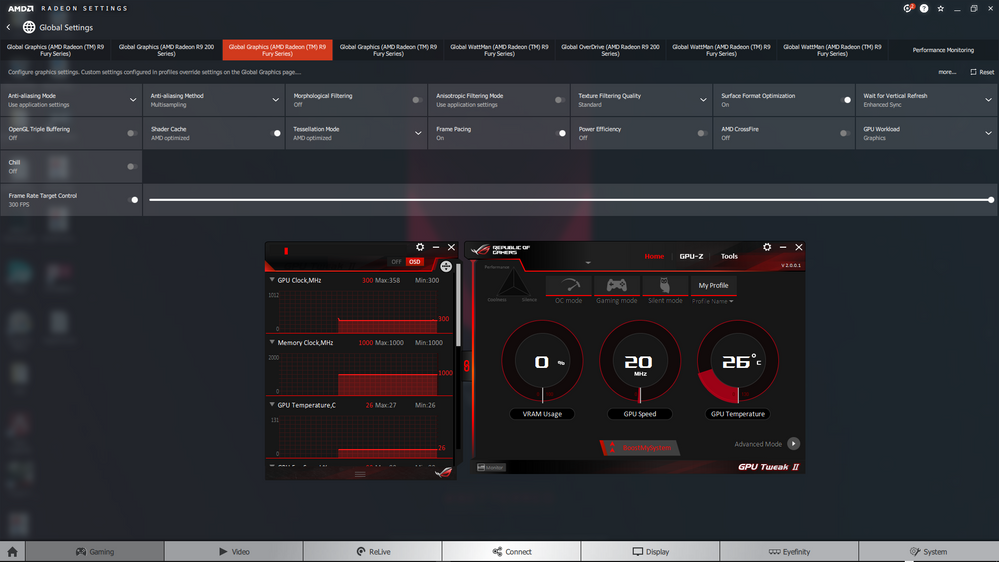

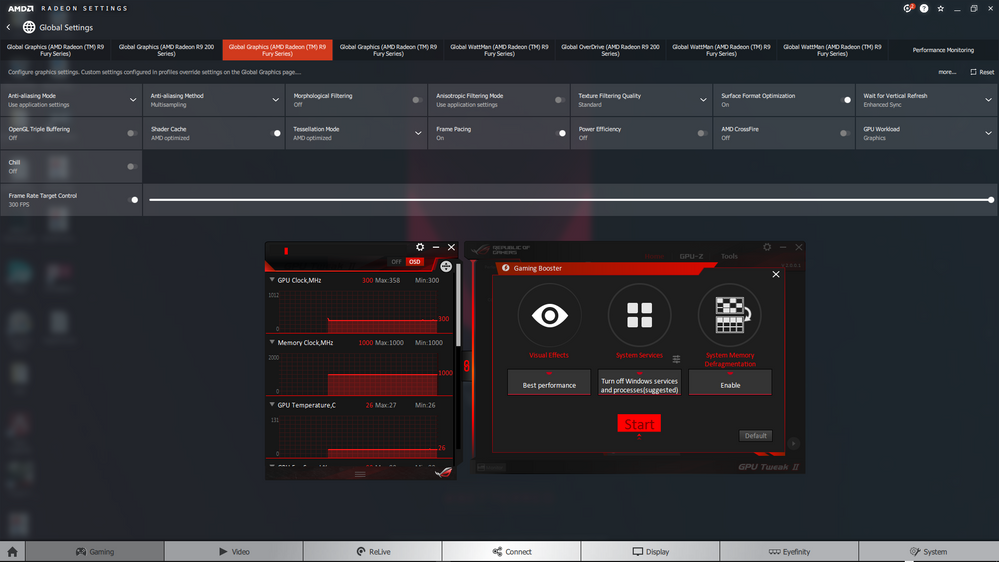

The Gaming Booster is here:

These settings are useful, but note if you run Windows 7 you might lose AERO Desktop style.

The OSD settings allow you to choose which metrics you want, on screen position etc, for each GPU on your system:

I also use the Auto Overclocking feature. It supports automatic overclocking and tuning on Nvidia RTX GPUs.

It can also report Ryzen 2700X CPU utilisation, something which, amazingly, AMD's own Radeon Performance Overlay cannot manage to do yet. The RPO only reports Intel CPU utilization as far as I can tell.

Bye.

Hi colesdav

I can confirm that my Radeon Overlay displays total CPU utilization for my FX 8350 as well as an i5 8400. Although, every now and then the Radeon Overlay fails to display the FPS field.

Kind regards

Jacques

It's a problem with Ryzen 2700X processor for sure.

RPO will not report CPU utilization. It is always zero.

The problem is that this isn't the only part of the game it affects, and therefore such driver issues affect the whole game's experience and render it unenjoyable to play.

I find very few games to be playable with Radeon cards, even though I really like the architecture and driver features of the cards. AMD's hardware and older CPUs aren't bad at all; it's just the utilization that's the issue. For example, my friend had far less bottlenecking with his GTX 1070 paired with his AMD A10-7850K quad core than my RX 480 has with my FX 8350.

Borderlands 2, which I recently purchased, also experiences frame-rate issues like this in 35% of the areas.

Hi,

Crysis 3 with Ryzen 2700X running RX Vega 64 Liquid versus Ryzen 2700X running RTX2080 will be uploaded soon.

Also GTX780Ti on i7-4770K on the way.

Bye.

Hey

Awesome colesdav! Thank you for your valuable benchmarking. Do you run a store or review these cards?

Kind regards

Jacques

Hi everyone,

dipak, dorisyan, I do not know if this will help, but I had an interesting find with Battlefield 3.

I decided to test this scenario with Battlefield 3, because Battlefield 3, 4 and Bad Company 2 perform exceptionally well in this system of mine.

What I did was limit my FX 8350 to 4 cores by only enabling cores 1&2 (module 0) and cores 3&4 (module 2) in my BIOS, assuming modules are named (0, 1, 2, 3). Afterwards, I set the in-game graphics to "low preset 1080p" with VSync disabled to force a CPU bottleneck to "choke" my RX 480. To my surprise, my GPU constantly stayed clocked at 1303MHz (the MSI RX 480 Gaming X default turbo clock) even when GPU utilization was only at 4-10%, whereas in Crysis 3 and Unreal Tournament 3 the GPU clocks fluctuate constantly in low GPU utilization scenarios, as can be seen in the previous screenshots.
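
The difference between a steady and a fluctuating GPU clock can be put into numbers from logged samples. This is a minimal sketch, assuming clock readings in MHz have been logged by a monitoring tool. The function names, the 25 MHz tolerance and both sample lists are invented for illustration and are not readings from these tests.

```python
from statistics import mean, pstdev


def clock_stats(samples_mhz: list[float]) -> dict[str, float]:
    """Summarize a series of GPU core clock samples (MHz)."""
    if not samples_mhz:
        raise ValueError("need at least one sample")
    return {
        "min": min(samples_mhz),
        "max": max(samples_mhz),
        "mean": mean(samples_mhz),
        "stdev": pstdev(samples_mhz),
    }


def clock_is_steady(samples_mhz: list[float],
                    tolerance_mhz: float = 25.0) -> bool:
    """True if the clock stayed within tolerance_mhz from min to max."""
    stats = clock_stats(samples_mhz)
    return stats["max"] - stats["min"] <= tolerance_mhz


# Invented samples: clock pinned at the 1303 MHz boost clock.
pinned = [1303, 1303, 1303, 1303, 1303, 1303]
# Invented samples: clock bouncing between power states.
bouncing = [1303, 610, 945, 1120, 300, 1303]

for name, samples in (("pinned", pinned), ("bouncing", bouncing)):
    stats = clock_stats(samples)
    print(f"{name}: min {stats['min']}, max {stats['max']}, "
          f"stdev {stats['stdev']:.0f} MHz, "
          f"steady: {clock_is_steady(samples)}")
```

Comparing logs this way makes it easier to show that two games with similarly low GPU utilization can still differ in how the clock behaves.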

Furthermore, I will post screenshots of these findings of Battlefield 3 here.

Kind regards

Jacques

Ryzen 2700X + RTX 2080OC easily maintains 60 FPS at 1080p.

Crysis 3 Poor Performance 2. Test run on Ryzen 2700X and RTX 2080 OC. - YouTube

The RTX2080OC is running much better than the RX Vega 64 Liquid on the same PC.

Ryzen 2700X + RX Vega 64 Liquid. Turbo Mode. Max Fans.

DDU + clean install of Adrenalin 2019 19.6.2.

Only one word for it. Lame. Only manages about 20 FPS at that window at the end of the corridor.

There are so many problems with AMD GPU in this video I will discuss in detail later.

colesdav thank you for the in-depth benchmarking!

This is exactly what happens to my RX 480, and I am relieved to see that it is a driver-related issue. But this resulted in me buying an i5-8400 almost a year ago, shortly after my FX 8350 (which was the newest AMD processor in our country), and that wasn't necessary since it never was a CPU bottleneck!!

Furthermore, as I have said, this results in a bad gaming experience and happens in almost 50% of popular games out there with Radeon cards.

Far Cry 2 & 3 have these issues in the home base as well.

Kind regards

Jacques