AMD Community > General Discussions

Re: Ryzen 7000 series !!!!!!?????

Ryzen 7000 series vs Raptor Lake Performance

When comparing the Ryzen 7000 series (Raphael) with 13th Gen Core (Raptor Lake) on performance and cost, the Ryzen 7000 series looks to be in a bad position. I don't know why; perhaps it comes down to motherboard BIOS and chipset issues, or compatibility issues with DDR5 RAM.


I do not get your point. The Ryzen 7000 series is better in every way than the Intel chip. You cannot blame AMD for motherboard manufacturers' errors.


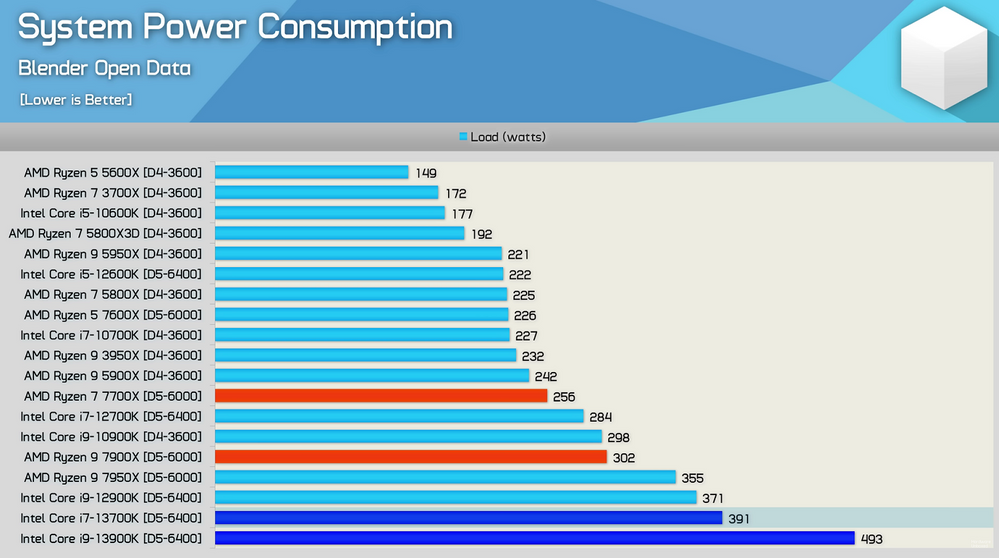

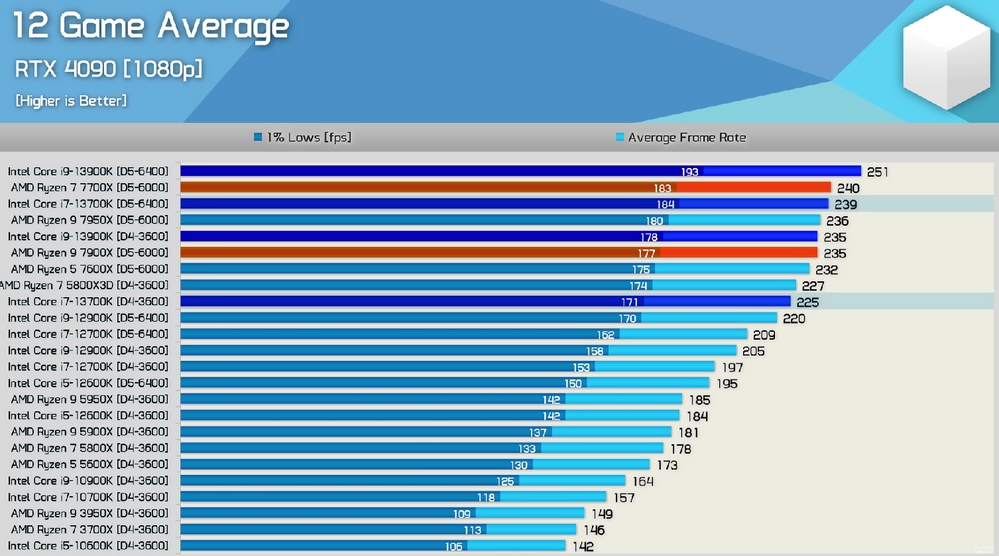

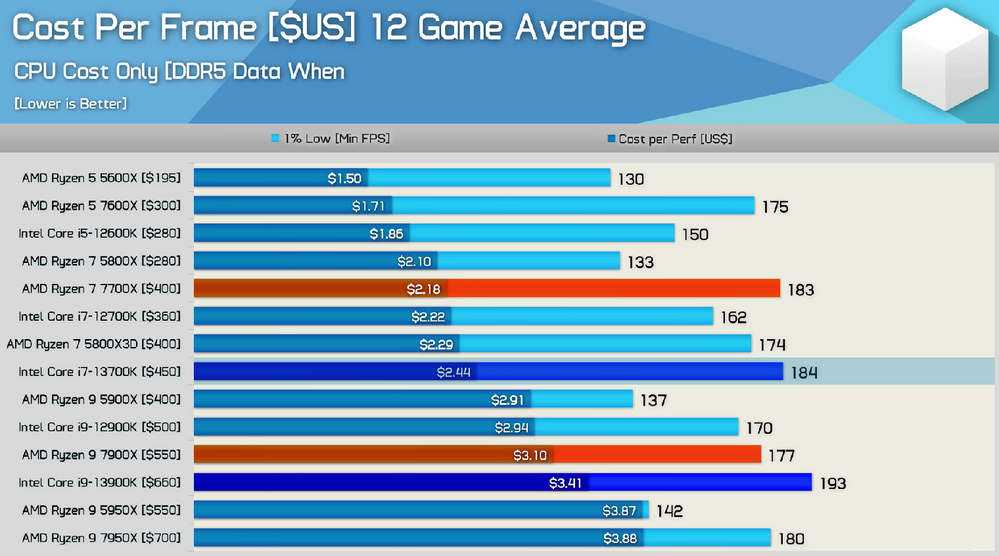

Better in every way at what? In games, Intel Raptor Lake delivers more FPS with lower power consumption, and it costs less than AMD Raphael ($590 vs. $700).

So I'm wondering: better at what?

And of course AMD should be blamed for not following up on RAM and chipset compatibility with its partners, as Intel does.


There's much more to it than games.

I would not be able to see the difference between 500 and 700 fps, and those frame rates are only reached with expensive high-end graphics cards. With mid-range graphics cards, the CPU barely matters, as long as it has a modern architecture.


I know there is much more, but gaming is a huge activity too and gets a lot of attention. And I would be able to see the difference between 55 and 100 fps, of course using the same graphics card.
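One way to make the 500 vs. 700 fps and 55 vs. 100 fps comparisons concrete is to convert frame rate into frame time (1000 / fps milliseconds). The short Python sketch below does only that arithmetic; the function name `frame_time_ms` is a hypothetical helper, and the fps pairs are the ones quoted in the two posts above, not measurements.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# fps pairs quoted in the thread (not benchmark data)
for low, high in [(500, 700), (55, 100)]:
    saved = frame_time_ms(low) - frame_time_ms(high)
    print(
        f"{low} -> {high} fps: "
        f"{frame_time_ms(low):.2f} ms -> {frame_time_ms(high):.2f} ms per frame "
        f"({saved:.2f} ms saved per frame)"
    )
```

Going from 500 to 700 fps shaves well under a millisecond off each frame, while going from 55 to 100 fps saves several milliseconds, which is consistent with both posters' points about what is and is not visible.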


On power consumption, I'm sorry, but you missed the target by a mile or two.

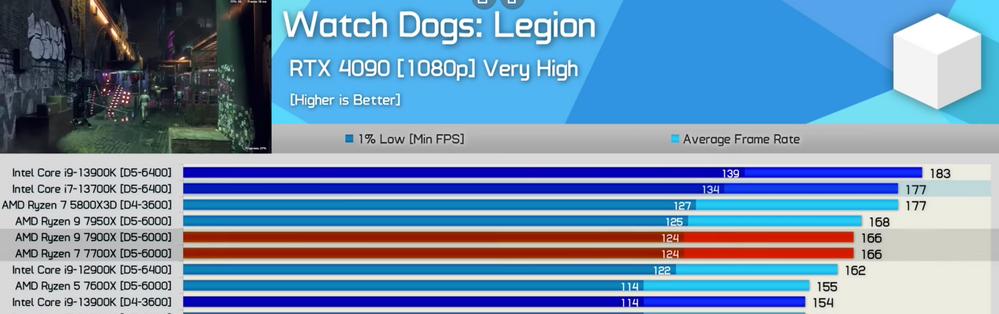

On game performance, something doesn't add up: the 7700X appears above the 7950X, in second place just 11 fps behind the 13900K on average.

As for cost per frame, the image speaks for itself. AMD is not bad in the mid segment, but it may need to lower prices a bit, especially on the flagship. YouTubers are crowning the Intel chip king of gaming for the price.
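Cost per frame is usually just the CPU price divided by its average fps, so a lower number means more frames for the money. The sketch below assumes that definition; `cost_per_frame` and the `cpu_a`/`cpu_b` entries are hypothetical, and the prices and fps values are placeholders, not numbers read from the chart in this post.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid per frame of average fps (lower is better)."""
    if avg_fps <= 0:
        raise ValueError("avg_fps must be positive")
    return price_usd / avg_fps


# Hypothetical placeholder figures: (price in USD, average fps).
# Replace them with real benchmark results before drawing conclusions.
cpus = {
    "cpu_a": (700.0, 200.0),
    "cpu_b": (590.0, 210.0),
}

for name, (price, fps) in cpus.items():
    print(f"{name}: ${cost_per_frame(price, fps):.2f} per frame")
```

Because the metric ignores platform cost (motherboard, RAM) and power draw, it only captures part of the value comparison being argued in this thread.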

I'm not going to post productivity charts; Ryzen is dealing the cards there. The E-cores can help with background tasks, but they are not meant for heavy workloads. That said, Intel has closed the gap on productivity.

But let me tell you something. I'm no fortune teller, but AMD has a suspicious POKER FACE right now. At least, that's what this chart tells me.

A 5800X3D on par with a 13700K? C'mon!!!

We are all waiting for the 7950X3D.


I am not sure these 300-watt power suckers are better. Performance per watt is not important to everyone, but it certainly shows Intel's deck of cards getting thin.


Performance per watt is literally energy efficiency, and it is the key to every improvement in the field right now. Power costs are currently rising by up to 450%, so anyone using an Intel chip ends up paying as much for the electricity to run it as they paid for the chip itself.

Let me ask you one thing: would you buy a chip that costs $2,000 if another chip did the same job for $400? I guess not.
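Whether electricity really catches up with the purchase price depends on average power draw, hours of use, and the local price per kWh. The sketch below is a back-of-the-envelope calculation under stated assumptions: it treats the draw as constant (real CPUs draw far less when idle or lightly loaded), and the helper names plus the 4 hours/day and $0.40/kWh inputs are hypothetical placeholders. Only the 300 W figure and the $590 price come from earlier posts.

```python
def annual_energy_cost(avg_watts: float, hours_per_day: float, price_per_kwh: float) -> float:
    """Yearly electricity cost, assuming a constant average draw."""
    kwh_per_year = avg_watts / 1000.0 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


def years_to_match_chip_price(chip_price: float, avg_watts: float,
                              hours_per_day: float, price_per_kwh: float) -> float:
    """Years until cumulative electricity cost equals the chip's price."""
    return chip_price / annual_energy_cost(avg_watts, hours_per_day, price_per_kwh)


def perf_per_watt(score: float, avg_watts: float) -> float:
    """Benchmark score (or fps) delivered per watt of power drawn."""
    return score / avg_watts


# Hypothetical usage: 300 W (from the thread), 4 h/day, $0.40/kWh (placeholders)
cost = annual_energy_cost(300, 4, 0.40)
years = years_to_match_chip_price(590, 300, 4, 0.40)
print(f"~${cost:.2f} per year; ~{years:.1f} years to match a $590 chip")
```

Plugging in your own usage pattern and tariff is what settles the argument either way; the result scales linearly with each input.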


I am not sure the question was directed at me, but I would say product prices fluctuate over time, while product power usage does not.