How do you get the WX 5100 to use 10-bit?

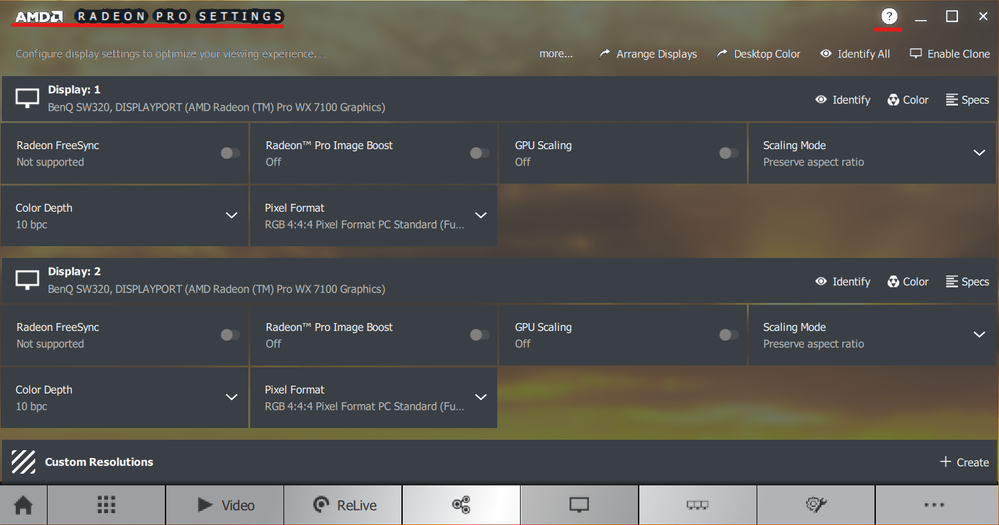

The WX 5100 supports 10-bit per channel color (30-bit color), and I have two BenQ SW320 monitors that support 10-bit color depth as well. 10-bit depth is one reason I got the WX 5100, as I do photography work.

When I installed the v17.12.2 driver, I right-clicked on the desktop, opened "AMD Radeon Pro and AMD FirePro Settings", clicked on the Display icon, and saw a Color Depth setting that was set to 8 bpc. There were three options: 6, 8 and 10 bpc. I selected 10 bpc; the monitors flickered a bit and then stabilized (as usual), but the setting immediately went back to 8 bpc. I don't know why.

I then selected "AMD Radeon Pro and AMD FirePro Advanced Settings" from the desktop context menu, which brought up an old-style Catalyst dialog box. One panel has a checkbox at the bottom called "Enable 10-bit pixel format support". I checked it, was told I needed to reboot, and did so. After the reboot I went back into both settings panels: the first driver panel still showed Color Depth at 8 bpc, while the Advanced Settings still showed the checkbox checked. I then tried to set the Color Depth to 10 bpc again, thinking that maybe I had to enable 10-bit in the Advanced panel before changing the Color Depth. The change would not stick; Color Depth always reverts to 8 bpc. In summary, the current settings are:

AMD Radeon Pro and AMD FirePro Settings:

AMD Freesync = Not Supported

Virtual Super Resolution = Off

GPU Scaling = Off

Scaling Mode = Preserve aspect ratio

Color Depth = 8 bpc

Pixel Format = RGB 4:4:4 Pixel Format PC Standard (Full RGB)

(Note: these were the default settings)

AMD Radeon Pro and AMD FirePro Advanced Settings:

10-bit pixel format support = Enabled

(all other settings at default values)

I am confused. Just wondering if anyone has experience with this and knows which one is accurate? How do I use the 10-bit capability of the card?

TIA,

David

PS: My o/s installation is a fresh install of Windows 10 Pro x64 with the Fall Creators update.

PPS: I have a ticket in with AMD, and they recommended using a third-party utility (DDU) to uninstall the current driver and then installing the v18 driver (the latest release). This seemed questionable to me -- I'm not sure I would trust a third-party uninstall utility over the manufacturer's integrated uninstall process.

I believe this issue may have been resolved! As posted by fsadough in this post, the 19.Q2 driver released on May 8, is working just as described with 10 bpc enabled at 4K@60Hz in RGB 4:4:4 Pixel Format mode! Fantastic! I am so thrilled! Thanks to fsadough and the development team at AMD for bringing this fix to us!

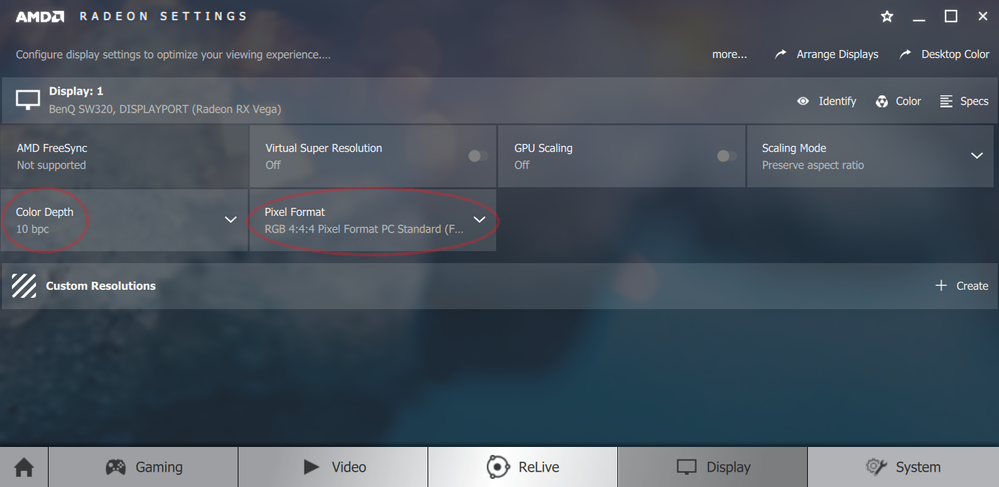

The only oddity I noticed is in the driver settings panel (underlined in red in the screenshot). It appears to have no functional impact so far, which is great. If I recall correctly, I've seen the same artifact in previous Radeon Pro drivers when the 10-bit Pixel Format advanced setting is enabled, though I thought it had been fixed. (The reason I've enabled the setting is that Photoshop requires it in order to use the 10 bpc functionality.)

Now the last step can FINALLY be completed: calibrating my monitors using this new driver! Calibration worked on older drivers (I believe 18.Q1) but failed on 19.Q1.1, though I'm not sure why. BenQ's calibration software seems a bit sensitive, especially with dual-monitor setups. Here's hoping!

Thanks fsadough!

David

I don't have that card, but my monitor only does 10-bit through DisplayPort. Could it be the port you are using?

Thanks pokester. I appreciate your response and suggestion!

I have the WX 5100, which only has DisplayPort connections and supports version 1.4 of the standard. The cables I have are also DisplayPort, but I guess that's obvious :-). And I'm running Windows 10 Pro x64, which supports a 10 bpc (30-bit) workflow.

Are you using 10 bpc mode? Which graphics card do you have?

Would you mind sharing what your settings are in the driver? From what I can see, there are two interfaces to the driver. When I right click on my desktop, 2 options appear at the top of the context menu 1) AMD Radeon Pro and AMD FirePro Settings and 2) AMD Radeon Pro and AMD FirePro Advanced Settings. I'm curious what your settings are in the Settings for Color Depth, Pixel Format, Virtual Super Resolution and AMD FreeSync. And in the Advanced Settings I'm curious whether you have the Enable 10-bit pixel format support option checked.

TIA,

David

I would also wonder what resolution you are using. I am using 4K resolution (3840x2160) on two monitors in extended desktop mode.

Thanks again!

David

1440p. I wonder if it is the dual monitors throwing things off. Maybe disconnect one to test whether things change. Make sure to reboot too, so the change takes. I don't know if this will change anything; it's just the best troubleshooting idea I have to at least figure out if it even works.

If you have quality cables they should not be an issue. Are they the ones that came with your monitor? If not you might pick another one up retail that you can test with if the other troubleshooting you do doesn't pan out.

Is each monitor connected to the card with its own DisplayPort connection, or is it one connection to one monitor with the next monitor daisy-chained off that?

I am not on a pro card. I am on an RX 580. It does support 10 bpc. I am running DisplayPort to a 1440p IPS monitor. When I get wide-gamut color images I can tell the difference, so it is working. I primarily do Photoshop / Illustrator work with mine.

I have the same issue now. Did you fix it?

I did fix it some time ago. I found that somehow during the installation of the driver, the monitor frequency settings for both monitors got set to 59Hz. Once I changed that setting to 60Hz, I was able to enable 10-bit color in the driver (as long as the 10 bit setting in the Advanced Settings dialog box was enabled as well).

Then I noticed a crippling issue with CorelDraw 2017 performance (for example extremely slow creation of new documents, copy and paste, file save, file print, etc). But when the Windows 10 April update automatically installed, it removed my AMD driver (without telling me) and replaced it with a much older one (2016 vintage) and the CorelDraw issue went away; but then so did my ability to use/control 10-bit color.

After reading about much finger pointing between AMD, Microsoft and Corel, I decided to wait on one of them to fix the issue. AMD has now produced an Adrenalin driver that fixes the performance issue with CorelDraw, but doesn't allow me to enable 10-bit color again! Arggg. I checked my monitor refresh rates and they are at 60Hz, so that may not be the real solution I thought it was when I made the change from 59Hz to 60Hz before.

I've had it with this WX5100 video card and the poor-quality drivers. In January I bought two BenQ 10-bit capable monitors on the promise of the WX5100 being able to produce 10-bit per color resolution, and 7 months later the drivers still don't provide that capability. AMD support hasn't helped, either. Their knowledge of their product is lacking, and their approach is trial and error: "try this driver version, try that driver version"... and by the way, don't use the built-in AMD uninstall capability to uninstall the AMD driver; instead, download a third-party utility (DDU) to uninstall it. Apparently not even AMD trusts their software.

Nvidia seems to have figured things out and everywhere I read, they have a better reputation for graphics card features that work seamlessly with the O/S and applications. I think I'll look there going forward.

One other fact. At one point when working with AMD support, I asked if there was a manual for the Radeon Pro WX5100 that explained the driver settings, so that I could read it and be sure that I didn't have any incompatible settings. There are so many settings in the driver, and there's an Advanced Settings driver as well. I never got a manual, so apparently there is no manual that explains the settings and how they work together. Some settings may be obvious enough that no manual is required, but others are not. For example, there are 2 places in the drivers where 10-bit color is referenced: in the Settings driver and in the Advanced Settings driver. However, in the Advanced Settings driver, the 10-bit setting has a caption saying to use it for medical devices that need higher color resolution, which makes it seem as if the setting is not necessarily related to the 10-bit setting in the Settings driver. Is there a dependency or not? The drivers don't say, and there is no manual to check.

This is not acceptable. My cheap WasabiMango panel displays as being 10 bit, but as soon as the SW320 is attached I also get 8 bit. I've tried this with an HD series card and a Vega 64, and before finding this thread I ordered a Vega Frontier to test a pro series card, which will most likely turn out to be a very costly mistake. I have contacted BenQ and am waiting for any info regarding this issue.

Edit: using DP port on both HD and Vega cards with a certified DP 1.4 Club3D cable.

Make sure you load the drivers for your monitors; by default Windows loads a Generic PnP driver. Windows also likes to remove the driver periodically and put the Generic PnP driver back in. So use the driver from your monitor's maker, and when you see issues, make sure it is still loaded. Windows 10 is really bad about messing this up.

Driver installed, and I have suspended Windows Update for a bit... still no joy. I received an email from BenQ this afternoon stating they were looking into the issue. At the moment I can get 10 bit if I switch to 4:2:2 instead of 4:4:4. Not really a workaround...

Good luck with that; I feel your frustration. I am a gamer myself, and a graphic artist / IT guy at work. I try not to buy too many computers at home (I say that having 6 desktops, 5 running laptops and 1 iMac in the house for 3 people), but only a couple are recent and the old ones migrate to other areas of the house. My primary office computer at home has a 32-inch IPS monitor with 10-bit color. It does okay with color for Photoshop and is good for gaming. When Windows 1803 came out, it killed my 10-bit color. Luckily Philips had a new monitor driver out about a month later that fixed this for me. The problem since then has been that Windows updates often remove the driver and put the Generic PnP driver back in. It hadn't happened in a few months and I thought maybe that was finally behind me, but last week the cumulative driver update that fixed 3 of my PC issues with getting the Adrenalin 2019 drivers to load once again removed the monitor driver on the one PC where the AMD driver was already working fine.

Windows has been updating and changing its graphics subsystem like crazy the last few years. I don't think MS is doing a good job of communicating these changes to GPU and monitor makers so they can stay ahead of issues. Consumers don't know where to turn and get frustrated with AMD and maybe a monitor maker. Many of the people here don't even realize that many issues are actually monitor related and not caused by the GPU drivers. It's smart that you are addressing this with BenQ at the same time. Also, a lot of newer monitors have updateable firmware in addition to drivers; did you check whether yours has a firmware update by chance? I would guess not, as BenQ likely would have mentioned it, but it's worth asking just the same.

Long story short, I sure get the importance of 10-bit color. Unless you have really needed it, you probably wouldn't realize how critical it is, but I sure empathize and hope that wherever this fault lies, you get it fixed.

I should mention that while there are a lot of knowledgeable people here, this is primarily a user-to-user forum and actual AMD support people rarely help here. It's pretty hit and miss, as there are only a couple of official moderators and the others are basically just users not doing it in a professional capacity.

I know you said you had worked with AMD support before. There is a guy who helps here in the forums from time to time, and he is always pretty nice. I think he is pretty high up on the Pro Graphics side, and I know he has helped a lot of people here in the past. I will mention him so he is notified of this thread; if he does not respond, you might message him with your system specs and a description of what you have done and what is going on. fsadough

On the CorelDraw issue: the slowness is acknowledged by Corel and is a widespread issue we have seen many complaints about. You could search for CorelDraw in this forum and likely find the posts. Again, this is one of those changes with everyone moving to support the ever-changing new standards of the graphics subsystem in Windows. The biggest nightmares are yet to come, as they are just beginning the switch to their "Universal Driver" requirements. On the Corel issue, though, from what I have read I believe it is fixed if you move to their latest version. If they have a demo/trial, you might try it. Or if your version isn't patched to the latest update, you might want to make sure to do that. I used to stay current on Corel myself but kind of abandoned it about 8 years ago. I like the program, but virtually nobody uses it in the printing world I am in, and the few that do these days supply PDFs, so you just don't need the native apps for those rarely used formats anymore. Adobe Illustrator is really top dog for us for vector art.

Good Luck!

Thanks for the info. I still have the issue on Windows 7 so I am wondering if it is indeed a firmware issue re the BenQ...

I was able to achieve the same result. The only way I could enable 10-bit color was by switching to 4:2:2 color mode, which is inferior to 4:4:4 (full color), as the former mode throws away some of the color information. The missing info is often hard to detect in full color images, but very easy to spot with text or with thin lines (as in vector artwork). As you state, it is not an effective work-around. One other thing I've found odd with the AMD driver is that when 10-bit is enabled in the AMD Radeon Advanced settings, even when 10 bpc is NOT enabled in AMD Radeon Settings, some applications display odd color artifacts on the monitor. Most notable is Adobe Photoshop CC -- the user interface would display in very loud colors (shocking pink, lime green) instead of the usual dark gray. In addition, sometimes it does some funky color inversions so that seeing the marquee for selections is very difficult. And then suddenly I would do something in the app, and the interface would refresh and correctly display with the dark gray background. That wouldn't last long though. So Photoshop was useless under 10-bit mode. I’ve always thought that was an indication of issues in the AMD driver -- I don't think it's ready for primetime, even though AMD has moved on to the next generation of graphics hardware. I hope someone proves me wrong. But it may be an issue in the monitor driver. I didn’t think to contact BenQ.
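To illustrate why 4:2:2 is easy to spot on text and thin lines, here is a minimal sketch of horizontal chroma subsampling. It assumes simple averaging over each pixel pair (real display hardware may filter or co-site the chroma samples differently), and the YCbCr triples for white and red are approximate, illustrative values. The name `subsample_422` is hypothetical and has nothing to do with the AMD driver.

```python
# Approximate 8-bit full-range YCbCr triples (illustrative values only).
WHITE = (255, 128, 128)
RED = (76, 85, 255)

def subsample_422(row):
    """Simulate 4:2:2: every pixel keeps its own luma (Y), but each
    horizontal pixel pair shares one averaged chroma pair (Cb, Cr)."""
    out = []
    for i in range(0, len(row) - 1, 2):
        (y0, cb0, cr0), (y1, cb1, cr1) = row[i], row[i + 1]
        cb = (cb0 + cb1) // 2
        cr = (cr0 + cr1) // 2
        out.extend([(y0, cb, cr), (y1, cb, cr)])
    if len(row) % 2:  # an odd trailing pixel has no partner to share with
        out.append(row[-1])
    return out

# A one-pixel-wide red line on a white background.
row = [WHITE, RED, WHITE, WHITE]
result = subsample_422(row)
for before, after in zip(row, result):
    print(before, "->", after)
```

In this sketch the white pixel next to the line picks up a tint and the red pixel loses saturation, because the two now share the same chroma. Large smooth areas, such as most of a photograph, change very little.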

If you get a solution to this problem, whether it's from BenQ, AMD, or Microsoft, please post it; I'm very interested in the results.

Also, I think I read somewhere above about slowness in CorelDraw. Corel implemented a work-around for that issue (I’ve tested them) with updates to at least 2 versions of the application (2017 and 2018), and Microsoft's April 2018 Creators update appears to have fixed the issue at the O/S level because my X8 version no longer has the issue, though Corel didn't release an update for X8. I cannot speak to Windows 7 unfortunately.

I forgot to mention that AMD posted drivers that address the CorelDraw performance issues as well, at least on Windows 10. They posted the same drivers for Windows 7, but I cannot attest to their effectiveness since I no longer run Windows 7. Read through the Release Notes for the drivers released last Summer or Fall -- the issue is called out, with the CorelDraw performance improvement specifically mentioned.

Just realized I made a mistake in my comment above. I DID find another way to achieve 10-bit color. It was by reducing the monitor refresh rate from 60Hz down to 30Hz. At the lower refresh rate, both BenQ monitors will go into 10 bpc mode. The problem with that is 30Hz is so low that I can see the mouse pointer jump from one location to the next as I move it across the screen rather than the continuous motion I experience at 60Hz. It's also very laggy making the computer feel very slow and making accurate cursor placement difficult. YMMV.
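As a rough sanity check on whether raw link bandwidth could explain this behaviour, the sketch below compares the data rate each mode needs against the payload capacity of a DisplayPort link. Several things here are assumptions rather than facts from this thread: a full 4-lane link, 8b/10b coding as the only overhead considered, and a 30 Hz mode that simply halves the pixel clock. The 533.25 MHz pixel clock is taken from the first detailed timing descriptor (bytes `4D D0`) of the SW320 EDID dump posted later in this thread.

```python
PIXEL_CLOCK_HZ = 533.25e6  # 3840x2160 at about 60 Hz, from the SW320 EDID
LANES = 4                  # assumption: all four DisplayPort lanes are in use

# Raw per-lane rates in Gbit/s; 8b/10b coding leaves 80% for payload.
LINK_RATES = {"HBR": 2.7, "HBR2": 5.4, "HBR3": 8.1}

def payload_gbps(rate_per_lane):
    """Usable payload of the whole link in Gbit/s."""
    return rate_per_lane * LANES * 0.8

def required_gbps(bits_per_pixel, pixel_clock_hz=PIXEL_CLOCK_HZ):
    """Data rate needed for a mode, blanking included via the pixel clock."""
    return bits_per_pixel * pixel_clock_hz / 1e9

modes = {
    "8 bpc RGB 4:4:4 @ 60 Hz": required_gbps(24),
    "10 bpc RGB 4:4:4 @ 60 Hz": required_gbps(30),
    "10 bpc YCbCr 4:2:2 @ 60 Hz": required_gbps(20),
    # Assumption: the 30 Hz mode uses half the 60 Hz pixel clock.
    "10 bpc RGB 4:4:4 @ 30 Hz": required_gbps(30, PIXEL_CLOCK_HZ / 2),
}

for name, need in modes.items():
    fits = [r for r, rate in LINK_RATES.items() if payload_gbps(rate) >= need]
    print(f"{name}: {need:.2f} Gbit/s, fits on {', '.join(fits) or 'none'}")
```

Under these assumptions, 10 bpc RGB 4:4:4 at 60 Hz needs about 16.0 Gbit/s, which is below the roughly 17.3 Gbit/s payload of a 4-lane HBR2 link. That would suggest raw bandwidth alone is not an obvious reason for the fallback to 8 bpc, although it does not show where the fault actually lies.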

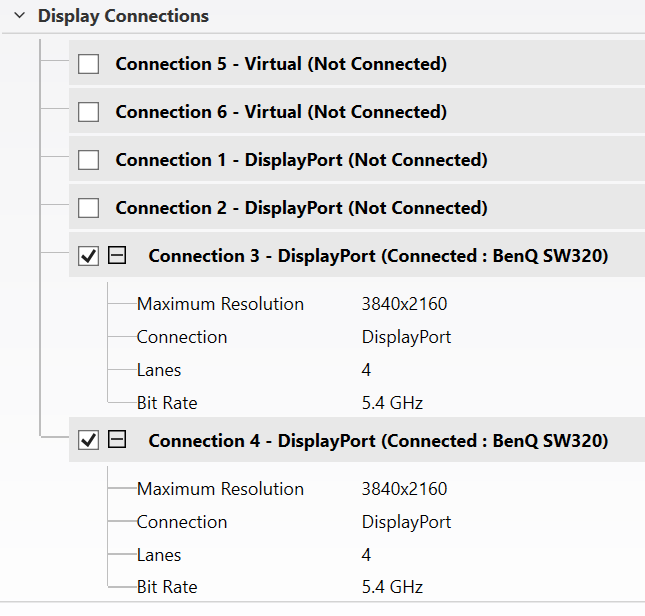

- How are you connecting, HDMI using adapters or direct DP cable?

- What is the resolution?

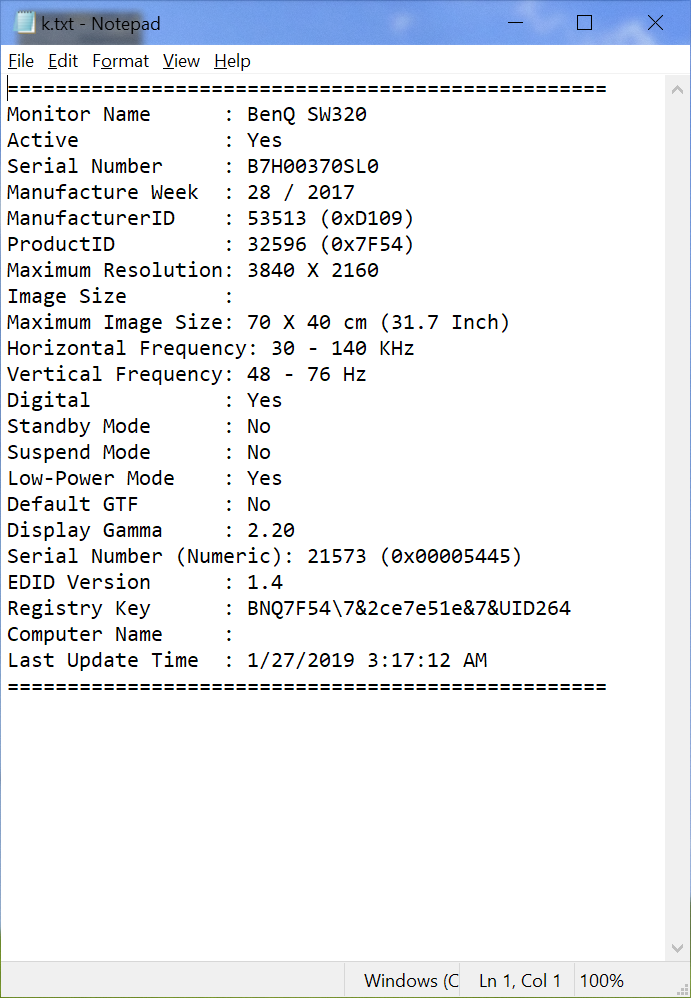

- Can you send the EDID file of the monitors?

Hi fsadough.

Both monitors are connected directly with DisplayPort cables rated for 4K resolution (3840 x 2160), which is the resolution that I am using.

I’m not sure how to get the EDID file or what its filename is (EDID?). I’ll look for the files and attach them. Any guidance you have on where to find them is appreciated.

Thanks

David

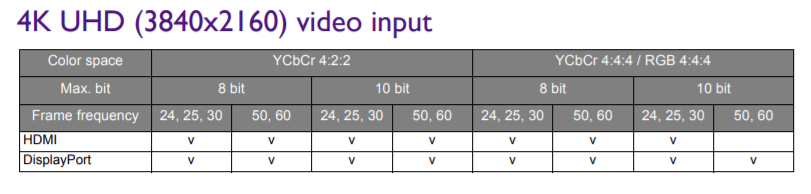

10bpc color depth is supported according to the spec (see below). In AMD Radeon Pro and AMD FirePro Advanced Settings, under EDID emulation, you should be able to save the EDID file. Or use third-party software like MonitorInfoView - View Monitor Information (EDID).

It took a bit to find it, but I found the button to save the EDID information from the AMD Advanced Settings driver. It saves the information by connected DisplayPorts, so there are 2 text files for the two monitors connected to ports 3 and 4 respectively. As the information is in hex format, I don't know what it says, but I figure you already knew what format it would be in. Hopefully this helps. Thanks.

DisplayPort3 EDID Info (BenQ SW320):

00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00

2A 1B 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26

0F 50 54 A5 6B 80 D1 C0 81 C0 81 00 81 80 A9 C0

B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20

35 00 BA 89 21 00 00 1A 00 00 00 FF 00 45 41 48

30 34 32 34 32 53 4C 30 0A 20 00 00 00 FD 00 30

4C 1E 8C 3C 00 0A 20 20 20 20 20 20 00 00 00 FC

00 42 65 6E 51 20 53 57 33 32 30 0A 20 20 01 F3

02 03 2B F1 56 61 60 5D 5E 5F 10 05 04 03 02 07

06 0F 1F 20 21 22 14 13 12 16 01 23 09 7F 07 83

01 00 00 E3 06 07 01 E3 05 C0 00 02 3A 80 18 71

38 2D 40 58 2C 45 00 BA 89 21 00 00 1E 56 5E 00

A0 A0 A0 29 50 30 20 35 00 BA 89 21 00 00 1A 04

74 00 30 F2 70 5A 80 B0 58 8A 00 BA 89 21 00 00

1A 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 9A

DisplayPort4 EDID Info (BenQ SW320):

00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00

25 1B 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26

0F 50 54 A5 6B 80 D1 C0 81 C0 81 00 81 80 A9 C0

B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20

35 00 BA 89 21 00 00 1A 00 00 00 FF 00 4D 39 48

30 31 37 38 39 53 4C 30 0A 20 00 00 00 FD 00 30

4C 1E 8C 3C 00 0A 20 20 20 20 20 20 00 00 00 FC

00 42 65 6E 51 20 53 57 33 32 30 0A 20 20 01 EB

02 03 2B F1 56 61 60 5D 5E 5F 10 05 04 03 02 07

06 0F 1F 20 21 22 14 13 12 16 01 23 09 7F 07 83

01 00 00 E3 06 07 01 E3 05 C0 00 02 3A 80 18 71

38 2D 40 58 2C 45 00 BA 89 21 00 00 1E 56 5E 00

A0 A0 A0 29 50 30 20 35 00 BA 89 21 00 00 1A 04

74 00 30 F2 70 5A 80 B0 58 8A 00 BA 89 21 00 00

1A 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 9A
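For readers wondering what the hex says, here is a minimal sketch that decodes a few fields from the first 80 bytes of the DisplayPort3 dump above. The byte offsets and bit layouts follow the EDID 1.4 base block as I understand it; the function name `decode` and the lookup tables are illustrative, and the bit-depth and interface fields are only defined this way for digital inputs in EDID 1.4.

```python
# First five lines (80 bytes) of the DisplayPort3 dump above.
EDID_HEX = """
00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00
2A 1B 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26
0F 50 54 A5 6B 80 D1 C0 81 C0 81 00 81 80 A9 C0
B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20
35 00 BA 89 21 00 00 1A 00 00 00 FF 00 45 41 48
"""
edid = bytes.fromhex("".join(EDID_HEX.split()))

# Byte 20 encodings for digital inputs (EDID 1.4).
BITS_PER_COLOR = {1: 6, 2: 8, 3: 10, 4: 12, 5: 14, 6: 16}
INTERFACES = {1: "DVI", 2: "HDMI-a", 3: "HDMI-b", 4: "MDDI", 5: "DisplayPort"}

def decode(edid):
    """Decode a handful of fields from an EDID base block."""
    assert edid[:8] == bytes.fromhex("00FFFFFFFFFFFF00"), "not an EDID header"
    # Bytes 8-9: three 5-bit letters, 1 = 'A'.
    mfg = int.from_bytes(edid[8:10], "big")
    vendor = "".join(chr(((mfg >> s) & 0x1F) + ord("A") - 1) for s in (10, 5, 0))
    video_in = edid[20]
    dtd = edid[54:72]  # first detailed timing descriptor (preferred mode)
    return {
        "vendor": vendor,
        "week": edid[16],
        "year": 1990 + edid[17],
        "edid_version": f"{edid[18]}.{edid[19]}",
        "digital": bool(video_in & 0x80),
        "bits_per_color": BITS_PER_COLOR.get((video_in >> 4) & 0x07),
        "interface": INTERFACES.get(video_in & 0x0F),
        "pixel_clock_mhz": int.from_bytes(dtd[0:2], "little") / 100,
        "h_active": dtd[2] | ((dtd[4] & 0xF0) << 4),
        "v_active": dtd[5] | ((dtd[7] & 0xF0) << 4),
    }

info = decode(edid)
for key, value in info.items():
    print(f"{key}: {value}")
```

Read this way, byte 20 (`B5`) describes a digital DisplayPort input at 10 bits per color, and the preferred timing is 3840 x 2160 with a 533.25 MHz pixel clock. In other words, the monitor itself advertises 10 bpc to the graphics card.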

I meant to include a pic of the settings in the driver. It is below.

The two EDIDs are different. On the same page in Advanced Settings you can override the EDID of the secondary BenQ monitor.
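To see exactly where the two dumps differ, the following sketch compares the 128-byte base blocks byte by byte (the extension blocks in the two dumps posted above are identical line for line, so they are left out). The field names in the comments reflect my reading of the EDID base-block layout and should be treated as an interpretation, not as something stated in this thread.

```python
def parse(hex_dump):
    """Turn a whitespace-separated hex dump into bytes."""
    return bytes.fromhex("".join(hex_dump.split()))

PORT3 = parse("""
00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00
2A 1B 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26
0F 50 54 A5 6B 80 D1 C0 81 C0 81 00 81 80 A9 C0
B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20
35 00 BA 89 21 00 00 1A 00 00 00 FF 00 45 41 48
30 34 32 34 32 53 4C 30 0A 20 00 00 00 FD 00 30
4C 1E 8C 3C 00 0A 20 20 20 20 20 20 00 00 00 FC
00 42 65 6E 51 20 53 57 33 32 30 0A 20 20 01 F3
""")

PORT4 = parse("""
00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00
25 1B 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26
0F 50 54 A5 6B 80 D1 C0 81 C0 81 00 81 80 A9 C0
B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20
35 00 BA 89 21 00 00 1A 00 00 00 FF 00 4D 39 48
30 31 37 38 39 53 4C 30 0A 20 00 00 00 FD 00 30
4C 1E 8C 3C 00 0A 20 20 20 20 20 20 00 00 00 FC
00 42 65 6E 51 20 53 57 33 32 30 0A 20 20 01 EB
""")

# Offsets at which the two base blocks disagree.
diff = [i for i, (a, b) in enumerate(zip(PORT3, PORT4)) if a != b]
print(diff)

# Byte 16 is the week of manufacture, bytes 77-87 hold the serial-number
# text, and byte 127 is the checksum that makes each block sum to 0 mod 256.
print("checksums valid:", sum(PORT3) % 256 == 0, sum(PORT4) % 256 == 0)
```

The differing offsets are 16, 77, 78, 81 to 84, and 127, that is, the manufacture week, part of the serial number, and the checksum. The capability fields (color depth, timings, color characteristics) are byte-for-byte identical, which matches the observation that both monitors behave the same.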

In this comment, I don't understand what you intended to accomplish. I assume that you are suggesting that I override the EDID of the secondary BenQ with that of the first BenQ. If so, what will that accomplish? Both monitors behave exactly the same already.

Did you give him the information he asked for and find out what he had in mind? Or are you just guessing?

Which thread are you referring to? This thread is about missing "10bit pixel format" option in Advanced Radeon Pro Settings, which is already fixed in 19.Q1.1 driver.

fsadough wrote:

Which thread are you referring to? This thread is about missing "10bit pixel format" option in Advanced Radeon Pro Settings, which is already fixed in 19.Q1.1 driver.

Just a little clarification. This thread was never intended to be about "10bit pixel format". Please read my original post.

I started this thread. And the problem I had was an inability to enable 10 bit per color channel in the driver (30-bit color). So this thread is about that problem (10 bpc). But some of my earliest posts may not have articulated the problem as clearly, because at that time I was not aware of a fact that you have since explained to me:

"10bit pixel format (bpp) has nothing to do with 10bit color/channel (bpc).

10 bit per channel (bpc) is related to the number of bits per Color Channel (R=Red, G=Green, B=Blue)

10 bit per pixel (bpp) Format is the number of bits in each pixel. The higher the number, the sharper is the image"

After I received this message from you, I (mostly) stopped referring to 10bit pixel format.
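As a plain arithmetic aside on the "bpc" terminology, the sketch below shows how bits per channel relate to the "30-bit color" figure from the original post. It only covers the generic RGB arithmetic and makes no claim about what the driver's "10-bit pixel format" checkbox does internally; `color_stats` is an illustrative name.

```python
def color_stats(bpc, channels=3):
    """Levels per channel, total bits per RGB pixel, and total colors."""
    levels = 2 ** bpc
    return {
        "levels_per_channel": levels,
        "bits_per_pixel": bpc * channels,
        "total_colors": levels ** channels,
    }

for bpc in (8, 10):
    print(bpc, "bpc:", color_stats(bpc))
```

So 8 bpc gives 256 levels per channel (24 bits and about 16.7 million colors per RGB pixel), while 10 bpc gives 1024 levels per channel (30 bits and about 1.07 billion colors).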

I was referring to your suggestion regarding EDIDs which I’ve quoted below. I also quoted my response to it below yours, to be clear. I wasn’t sure what you were suggesting that I try or how it would help troubleshoot my issue.

fsadough wrote:

The two EDIDs are different. On the same page in Advanced Settings you can override the EDID of the secondary BenQ monitor.

dealio wrote:

In this comment, I don't understand what you intended to accomplish. I assume that you are suggesting that I override the EDID of the secondary BenQ with that of the first BenQ. If so, what will that accomplish? Both monitors behave exactly the same already.

Thanks for the link, though after viewing the info there is no mention of the monitor's bit depth. Would this be covered by other data in the results? I'll attach a copy of mine:

IPS Deep color panels are able to do 10 bit per color, but not many panels do that due to the cost

now HDR has kinda taken over from the idea of Deep Color as marketing types prefer acronyms etc

I can't speak to all the differences between 10 bit and 8 bit, but as a digital artist using Photoshop, the biggest benefit is the accurate portrayal of transitioning color. 10 bit transitions very smoothly and displays a file very accurately. An 8 bit monitor has a lot more banding in the transitions of vignettes. This is detrimental when editing raster images, as you may be making changes in areas and think you are okay, only to find out the monitor isn't really displaying the pixel information correctly. I currently own 3 IPS monitors at home. All were relatively inexpensive compared to what these monitors used to cost. My Philips 32 inch was 300 last year and my Acer, which I got last month, only 200. I don't own any IPS monitors that are not 10 bit. I have 6 IPS monitors in our art department at work as well. I'm not sure how cheap they have to be to drop the 10 bit, but all the IPS monitors I have and have ever seen are 10 bit. They are all Wide Gamut RGB as well.

My panel is 8-bit RGB which is IPS standard and the Deep Color models tend to be very slow too. Mostly they are targeted to film and photo sectors where lighting and color matter more.

Yes, I use a gradient from medium gray to a lighter medium gray to test whether the monitor/card are actually displaying 10-bit color rather than 8-bit. 8-bit has obvious banding, as you mention, whereas with 10-bit the banding is either not present or very hard to discern (depending on how close the gradient colors are). But unlike you, at the time I was searching for 10-bit monitors, the BenQ showed up as one of the few with good ratings from a fair number of customers on Amazon. It's not a cheap monitor, but it claims to be cheaper than most high-quality 10-bit monitors from companies like Eizo, where 30" monitors cost thousands of dollars. The 2 BenQ SW320 (31.5" diag) came in at about $1600 each, so I considered myself lucky. I've not seen 10-bit monitors at the price point you mention (or I probably would have got them instead). The SW320 also covers 98% of Adobe RGB, which is rare too. So if you have a printer that can print outside the sRGB gamut, it's good to have Adobe RGB capability so you can see accurately what will print.
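To put rough numbers on the gray-gradient test, here is a small sketch that quantizes a horizontal ramp and counts the distinct steps. The 50% to 60% gray endpoints and the 3840-pixel width are assumptions picked for illustration, and the sketch ignores dithering, gamma, and any processing inside the monitor.

```python
def gradient_codes(bpc, width=3840, start_pct=50, end_pct=60):
    """Quantize a horizontal gray ramp to `bpc` bits per channel.

    Uses exact integer arithmetic (round half up) so the result does not
    depend on floating-point rounding. Returns one code value per pixel.
    """
    max_code = 2 ** bpc - 1
    denom = 100 * (width - 1)
    codes = []
    for x in range(width):
        # Ramp value at pixel x as the fraction numer / denom.
        numer = start_pct * (width - 1) + (end_pct - start_pct) * x
        codes.append((2 * numer * max_code + denom) // (2 * denom))
    return codes

for bpc in (8, 10):
    bands = len(set(gradient_codes(bpc)))
    print(f"{bpc} bpc: {bands} bands, about {3840 / bands:.0f} px wide each")
```

With these assumed endpoints, the 8-bit ramp has 26 distinct steps across a 4K-wide screen and the 10-bit ramp has 103, so each band is roughly a quarter as wide at 10 bpc. That is why banding is obvious in one case and hard to discern in the other.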

I'm not sure how using 4:2:2 color mode affects color accuracy, but I'm forced to use it to get 10-bit color since the card/driver won't produce 10-bit color except at 30Hz, which is unusable.

I have a deep color gradient on my site. A TN panel cannot show the gradient, but on IPS panels you can see how the text floats over it.

I use 1920x1080 as higher resolution panels have cut too many corners for color quality

I must be super lucky, finding the 2 1440p 10 bit panels for less than $300 so easily. Try Micro Center; they had many of them. One is 5ms and the other 4ms. Granted, neither is 1ms like TN panels, but they're hardly slow either. I never notice any lag or ghosting at all. The only caveat is the 75Hz limit, although I see that as a plus. Buying a GPU to drive 1440p @ 75Hz is far more economical and still looks pretty fantastic IMHO. It really depends on what you're happy with.

I only have a GTX 1060 to handle graphics so moving to 1440p would be taxing with some games I like to play.

Not rich enough for a GTX 1080 Ti yet

You will pay a ton right now for 4K monitors but the 1440p 32" have dropped to super cheap in the last year. They are still great Photoshop monitors for home. I'm sure mine doesn't really have the range of a top end model but it is better than the very best of any VA or TN panel so I'm good. The big thing for me is not so much any color variation as in reality you judge that on printed proof to begin with not the screen, but that I can actually see how my editing changes pixel data properly before printing.

This is not the best workaround, as this driver is almost 2 years old. I'm hoping that AMD will at least get the Pro drivers up to snuff in the near future. Driver version is 22.19.673.0 (17.8.2), from August of 2017. I'm noticing that the colors seem to be more washed out with this older driver...back to the latest...EDIT: Colors are fine within Windows unless I am browsing in Chrome/online. They improved a bit by reinstalling the BenQ monitor driver...

I hope they get it fixed for you. Make sure to officially report it to AMD as well with an e-ticket. Don't count on anything getting done from this forum. I would be mad if my 10 bit stopped working on my card. I would be doubly PO'd if my Pro Card didn't work on 10 bit now when obviously it is a driver issue.