
Proof of stock settings degrading 3rd Gen Ryzen performance

About a year ago (beginning of August 2019), not long after Third Gen Ryzen was released I bought the hardware my friend in Sweden needed to build his new computer.

I had a spare Gen 2 512 GB NVME M.2, a 512 GB SSD, an 8 TB HD drive and a Noctua NH-U9S Cooler which I could give him, and so it made sense to buy the rest of the hardware here in the UK where it was cheaper, and to configure everything for him and then to send it off to Sweden.

I bought the Ryzen 5 3600X, Gigabyte AORUS X470 Gaming 7 WiFi Rev 1.1 and 16 GB of Team Group Edition 3600 CL16 RAM, which of course he paid for.

This was the same hardware I bought for myself at the time.

The case he got (also the same as mine) was the Phanteks Evolv X and the PSU he bought was an EVGA 650W SuperNova Gold, and he managed to get a great deal on an Nvidia 1080 Ti graphics card.

I already had the identical configuration, had already experimented with my own 3600X, and had discovered the basics of how to properly configure the 3rd Gen Ryzen CPU, which I outline in my guide "Updating my definitive guide to configuring the Ryzen 3900X/3950X and all other 3000 Series CPUs".

I had found that leaving the system at stock settings and only configuring the CPU and RAM in Ryzen Master gave the best results.

I installed and configured the system with Windows 10 Enterprise and Ryzen Master.

As it turns out, he forgot what I told him about having to load Ryzen Master every time he booted and to apply the profile I had created; so since he has had the system (from September 2019) he has just booted into the bog-standard, out of the box, stock settings.

I can't really blame him for forgetting, because it was a huge adventure for him to build his very first PC which I talked him through, and I failed to re-emphasise the necessity for loading Ryzen Master and applying the profile.

Fast forward to yesterday, where I was talking him through upgrading his BIOS to the latest version and installing the newest Ryzen Master and configuring it (which I did via TeamViewer).

When I configured the profile for Ryzen Master again, and tested it, the system would not run AT ALL with the settings which had previously been rock solid steady and stable, namely 4.225 GHz all-core at a maximum of 1.3 Volts, when running CineBench R20.

Before I sent him the configured system last year I tested it VERY extensively. The reason for this was that I had every intention of buying the 3950X when it came out (and also the Gigabyte AORUS X570 XTREME when I could get it for a good price - which I did), and so, if his CPU was not as good as the 3600X I had (Silicon Lottery is a thing), I would just swap them.

His CPU was no better or worse than the one I had.

His system is now only capable of completing a CineBench R20 run at 4.175 GHz at 1.3 Volts; even punting the voltage up to 1.325 Volts will not make the system run at the 4.225 GHz it ran comfortably at when I originally configured it.

When I say that it does not run at all, I mean that setting it to 4.225 GHz at 1.3 Volts results in an immediate hard crash, not even a BSOD. It will not even run at 4.2 GHz at 1.3 Volts, although the results there are a bit better, only resulting in CineBench terminating or a BSOD.

At 4.2 GHz it will however run if I put the voltage up to 1.325.

This is a clear indication that the CPU has degraded over the period of less than a year (September to July).

Of course I said to my friend that he could have my 3600X in exchange for his, because I only need the CPU as a backup in case anything happens with my 3950X and I need to RMA it.

I know that some of you reading this will try to hand-wave this away with the comment "Purely anecdotal"; however, considering that I EXTENSIVELY TESTED the CPU with the Ryzen Master settings for about a week, and in all that time it ran perfectly stable at 4.225 GHz at 1.3 Volts (even below that voltage, looking back at some of the screenshots I made of the performance) before I sent it to him, the fact of the degradation of the CPU is anything but trivial.

To run it now at 4.175 at 1.3 Volts I had to raise the LLC to "High" in the BIOS because that was the only way that CineBench R20 would run stably without crashing. The LLC had been set to stock "Auto", i.e. the lowest setting, before.

Without adjusting the LLC the highest it will run is 4.15 GHz all-core at 1.3 Volts.
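To put the numbers above in proportion, here is a minimal sketch of the arithmetic. It only uses the clockspeeds quoted in this post; the function name `clock_loss` is made up for illustration and is not part of any tool mentioned here.

```python
# All-core clockspeeds (GHz) at 1.3 V under CineBench R20, as quoted in the post.
ORIGINAL_GHZ = 4.225       # stable when the system was first configured
NOW_LLC_HIGH_GHZ = 4.175   # highest stable clock now, LLC set to "High"
NOW_LLC_AUTO_GHZ = 4.15    # highest stable clock now, LLC left on "Auto"


def clock_loss(before_ghz, after_ghz):
    """Return (loss in MHz, loss as a percentage of the original clock)."""
    diff_ghz = before_ghz - after_ghz
    return round(diff_ghz * 1000), round(diff_ghz / before_ghz * 100, 2)


for label, now_ghz in (("LLC High", NOW_LLC_HIGH_GHZ), ("LLC Auto", NOW_LLC_AUTO_GHZ)):
    mhz, pct = clock_loss(ORIGINAL_GHZ, now_ghz)
    print(f"{label}: {mhz} MHz lower ({pct}% of the original clock)")
```

So the drop at the same voltage is on the order of 50 to 75 MHz, which is small as a percentage but well outside anything I saw during the original week of testing.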

This proves the statements I made last year: that the voltages AMD considered safe to apply to the CPU were idiotic and would result in the degradation of the Ryzen 3rd Gen CPUs over time. It was the reason why I looked around for an alternative way to configure a Ryzen 3rd Gen CPU in the first place.


You have an updated BIOS which might have changed the board voltage offset. This could be a reason why the previous OC isn't stable anymore. Some early boards appear to have a huge offset, and setting 1.3 might even push 1.375 or more to the CPU. You are pushing an all-core OC, right?

As a rule of thumb, I never do OCs on systems that aren't mine. But that doesn't help your situation. Updates to Cinebench could even be the cause, as could changes in power profiles or PWM from within Windows.

On the LLC topic... Load-line calibration will have the board try to compensate for the power draw of the CPU. You having to push it higher means the CPU is using more power at a certain moment, which drops voltages as the VRMs try to keep up. My experience is that LLC is used when you push a high OC, to compensate for huge out-of-spec swings in power draw and the inability of the voltage controller to respond at that instant. Try the CPU in your board to rule out VRM issues; if you can get up to the old OC on yours, it might be a board or PSU issue. On the voltage test, with 1.35 max the offset might take it up to 1.425 even.
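For anyone following along, the droop that LLC counteracts can be sketched with the usual load-line relation, V_load = V_set - I_load x R_LL. The numbers below are illustrative placeholders only, not measurements from this board, and `loaded_voltage` is a hypothetical helper name.

```python
def loaded_voltage(v_set, load_current_a, loadline_mohm):
    """Voltage at the CPU under load for a given load-line resistance.

    v_set          -- voltage requested from the VRM, in volts
    load_current_a -- CPU current draw, in amps
    loadline_mohm  -- effective load-line resistance, in milliohms
    """
    return v_set - load_current_a * (loadline_mohm / 1000.0)


# Placeholder values: a stronger LLC setting behaves like a smaller
# load-line resistance, so less of the set voltage is lost under load.
for name, r_ll in (("weak LLC", 1.0), ("strong LLC", 0.5)):
    print(f"{name}: {loaded_voltage(1.3, 80, r_ll):.3f} V under an 80 A load")
```

A more aggressive LLC setting behaves like a smaller load-line resistance, so the voltage under load stays closer to what was set.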

I've heard of degradation on both Intel and AMD CPUs when pushing high voltages, and I know it's a hot topic for extreme OCs pushing around 1.4 V or even more, but all the cases of degradation I have read about are 1.4 V+ for extended periods of time.

What temps are you getting? And have you tried undervolting a bit? Also, don't use Cinebench R20 as a stability test, use Prime95. LLC shouldn't change, because it's the board that sends more power as voltage droops under high load.

CPU capacitance shouldn't change.


If you read the guide I linked to then you will see that I set a MAXIMUM voltage in Ryzen Master. Once that is set, checking with HWInfo (Vcore SVI2) shows that it does not exceed that maximum.

In my guide I am NOT going outside of spec, and I am NOT "pushing an all-core OC" in the guide. I am not overclocking, I am within the spec for voltage and for the power envelope. All I am doing is optimising the clockspeed for the maximum voltage I have set.

I spoke in the article above ABOUT THE CPU RUNNING AT STOCK - NO OVERCLOCK! It's in the TITLE OF THE THREAD for God's sakes.

Where do you get the idea that I am talking about overclocking??

You might want to take a look at the following video from Buildzoid (Actually Hardcore Overclocking) to inform yourself a bit better about LLC:

I am also very much aware of the difference between SET and GET voltages with regard to the CPU.

The early CPUs from the 3600 to the 3800X were garbage silicon and just last week I configured (notice the word CONFIGURED and not OVERCLOCKED) a new 3600 and had a stable clockspeed of 4.3 GHz at 1.3 Volts MAXIMUM.

What this means is that under no circumstances can the CPU get more than 1.3 Volts, it CANNOT be fed anything above that voltage the way I configure it.

This is measured by and regulated by the internal sensor of the CPU itself (Vcore SVI2) and accounts for any VRM inefficiency. The X470 motherboard I chose for my friend, and for myself, had one of the best if not the best VRM of the X470 generation of mobos (true 10 Phase controlled by an IR35201 in a 5+2 configuration with IR3599 doublers and the 10 MOSFETs are the 40 Amp IR3553).

At stock the AMD 3rd Gen will feed up to 1.5 Volts into the CPU with a single core load and under an all core load, such as CineBench it will still punt in around 1.375 Volts or more, which is bloody ridiculous and caused me to find out how to mitigate this behaviour - and those are GET values!

The Tech Media and nearly all Tech YouTubers have been either too stupid, or too lazy, to learn how to configure 3rd Gen Ryzen correctly and I have been calling them out for this for many months now, and it seems to be finally bearing fruit.

In his latest video about the 3800XT JayzTwoCents plagiarized my work in this video and didn't even have the decency to give me any credit. This was after I had repeatedly called him out in his previous videos for his ignorance (wilful or not).

JayzTwoCents, like you, does not know the meaning of the term "Overclock". What this term means is that you set a clock and then you have to go above the specification of the CPU in terms of Voltage and Power (Amperage) to achieve that clockspeed.

Configuring on the other hand means that you fix the parameters within spec and then see how much clockspeed can be achieved stably within those parameters.

The "Over" in "Overclocking" is short for "Over specification" and the whole term is "Over Specification Clocking" or "Overclocking".

CineBench R20 is a perfectly good tool to use to test the stability of a system.

You prattle on about Prime95; but which version, what parameters, what are you actually wanting to test with the parameters?

The thing is that when I use words, I know what they mean.

All you have done is throw in some buzzwords which you obviously barely comprehend, to criticise points in my post THAT I WAS NOT EVEN MAKING!


Lol, cool down a bit..

You stated that you got to 4.15 GHz all-core, which is unlikely looking at the boosting behaviour of 3rd-gen.

No, I didn't read the link to the guide you posted; because of the BIOS upgrade my mind went to the voltage offset some motherboard makers apply.

You don't know me, obviously, and I had no intention to explain LLC in detail.

Prime95 small FFT, as it is a more stable and demanding load generator. I mentioned it once, as a better stress tool.

But good luck, and bye


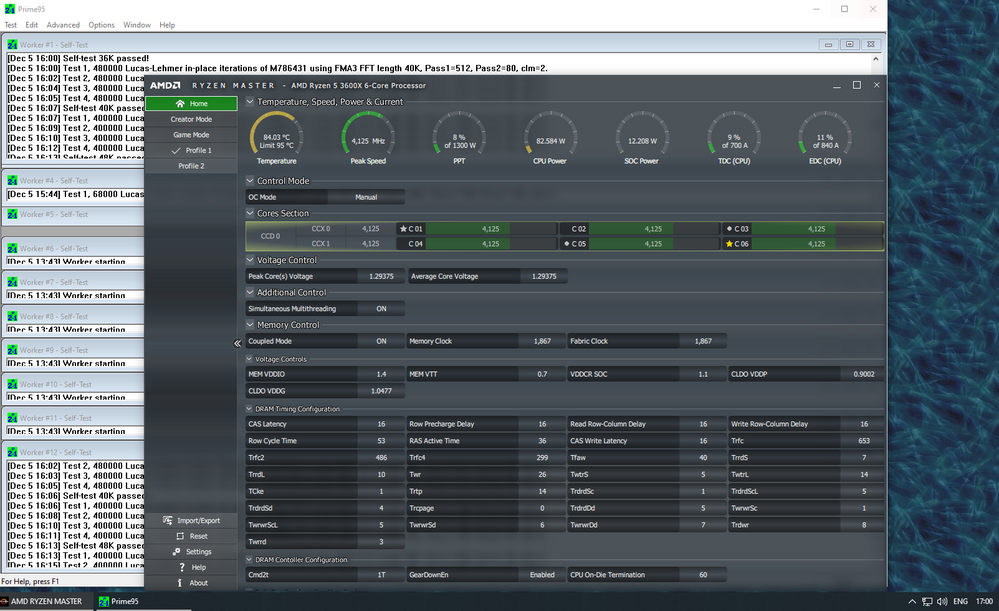

You want me to tell you about Prime95 running small FFTs (not smallest) on a Ryzen 5 3600 with an air cooler which I have configured running for not one hour, not two hours, but over three hours and want to see a screenshot?

So what clockspeed do you think this could be accomplished at?

3.6 GHz?

3.5 GHz?

2 GHz?

LOL, only if you never actually started the benchmark?

Well let's just look and see shall we?


RE: The "Over" in "Overclocking" is short for "Over specification" and the whole term is "Over Specification Clocking" or "Overclocking".

Seek help.


My first ever overspec clocking (overclock) was with my Intel 8088 going from 4.77 MHz to the dizzying heights of 6 MHz.

I sense the Dunning-Kruger is strong within you.


RE: JayzTwoCents, like you, does not know the meaning of the term "Overclock".

OK I understand now, you are NOT talking about overclocking.


From what I can gather, your comprehension seems to be permanently out to lunch.

In all the time you have been whining you have not, as far as I can tell, ever defined the term "Overclocking".

If I go with what I have garnered, it appears that you think that anything beyond base clock is overclocking, thus anything beyond 3.5 GHz on my 3950X is overclocking - this being the only number that AMD categorically states with regards to an all-core clockspeed. Thus, if everything is overclocking then nothing is overclocking, and we are left with a term which has no meaning.

The only other clockspeed mentioned is "4.7 GHz" for a single core boost but this is prefaced by the salescritter/marketdroid weasel-words "Up to" (which basically means, "Never gonna happen"). The only time the 3950X ever achieves a 4.7GHz single core boost is when the system is not under load, and that's really when you NEED the boost, i.e. when the system is doing naff-all.

You are not arguing in good faith having been nothing other than deliberately obtuse and therefore superfluous to requirements as far as any kind of discussion is concerned.


Jayz2Cents explains it perfectly.

His ideas are great.


It does seem like the semantics are tripping people up here. An overclock is literally just raising the clock speed above specification, which by itself will not alter component longevity if not accompanied by a voltage increase. You do not necessarily have to raise the voltage to overclock, but most users do as the gains are otherwise limited.

Regardless, the matter at hand is a chip that is less performant now than it was 10 months prior when set at 1.3V. The chip was run at the stock boosting settings for those 10 months. While interesting, to be sure, it does not "prove" anything.

"This is a clear indication that the CPU has degraded over the period of less than a year (September to July)."

Well, it is an indication that something has changed, but is it the CPU or the motherboard? What you would need is a different CPU, that you also tested within that exact same motherboard that was never run under stock settings. If that CPU is still equally as performant as it has always been, that would put the blame on the CPU. And again, is it the voltages used or a manufacturing defect inside that particular CPU? With an n of 1 and no control any conclusion would be incredibly difficult to make. What would be very interesting is to drop the CPU into an identical motherboard and see if the performance recovers.
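The swap test I am describing boils down to a small decision table. Here is a rough sketch of it; the function and argument names are hypothetical, and "OK" simply means the part still holds the original settings (4.225 GHz at 1.3 V in CineBench R20).

```python
def attribute_fault(control_cpu_ok_in_suspect_board, suspect_cpu_ok_in_other_board):
    """Interpret a CPU/motherboard swap test.

    control_cpu_ok_in_suspect_board -- a known-good CPU holds the old
        settings in the motherboard that now shows the problem.
    suspect_cpu_ok_in_other_board -- the suspect CPU holds the old
        settings in a second, identical motherboard.
    """
    if control_cpu_ok_in_suspect_board and not suspect_cpu_ok_in_other_board:
        return "CPU"
    if not control_cpu_ok_in_suspect_board and suspect_cpu_ok_in_other_board:
        return "board, PSU or firmware"
    if control_cpu_ok_in_suspect_board and suspect_cpu_ok_in_other_board:
        return "inconclusive: fault does not reproduce after the swap"
    return "inconclusive: both combinations fail"


print(attribute_fault(True, False))   # points at the CPU
print(attribute_fault(False, True))   # points at the rest of the system
```

Even a clean "CPU" result would only say which part changed, not whether stock voltages or a defect in that particular chip caused it.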

Personally speaking, my 3900X, which I have had since late August, has not changed R20 scores outside standard deviation, despite being set at the stock settings for the entire time period. Does that prove the boosting voltages do not degrade 3000 series CPUs? Certainly not. I also cannot test the scenario you listed above, as I never logged the maximum manual overclock at a set voltage to compare to. So all I can say definitively is that in my circumstance, the CPU runs exactly as it did 11 months ago in R20.
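By "outside standard deviation" I mean something like the check below. It is only a sketch: the scores are made-up placeholders (not my actual results), the function name is hypothetical, and the two-sigma threshold is an arbitrary choice.

```python
from statistics import mean, stdev


def outside_run_to_run_variance(baseline_scores, new_score, k=2.0):
    """True if new_score is more than k sample standard deviations
    away from the mean of the baseline runs."""
    mu = mean(baseline_scores)
    sigma = stdev(baseline_scores)
    return abs(new_score - mu) > k * sigma


# Placeholder baseline runs, NOT real benchmark data.
baseline = [7100, 7120, 7080, 7110, 7090]

print(outside_run_to_run_variance(baseline, 7095))  # within the usual spread
print(outside_run_to_run_variance(baseline, 7000))  # a genuine shift
```

A handful of baseline runs logged when the system is new is enough to make this kind of comparison possible later.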

Does your friend have any benchmark data over the course of the 10 months to look for shifts and trends? I imagine as a standard computer user, he probably hasn't been running benchmarks with any frequency.


I'm way ahead of you on this one buddy.

I got TWO 3600X CPUs at the same time, one for me and one for my friend in Sweden.

That 3600X has been sitting here doing nothing at all and I am sending it off to my friend to replace the one he now has.

Whilst there is a possibility that what you say is correct, the motherboard he is using has a 10 Phase VRM with 40A powerstages, so I think it is highly unlikely that it would account for the performance degradation.

He is on holiday for a couple of weeks, so I can't send it out to him before that and it will take about a week to get from where I am to Sweden per post.

I am not really going to entertain the whole "Anecdotal" and "N=1 sample size"; because I predicted this would happen and it is a coincidence (and an unfortunate one) that he didn't apply the settings the way I told him to. I had also tested that CPU with regard to stability in both motherboards (I got identical hardware for both of us) and the results were exactly the same.

The reason why I did this is because I knew I wanted to get the 3950X and the Gigabyte X570 AORUS XTREME motherboard (when it came down to a more reasonable price), so if there were any differences, I wanted him to have the better of the two (motherboard and CPU).

He has only been using his computer normally, and mainly for gaming.

So the system has been running at moderate levels of stress, the GPU he has is a 1080 Ti.

If I knew you in real life, I would be making a bet with you - and not a small wager either, if you were enough of a sucker to agree to the bet.

The bet would be that the 3600X I have that I am sending to him will perform as well as it did when I got it, and there will have been no degradation in the performance of the motherboard.

The chiplets used in the 3900X are of higher silicon quality than that used in the 3600X.

The other thing is that you didn't use my methodology to test in the first place and I don't know how conscientious you have been in monitoring not only the clockspeed but also the voltages.

I have a 3900X which I tested and I could get it to 4.4 GHz stable at 1.3 Volts with one CCD disabled. My 3950X will clock to 4.5 GHz at 1.3 Volts with one CCD disabled.


What about the fact that you helped him update his BIOS? Maybe the new BIOS has some flaw that is causing this. I don't and can't know for sure, but it does seem like a possibility to me; it happens all the time with GPU drivers, and sometimes just updating the BIOS causes problems.


The BIOS is no better or worse than any other BIOS, simply because they are all basically built on the same AMD firmware base, AGESA.

No, the AMD marketdroids wanted clockspeed, and the only way to achieve that is by shoving in a stupid amount of voltage.

The AMD engineers probably wanted multi-core performance at a reasonable voltage (1.3 Volts maximum), but the marketdroids said "What about single core boost speeds" and the Engineers said, "What about it, it's irrelevant".

The marketdroids won out and it did naff-all good, because reviewers (who I am convinced are recruited from the shallow end of the gene-pool, but not as shallow as where they recruit salescritters/marketdroids from, which, on a good day, barely counts as a puddle) will just point to the single core benchmark and denigrate AMD anyway in comparison to Intel.


I have to agree with nec_v20 on that. I have never observed a UEFI update having a large impact on manual overclock settings. They obviously affect boosting settings, but I haven't personally observed a large change in what is possible in manual settings by changing a UEFI.


Well, that will be interesting. You tested both 3600X's in the motherboard he has? Or at least the identical board? If that chip still matches on his motherboard, you can likely rule it out.

"The chiplets used in the 3900X are of higher silicon quality than that used in the 3600X."

They are. And beyond that, I do have a custom loop in my system with a CPU block that also covers the VRMs, so nothing is getting very hot. So that could certainly lead to a difference as well. Certainly not an apples-to-apples comparison.

I did notice something that was tied to a different thread you posted regarding the AGESA 1.0.0.4. In all previous UEFI releases, my chip would boost to about 1.325V all core and 1.475V on lightly threaded. It would limit out at these voltages despite extra EDC, TDC, PPT and temperature headroom. An indication that the FIT feature is working as intended. However, when I installed the Combo AGESA 1.0.0.4, my chip would boost over 1.4V on all core workloads. That is most certainly horrible, and I promptly switched back to 1.0.0.3 ABBA. Not sure which AGESA release he was using? But 1.0.0.4 seemed to be borked. I have not yet tried the 1.0.0.6 release.

"I am not really going to entertain the whole "Anecdotal" and "N=1 sample size"; because I predicted this would happen and it is a coincidence (and an unfortunate one) that he didn't apply the settings the way I told him to."

Sounds a little like confirmation bias there. But keep up the posts, this is really useful info.


The first version of the 1.0.0.4 B AGESA based BIOS for my motherboard was abysmal with regard to my test results compared to 1.0.0.3 ABBA; however Gigabyte brought out three further 1.0.0.4 B BIOS revisions and finally got it sorted.

The thing is that I didn't expect to have first hand experience of the degradation, having set up the system I sent to my friend specifically to avoid it.

To answer your question, I tested both CPUs in both boards for a week or so.


" I also cannot test the scenario you listed above, as I never logged the maximum manual overclock at a set voltage to compare to."

Wait, wait, wait a minute. I actually did test a scenario similar to this.

Read my initial post here from August 28th 2019.

https://community.amd.com/thread/242916

I can try to reapply those same CCX settings at 1.3V and see if I can duplicate your friend's results. I think I'll give that a go this weekend.


Oh! The other thing I was going to ask before I forget was concerning the performance data from those Cinebench R20 runs. Even if his clocks are lower now, is the performance also lower? Just trying to make sure the discrepancy wasn't caused by a "clock stretching" phenomenon which could be altered by firmware.
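One rough way to look for clock stretching is to check whether the score scales with the reported clock. The sketch below assumes that an all-core Cinebench score scales roughly linearly with clockspeed when nothing else changes, which is an approximation; the function name and the example numbers are placeholders, not data from this thread.

```python
def scaling_shortfall(score_low, clock_low_ghz, score_high, clock_high_ghz):
    """Fraction by which the higher-clock score falls short of what
    linear scaling from the lower-clock run would predict."""
    expected_high = score_low * clock_high_ghz / clock_low_ghz
    return (expected_high - score_high) / expected_high


# Placeholder numbers: the reported clock rises 10% in both cases.
print(f"{scaling_shortfall(1000, 4.0, 1100, 4.4):.1%}")  # score follows the clock
print(f"{scaling_shortfall(1000, 4.0, 1000, 4.4):.1%}")  # score does not move
```

A shortfall close to zero means the extra reported clock is real; a shortfall close to the size of the clock increase suggests the reported clock is not what the cores are actually delivering.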


I am very much aware of the situation where an indicated clockspeed does not translate to an actual increase in performance and took that into account in my months long experimentation with my 3rd Gen Ryzen CPUs (3600X, 3900X and 3950X).

Something I have noticed is that ambient temperature affects the CineBench runs from one day to the next.

Obviously ambient temperature is the starting point for the efficacy of the cooling solution.

The way this manifests is that a CineBench R20 score I can achieve with my settings can vary by 100 or more (outside the normal run to run variance) depending on how cool my room is.

Because I have had two spine operations and I have spinal arthritis, I keep my room temperature at 28 degrees Celsius; however if I do a run just after I wake up, when I have not turned on the heating and the room temp is around 20 degrees Celsius, with the same settings the CineBench R20 score is markedly higher even though the clockspeed is set to the same value.

I have a temperature sensor about an inch and a half away from middle intake fan of my 360 rad and it is in the same position for every test, and I use this measurement to define my ambient temperature.

I am not just talking about a single run either.

This difference in ambient temperature does not however affect the single core score.

On any given day, the score I get at 1.3 Volts is the same score I get if I raise the voltage to 1.3125 or 1.325 (and yes, I do reboot the system between raising the voltage even though I can change it without the need to reboot).


Hello!

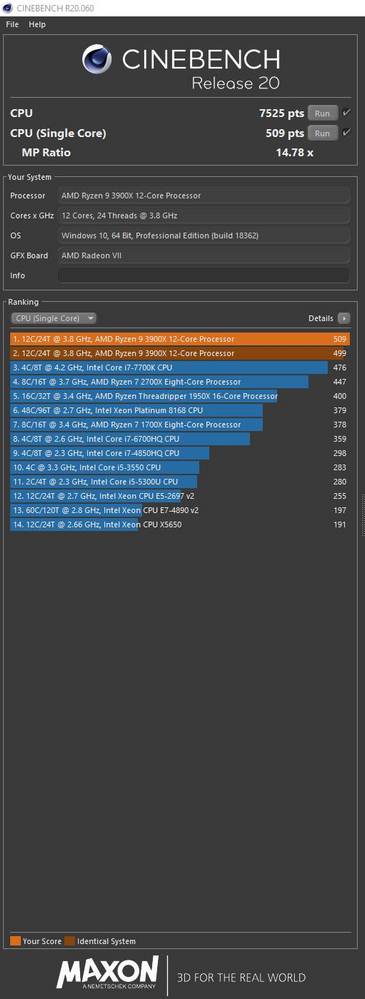

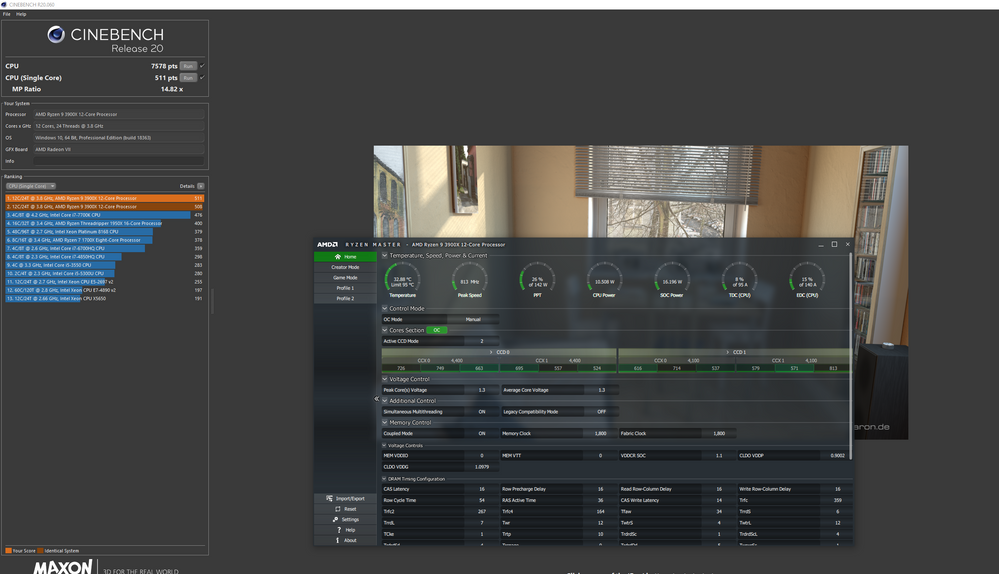

Posting a few updates. So I reran my settings for the CCX specific settings. Here is what I had back in August of 2019.

Figure 1: Manual per CCX setting using Ryzen Master August 2019.

CCX0 is at 4.5 GHz, CCX1 is at 4.4 GHz for CCD0.

CCX0 and CCX1 are at 4.1 GHz for CCD1. Voltage is at 1.3V.

I obtained the following CB20 score.

Figure 2: Cinebench Result Manual CCX (1.3V)

So here is what I saw in attempting to repeat those settings.

Figure 3: Manual CCX setting using Ryzen Master July 2020.

CCX0 is at 4.4 GHz, CCX1 is at 4.4 GHz for CCD0.

CCX0 and CCX1 are at 4.1 GHz for CCD1. Voltage is at 1.3V.

The clockspeeds are identical except for CCX0 on CCD0. That CCX cannot run at 4.5 GHz at 1.3V any longer. Try as I might, CB20 simply does not run. Dialing it back to 4.4 GHz allowed the run to finish. That result is interesting because CCX0 on CCD0 contains the fastest cores, and thus would have had the most exposure to high voltages under lightly threaded workloads over the last 11 months. So it does look like it is being affected.

The only caveats are my actual CB20 scores. They are actually identical (within margin of error) between the 4.4GHz vs 4.5GHz setting. So I'm not sure what to make of that. The single threaded clockspeed dropped but it didn't affect the chips performance.
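One possible reason the multi-core score barely moved: only one of the four CCXs changed. Here is a quick sketch of the average clock across the chip, assuming each CCX contributes the same number of cores (three per CCX on a 3900X) and that the all-core score roughly tracks the mean clock.

```python
# Per-CCX clocks in GHz, in the order CCD0/CCX0, CCD0/CCX1, CCD1/CCX0, CCD1/CCX1.
AUG_2019 = [4.5, 4.4, 4.1, 4.1]
JUL_2020 = [4.4, 4.4, 4.1, 4.1]


def mean_clock(ccx_clocks_ghz):
    """Average clock across CCXs, assuming equal core counts per CCX."""
    return sum(ccx_clocks_ghz) / len(ccx_clocks_ghz)


before = mean_clock(AUG_2019)
after = mean_clock(JUL_2020)
change_pct = (before - after) / before * 100

print(f"mean clock: {before:.3f} GHz -> {after:.3f} GHz ({change_pct:.2f}% lower)")
```

That works out to a drop of about 0.6% in average clock, which could easily disappear into the run-to-run margin of error.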

I think I will actually stick with the per CCX settings moving forward. It largely mitigates the lost lightly threaded speeds of an all core setting, and I can still stick within a lower power envelope.


While being aware of the possibility of per CCX clocking, I was a bit dubious about its practical use as it would involve targeting the applications to the cores/threads.

This is something I discussed how to achieve in my post:

https://community.amd.com/thread/236646

There is no point in being able to clock a CCX higher if the programs won't cooperate by actually using those cores/threads.

Another thing to consider is that by clocking some CCXs higher and some lower, you end up with a swings and roundabouts scenario with applications that create an all-core load, such as CineBench, Blender etc. What I mean is that what you gain with some cores you lose on others.

It was for this reason that I abandoned this approach pretty early on in my experimentation with 3rd Gen Ryzen after I got my 3950X.

With regard to not being able to notice a difference between 4.5 GHz and 4.4 GHz, I would suggest that you keep an eye on the temperature of the CPU, to see if that could be having an effect.


In a scenario where all the CCDs are equal, it is a complete waste of time. The only reason I looked at specific CCX settings back in August 2019 was due to the massive disparity in efficiency between the CCDs on my 3900X. Certainly it is possible that with better yields the CCDs on these chips are more similar now, rendering this sort of analysis obsolete.

My thinking was, why waste the bulk of your power budget attempting to boost cores that simply do not boost efficiently beyond a certain point? It also largely mitigates the lightly threaded losses vs the boosting algorithm while still providing an increase in multithreaded boosting.


How did you send the hardware to your friend in Sweden?

Boat?

Aircraft?


From a post office.


So you don't know?


Of course I know where the post office is.


I do not think you can compare the two systems at all.

Too many variables.

Different locations.

Different environments.

Something could have happened to parts during shipping.

No real proof of how the friend in Sweden ran their PC.

Perhaps they really overvolted the CPU for months on end.

You have no proof.

You have a theory.

You want to prove yourself correct.

You create this post.

You control the entire outcome.

So you can say you are correct.

Pointless.


Hi, I also have experience of degradation from using the default settings. I just found out this week. I have a Ryzen 3600 and an ASRock B450M Steel Legend. I built this PC in July 2020. I found its FIT voltage is very low, at 1.22 V. Then I tested an overclock and could get 4.400 GHz at 1.212 V, and I was very impressed with that.

When I read the forums, many people recommended using the default settings because that is the best way to manage voltage and frequency. As I use my PC for various tasks, none of them CPU demanding, I used the default settings instead of the overclock. I also want to keep this PC for a long time.

The last time I overclocked was 12 Sept (2 weeks ago). This was after I changed the CPU thermal paste and wanted to test temperatures at stock and at max performance. At that time I still got 4.400 GHz at 1.212 V. After that I reverted to the default settings.

Then this week I tried the overclock profile again and could not get 4.400 GHz at 1.212 V. The highest I can get at 1.212 V is 4.300 GHz. The CPU degraded by 0.100 GHz within two weeks. For the whole of that time I only used the default settings. It is very recent, and I remember that I only used this PC to play games (Sekiro and NFS Heat). Other than that, light work only. Average 4 hours per day.

If I had never tried overclocking, I would never have found out that my CPU had degraded. The performance at default is as usual and still works as advertised. Only the overclock performance is degraded.


Actually, Gigabyte boards are "not" standard!

To use standard settings for voltage and LLC you need to set Voltage to "normal" and "normal" && LLC to "Standard"!

Gigabyte, ASUS and MSI use their own settings for their "auto", which are different from "AMD stock".

Laptop: R5 2500U @30W + RX 560X (1400MHz/1500MHz) + 16G DDR4-2400CL16 + 120Hz 3ms FS