Drivers & Software


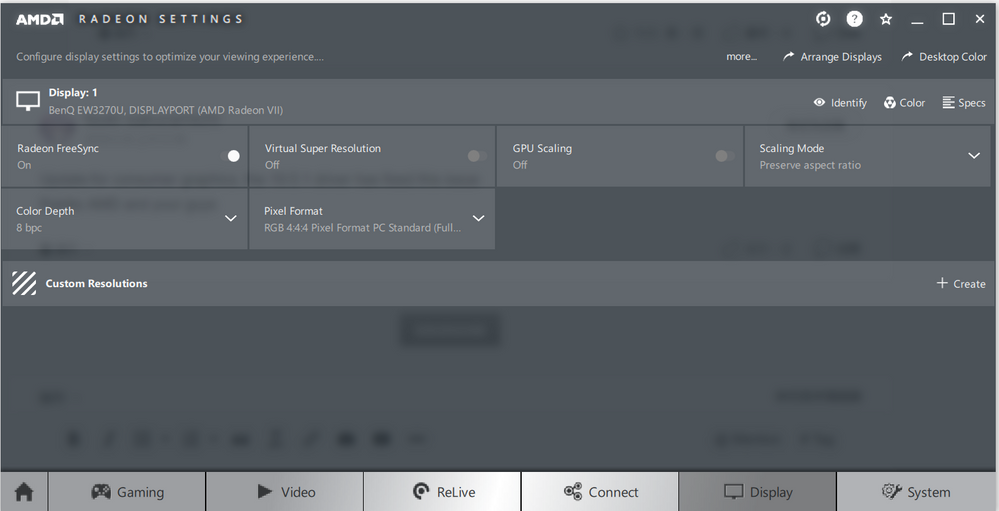

My monitor supports 10-bit, but it cannot be enabled in Radeon Settings

CPU: 2700X

MB: MSI X470 Carbon

GPU: R9 390

OS: Windows 10

Monitor: BenQ EW3270U

I got a new PC.

Initially, Microsoft installed driver version 17.1.1 for me.

With the 17.1.1 driver, my monitor supports 10-bit, and Windows also reports it as 10-bit.

I then updated my driver to version 19.1.2.

Now my monitor can only be set to 6-bit or 8-bit; the 10-bit option is gone.

Windows also reports that my monitor is 8-bit.

How can I solve this problem?

Thank you

---

That’s a great question. I have several apparent duplicates that show up in the drop down box as well. I don’t know why they are there.

---

I already went to ToastyX and downloaded version 1.4.1. Based on your previous note, I was preparing the information you pictured in your email. But I won't be able to send it until later today because of a mentorship program I'm engaged in.

Thank you

David


---

I don't know how similar my issue is, but I have the same problem connecting to my 4K TV over HDMI on driver 19.3.1. It's a 10-bit panel, and the only way I get a stable video signal is at 8-bit and 4:2:0 instead of the supported 4:2:2 or 4:4:4. The default settings Radeon Software assigned when I first plugged it in don't work at all. This is very frustrating because it worked just fine with the Nvidia GPU I just replaced.

---

This doesn't help. You need to provide detailed information. Which GPU? Which TV? Which HDMI cable version?

---

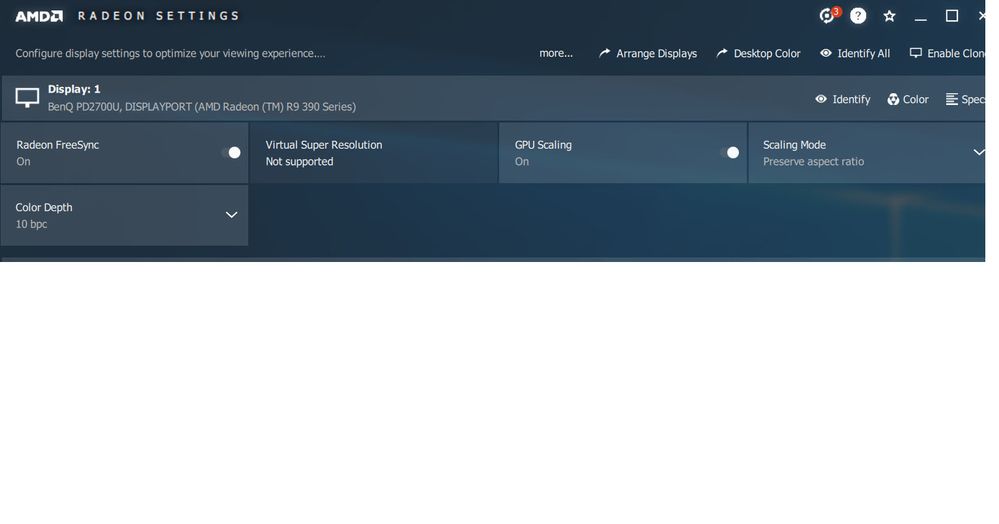

I just registered to say that I have the same issue and the same monitor as the OP.

CPU: i7-4790x

MB: Asus Maximus VII Ranger

GPU: RX Vega 64 (also RX 580 Nitro)

OS: Windows 10

Monitor: BenQ EW3270U

At 3840x2160 @ 60 Hz over DP 1.2, I can set 10-bit with 4:2:0, but it always drops back to 8-bit with 4:4:4.

My old RX 580 behaved the same way, although I could set 10-bit successfully if I rolled back to the Crimson ReLive 17.4.3 drivers. That's not an option for the Vega, though.

The fact that I use the same monitor as the OP suggests that the monitor is the problem. But the fact that the 17.4.3 Crimson drivers seemed to work also suggests a driver/compatibility issue. Any help would be appreciated.
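The 30 Hz and 4:2:0 workarounds reported in this thread are consistent with simple link-bandwidth arithmetic. The Python sketch below is a rough estimate only: it assumes a DisplayPort 1.2 link at HBR2 with four lanes and 8b/10b coding (about 17.28 Gbit/s of payload), ignores DisplayPort packet overhead, and compares two 3840x2160 @ 60 Hz timings: the 533.25 MHz reduced-blanking timing encoded in the EW3270U EDID posted below, and the standard 594 MHz CTA-861 timing. The helper names are illustrative, and the sketch does not model what the Radeon driver actually does.

```python
"""Rough DisplayPort 1.2 bandwidth check (estimate only, overhead ignored)."""

# Assumption: 4 lanes x 5.4 Gbit/s (HBR2) with 8b/10b line coding.
HBR2_PAYLOAD_GBPS = 4 * 5.4 * 8 / 10  # about 17.28 Gbit/s

# Average number of components carried per pixel for each pixel format.
COMPONENTS_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def required_gbps(pixel_clock_mhz: float, bpc: int, pixel_format: str) -> float:
    """Video data rate in Gbit/s (MHz x bits per pixel = Mbit/s)."""
    bits_per_pixel = bpc * COMPONENTS_PER_PIXEL[pixel_format]
    return pixel_clock_mhz * bits_per_pixel / 1000.0


def fits_dp12(pixel_clock_mhz: float, bpc: int, pixel_format: str) -> bool:
    """True if the stream fits in the estimated DP 1.2 payload."""
    return required_gbps(pixel_clock_mhz, bpc, pixel_format) <= HBR2_PAYLOAD_GBPS


if __name__ == "__main__":
    timings = {
        "3840x2160@60 (EDID, reduced blanking)": 533.25,
        "3840x2160@60 (CTA-861)": 594.0,
        "3840x2160@30 (CTA-861)": 297.0,
    }
    for name, clock in timings.items():
        for fmt in ("4:4:4", "4:2:2", "4:2:0"):
            for bpc in (8, 10):
                verdict = "fits" if fits_dp12(clock, bpc, fmt) else "exceeds"
                print(f"{name} {fmt} {bpc} bpc: "
                      f"{required_gbps(clock, bpc, fmt):.2f} Gbit/s, {verdict}")
```

By this estimate, 10 bpc RGB 4:4:4 needs about 16.0 Gbit/s with the EDID's reduced-blanking timing and fits on DP 1.2, but about 17.8 Gbit/s with the 594 MHz timing, which does not. Dropping to 30 Hz or to 4:2:0 leaves plenty of headroom in either case.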

My EDID (very slightly different from the OP's):

```
00 FF FF FF FF FF FF 00 09 D1 50 79 45 54 00 00
26 1C 01 04 B5 46 27 78 3F 59 95 AF 4F 42 AF 26
0F 50 54 25 4B 00 D1 C0 B3 00 A9 C0 81 80 81 00
81 C0 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20
35 00 C0 1C 32 00 00 1A 00 00 00 FD 00 28 3C 87
87 3C 01 0A 20 20 20 20 20 20 00 00 00 FC 00 42
65 6E 51 20 45 57 33 32 37 30 55 0A 00 00 00 10
00 00 00 00 00 00 00 00 00 00 00 00 00 00 01 B8
02 03 3F 70 51 5D 5E 5F 60 61 10 1F 22 21 20 05
14 04 13 12 03 01 23 09 07 07 83 01 00 00 E2 00
C0 6D 03 0C 00 10 00 38 78 20 00 60 01 02 03 E3
05 E3 01 E4 0F 18 00 00 E6 06 07 01 53 4C 2C A3
66 00 A0 F0 70 1F 80 30 20 35 00 C0 1C 32 00 00
1A 56 5E 00 A0 A0 A0 29 50 2F 20 35 00 80 68 21
00 00 1A BF 65 00 50 A0 40 2E 60 08 20 08 08 80
90 21 00 00 1C 00 00 00 00 00 00 00 00 00 00 20
```

Thanks
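The EDID dumps posted in this thread can be checked directly. The Python sketch below assumes the VESA E-EDID 1.3/1.4 base-block layout and decodes the manufacturer ID, EDID version and, for EDID 1.4 digital displays, the color bit depth and interface advertised in byte 20. The function name `decode_base_block` is only illustrative; it is not part of any AMD or Windows tool.

```python
"""Decode a few fields of an EDID base block (sketch, E-EDID 1.3/1.4 layout)."""

EDID_HEADER = bytes.fromhex("00 FF FF FF FF FF FF 00")

# EDID 1.4, byte 20 (digital input): bits 6-4 = color bit depth per primary.
BIT_DEPTH = {0b001: 6, 0b010: 8, 0b011: 10, 0b100: 12, 0b101: 14, 0b110: 16}
# EDID 1.4, byte 20 (digital input): bits 3-0 = interface type.
INTERFACE = {0b0001: "DVI", 0b0010: "HDMI-a", 0b0011: "HDMI-b",
             0b0100: "MDDI", 0b0101: "DisplayPort"}


def decode_base_block(edid: bytes) -> dict:
    if len(edid) < 21 or edid[:8] != EDID_HEADER:
        raise ValueError("not an EDID base block")
    # Bytes 8-9: three 5-bit letters, big-endian, where 1 = 'A'.
    mfg = (edid[8] << 8) | edid[9]
    vendor = "".join(chr(ord("A") - 1 + ((mfg >> shift) & 0x1F))
                     for shift in (10, 5, 0))
    version = (edid[18], edid[19])
    video_input = edid[20]
    digital = bool(video_input & 0x80)
    max_bpc = interface = None
    # Bit-depth and interface fields only exist for EDID 1.4 digital inputs.
    if digital and version >= (1, 4):
        max_bpc = BIT_DEPTH.get((video_input >> 4) & 0x07)
        interface = INTERFACE.get(video_input & 0x0F)
    return {
        "vendor": vendor,
        "product_code": edid[10] | (edid[11] << 8),  # little-endian
        "year": 1990 + edid[17],
        "version": version,
        "digital": digital,
        "max_bpc": max_bpc,
        "interface": interface,
    }


if __name__ == "__main__":
    # First two rows of the EW3270U dump (bytes.fromhex skips whitespace).
    dump = """00 FF FF FF FF FF FF 00 09 D1 50 79 45 54 00 00
              26 1C 01 04 B5 46 27 78 3F 59 95 AF 4F 42 AF 26"""
    print(decode_base_block(bytes.fromhex(dump)))
```

For the EW3270U dump this yields vendor `BNQ` (BenQ), EDID 1.4, 10 bpc and DisplayPort, so the monitor's EDID does advertise 10-bit support. The LG 27UD58-B dump posted later in the thread is EDID 1.3 (byte 20 = `80`), which has no bit-depth field, so `max_bpc` comes back as `None`.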

---

This is under investigation by the AMD Display Team.

---

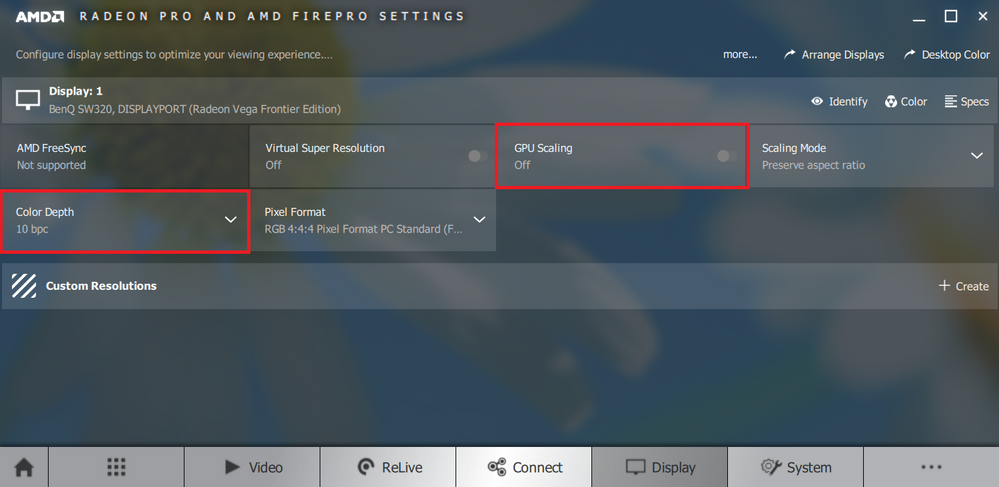

I have an AMD HD 7950. Does it support 10-bit color depth?

Driver: 19.3.2

Connection: DisplayPort 1.4

Monitor: BenQ SW320

Video Card: AMD Radeon HD 7950

CPU: i7-3770K

OS: Windows 10

While switching settings around, I somehow managed to switch to 10 bpc, but then it automatically switched back.

When I set the refresh rate to 30 Hz, it is indeed able to run at 10 bpc.

At 60 Hz, only 6 and 8 bpc are available.

```
00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00
1D 1C 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26
0F 50 54 25 4B 00 D1 C0 81 C0 81 00 81 80 A9 C0
B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20
35 00 C0 1C 32 00 00 1A 00 00 00 FC 00 42 65 6E
51 20 53 57 33 32 30 0A 20 20 00 00 00 10 00 00
00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 10
00 00 00 00 00 00 00 00 00 00 00 00 00 00 01 40
02 03 2B 40 56 61 60 5D 5E 5F 10 05 04 03 02 07
06 0F 1F 20 21 22 14 13 12 16 01 23 09 7F 07 83
01 00 00 E3 06 07 01 E3 05 C0 00 02 3A 80 18 71
38 2D 40 58 2C 45 00 E0 0E 11 00 00 1E 56 5E 00
A0 A0 A0 29 50 30 20 35 00 80 68 21 00 00 1A 04
74 00 30 F2 70 5A 80 B0 58 8A 00 C0 1C 32 00 00
1A 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 61
```

Thanks in advance!
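Two quick sanity checks can be run on a posted dump before drawing conclusions from it. The Python sketch below assumes the standard 128-byte EDID base-block layout; `checksum_ok` and `decode_dtd` are illustrative names. It verifies the block checksum, which also catches mistakes made when copying hex by hand, and decodes the preferred detailed timing stored at byte offset 54.

```python
"""Verify an EDID block checksum and decode its preferred timing (sketch)."""

# Base block (first 128 bytes) of the BenQ SW320 EDID posted above.
SW320_BASE_BLOCK = bytes.fromhex("""
    00 FF FF FF FF FF FF 00 09 D1 54 7F 45 54 00 00
    1D 1C 01 04 B5 46 28 78 26 DF 50 A3 54 35 B5 26
    0F 50 54 25 4B 00 D1 C0 81 C0 81 00 81 80 A9 C0
    B3 00 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20
    35 00 C0 1C 32 00 00 1A 00 00 00 FC 00 42 65 6E
    51 20 53 57 33 32 30 0A 20 20 00 00 00 10 00 00
    00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 10
    00 00 00 00 00 00 00 00 00 00 00 00 00 00 01 40
""")


def checksum_ok(block: bytes) -> bool:
    """Each 128-byte EDID block must sum to 0 modulo 256."""
    return len(block) == 128 and sum(block) % 256 == 0


def decode_dtd(block: bytes, offset: int = 54) -> dict:
    """Decode an 18-byte detailed timing descriptor."""
    d = block[offset:offset + 18]
    pixel_clock_hz = (d[0] | (d[1] << 8)) * 10_000  # stored in 10 kHz units
    h_active = d[2] | ((d[4] >> 4) << 8)
    h_blank = d[3] | ((d[4] & 0x0F) << 8)
    v_active = d[5] | ((d[7] >> 4) << 8)
    v_blank = d[6] | ((d[7] & 0x0F) << 8)
    refresh_hz = pixel_clock_hz / ((h_active + h_blank) * (v_active + v_blank))
    return {
        "pixel_clock_mhz": pixel_clock_hz / 1e6,
        "resolution": (h_active, v_active),
        "refresh_hz": refresh_hz,
    }


if __name__ == "__main__":
    print("checksum ok:", checksum_ok(SW320_BASE_BLOCK))
    print("extension blocks:", SW320_BASE_BLOCK[126])
    print(decode_dtd(SW320_BASE_BLOCK))
```

For the SW320 base block the checksum is valid, and the preferred timing decodes to 3840x2160 with a 533.25 MHz pixel clock, about 60 Hz. The CTA extension block that follows carries its own checksum in its last byte.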

---

Sorry, but I think you need to wait for the fix in May 2019

---

insfi, I have 2 BenQ SW320s and the Radeon Pro WX 7100, and I have been battling this issue for over a year. I have learned a few things. My experience may help you in the interim until there is a fix for this issue. I have found 3 ways to achieve 10 bpc for my hardware/software combination -- YMMV, but it can't hurt to try. Perhaps one of these will be satisfactory for you until a permanent fix is available:

- set the refresh rate on your monitor (Advanced Display settings in Windows Settings) to 30 Hz -- this one you already found

- set the Pixel Format in the GPU driver to YCbCr 4:2:2. The impact of this change is to reduce the amount of color information displayed (some of it gets thrown away, unlike full RGB 4:4:4). This will be most noticeable in line art, vector drawings, CAD, small text, Excel cells -- anything with very thin lines. If you do color-critical work, this may not be acceptable for you. When you make this change, you may find that the Color Depth automatically changes to 10 bpc. If not, try changing it to 10 bpc. This worked for me at the BenQ SW320's full resolution of 3840x2160 at 60 Hz (4K@60Hz).

- use the Enterprise driver from Feb 2018 (18.Q1) with the monitor refresh rate at 60 Hz, Pixel Format at RGB 4:4:4 and Color Depth at 10 bpc. Although this driver works at the full RGB 4:4:4 pixel format, it has other problems that may affect you. One of them is a performance issue in CorelDraw, which was fixed in AMD's 18.Q3 driver and in a CorelDraw update from Corel (for versions since v2017). I don't know whether other applications suffer from this issue (glacial performance when opening files, copying and pasting, printing).

In addition to these changes, you may need to enable the 10 bit pixel format setting in the Advanced Settings of the Radeon Pro driver settings (old Catalyst style dialog box). To verify that the whole pipeline (from O/S settings, VESA certified DP cables, BenQ monitor driver, AMD GPU driver to application) was delivering 10 bpc, I used a 10-bit ramp PSD file (attached) in Photoshop. It's the only test I know to use for quick visual verification. When 8 bpc is active, there is obvious banding in the gradient that the file contains. When 10 bpc is active, it's smooth gray from one end to the other with no hint of banding in the gradient. Good luck.
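The banding test works because a wide gradient has to be quantized to the output codes available at the active bit depth. This Python sketch does not reproduce the PSD file; it assumes a hypothetical ramp from 25% to 75% gray across one 3840-pixel row and counts how many distinct codes each bit depth can use. Fewer codes means wider, more visible steps.

```python
"""Why an 8 bpc gray ramp bands and a 10 bpc ramp looks smooth (illustration)."""


def distinct_levels(width: int, lo: float, hi: float, bits: int) -> int:
    """Count the output codes used by a horizontal ramp from lo to hi (0..1)."""
    max_code = (1 << bits) - 1
    codes = {
        int((lo + (hi - lo) * x / (width - 1)) * max_code + 0.5)  # round to nearest
        for x in range(width)
    }
    return len(codes)


if __name__ == "__main__":
    width = 3840  # one row across a 4K panel (assumed ramp width)
    for bits in (8, 10):
        levels = distinct_levels(width, 0.25, 0.75, bits)
        print(f"{bits} bpc: {levels} gray levels, each band about "
              f"{width / levels:.1f} px wide")
```

Under these assumptions, 8 bpc gives 128 levels, so each step is about 30 px wide, while 10 bpc gives 512 levels at about 7.5 px per step. That difference is why the same ramp can look stepped at 8 bpc and smooth at 10 bpc.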

---

dealio, 10-bit pixel format and 10 bpc are two different things.

---

Thanks fsadough. I am aware that they are different. I hope my post was not confusing to anyone -- I was careful to use the same terms AMD uses, Color Depth and Pixel Format, to distinguish them. But I think it's the unfortunate inconsistency in their names across software vendors that adds to the confusion. AMD uses "Color Depth" and "Pixel Format", but Windows 10 uses "Bit Depth" and "Color format", and Adobe just uses "30 bit display". Even the term 10 bpc is not consistently used: 10 bits per color vs. 10 bits per channel vs. 10 bits per component.

Maybe it's the last paragraph where I seem to confound the two. To explain, my experience is that when the Radeon Setting Color Depth is set to 10 bpc, and the Radeon Pro Advanced Setting labeled "10-bit pixel format" is not enabled, the file named "10-bit test ramp.psd" (found elsewhere on this forum) will show banding in the gradient in Photoshop CS6 and Photoshop CC. And banding in this test file is a visual indication that Photoshop is not using a color depth of 10 bpc. Why Photoshop is not using 10 bpc when both the O/S and the Radeon Settings panel report that it is enabled is a separate and open question that I don't understand. However, when I enable the "10-bit pixel format" setting and load the same test ramp file into Photoshop, I see no banding in the gradient (dark gray to light gray), and this is the expected outcome with 10 bpc color depth. So it seems that in Photoshop, the two -- 10 bpc color depth and 10-bit pixel format -- are somehow tied to each other. This is a relevant test for me because I use Photoshop frequently.

If this visual test is somehow inaccurate or invalid, please explain. In addition, if you know a better visual test that can be used to verify that 10 bpc color depth is enabled, please advise. It's not that I don't trust the AMD driver console, but I tend to live by "trust but verify" when it comes to technology.

---

I'm following this thread.

I have the same problem: BenQ SW320 with a Radeon Vega 56 on 18.11.2 drivers, and I can't switch to 10 bpc.

Using Global WattMan/Radeon Display Settings, when I select 10 bpc in the drop-down menu, the screen momentarily turns black but then switches back to 8 bpc. It only switches to 10 bpc if the refresh rate is reduced to 30 Hz, but then the mouse lag is bad, so I have to revert to 60 Hz.

I'm using a DisplayPort 1.4 cable (I think that's the latest version that supports 10 bpc).

Please confirm whether the May 2019 drivers will contain the fix.

---

@fsadough tested the 19.Q2 driver due out May 15, and he demonstrated that the problem is fixed in that release. See this message thread

---

Ahhhhh, finally! Thank you for the heads-up. I will patiently wait for the updated drivers.

---

Update: for consumer graphics, the 19.5.1 driver has fixed this issue.

Thanks to AMD and your team!

---

The problem has reappeared. When I updated the driver from 19.5.2 to 19.6.2, my monitor could no longer be set to 10 bpc.

I then uninstalled the driver using DDU and reinstalled 19.6.2, but the problem persists.

---

There is no reason to install every driver release. Revert to the previous/working driver.

---

Keeping the driver up to date keeps the GPU in top condition.

New features and new optimizations are great.

---

Unless specifically mentioned, most performance updates only target specific games. Unless a driver fixes an issue you have or is required due to an OS upgrade or Windows update, there really is no reason to update it. Unless it does something new you need, you are kind of playing Russian roulette with the results. IMHO, you will always have a less problematic user experience if you follow the "if it isn't broken, don't fix it" approach. Obviously, everyone is welcome to do what they want. If you are the experimental type, expect to do a lot of backtracking.

---

Thanks for your work on looking at this.

I purchased one of these monitors in an Amazon Prime deal a few days ago. PU.

I will check with an RX Vega 64 Liquid and a PowerColor Vega 56 Red Dragon when I put those GPUs back in a machine as the primary display GPU.

I am currently using the monitor with an RTX 2080 to test the Radeon Image Sharpening filter and another pre-existing Contrast Adaptive Sharpening shader.

I am then upscaling from 2K Ultra to 4K resolution either using the GPU or the Monitor and comparing with a Native 4K Ultra Image.

You might be interested in using it for gaming.

---

19.6.2 is an optional driver. Don't let the version numbering fool you. 19.5.2 is a main release and contains the 10 bpc fix. The 19.6.2 driver, however, originated from a code base prior to the fix. You should revert to 19.5.2.

---

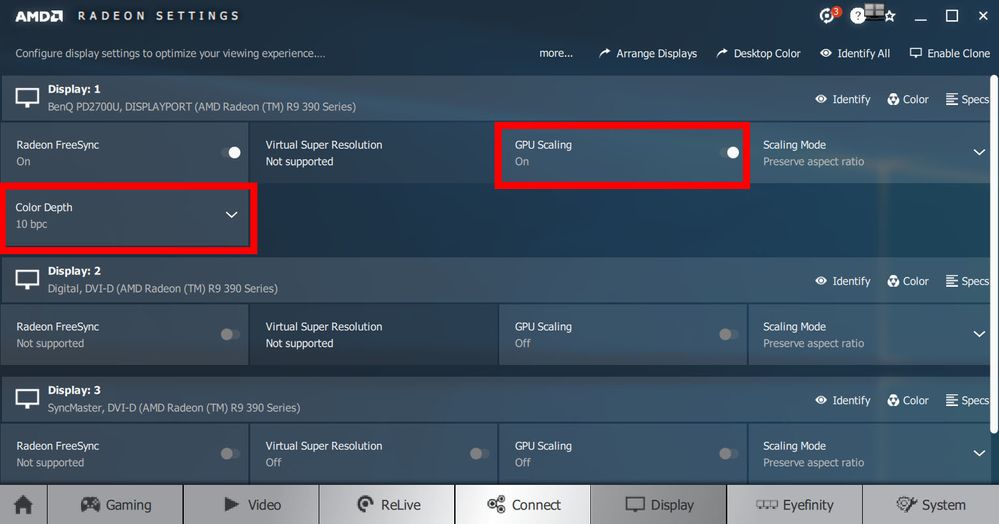

I had the same issue, but I fixed it in AMD Radeon Settings. I enabled the GPU Scaling option, and then 10-bit became available.

---

Hi, I have the same issue. Here is my gear:

Graphics: Ryzen 5 2400G (Vega 11)

Mainboard: Gigabyte Aorus B450 M

Monitor: 27UD58-B

Connection: Certified Premium HDMI Cable

Driver: 19.20.17.01-190715a-344742E

EDID:

```
00 FF FF FF FF FF FF 00 1E 6D 08 5B DD 9B 05 00
03 1D 01 03 80 3C 22 78 EA 30 35 A7 55 4E A3 26
0F 50 54 21 08 00 71 40 81 80 81 C0 A9 C0 D1 C0
81 00 01 01 01 01 08 E8 00 30 F2 70 5A 80 B0 58
8A 00 C0 1C 32 00 00 1E 04 74 00 30 F2 70 5A 80
B0 58 8A 00 C0 1C 32 00 00 1A 00 00 00 FC 00 4C
47 20 55 6C 74 72 61 20 48 44 0A 20 00 00 00 10
00 00 00 00 00 00 00 00 00 00 00 00 00 00 01 1B
02 03 30 70 4D 90 22 20 05 04 03 02 01 61 60 5D
5E 5F 23 09 07 07 6D 03 0C 00 10 00 B8 3C 20 00
60 01 02 03 67 D8 5D C4 01 78 80 03 E3 0F 00 06
02 3A 80 18 71 38 2D 40 58 2C 45 00 E0 0E 11 00
00 1A 56 5E 00 A0 A0 A0 29 50 30 20 35 00 80 68
21 00 00 1A 00 00 00 00 00 00 00 00 00 00 00 00
00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 3D
```

3840x2160 @ 60 Hz, YCbCr 4:2:2: 10-bit does not work, contrary to the monitor's specs. Only 8-bit is available.

3840x2160 @ 30 Hz, YCbCr 4:2:2: 10-bit works fine, but 30 Hz is really... meh.

---

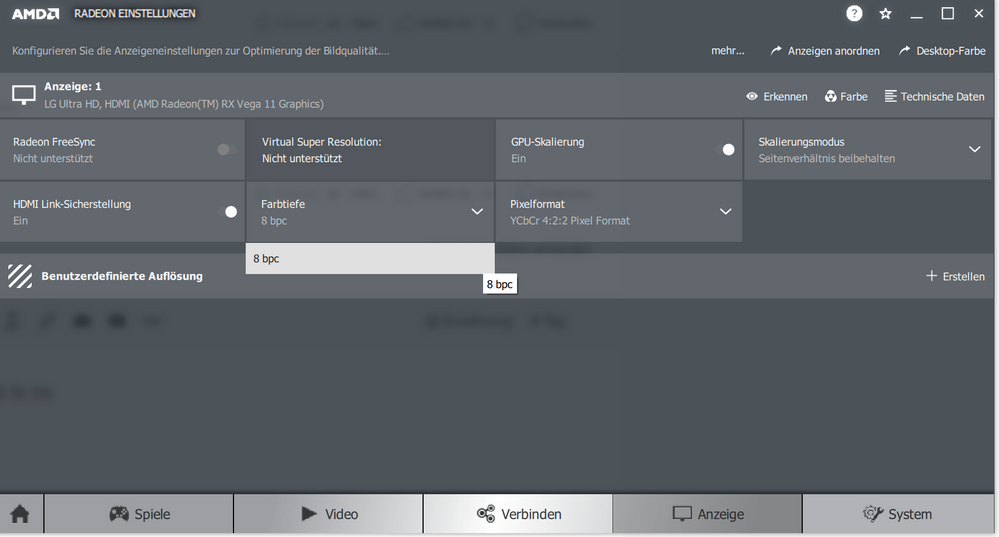

You need to enable GPU Scaling in AMD Radeon Settings, and then 10-bit becomes available.

Please check the screenshot below.

I think you also need to be connected via DisplayPort 1.4.

---

Yes, DisplayPort 1.2 or 1.4.

---

Hm, GPU-Scaling does not work for me. Unfortunately, my mainboard has only HDMI and DVI...

---

Hi, I have a similar problem, but GPU Scaling does not resolve it.

The Color Depth option offers 6 bpc and 8 bpc when the monitor is connected via DisplayPort, but if I select 8 bpc, it reverts to 6 bpc.

If the monitor is connected via HDMI instead, I can select 8 bpc and the Color Depth setting works fine.

Thank you

Monitor: ACER CB272 A (27"; 1920x1080; DP 1.2; HDMI 1.4)

GPU: AORUS Radeon RX 5700 XT 8G (rev. 1.0)

Cable: IVANKY LANVADA-DD01P (DisplayPort 1.4)

---

I solved the Color Depth issue.

I just downloaded the Custom Resolution Utility (CRU): https://custom-resolution-utility.en.lo4d.com/windows

Now my AMD GPU works fine with 8 bpc selected.

---

Enabling and Configuring GPU Scaling | AMD

GPU Scaling Overview

Most games are designed to support multiple resolutions with different aspect ratios. The aspect ratio is the proportional relationship between width and height of the image. For example, an image with a resolution of 1280x1024 (width x height) has an aspect ratio of 5:4, while an image with a resolution of 1920x1080 has a 16:9 aspect ratio.
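The aspect ratio in these examples is simply the resolution reduced to lowest terms. A minimal Python sketch (the helper name `aspect_ratio` is illustrative):

```python
from math import gcd
from typing import Tuple


def aspect_ratio(width: int, height: int) -> Tuple[int, int]:
    """Reduce width:height by their greatest common divisor."""
    g = gcd(width, height)
    return width // g, height // g


if __name__ == "__main__":
    for w, h in ((1280, 1024), (1920, 1080), (1680, 1050), (1920, 1200)):
        rw, rh = aspect_ratio(w, h)
        print(f"{w}x{h} -> {rw}:{rh}")
```

Reduction gives 5:4 and 16:9 for the two resolutions above, and 8:5 for 1680x1050 and 1920x1200, which is the ratio conventionally written as 16:10.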

The GPU Scaling option within Radeon Settings allows rendering games and content requiring a specific aspect ratio to fit on a display of a different aspect ratio. GPU Scaling can be applied using one of the following modes:

- Preserve aspect ratio - Expands the current image to the full size of the monitor while maintaining the aspect ratio of the image size. For example, at a resolution of 1280x1024 (5:4 aspect ratio), the screen will have black bars on the left and right side.

- Full panel - Expands the current image to the full size of the monitor for non-native resolutions. For example, at a resolution of 1280x1024 (5:4 aspect ratio), the screen will stretch to fill the monitor.

- Center - Turns off image scaling and centers the current image for non-native resolutions. Black bars may appear around the image. For example, setting a 24" monitor (native resolution of 1920x1200) at a resolution of 1680x1050 (16:10 aspect ratio) or lower will place a black border around the monitor.
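The three modes differ only in how the source image is sized on the panel. The Python sketch below computes that geometry under stated assumptions: it uses a 1920x1080 panel for the Preserve aspect ratio example (the article does not name one), ignores overscan and filtering, and is not how the driver is implemented. `scaled_image` and `black_bars` are hypothetical helpers.

```python
from typing import Tuple

Size = Tuple[int, int]  # (width, height) in pixels


def scaled_image(src: Size, panel: Size, mode: str) -> Size:
    """Displayed image size for a GPU Scaling mode (geometry only)."""
    (sw, sh), (pw, ph) = src, panel
    if mode == "full":      # Full panel: stretch to fill, aspect ratio not kept
        return pw, ph
    if mode == "center":    # Center: no scaling, image shown 1:1
        return sw, sh
    if mode == "preserve":  # Preserve aspect ratio: largest fit at source ratio
        scale = min(pw / sw, ph / sh)
        return round(sw * scale), round(sh * scale)
    raise ValueError(f"unknown mode: {mode}")


def black_bars(src: Size, panel: Size, mode: str) -> Size:
    """Black bar width (left/right) and height (top/bottom), per side."""
    w, h = scaled_image(src, panel, mode)
    # Integer division: with an odd remainder one side gets an extra pixel.
    return (panel[0] - w) // 2, (panel[1] - h) // 2


if __name__ == "__main__":
    # Hypothetical 1920x1080 panel for the 1280x1024 examples.
    print(black_bars((1280, 1024), (1920, 1080), "preserve"))
    print(black_bars((1680, 1050), (1920, 1200), "center"))
    print(black_bars((1280, 1024), (1920, 1080), "full"))
```

Under that assumption, 1280x1024 in Preserve aspect ratio mode is scaled to 1350x1080 with 285-pixel bars on the left and right, and 1680x1050 in Center mode on a 1920x1200 panel leaves a 120-pixel border on each side and a 75-pixel border at the top and bottom.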

Enabling GPU Scaling and Selecting the Desired Scaling Mode

To enable GPU Scaling within Radeon Settings, the following conditions must be met. Otherwise, the GPU Scaling option will not be available:

- A digital connection must be used. A digital connection is one of the following:

- DVI

- HDMI

- DisplayPort/Mini DisplayPort

- The display must be set to its native resolution and refresh rate. For instructions on how to set resolution and refresh rate for a display, please refer to article: Adjusting Display Brightness, Resolution and Refresh Rate.

- The latest supported graphics driver for your AMD Graphics product should be installed. For instructions on how to install the latest graphics driver, please refer to article: How to Install Radeon™ Software on a Windows® Based System

To enable GPU Scaling, follow the steps below:

- Open Radeon™ Settings by right-clicking on your desktop and selecting AMD Radeon Settings.

- Select Display.

- Toggle the GPU Scaling option to On. The screen will go black momentarily while GPU Scaling is being enabled.

- Once GPU Scaling is enabled, select the desired mode by clicking on the Scaling Mode option.

The available scaling modes are Preserve aspect ratio, Full panel and Center, as described in the GPU Scaling Overview above.

NOTE! Scaling Mode will be applied immediately once selected. Close Radeon Settings to exit.

NOTE! This feature is not designed to fix the issue of black borders on HDTV. To resolve black borders on HDTV, please refer to article: Unable to adjust a digital display to match the resolution of the desktop.

---

On bandwidth-limited links (for example, 4K at 60 Hz over HDMI 2.0), YCbCr 4:4:4 and RGB 4:4:4 don't support 10- or 12-bit color on most devices. Set the pixel format to YCbCr 4:2:2 to use 10- or 12-bit color depth.
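Whether 10 or 12 bpc is offered at 4:4:4 depends mainly on the link bandwidth at the chosen timing. The Python sketch below estimates the HDMI TMDS clock for the standard 594 MHz 3840x2160 @ 60 Hz timing, assuming that deep-color 4:4:4 raises the clock by bpc/8, that YCbCr 4:2:2 is carried at up to 12 bpc at the 8-bit clock, that 4:2:0 halves the clock before that scaling, and that the limits are 340 MHz (HDMI 1.4) and 600 MHz (HDMI 2.0). HDMI 2.1 FRL and DisplayPort are not modeled, the helper names are hypothetical, and actual driver behavior can differ.

```python
"""Approximate HDMI TMDS clock per pixel format and bit depth (sketch)."""

# Maximum TMDS character rates by HDMI version, in MHz (HDMI 2.1 FRL not modeled).
HDMI_MAX_TMDS_MHZ = {"1.4": 340.0, "2.0": 600.0}


def tmds_clock_mhz(pixel_clock_mhz: float, bpc: int, pixel_format: str) -> float:
    if pixel_format == "4:2:2":
        if bpc > 12:
            raise ValueError("HDMI YCbCr 4:2:2 carries at most 12 bpc")
        return pixel_clock_mhz  # packed into 24 bits per pixel, no clock increase
    if pixel_format == "4:2:0":
        return pixel_clock_mhz / 2 * bpc / 8
    if pixel_format == "4:4:4":  # RGB or YCbCr 4:4:4
        return pixel_clock_mhz * bpc / 8
    raise ValueError(f"unknown pixel format: {pixel_format}")


def fits_hdmi(pixel_clock_mhz: float, bpc: int, pixel_format: str,
              version: str = "2.0") -> bool:
    clock = tmds_clock_mhz(pixel_clock_mhz, bpc, pixel_format)
    return clock <= HDMI_MAX_TMDS_MHZ[version]


if __name__ == "__main__":
    for fmt in ("4:4:4", "4:2:2", "4:2:0"):
        for bpc in (8, 10, 12):
            ok = fits_hdmi(594.0, bpc, fmt)
            print(f"3840x2160@60 {fmt} {bpc} bpc: "
                  f"{tmds_clock_mhz(594.0, bpc, fmt):.2f} MHz, "
                  f"HDMI 2.0 {'ok' if ok else 'too fast'}")
```

By this estimate, 4K at 60 Hz over HDMI 2.0 tops out at 8 bpc for 4:4:4 (10 bpc would need 742.5 MHz), while 4:2:2 fits at 10 or 12 bpc, which is why switching to YCbCr 4:2:2 exposes the higher bit depths on such links.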