After achieving the AMD 25x20 Energy Efficiency Goal for mobile processors in 2020, the company’s engineers were hungry for a bold new challenge in processor energy efficiency, one that would benefit customers and our world. We have now set it: by 2025, AMD aims to deliver a 30x increase in energy efficiency in the rapidly growing Artificial Intelligence (AI) and High-Performance Computing (HPC) space.[1] The goal focuses on accelerated compute nodes in the data center that use AMD CPUs and AMD GPU accelerators for AI training and HPC applications.

Focused on minimizing energy consumption while meeting explosive demand for AI and HPC workloads, the new 30x goal will require the company to improve the energy efficiency of a compute node more than 2.5x faster than the aggregate industry-wide rate of improvement over the last five years.[2] The big picture? Compelling benefits from HPC and AI applications, while potentially saving billions of kilowatt-hours of electricity and reducing energy use per computation by 97% over the next five years.
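The headline numbers follow from simple arithmetic. The short Python sketch below assumes the five-year window runs from the 2020 baseline to 2025 and that energy per computation scales as the inverse of efficiency; under those assumptions it shows the compound yearly improvement a 30x goal implies and where the 97% figure comes from.

```python
# Arithmetic behind the 30x goal.
# Assumptions: five-year window (2020 baseline to 2025), and energy per
# computation is the inverse of efficiency (operations per unit energy).
GAIN = 30.0   # target efficiency multiple
YEARS = 5     # length of the goal window

# Compound yearly improvement needed to reach GAIN after YEARS years.
annual_factor = GAIN ** (1 / YEARS)

# A 30x efficiency gain leaves 1/30 of the original energy per computation.
energy_reduction = 1 - 1 / GAIN

print(f"annual efficiency factor: {annual_factor:.2f}x")
print(f"energy-per-computation reduction: {energy_reduction:.1%}")
```

Running it prints an annual factor of about 1.97x (efficiency roughly doubling every year) and a reduction of 96.7%, which rounds to the 97% cited above.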

Background

Looking at current and projected computing demands, the AMD team decided that it would be most meaningful to focus on the fastest-growing segment of data center energy consumption: accelerated computing.

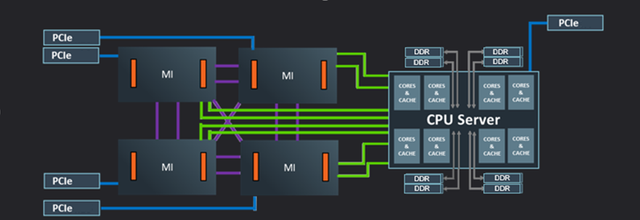

Accelerated compute node

Source: AMD

Accelerated compute nodes, essentially domain-specific configurations built for optimal compute performance, underpin the most powerful and advanced computing systems in the world. With these nodes, researchers can train AI neural networks and deploy large-scale supercomputers to conduct the critical research behind the most promising and urgently needed scientific breakthroughs of our time.

Over the next decade, AI and HPC deployments will drive significant developments across a variety of fields such as climate prediction, genomics, drug discovery, alternative energy, materials science and speech and image recognition, and researchers may identify other important areas too.

Critical as they are, these workloads require significant computing power and can therefore be energy intensive. With global demand for AI and HPC deployments exploding, these two areas could come to dominate energy consumption within data centers in the coming years unless companies take action.

Why It Matters

AMD recognizes the growing yet unmet need to drive durable, sustainable energy efficiency improvements in accelerated computing. This vision also expands on the company’s long-term commitment to environmental stewardship, product energy efficiency and environmental, social and governance (ESG) practices. To continue delivering the computing power our world needs, AMD is inspired to innovate and find new approaches that bend the energy efficiency curve upward, even as the industry sees diminishing gains from manufacturing improvements at smaller process nodes.

In short, delivering a 30x energy efficiency increase by 2025 would support growth in some of the world’s most intensive computing needs while taking steps to mitigate the associated risk of greater energy use.

How We’ll Get There

To achieve this goal, AMD engineers will prioritize driving major efficiency gains in accelerated compute nodes through architecture, silicon design, software and packaging innovations while publicly benchmarking our progress annually.

Upon learning about this new AMD goal, industry analyst Addison Snell, the CEO of Intersect360 Research, said, “A 30-times improvement in energy efficiency in five years will be an impressive technical achievement.”

Snell is right: if achieved, this will be an impressive feat, and success is certainly not a foregone conclusion. The same was true for the AMD 25x20 Energy Efficiency Initiative, but we are excited to take on this challenge and deliver radical energy efficiency improvements in the near future. To learn more about the new energy efficiency initiative, visit: amd.com/en/corporate-responsibility/data-center-sustainability.

by Sam Naffziger

Senior Vice President, Corporate Fellow and Product Technology Architect

[1] Includes high-performance CPUs and GPU accelerators used for AI training and High-Performance Computing in a 4-accelerator, CPU-hosted configuration. Goal calculations divide performance scores, as measured by standard performance metrics (HPC: Linpack DGEMM kernel FLOPS with 4k matrix size; AI training: lower-precision, training-focused floating-point GEMM kernels such as FP16 or BF16 FLOPS operating on 4k matrices), by the rated power consumption of a representative accelerated compute node, including the CPU host, memory and 4 GPU accelerators.

[2] Based on 2015–2020 industry trends in energy efficiency gains and projected data center energy consumption in 2025.
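Footnote [1] defines the goal metric as a ratio: GEMM throughput divided by the rated power of the whole node (CPU host, memory and four GPU accelerators). The Python sketch below expresses that ratio. The class and function names are hypothetical, and real inputs would have to come from measured kernel throughput and rated component power for an actual node, not from this code.

```python
from dataclasses import dataclass


@dataclass
class AcceleratedNode:
    """Hypothetical model of a 4-accelerator, CPU-hosted compute node."""
    gemm_flops: float      # measured GEMM throughput in FLOP/s (FP64 DGEMM for HPC, FP16/BF16 for AI)
    cpu_power_w: float     # rated CPU host power, watts
    memory_power_w: float  # rated memory power, watts
    gpu_power_w: float     # rated power of one GPU accelerator, watts
    num_gpus: int = 4      # footnote [1] specifies a 4-accelerator configuration

    def rated_power_w(self) -> float:
        # Whole-node rated power: CPU host + memory + all accelerators.
        return self.cpu_power_w + self.memory_power_w + self.num_gpus * self.gpu_power_w

    def efficiency(self) -> float:
        # Performance per watt: FLOP/s divided by watts, i.e. FLOP per joule.
        return self.gemm_flops / self.rated_power_w()


def improvement(baseline: AcceleratedNode, candidate: AcceleratedNode) -> float:
    """Efficiency multiple of candidate over baseline (the goal targets 30x)."""
    return candidate.efficiency() / baseline.efficiency()
```

Because power sits in the denominator for the entire node, gains can come from higher kernel throughput, lower rated power on any component, or both, which matches the mix of architecture, silicon, software and packaging levers described under How We’ll Get There.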