Hello,

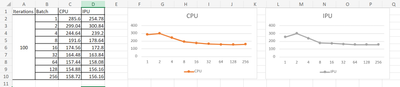

I have been running inference with several models on the Ryzen AI IPU. When measuring the throughput of these models (e.g., InceptionV3) on the IPU and on the CPU, I observed that the throughput is almost the same on both (see the attached screenshot).
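For reference, here is a minimal sketch of a typical throughput measurement, assuming inference is wrapped in a zero-argument callable, e.g. `lambda: session.run(None, {input_name: batch})` for an ONNX Runtime session. `measure_throughput` and its parameters are illustrative names, not part of any Ryzen AI API. Warm-up runs are excluded because the first calls may include one-time compilation or allocation that would skew a short benchmark.

```python
import time
from typing import Callable


def measure_throughput(run_fn: Callable[[], object], batch_size: int = 1,
                       n_warmup: int = 10, n_iter: int = 100) -> float:
    """Return throughput in images per second for a zero-argument inference callable."""
    # Warm-up runs are not timed: early calls may include one-time setup cost.
    for _ in range(n_warmup):
        run_fn()
    start = time.perf_counter()
    for _ in range(n_iter):
        run_fn()
    elapsed = time.perf_counter() - start
    # Each call processes batch_size images.
    return n_iter * batch_size / elapsed
```

Running the same function against a CPU-only session and an IPU session keeps the two numbers directly comparable.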

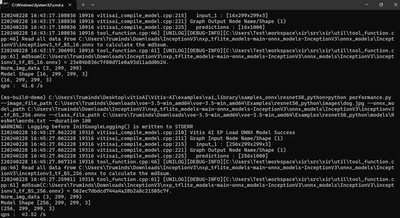

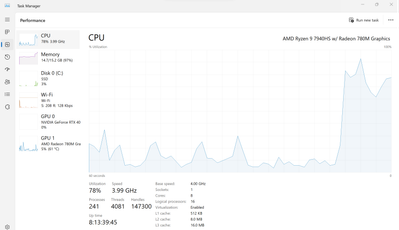

Also, while running inference on the IPU (the attached screenshot shows the message "Vitis AI EP load ONNX model success", indicating that the model was loaded for IPU acceleration), I monitored Task Manager and saw CPU utilization rise to 70-78% (see the attached screenshot). It looks as if many layers of the model are falling back to the CPU even though the model is accelerated on the IPU.

Is there any way to find out which layers are executed on the IPU and which fall back to the CPU? Also, is there any other explanation for why the CPU and IPU throughput results are almost the same?
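One approach I am considering is ONNX Runtime's built-in profiler: set `SessionOptions.enable_profiling = True` before creating the session, run inference, then call `session.end_profiling()` to get the path of the JSON profile. The sketch below summarizes such a file per execution provider. It assumes the profile is a JSON array of Chrome-trace events in which per-node kernel events have `"cat": "Node"`, a name ending in `_kernel_time`, a `"dur"` in microseconds, and `args["provider"]`; that format is my assumption and should be checked against an actual profile. `summarize_by_provider` is a hypothetical helper name.

```python
import json
from collections import Counter


def summarize_by_provider(profile_events):
    """Return (node count, summed kernel time in microseconds) per execution provider."""
    nodes = {}            # node event name -> provider that ran it
    time_us = Counter()   # provider -> total kernel time across all runs
    for event in profile_events:
        # Assumed format: only per-node kernel events carry the provider.
        if event.get("cat") != "Node" or not event.get("name", "").endswith("_kernel_time"):
            continue
        provider = event.get("args", {}).get("provider")
        if provider is None:
            continue
        nodes[event["name"]] = provider  # dedupe nodes seen in several runs
        time_us[provider] += event.get("dur", 0)
    return Counter(nodes.values()), time_us


def load_profile(path):
    """Load the JSON file whose path is returned by session.end_profiling()."""
    with open(path) as f:
        return json.load(f)
```

If the IPU-placed operators are fused into a single subgraph node, that subgraph counts only once, so the time split per provider is likely more informative than the node counts.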

Regards,

Ashima