Authors: Bingqing Guo (AMD), Cheng Ling (AMD), Haichen Zhang (AMD), Guru Madagundapaly Parthasarathy (AMD), Xiuhong Li (Infinigence, GPU optimization technical lead)

The emergence of large language models (LLMs) such as ChatGPT and Llama has shown us the huge potential of generative AI, which is contributing to an unprecedented technological revolution today. However, the opportunities that come with generative AI are always accompanied by challenges, such as high training and inference costs for hardware infrastructure and the rapid iteration of models and inference frameworks. Through powerful GPU computing platforms such as AMD Instinct™ accelerators and AMD Radeon™ GPUs, together with AMD ROCm™ software, AMD offers developers and the AI community alternative solutions for AI training and inference. Meanwhile, by establishing an open AI software ecosystem, AMD enables more partners to contribute various AI acceleration solutions, particularly for large AI models on AMD GPU platforms.

INFINIGENCE and LLM Acceleration on AMD GPU Platforms

INFINIGENCE, a newly established tech company founded by top talent from Tsinghua University and major Internet companies, is committed to optimizing LLM training and inference to improve the economic efficiency of generative AI applications. INFINIGENCE is actively improving inference performance and making it easier to adapt LLMs to diverse hardware. Its recently introduced FlashDecoding++, a state-of-the-art LLM inference engine, offers up to 3X speedup on both an AMD Radeon™ RX 7900XTX GPU and an AMD Instinct™ MI210 accelerator compared to mainstream PyTorch implementations¹,², a good example of leveraging the AMD open AI platform to provide developers with alternative acceleration solutions.

The core idea of FlashDecoding++ is to achieve true parallelism in the attention computation through asynchronous methods and to optimize computation in the decode phase. Key features of this implementation include:

- Asynchronized softmax with a unified max value. FlashDecoding++ uses a single, shared max value for all partial softmax computations, so the partial results can be computed without synchronizing with one another. Building on this, it applies fine-grained pipelining.

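- Flat GEMM optimization with double buffering. FlashDecoding++ observes that flat GEMMs (matrix multiplications in which one dimension is very small, as is typical during decoding) face different bottlenecks depending on their shapes, and it introduces techniques such as double buffering to address them.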

- Heuristic dataflow with hardware resource adaptation. FlashDecoding++ heuristically optimizes the dataflow by choosing among different hardware resources (e.g., Tensor Cores or CUDA cores) according to the input dynamics.
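The asynchronized softmax idea can be illustrated with a small pure-Python sketch. It is not INFINIGENCE's kernel: the shared value `phi`, the chunking, and the `bound` fallback threshold are hypothetical choices for illustration. Because softmax does not change when the same constant is subtracted from every input, each chunk can compute `exp(x - phi)` and its local sum independently, and only one final reduction over the partial sums is needed. The conventional method instead needs the global max first, or must rescale earlier partial results whenever a larger max appears. If an input lies too far from `phi`, the sketch falls back to the synchronized computation, in the spirit of the recomputation fallback described in the paper.

```python
import math
from typing import List, Tuple


def reference_softmax(scores: List[float]) -> List[float]:
    """Conventional 'safe' softmax: needs the global max before any exp()."""
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def partial_exp(chunk: List[float], phi: float) -> Tuple[List[float], float]:
    """Work for one partial block: shift by the shared value phi, no local max."""
    exps = [math.exp(x - phi) for x in chunk]
    return exps, sum(exps)


def unified_max_softmax(scores: List[float], num_chunks: int,
                        phi: float, bound: float = 20.0) -> List[float]:
    """Softmax over non-empty `scores` using one shared shift value `phi`.

    `phi` and `bound` are illustrative assumptions, not values from the paper.
    """
    # If any score is too far from phi, exp() could overflow/underflow in low
    # precision, so fall back to the synchronized (global-max) computation.
    if any(abs(x - phi) > bound for x in scores):
        return reference_softmax(scores)
    size = math.ceil(len(scores) / num_chunks)
    chunks = [scores[i:i + size] for i in range(0, len(scores), size)]
    # Each chunk is independent: no cross-chunk max exchange and no rescaling
    # of earlier partial sums (on a GPU, these would run in parallel).
    partials = [partial_exp(c, phi) for c in chunks]
    total = sum(s for _, s in partials)  # single reduction at the end
    return [e / total for exps, _ in partials for e in exps]


scores = [1.2, -0.5, 3.3, 2.1, 0.0, 4.7, -1.8, 2.9]
out = unified_max_softmax(scores, num_chunks=4, phi=3.0)
```

With `phi=3.0`, every shifted score stays within `bound`, so the chunked result matches the reference softmax up to rounding error; an input such as `[100.0, 0.0]` with `phi=0.0` takes the fallback path instead.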

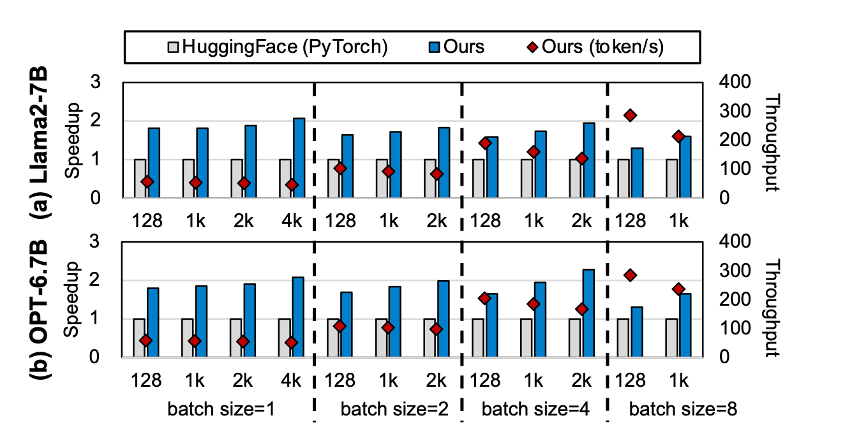

Figure 1: Decode-phase speedup of FlashDecoding++ (proposed by INFINIGENCE, i.e., ‘Ours’ in the figure) for (a) Llama2-7B and (b) OPT-6.7B on an AMD Radeon™ RX 7900XTX. Throughput is indicated by the red labels, and the speedup over un-optimized PyTorch models hosted on Hugging Face (baseline, gray bars) is indicated by the blue bars¹.

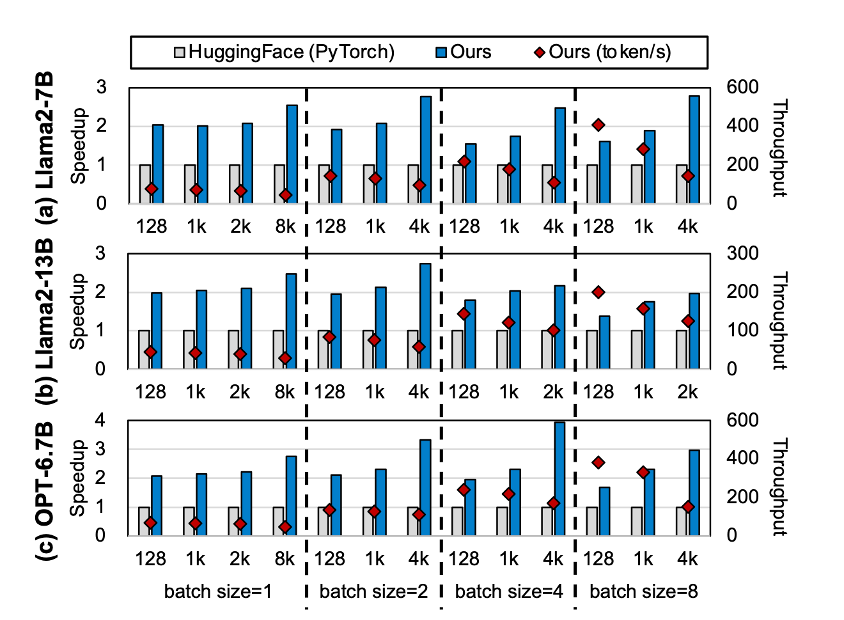

Figure 2: Decode-phase speedup of FlashDecoding++ (proposed by INFINIGENCE, i.e., ‘Ours’ in the figure) for (a) Llama2-7B, (b) Llama2-13B, and (c) OPT-6.7B on an AMD Instinct™ MI210 accelerator. Throughput is indicated by the red labels, and the speedup over un-optimized PyTorch models hosted on Hugging Face (baseline, gray bars) is indicated by the blue bars².

More Optimizations

Technical innovation is the core driving force at INFINIGENCE, which continuously enhances the performance and efficiency of large-model technologies. In addition to the FlashDecoding++ inference engine for GPUs, INFINIGENCE applies optimizations such as sparse attention and low-bit quantization, which considerably reduce the model's storage footprint and aim to minimize computational workload and GPU memory requirements. INFINIGENCE's in-house inference engine achieves a significant increase in inference speed through efficient fine-tuning systems, adaptive compilation, and effective communication compression technologies.
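To give a sense of how low-bit quantization shrinks model storage, the sketch below shows generic symmetric, group-wise 4-bit weight quantization. It does not describe INFINIGENCE's method; the 4-bit width, the group size of 128, and the fp16 scale stored per group are assumptions. Each weight becomes a small signed integer plus a share of one per-group scale, so the average cost is 4 + 16/128 = 4.125 bits per weight instead of 16 bits for fp16, roughly a 3.9x reduction.

```python
import math
from typing import List, Tuple


def quantize_group(weights: List[float], bits: int = 4) -> Tuple[List[int], float]:
    """Symmetric quantization of one group of weights to signed `bits`-bit ints."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit
    # One scale per group; `or 1.0` avoids a zero scale for an all-zero group.
    scale = max(abs(w) for w in weights) / qmax or 1.0
    q = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize_group(q: List[int], scale: float) -> List[float]:
    """Reconstruct approximate weights from the integers and the group scale."""
    return [v * scale for v in q]


def bits_per_weight(bits: int = 4, group_size: int = 128, scale_bits: int = 16) -> float:
    """Average storage cost: the integer itself plus its share of the group scale."""
    return bits + scale_bits / group_size


weights = [math.sin(0.37 * i) * 0.05 for i in range(128)]  # one synthetic group
q, scale = quantize_group(weights)
restored = dequantize_group(q, scale)
compression_vs_fp16 = 16 / bits_per_weight()
```

Packing two 4-bit values per byte, which is needed to actually realize the saving in memory, is omitted for brevity.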

Conclusion

Optimized implementations like these further enrich the AMD AI developer community and help the highly efficient AMD Instinct™ accelerators process complex AI workloads such as LLMs, making it possible to provide data center users with a complete set of inference solutions that meet high-throughput and low-latency requirements. AMD is empowering more ecosystem partners and AI developers by building open software platforms such as ROCm™, ZenDNN™, Vitis™ AI, and Ryzen™ AI software for innovation on GPUs, CPUs, and adaptive SoCs.

Call to Action

To get more details about FlashDecoding++ LLM inference optimization or to try INFINIGENCE technology, please contact sales@infini-ai.com. For more insight into AMD AI acceleration solutions and developer ecosystem plans, or for any other questions, feel free to email amd_ai_mkt@amd.com.

FOOTNOTES

Use or mention of third-party marks, logos, products, services, or solutions herein is for informational purposes only and no endorsement by AMD is intended or implied. GD-83.

1. Data was tested and published by Infinigence in November 2023 in the paper "FlashDecoding++: Faster Large Language Model Inference on GPUs" (https://arxiv.org/pdf/2311.01282v4.pdf). Data was verified by AMD AIG marketing in December 2023. The testing was performed on a platform with an AMD Radeon RX 7900XTX GPU, ROCm 5.6, and an Intel Core i9-10940X CPU. Testing models are OPT-6.7B and Llama2-7B. Results may vary based on hardware and system configuration and other factors.

2. Data was tested and published by Infinigence in November 2023 in the paper "FlashDecoding++: Faster Large Language Model Inference on GPUs" (https://arxiv.org/pdf/2311.01282v4.pdf). Data was verified by AMD AIG marketing in December 2023. The testing was performed on a platform with an AMD Instinct MI210 accelerator, ROCm 5.7, and an AMD EPYC™ 7K62 CPU. Testing models are Llama2-7B and Llama2-13B. Results may vary based on hardware and system configuration and other factors.