From product recommendations to autonomous vehicles to online chatbots, artificial intelligence (AI) is becoming increasingly prevalent in our daily lives. Every time we interact with these applications, we rely on trained neural network models running a deep learning process called inference. Given the range of applications that employ inference, it is likely to run across different hardware targets deployed in the cloud, at the edge, and on endpoints. This hardware heterogeneity creates software challenges, especially because developers often find themselves building inference applications for different hardware targets using independent software stacks.

Tencent’s Real-World Use Cases

In recognition of this software challenge, Tencent recently released its Tencent Accelerated Computing Optimizer (TACO) Kit, a cloud service that aims to allow all developers, regardless of their AI experience level, to perform deep learning training and inference using their choice of model, framework, and hardware backend. While customers interact with a clean and simple user interface, Tencent’s TACO Kit does the heavy lifting under the hood to help ensure that each customer’s workload runs optimally. To harness the best inference performance from AMD EPYC™ CPU backends, Tencent incorporates ZenDNN, AMD’s AI inference library, into the TACO inference pathway, also known as TACO Infer.

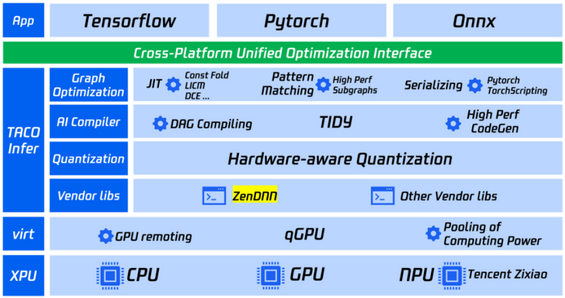

Diagram 1. Architectural overview of Tencent’s TACO Infer.

Diagram 1 above gives an architectural overview of Tencent’s TACO Infer. Prior to TACO Infer, the burden of managing multiple hardware targets separately for inference deployment and performance tuning fell on Tencent’s cloud users. Given the breadth of model frontend types, hardware backends, and software versions, users could find themselves overwhelmed by the number of combinations, and the abundance of community tools adds another dimension of complexity. Instead of evaluating which solution is most cost-effective and performant, users tend to opt for familiar choices because of the time and effort involved in exploring alternatives. This prevents cloud users from matching their applications with the most appropriate computing type and from fully realizing the potential of cloud computing.

TACO Infer aims to address this issue by providing users who want accelerated computing with a unified, cross-platform optimization interface and easy access to highly optimized heterogeneous computing power in Tencent Cloud. The extensible design of TACO Infer facilitates the integration of ZenDNN as a kernel backend when targeting AMD EPYC processor-based devices. For ease of use, TACO Infer requires no changes to deep learning frameworks such as TensorFlow or PyTorch. Linking an application with TACO’s software development kit (SDK) is sufficient to enable optimizations, including those provided by ZenDNN. Incorporating ZenDNN further increases the performance gain that TACO can achieve, as demonstrated by the data points that Tencent collected below:

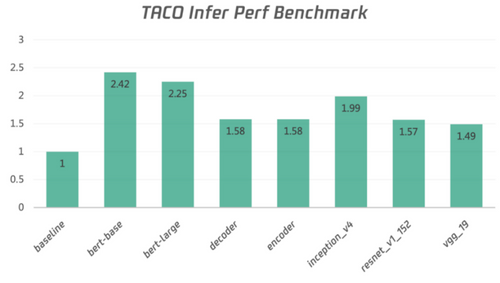

Diagram 2. Inference performance improvement using ZenDNN in TACO Infer compared to generic TensorFlow, both running on AMD EPYC CPU backends in Tencent testing.

Diagram 2 above depicts the throughput gain achieved in Tencent testing with the latest release of TACO Infer, using Tencent’s Cloud Bare Metal (CBM) standard SA2 instances and a latency-optimized batch size of 1. Across a variety of computer vision and natural language processing deep learning models, the optimizations enabled by TACO and ZenDNN jointly improve inference performance compared to the generic TensorFlow baseline.
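For readers who want to reason about this kind of comparison on their own workloads, the following is a minimal sketch of how a batch-size-1 throughput gain could be measured. It is not Tencent’s benchmark methodology and does not use the TACO or ZenDNN APIs. The functions `baseline_infer` and `optimized_infer` are hypothetical stand-ins for two inference paths, and `measure_throughput` and `speedup` are illustrative helper names.

```python
import time
from typing import Callable, Sequence


def measure_throughput(infer: Callable[[object], object],
                       samples: Sequence[object],
                       warmup: int = 10) -> float:
    """Return inferences per second when samples are fed one at a time (batch size 1)."""
    if not samples:
        raise ValueError("at least one sample is required")
    # Warm-up calls keep one-time costs (lazy initialization, caches) out of the timing.
    for sample in samples[:warmup]:
        infer(sample)
    start = time.perf_counter()
    for sample in samples:
        infer(sample)
    elapsed = time.perf_counter() - start
    return len(samples) / elapsed


def speedup(optimized_throughput: float, baseline_throughput: float) -> float:
    """Ratio of optimized to baseline throughput (1.0 means no change)."""
    if baseline_throughput <= 0:
        raise ValueError("baseline throughput must be positive")
    return optimized_throughput / baseline_throughput


# Hypothetical stand-ins: replace these with calls into the two real inference paths.
def baseline_infer(sample: int) -> int:
    return sum(i * sample for i in range(2000))


def optimized_infer(sample: int) -> int:
    return sum(i * sample for i in range(1000))


if __name__ == "__main__":
    inputs = list(range(200))
    base = measure_throughput(baseline_infer, inputs)
    opt = measure_throughput(optimized_infer, inputs)
    print(f"baseline:  {base:.1f} inferences/s")
    print(f"optimized: {opt:.1f} inferences/s")
    print(f"speedup:   {speedup(opt, base):.2f}x")
```

Feeding one sample per call mirrors the batch size of 1 used in the testing described above. A real measurement would also pin threads, repeat runs, and report variance, which this sketch omits.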

In support of the AMD-Tencent collaboration, the director of the Tencent Cloud Server department, Lidong Chen, comments, “AMD and Tencent are working together to optimize Tencent Accelerated Computing Optimizer (TACO) Kit to deliver the best AI user experience. By optimizing TACO with ZenDNN, we already see promising performance uplift running on AMD EPYC CPUs.”

Get Started!

To learn more about Tencent’s TACO Kit for inference, refer to Tencent’s TACO Kit documentation center.

To evaluate AMD’s ZenDNN AI inference library on AMD EPYC processors, refer to the ZenDNN Developer Central page.