Server Processors

IOMMU on KVM Guest NIC reduces throughput by factor of 10 on EPYC 7282

I'm trying out SEV on an EPYC 7282. Everything seems great until I try network operations. I noticed that KVM guests running with SEV were experiencing significantly reduced network performance. I then added <driver iommu="on"/> to a guest not running with SEV and saw the same problem. I set up a large data transfer from each guest and found that the guest with IOMMU enabled transferred at roughly 10% of the rate of a guest configured without it.

I've tried tinkering with IOMMU-related boot parameters to no avail. I'd simply ignore it and not use IOMMU, except that it is required for virtio devices running with SEV.

Any hints would be welcome. Here is the NIC configuration:

    <interface type="direct">
      <mac address="52:54:00:0b:a4:8f"/>
      <source dev="ens4f0" mode="bridge"/>
      <model type="virtio"/>
      <driver iommu="on"/>
      <address type="pci" domain="0x0000" bus="0x01" slot="0x00" function="0x0"/>
    </interface>

Hello sinanju,

Some questions:

- What operating system are you running exactly and what kernel?

- What is the version of KVM?

- What adapter?

- What's the platform?

- Have you tested bare-metal performance to confirm that the hardware itself is operating efficiently?

Also, have you consulted the Linux® Network Tuning Guide for AMD EPYC™ 7002 Series Processor Based Servers? It's written around bare metal performance, but can still be a starting point.

Thanks,

- Ubuntu 20.04, 5.4.0-40-generic #44-Ubuntu SMP Tue Jun 23 00:01:04 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

- QEMU emulator version 4.2.0 (Debian 1:4.2-3ubuntu6.1)

- Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)

- ThinkSystem SR655, type 7Z01

- Even from KVM guests not using IOMMU (and, so, not using SEV), I'm getting line-rate transfer speeds. Performance without IOMMU is ducky.

Yes, I have consulted that document, as well as some other sources. I've tried iommu=pt but saw no change.
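
When experimenting with boot parameters such as iommu=pt, it can help to confirm what the running kernel actually booted with. The following is a minimal sketch, assuming a Linux host; the function names are made up for illustration, and only the iommu, amd_iommu and intel_iommu parameters are inspected.

```python
def iommu_params(cmdline: str) -> dict:
    """Return the IOMMU-related parameters found in a kernel command line."""
    wanted = ("iommu", "amd_iommu", "intel_iommu")
    found = {}
    for token in cmdline.split():
        # Parameters look like key=value; bare flags have an empty value.
        key, _, value = token.partition("=")
        if key in wanted:
            found[key] = value
    return found


def read_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the command line of the running kernel (Linux only)."""
    with open(path) as f:
        return f.read()
```

On the host, `iommu_params(read_cmdline())` shows whether the setting survived the bootloader configuration. It says nothing about whether the setting affects a virtio device inside the guest.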

OK. We will have to take a closer look and get back to you. I am not sure we have that NIC available, though, if we need to reproduce the environment.

Hi again sinanju,

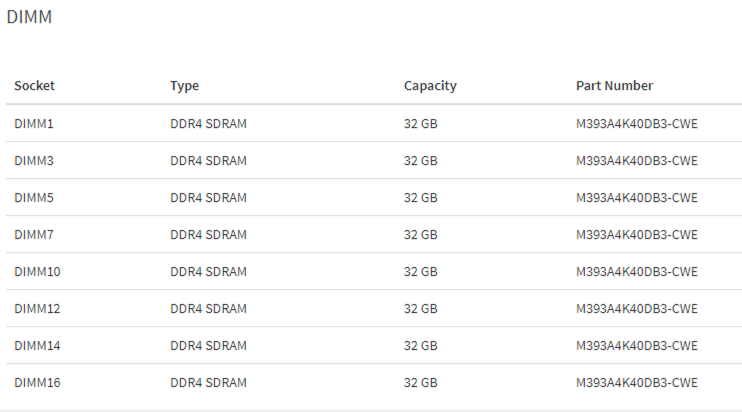

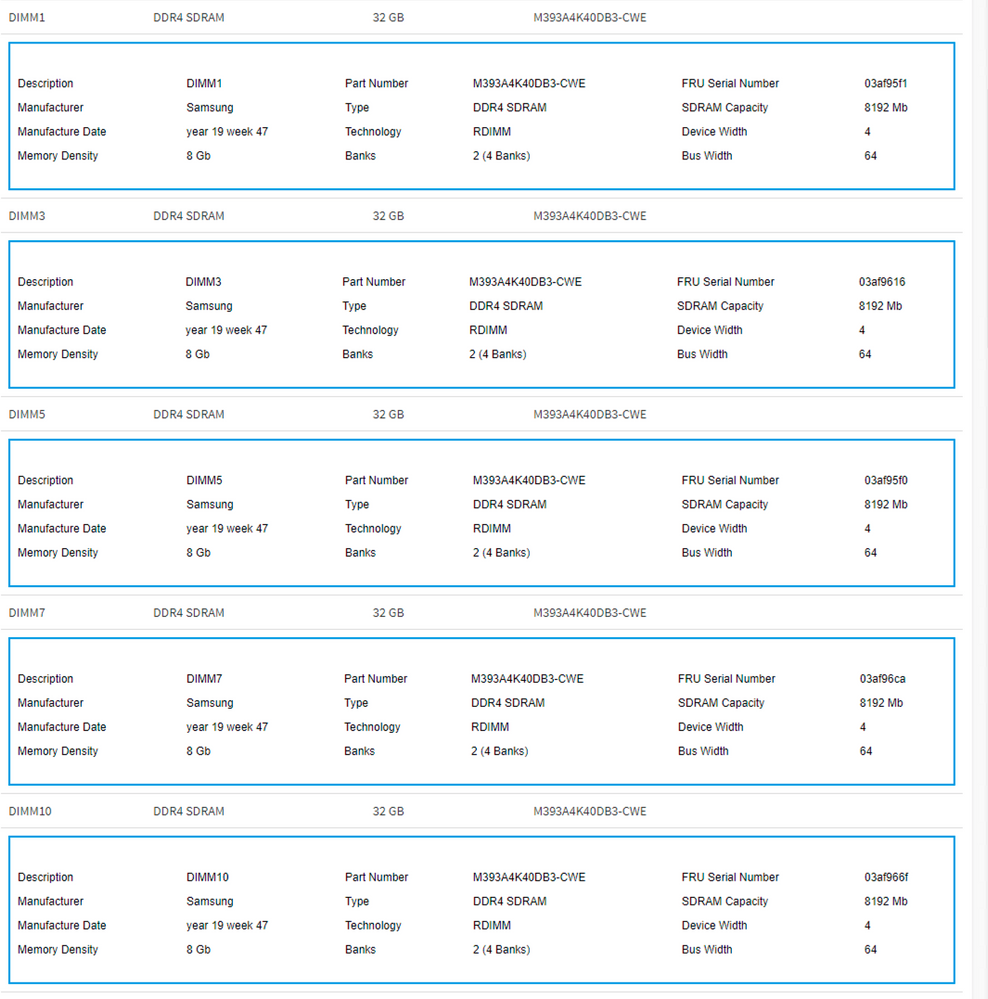

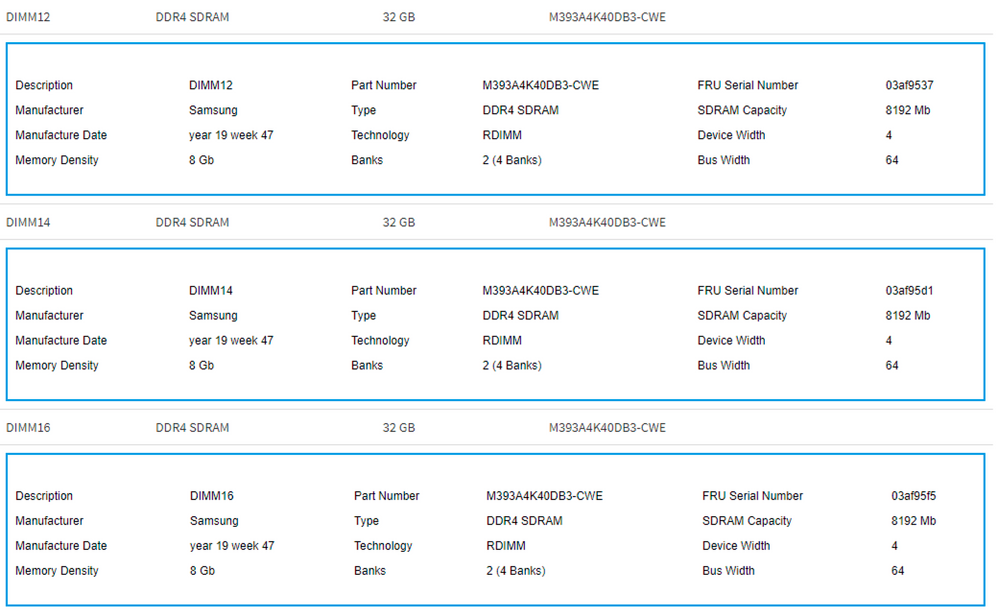

What is your memory configuration? How many DIMMs, what size and location?

You are not using all the memory slots. I have found that performance is better with all slots filled with RAM when running demanding workloads.

The manual says the machine has 16 memory slots in total. You have a few 32GB DIMMs on the board. The machine supports 128GB DIMMs, but those are brutally expensive.

I have a lot of experience with computer chess, which can use all the RAM you can imagine and run banks of servers into the dirt. Many chess enthusiasts with 4-CPU boards and a ton of RAM have lots of comments on what works and what does not.

I'm out of ideas tinkering with this. If you have any advice, I can pick it up again. Lenovo and Linux are constants in this equation for me. I'll keep an eye open for good news from your side... or, at least, confirmation of the bad news.

Thanks for your help.

I can add one more observation: There does not appear to be a general degradation of transfer performance. There appears to be a ceiling. I did some testing with wrk2 and up to a point non-SEV guests both with and without IOMMU perform similarly. But, as the demand is ratcheted up, guests with IOMMU seem to hit a speed limit.
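
One way to tell a ceiling from a general slowdown is to sweep the offered load and find the first point where the IOMMU guest stops keeping up with the baseline. The sketch below assumes the results of two such sweeps are available as dictionaries; the function name, the 0.9 tolerance and all the numbers are made up for illustration and are not measurements from this thread.

```python
def first_divergence(baseline, candidate, tolerance=0.9):
    """Return the lowest offered load at which candidate < tolerance * baseline.

    Both arguments map offered load (e.g. requests/s) to achieved throughput.
    Returns None if the candidate keeps up at every load present in both runs.
    """
    for load in sorted(set(baseline) & set(candidate)):
        if candidate[load] < tolerance * baseline[load]:
            return load
    return None


# Hypothetical sweep results: offered load -> achieved throughput.
no_iommu = {1000: 1000, 2000: 2000, 4000: 4000, 8000: 7900}
with_iommu = {1000: 1000, 2000: 1990, 4000: 2500, 8000: 2500}

print(first_divergence(no_iommu, with_iommu))
```

A uniform degradation would diverge at the lowest load already, whereas a ceiling shows up as matching results at low load followed by a flat line.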

We can wrap this up. I moved one of my guests to an Intel box and enabled IOMMU there. I'm seeing the same performance issue on Intel. I'm not seeing it on s390, though. So, this must be an Ubuntu/QEMU/x86_64 thing.

Thanks for the update. We suggest reviewing the full XML used to create the VM to ensure the NIC is defined properly.

Which is a reasonable thing to suggest, except the exemplar provided by libvirt.org is not different from what I am using.

The problem is not the NIC. Our lab has installed a different make/model NIC and the problem is unchanged. I have a:

- Non-SEV guest without IOMMU

- Non-SEV guest with IOMMU

- SEV guest with IOMMU

Only the first is able to transfer at line rate. The others transfer at roughly 10-15% of it.

Some additional info if you're going to reproduce it (many thanks, btw). I used the following as a starting point:

https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64.img

I used the following command line to create the guest domain:

    sudo virt-install \
      --name sev-guest \
      --memory 4096 \
      --memtune hard_limit=4563402 \
      --boot uefi \
      --disk /var/lib/libvirt/images/sev-guest.img,device=disk,bus=scsi \
      --disk /var/lib/libvirt/images/sev-guest-cloud-config.iso,device=cdrom \
      --os-type linux \
      --os-variant ubuntu20.04 \
      --import \
      --controller type=scsi,model=virtio-scsi,driver.iommu=on \
      --controller type=virtio-serial,driver.iommu=on \
      --network network=default,model=virtio,driver.iommu=on \
      --memballoon driver.iommu=on \
      --graphics none \
      --launchSecurity sev

The ISO simply changed the default password. I also tried replacing the hard limit with locked backing memory. No change.

To observe the transfer speeds, if you're going to try to reproduce this, I installed nginx. I used wget from another system to transfer a collection of 1 GB files.
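
For anyone who wants a number rather than watching wget's progress meter, the same measurement can be sketched in Python. This is only an illustration, assuming the guest serves a large file over HTTP as described above; the function names are hypothetical, and a single Python stream may not be able to saturate a 10GbE link, so treat the result as a relative comparison between guests.

```python
import time
import urllib.request


def gbit_per_s(num_bytes: int, seconds: float) -> float:
    """Convert a byte count and an elapsed time into gigabits per second."""
    return num_bytes * 8 / seconds / 1e9


def timed_download(url: str, chunk_size: int = 1 << 20) -> float:
    """Stream url, discard the body, and return the observed rate in Gbit/s."""
    start = time.monotonic()
    total = 0
    with urllib.request.urlopen(url) as response:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:
                break
            total += len(chunk)
    return gbit_per_s(total, time.monotonic() - start)
```

Calling `timed_download` with the URL of one of the 1 GB files on each guest in turn gives figures that can be compared directly.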

Lenovo ThinkSystem SR655 Server Product Guide > Lenovo Press

The network adapter is not mentioned in the manual, so it's likely not tested by Lenovo.

I have some PCIe SFP+ cards in my shop which support dual ports. They have worked in every machine I have tried them in, so I cannot see why the Intel device would be a problem.

I know that I was noticing problems with Hyper-V on my AMD desktop until a new BIOS fixed the problem. So I am wondering if there is a fault in the Lenovo BIOS, as AMD manages the BIOS rather than the board makers.

As a suggestion, see if Hyper-V runs OK on the machine. This will narrow the problem down to operating system vs. hardware.

Optimizing Linux for AMD EPYC™ 7002 Series Processors with SUSE Linux Enterprise 15 SP1

fixlocal wrote:

Optimizing Linux for AMD EPYC™ 7002 Series Processors with SUSE Linux Enterprise 15 SP1

I'm familiar with it. IOMMU is mentioned four times: once in the text and three times in a sample configuration file.

"Finally, any device that uses 'virtio' needs to gain an XML element looking like: <driver iommu='on'/>"

The sample configuration file is not substantially different than either the libvirt.org exemplar or my own.
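
Since every virtio device needs the <driver iommu='on'/> element under SEV, a quick scan of the domain XML (for example, the output of `virsh dumpxml`) can confirm none were missed. The following is a rough sketch using only the Python standard library; the function names are made up, and the rules for recognising a virtio device are a heuristic assumption, not libvirt's own logic.

```python
import xml.etree.ElementTree as ET


def is_virtio(dev: ET.Element) -> bool:
    """Heuristic guess at whether a child of <devices> is a virtio device."""
    model_child = dev.find("model")
    target = dev.find("target")
    return (
        # e.g. <controller model="virtio-scsi"> or <memballoon model="virtio">
        dev.get("model", "").startswith("virtio")
        # e.g. <controller type="virtio-serial">
        or dev.get("type") == "virtio-serial"
        # e.g. <interface> containing <model type="virtio"/>
        or (model_child is not None
            and model_child.get("type", "").startswith("virtio"))
        # e.g. <disk> containing <target bus="virtio"/>
        or (target is not None and target.get("bus") == "virtio")
    )


def virtio_without_iommu(domain_xml: str) -> list:
    """Return the tag names of virtio devices lacking <driver iommu="on"/>."""
    root = ET.fromstring(domain_xml)
    missing = []
    for dev in root.findall("./devices/*"):
        if not is_virtio(dev):
            continue
        driver = dev.find("driver")
        if driver is None or driver.get("iommu") != "on":
            missing.append(dev.tag)
    return missing


# Trimmed, hypothetical domain definition used only to exercise the check.
SAMPLE = """<domain type="kvm"><devices>
  <interface type="direct"><model type="virtio"/><driver iommu="on"/></interface>
  <memballoon model="virtio"/>
  <controller type="usb" model="qemu-xhci"/>
</devices></domain>"""

print(virtio_without_iommu(SAMPLE))
```

This only checks that the configuration is complete. It does not explain the throughput ceiling, which appeared in this thread even with a correctly defined NIC.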