4th Gen AMD EPYC™ Delivers Market-Leading Performance & Efficiency versus NVIDIA Grace Superchip

Industry benchmarks show single- and dual-socket 4th Gen AMD EPYC™ systems delivering between ~2.00x and ~3.70x higher performance and more than double the energy efficiency of a 2P NVIDIA Grace CPU Superchip system.

My previous blogs highlighted how 4th Gen AMD EPYC™ processors outperform both the latest 5th Gen Intel® Xeon® Platinum processors and Ampere® Altra® Max M128-30 processors for important workloads. Today, I’m going to delve into how 4th Gen AMD EPYC™ processors compare against NVIDIA Grace™ CPU Superchip processors in terms of both performance and energy efficiency.

4th Gen AMD EPYC processors continue setting new standards in datacenter performance, power efficiency, security, and total cost of ownership, driven by continuous innovation. The 4th Gen AMD EPYC™ processor portfolio offers cutting-edge on-premises and cloud-based solutions to address today’s demanding, highly varied workload requirements.

The extensive AMD EPYC ecosystem comprises over 250 distinct server designs and supports more than 800 unique cloud instances. AMD EPYC processors also hold over 300 world records for performance across a broad spectrum of benchmarks, including business applications, technical computing, data management, data analytics, digital services, media and entertainment, and infrastructure solutions.

NVIDIA recently launched their Grace™ CPU Superchip alongside some eye-opening performance comparisons. It's essential to evaluate these claims carefully because the benchmark results NVIDIA published are minimal and lack important system configuration details. By contrast, AMD consistently showcases superior performance and power efficiency through extensive industry-standard benchmark publications. For example, there are currently 5,927 official SPEC CPU® 2017 publications for AMD EPYC processors, compared to zero for NVIDIA Grace. As you will soon see, 4th Gen AMD EPYC processors deliver commanding energy efficiency and performance uplifts versus the NVIDIA processor.

Please be aware that I am only covering a subset of the tested workloads in this blog. The x86 processor architecture supports a broad range of enterprise, cloud-native, and HPC applications, but compatibility issues restrict the number of workloads that can be executed on the ARM-based NVIDIA Grace.

AMD compared several systems: single-socket and dual-socket configurations of the AMD EPYC 9754 processor (code name “Bergamo,” featuring 128 cores and 256 threads/vCPUs), a dual-socket AMD EPYC 9654 (code name “Genoa,” featuring 96 cores and 192 threads/vCPUs), and a dual-socket system built on the NVIDIA Grace CPU Superchip (144 cores/vCPUs in a “2 x 72 cores” format). Each AMD EPYC system was equipped with 12 x 64 GB of DDR5-4800 memory per socket unless otherwise specified in the following sections. The NVIDIA system included the maximum 480 GB of LPDDR5X-8532 memory currently supported by server vendors.
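As a quick consistency check, the core, thread, and memory totals implied by these configurations can be derived in a few lines. This is a sketch: the `SystemConfig` class and its field names are hypothetical, SMT is assumed enabled on the EPYC systems (two threads per core) and absent on Grace (one thread per core), and the 480 GB Grace total is modeled as the 2 x 240 GB listed in the endnotes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemConfig:
    """Hypothetical container for the test-system parameters quoted above."""
    name: str
    sockets: int
    cores_per_socket: int
    threads_per_core: int       # assumed: 2 with SMT (EPYC), 1 without (Grace)
    memory_gb_per_socket: int

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def memory_gb(self) -> int:
        return self.sockets * self.memory_gb_per_socket


SYSTEMS = [
    SystemConfig("1P EPYC 9754", 1, 128, 2, 12 * 64),
    SystemConfig("2P EPYC 9754", 2, 128, 2, 12 * 64),
    SystemConfig("2P EPYC 9654", 2, 96, 2, 12 * 64),
    SystemConfig("2P NVIDIA Grace", 2, 72, 1, 240),  # 2 x 240 GB = 480 GB
]

for s in SYSTEMS:
    print(f"{s.name}: {s.cores} cores, {s.threads} threads, {s.memory_gb} GB")
```

With 12 x 64 GB per socket, the dual-socket EPYC systems come to 1,536 GB (1.5 TB), which matches the memory listed for the HPL and Quantum ESPRESSO systems later in this post.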

Power Efficiency

Modern data centers are striving to meet rapidly growing demands while optimizing power usage to control costs and achieve sustainability goals. The SPECpower_ssj® 2008 benchmark from the Standard Performance Evaluation Corporation (SPEC®) provides a comparative measure of the energy efficiency of volume server class computers by evaluating both the power and performance characteristics of the System Under Test (SUT).

Figure 1 illustrates that single- and dual-socket AMD EPYC 9754 systems delivered ~2.50x and ~2.75x the energy efficiency (overall ssj_ops/watt) of an NVIDIA Grace system, respectively. Further, a dual-socket AMD EPYC 9654 system delivered ~2.27x the energy efficiency of the same NVIDIA system on the same tests.[1][2][3]

Figure 1: SPECpower_ssj® 2008 relative performance
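SPECpower_ssj 2008 condenses a run into one overall ssj_ops/watt figure: the ssj_ops achieved at each of the ten target load levels (100% down to 10% in 10% steps) are summed and divided by the sum of the average power at those levels plus the active-idle power. The sketch below restates that definition and reproduces the Figure 1 ratios from the published scores cited in endnotes SP5-279, SP5-280, and SP5-278. The function names are illustrative and are not part of any SPEC tool.

```python
from typing import Sequence


def overall_ssj_ops_per_watt(ssj_ops: Sequence[float],
                             avg_watts: Sequence[float],
                             active_idle_watts: float) -> float:
    """Sum of ssj_ops over the target loads / (sum of their power + active idle)."""
    if len(ssj_ops) != len(avg_watts):
        raise ValueError("need one average power reading per target load")
    return sum(ssj_ops) / (sum(avg_watts) + active_idle_watts)


def uplift(score: float, baseline: float) -> float:
    """Relative efficiency versus a baseline, rounded to two decimals."""
    return round(score / baseline, 2)


# Published overall ssj_ops/watt scores from endnotes SP5-279, SP5-280, SP5-278.
GRACE_2P = 13_218
for name, score in [("1P EPYC 9754", 33_014),
                    ("2P EPYC 9754", 36_398),
                    ("2P EPYC 9654", 30_064)]:
    print(f"{name}: {uplift(score, GRACE_2P)}x")
```

Because active idle contributes power but no ssj_ops, a system with low idle power scores noticeably better on this metric than one that only excels at full load.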

General Purpose Computing

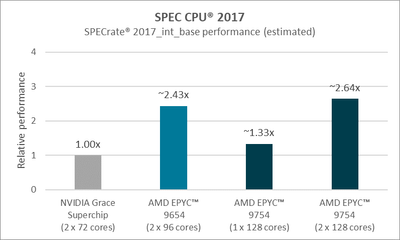

The Standard Performance Evaluation Corporation (SPEC) developed the SPEC CPU® 2017 benchmark suite to evaluate the performance of computer systems. The SPECrate® 2017_int_base metric assesses integer throughput performance and is widely acknowledged as a premier industry standard for evaluating general-purpose computing infrastructure.

Figure 2 illustrates that single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace system by an estimated ~1.33x and ~2.64x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by an estimated ~2.43x on the same tests.[4][5][6]

Figure 2: SPECrate® 2017_int_base relative performance (estimated)

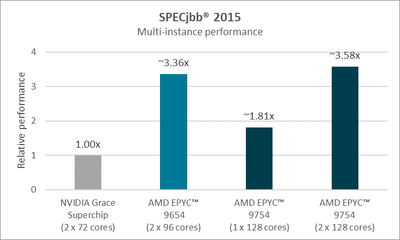
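A SPECrate® 2017_int_base score is the geometric mean of per-benchmark ratios, where each ratio is the number of concurrent copies multiplied by the reference machine's run time divided by the measured run time. The minimal sketch below illustrates that calculation; the timings in the usage lines are hypothetical placeholders, not published data, and the real suite uses ten integer benchmarks rather than three.

```python
import math
from typing import Sequence


def rate_ratio(copies: int, reference_seconds: float, measured_seconds: float) -> float:
    """Per-benchmark SPECrate ratio: copies x (reference time / measured time)."""
    return copies * reference_seconds / measured_seconds


def geometric_mean(values: Sequence[float]) -> float:
    """Geometric mean computed in log space to avoid overflow on large products."""
    if not values:
        raise ValueError("need at least one ratio")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical (copies, reference seconds, measured seconds) for three benchmarks.
runs = [(256, 1600.0, 400.0), (256, 1800.0, 500.0), (256, 1100.0, 300.0)]
score = geometric_mean([rate_ratio(c, ref, t) for c, ref, t in runs])
print(f"illustrative SPECrate-style score: {score:.1f}")
```

Because the summary is a geometric mean, a large gain on a single benchmark moves the overall score less than it would under an arithmetic mean.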

Server-Side Java

4th Gen AMD EPYC processors deliver performance, efficiency, and compatibility, allowing you to deploy cloud-native workloads without compromise and without expensive architectural transitions. Java® has become a universal language across enterprise and cloud environments. The SPECjbb®2015 benchmark evaluates Java-based application performance on server-class hardware by modeling an e-commerce company whose IT infrastructure handles a mix of point-of-sale requests, online purchases, and data-mining operations.

Figure 3 illustrates that, measured by SPECjbb2015-MultiJVM max-jOPS, single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace system by ~1.81x and ~3.58x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~3.36x on the same tests.[7][8][9]

Figure 3: Server-Side Java relative performance

The AMD EPYC-powered systems ran SUSE Linux Enterprise Server 15 SP4 (kernel 5.14.21) with Java SE 21.0 on the AMD EPYC 9654 and Java SE 17.0 LTS on the AMD EPYC 9754. The NVIDIA Grace system ran Ubuntu 22.04.4 (kernel 5.15.0-105-generic) with the latest Java SE 22.0.
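The two SPECjbb2015 metrics quoted in the endnotes are computed differently: max-jOPS is, roughly, the last injection rate the system sustained before the first failed step of the load ramp, while critical-jOPS is the geometric mean of the throughput achieved under response-time SLAs of 10, 25, 50, 75, and 100 ms. The following is a deliberately simplified sketch of those definitions. The real harness builds the ramp and evaluates response-time percentiles itself, and the function names and sample ramp here are hypothetical.

```python
import math
from typing import Mapping, Sequence, Tuple

# Response-time SLA thresholds (ms) used for critical-jOPS.
SLA_MS = (10, 25, 50, 75, 100)


def max_jops(ramp: Sequence[Tuple[int, bool]]) -> int:
    """Last passing injection rate before the first failing step.

    `ramp` is a list of (injection_rate, passed) pairs in increasing rate order.
    """
    best = 0
    for rate, passed in ramp:
        if not passed:
            break  # steps after the first failure do not count
        best = rate
    return best


def critical_jops(jops_at_sla: Mapping[int, float]) -> float:
    """Geometric mean of the jOPS achieved at each SLA threshold."""
    logs = [math.log(jops_at_sla[ms]) for ms in SLA_MS]
    return math.exp(sum(logs) / len(logs))


# Hypothetical ramp: the 300k step fails, so the later 400k pass is ignored.
ramp = [(100_000, True), (200_000, True), (300_000, False), (400_000, True)]
print(max_jops(ramp))  # prints 200000
```

This is why the endnotes report the two numbers separately: max-jOPS rewards raw throughput, while critical-jOPS rewards throughput delivered within tight latency bounds.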

Transactional Databases

MySQL is extensively utilized as an open-source relational database system across enterprise and cloud environments. AMD used the HammerDB TPROC-C benchmark to evaluate Online Transaction Processing (OLTP). The HammerDB TPROC-C workload is derived from the TPC-C Benchmark™ Standard, and as such is not comparable to published TPC-C™ results, as the results do not comply with the TPC-C Benchmark Standard.

Figure 4 illustrates that single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace system by ~1.58x and ~2.16x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~2.17x on the same tests.[10][11][12]

Figure 4: MySQL TPROC-C relative performance

The test systems were set up with Ubuntu® version 22.04, MySQL™ version 8.0.37, and HammerDB version 4.4. Each system hosted multiple virtual machines (VMs), each with 16 vCPUs. Per-VM median values from three test runs were aggregated for comparative analysis.
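The "aggregate of median" scoring used for these multi-VM tests (spelled out in endnotes SP5-256 through SP5-258) can be sketched as follows: take each VM's median across repeated runs, then sum those medians to get the system total. The dictionary layout, VM names, and sample NOPM numbers below are hypothetical.

```python
from statistics import median
from typing import Mapping, Sequence


def aggregate_of_medians(runs_per_vm: Mapping[str, Sequence[float]]) -> float:
    """Median of each VM's repeated runs, summed across all VMs."""
    return sum(median(runs) for runs in runs_per_vm.values())


def relative_performance(system_total: float, baseline_total: float) -> float:
    """Ratio used for relative-performance charts (baseline = 1.0)."""
    return system_total / baseline_total


# Hypothetical NOPM samples: VM name -> one value per run.
amd = {"vm0": [10_000.0, 30_000.0, 20_000.0], "vm1": [5_000.0, 7_000.0, 6_000.0]}
grace = {"vm0": [12_000.0, 13_000.0, 14_000.0]}
print(relative_performance(aggregate_of_medians(amd), aggregate_of_medians(grace)))
```

Taking the median per VM before summing keeps a single outlier run from skewing any one VM's contribution to the system total.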

Decision Support Systems

MySQL is widely used in Decision Support Systems deployments as well. AMD used HammerDB TPROC-H to evaluate Decision Support System performance. The HammerDB TPROC-H workload is derived from the TPC-H Benchmark™ Standard, and as such is not comparable to published TPC-H™ results, as the results do not comply with the TPC-H Benchmark Standard.

Figure 5 illustrates that single- and dual-socket AMD EPYC 9754 systems configured as shown above outperformed an NVIDIA Grace system by ~1.42x and ~2.98x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~2.62x on the same tests.[13][14][15]

Figure 5: MySQL TPROC-H relative performance

The test systems were set up with Ubuntu® version 22.04, MySQL™ version 8.0.37, and HammerDB version 4.4. Each system hosted multiple virtual machines (VMs), each with 16 vCPUs. Per-VM median values from three test runs were aggregated for comparative analysis.
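The VM counts listed in the endnotes (16, 32, and 24 VMs on the EPYC systems versus 9 on Grace) follow from dividing each system's hardware threads by the 16 vCPUs per VM. The sketch below assumes one vCPU per hardware thread, with SMT providing two threads per core on EPYC and Grace exposing one thread per core; `vm_count` is a hypothetical helper.

```python
def vm_count(sockets: int, cores_per_socket: int, threads_per_core: int,
             vcpus_per_vm: int = 16) -> int:
    """Whole VMs that fit when each vCPU maps to one hardware thread."""
    hardware_threads = sockets * cores_per_socket * threads_per_core
    return hardware_threads // vcpus_per_vm


counts = {
    "1P EPYC 9754": vm_count(1, 128, 2),    # 256 threads
    "2P EPYC 9754": vm_count(2, 128, 2),    # 512 threads
    "2P EPYC 9654": vm_count(2, 96, 2),     # 384 threads
    "2P NVIDIA Grace": vm_count(2, 72, 1),  # 144 threads
}
for system, vms in counts.items():
    print(f"{system}: {vms} VMs")
```

Because each system is loaded with as many 16-vCPU VMs as it can hold, the aggregate score scales with both per-thread performance and the number of VMs a platform can host.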

Web Server

NGINX™ is a flexible web server known for its ability to act as a reverse proxy, load balancer, mail proxy, and HTTP cache. It's designed to handle client requests and deliver web content efficiently. NGINX can operate as a standalone web server or boost performance and security by serving as a reverse proxy for other servers. AMD used the popular wrk HTTP benchmarking tool to generate heavy HTTP load during testing.

Figure 6 illustrates that single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace system by ~1.27x and ~2.56x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~1.89x on the same tests.[16][17][18]

Figure 6: NGINX relative performance

This benchmark test placed both the server and client on the same system to reduce network latency and focus on CPU processing power measurement. The systems were configured with Ubuntu® v22.04 and NGINX v1.18.0. Each system hosted multiple instances, each equipped with 8 cores. The workload was tested for 90 seconds in each run, and the median requests per second (rps) values from 3 runs per platform were aggregated to compare relative performance.

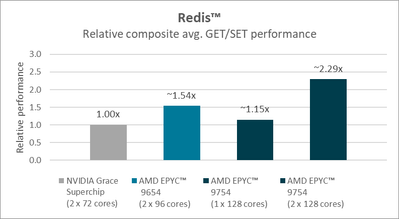
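Running many small instances on one host usually means pinning each instance to its own set of cores. Below is a minimal sketch of that partitioning step, assuming cores are numbered contiguously from 0 (real topologies and SMT-sibling numbering vary, so check `lscpu` first). It yields range strings in the form accepted by tools such as `taskset -c`. The helper name is hypothetical, and this is not AMD's published test harness.

```python
from typing import List


def cpu_ranges(total_cores: int, cores_per_instance: int = 8) -> List[str]:
    """Split cores 0..total_cores-1 into contiguous 'first-last' ranges.

    Leftover cores that cannot fill a whole instance are left unused.
    """
    ranges = []
    for first in range(0, total_cores - cores_per_instance + 1, cores_per_instance):
        ranges.append(f"{first}-{first + cores_per_instance - 1}")
    return ranges


grace = cpu_ranges(144)  # 2 x 72 Grace cores
epyc = cpu_ranges(128)   # 1P EPYC 9754 physical cores
print(len(grace), grace[:2])
print(len(epyc), epyc[-1])
```

On the Grace system this yields 18 eight-core instances, matching the instance count in endnote SP5-268. On the single-socket EPYC 9754 it yields 16, and per the same endnote each of those instances also used its cores' SMT siblings (16 logical CPUs per instance).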

In-Memory Analytics

Redis™ is a powerful in-memory data structure store that serves as a distributed key-value database, cache, and message broker, with optional durability features. AMD used the widely recognized, purpose-built redis-benchmark tool to measure Redis server performance.

Figure 7 illustrates that single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace system by ~1.15x and ~2.29x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~1.54x on the same tests.[19][20][21]

Figure 7: Redis relative performance

The systems were set up with Ubuntu® v22.04 and Redis v7.0.11, along with the redis-benchmark v7.2.3 client. Each client created 512 connections for GET/SET operations with key sizes of 1000 bytes to its respective Redis server. Each system hosted multiple instances, each equipped with 8 cores. The workload test executed 10 million requests on each system, and the median requests per second (rps) values from three runs were aggregated to compare relative performance.

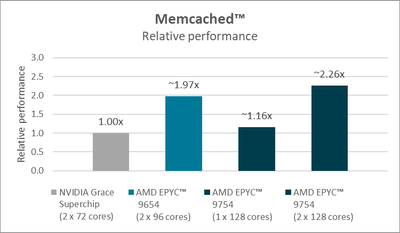
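For context on what each GET/SET request looks like on the wire, Redis clients such as redis-benchmark speak the RESP protocol, in which a command is sent as an array of length-prefixed bulk strings. The sketch below encodes such commands. The key name is hypothetical, and treating the 1,000 bytes as the value payload is an assumption (redis-benchmark's `-d` option sets the SET/GET value size).

```python
def encode_resp(*parts: bytes) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    chunks = [b"*%d\r\n" % len(parts)]
    for part in parts:
        # Each bulk string is $<length>\r\n<bytes>\r\n
        chunks.append(b"$%d\r\n%s\r\n" % (len(part), part))
    return b"".join(chunks)


key = b"key:000001"   # hypothetical key name
value = b"x" * 1000   # 1,000-byte payload
set_request = encode_resp(b"SET", key, value)
get_request = encode_resp(b"GET", key)
print(get_request)
```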

Cache Tier

Memcached™ is a high-performance, distributed in-memory caching system designed to store key-value pairs for small chunks of arbitrary data such as strings or objects. It typically caches results from database or API calls, as well as rendered pages. AMD selected the widely utilized memtier benchmarking tool to assess latency and throughput uplifts.

Figure 8 illustrates that single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace™ system by ~1.16x and ~2.26x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~1.97x on the same tests.[22][23][24]

Figure 8: Memcached relative performance

The systems were configured with Ubuntu® v22.04, Memcached v1.6.14, and memtier v1.4.0. Each memtier client established 10 connections, utilized 8 pipelines, and maintained a 1:10 SET/GET ratio with its corresponding Memcached server. Each system hosted multiple instances, each equipped with 8 physical cores. The workload test processed 10 million requests on each system, and the median requests per second (rps) values from three runs per platform were aggregated to assess relative performance.
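The 1:10 SET/GET mix and 8-deep pipelining described above can be illustrated with Memcached's text protocol. This is a sketch under assumptions: memtier supports more than one protocol, the key and value are placeholders, and the helper names are hypothetical rather than memtier internals.

```python
from itertools import cycle, islice
from typing import Iterator


def command_mix(sets: int = 1, gets: int = 10) -> Iterator[str]:
    """Endless op sequence with `sets` SETs for every `gets` GETs."""
    return cycle(["set"] * sets + ["get"] * gets)


def encode(op: str, key: str, value: bytes) -> bytes:
    """One Memcached text-protocol request (flags=0, no expiry)."""
    if op == "set":
        return f"set {key} 0 0 {len(value)}\r\n".encode() + value + b"\r\n"
    return f"get {key}\r\n".encode()


def pipeline_batch(ops: Iterator[str], depth: int, key: str, value: bytes) -> bytes:
    """Concatenate `depth` requests so they can be sent back to back."""
    return b"".join(encode(op, key, value) for op in islice(ops, depth))


ops = command_mix()
batch = pipeline_batch(ops, depth=8, key="obj:1", value=b"payload")
print(batch.count(b"get obj:1\r\n"))
```

Pipelining amortizes round-trip latency: the client sends a batch of requests before reading any replies, so throughput depends less on per-request latency.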

High Performance Computing (HPC)

HPC influences every aspect of our lives, and performance is crucial in fields ranging from manufacturing to life sciences. ARM processors handle lighter workloads well but face challenges with critical data-centric and HPC workloads. Many applications have not been adapted for ARM, and those that have often lack the advanced features found in x86 processors that boost HPC performance. Memory capacity is another significant consideration: 4th Gen AMD EPYC processors support up to 3 TB of memory, whereas ARM-based NVIDIA Grace systems are limited to 480 GB.

Compiling HPC applications for ARM processors and debugging runtime failures is a nontrivial undertaking, to say the least. AMD engineers ran into the following issues when attempting to run common open-source HPC workloads for testing that required quick turnaround times:

- NAMD throws runtime errors despite source code changes.

- GROMACS fails to compile and requires manual intervention at source level.

- OpenRadioss fails to compile and requires both changes and a pull request to enable it for ARM instructions.

- OpenFOAM® and WRF® dependencies fail to compile.

AMD engineers were able to compile and test the following open-source HPC workloads without considerable hurdles:

- HPL requires minor changes in the build system (cmake) to compile and run with the ARM performance math library.

- Quantum ESPRESSO compiles and runs with the ARM performance math library, but ScaLAPACK is not available in this library.

These challenges illustrate the importance of the x86 processor architecture compatibility offered by all AMD EPYC processors across all current generations. Let's compare the relative performance of these two workloads.

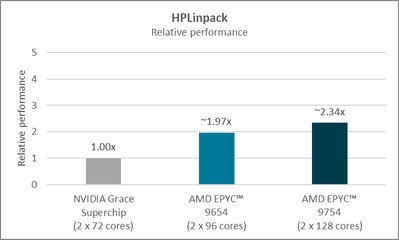

High Performance Linpack

High Performance Linpack (HPL) is a software package and benchmark that evaluates the floating-point performance of HPC clusters. This portable implementation uses Gaussian elimination (LU factorization with partial pivoting) to solve a dense system of linear equations of a given order, measuring how quickly the system completes the computation. AMD ran the HPLinpack 2.3 benchmark.

Figure 9 illustrates that the dual-socket AMD EPYC 9754 system outperformed the NVIDIA Grace system by ~2.34x. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~1.97x on the same tests.[25][26]

Figure 9: HPL relative performance

The AMD EPYC 9754 and 9654 systems feature 1.5 TB of DDR5-4800 memory and run Red Hat Enterprise Linux 9.4 with kernel 5.14.0-427.16.1.el9_4.x86_64, while the NVIDIA Grace system is equipped with 480GB of LPDDR5X-8532 memory and operates on Red Hat Enterprise Linux 9.4 with kernel 5.14.0-427.18.1.el9_4.aarch64+64k.

Quantum ESPRESSO

Quantum ESPRESSO is an open-source suite that performs nanoscale electronic-structure calculations and materials modeling based on density-functional theory, plane waves, and pseudopotentials. The Quantum ESPRESSO 7.0 ausurf benchmark was used to compare the performance of the systems.

Figure 10 illustrates that the dual-socket AMD EPYC 9754 system outperformed the NVIDIA Grace system by ~4.08x. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~3.46x on the same tests.[27][28]

Figure 10: Quantum ESPRESSO relative performance

The AMD EPYC 9754 and 9654 systems feature 1.5 TB of DDR5-4800 memory and run Red Hat Enterprise Linux 9.4 with kernel 5.14.0-427.16.1.el9_4.x86_64, while the NVIDIA Grace system is equipped with 480GB of LPDDR5X-8532 memory and operates on Red Hat Enterprise Linux 9.4 with kernel 5.14.0-427.18.1.el9_4.aarch64+64k.

Video Encoding

FFmpeg is an exceptionally versatile multimedia framework renowned for its ability to encode, decode, transcode, stream, filter, and play nearly any type of video format, from traditional to cutting-edge technologies. It is widely adopted for its comprehensive capabilities in video and audio processing, including format conversion, video scaling, editing, and seamless streaming.

Figure 11 illustrates that single- and dual-socket AMD EPYC 9754 systems outperformed an NVIDIA Grace system by ~1.60x and ~2.90x, respectively. Further, a dual-socket AMD EPYC 9654 system outperformed the same NVIDIA system by ~2.38x on the same tests.[29][30][31]

Figure 11: FFmpeg relative encoding performance

The systems were set up with Ubuntu® v22.04 and FFmpeg v4.4.2. Each FFmpeg instance transcoded a single 4K input file in raw video format using the VP9 codec. Each system hosted multiple instances, each configured with 8 cores. Performance on each system was evaluated based on the median total frames processed per hour across three test runs.

Conclusion

This blog highlighted the leadership performance of both the general-purpose AMD EPYC 9654 and, most importantly, the cloud-native AMD EPYC 9754 processors versus NVIDIA Grace across eleven key foundational, database, and HPC workloads. Combined with the data I shared in my blog last June, this demonstrates that 4th Gen AMD EPYC processors retain their market-leading performance and power efficiency position.

We’re not stopping there: AMD is poised to extend our commanding performance and energy efficiency leads even further with the forthcoming debut of 5th Gen AMD EPYC processors (codenamed “Turin”) with up to 192 cores per processor and the fully compatible x86 architecture that seamlessly runs your existing and future workloads. I’ll say more when the time comes; meanwhile, let’s keep this shrouded in a little mystery.

Competition is the key to a thriving technology industry because it spurs ongoing innovation and advances that have the potential to solve humanity’s most pressing challenges and meet emerging needs head on. I am thrilled to be able to say that AMD EPYC processors are leading the pack. I can also assure you that AMD is relentlessly focused on maintaining our leadership in current and future generations of AMD EPYC processors.

Raghu Nambiar is a Corporate Vice President of Data Center Ecosystems and Solutions for AMD. His postings are his own opinions and may not represent AMD’s positions, strategies, or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

Endnotes

- SP5-279: As of 07/12/2024, a 1P AMD EPYC™ 9754 system delivers a 2.50x SPECpower_ssj® 2008 overall ssj_ops/watt uplift versus a 2P NVIDIA Grace™ CPU Superchip system. Configurations: 1P 128-core EPYC 9754 (33,014 overall ssj_ops/watt, 2U, https://www.spec.org/power_ssj2008/results/res2023q3/power_ssj2008-20230524-01270.html) versus 2P 72-core NVIDIA Grace Superchip (13,218 overall ssj_ops/watt, 2U, https://www.spec.org/power_ssj2008/results/res2024q3/power_ssj2008-20240515-01413.html). SPEC® and SPECpower_ssj® 2008 are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-280: As of 07/12/2024, a 2P AMD EPYC™ 9754 system delivers a 2.75x SPECpower_ssj® 2008 overall ssj_ops/watt uplift versus a 2P NVIDIA Grace™ CPU Superchip system. Configurations: 2P 128-core EPYC 9754 (36,398 overall ssj_ops/watt, 2U, https://www.spec.org/power_ssj2008/results/res2024q2/power_ssj2008-20240327-01386.html) versus 2P 72-core NVIDIA Grace Superchip (13,218 overall ssj_ops/watt, 2U, https://www.spec.org/power_ssj2008/results/res2024q3/power_ssj2008-20240515-01413.html). SPEC® and SPECpower_ssj® 2008 are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-278: As of 07/12/2024, a 2P AMD EPYC™ 9654 system delivers a 2.27x SPECpower_ssj® 2008 overall ssj_ops/watt uplift versus a 2P NVIDIA Grace™ CPU Superchip system. Configurations: 2P 96-core AMD EPYC 9654 (30,064 overall ssj_ops/watt, 2U, https://www.spec.org/power_ssj2008/results/res2022q4/power_ssj2008-20221204-01203.html) versus 2P 72-core NVIDIA Grace Superchip (13,218 overall ssj_ops/watt, 2U, https://www.spec.org/power_ssj2008/results/res2024q3/power_ssj2008-20240515-01413.html). SPEC® and SPECpower_ssj® 2008 are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-276: SPECrate®2017_int_base comparison based on published and estimated results as of 05/08/2024. Configurations: 1P AMD EPYC™ 9754 (981 SPECrate®2017_int_base, 128 total cores, https://www.spec.org/cpu2017/results/res2023q2/cpu2017-20230522-36613.html) versus 2P Grace CPU Superchip; 740 est. SPECrate® 2017_int_base, 144 total cores as per NVIDIA claim: https://developer.nvidia.com/blog/inside-nvidia-grace-cpu-nvidia-amps-up-superchip-engineering-for-h.... OEM published scores will vary based on system configuration. SPEC® and SPECrate® are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-277: SPECrate®2017_int_base comparison based on published and estimated results as of 05/08/2024. Configurations: 2P AMD EPYC™ 9754 (1950 SPECrate®2017_int_base, 256 total cores, https://www.spec.org/cpu2017/results/res2023q2/cpu2017-20230522-36617.html) versus 2P Grace CPU Superchip; 740 est. SPECrate® 2017_int_base, 144 total cores as per NVIDIA claim: https://developer.nvidia.com/blog/inside-nvidia-grace-cpu-nvidia-amps-up-superchip-engineering-for-h.... OEM published scores will vary based on system configuration. SPEC® and SPECrate® are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-275: SPECrate®2017_int_base comparison based on published and estimated results as of 05/08/2024. Configurations: 2P AMD EPYC™ 9654 (1800 SPECrate®2017_int_base, 192 total cores, https://www.spec.org/cpu2017/results/res2024q2/cpu2017-20240421-42980.html) versus 2P Grace CPU Superchip; 740 est. SPECrate® 2017_int_base, 144 total cores as per NVIDIA claim: https://developer.nvidia.com/blog/inside-nvidia-grace-cpu-nvidia-amps-up-superchip-engineering-for-h.... OEM published scores will vary based on system configuration. SPEC® and SPECrate® are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-282A: As of 07/12/2024, a 1P AMD EPYC™ 9754 system delivers a 1.81x SPECjbb2015-MultiJVM max-jOPS uplift versus a 2P NVIDIA Grace™ CPU Superchip system. Configurations: 1P AMD EPYC 9754 (446,246 SPECjbb2015-MultiJVM max-jOPS, 192,284 SPECjbb2015-MultiJVM critical-jOPS, 128 total cores, https://www.spec.org/jbb2015/results/res2023q2/jbb2015-20230517-01045.html) versus 2P NVIDIA Grace CPU Superchip (247,011 SPECjbb2015-MultiJVM max-jOPS, 61,383 SPECjbb2015-MultiJVM critical-jOPS, 144 total cores, https://www.spec.org/jbb2015/results/res2024q3/jbb2015-20240711-01295.html). SPEC® and SPECjbb® 2015 are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-283A: As of 07/12/2024, a 2P AMD EPYC™ 9754 system delivers a 3.58x SPECjbb2015-MultiJVM max-jOPS uplift versus a 2P NVIDIA Grace™ CPU Superchip system. Configurations: 2P EPYC 9754 (883,097 SPECjbb2015-MultiJVM max-jOPS, 383,660 SPECjbb2015-MultiJVM critical-jOPS, 256 total cores, https://www.spec.org/jbb2015/results/res2023q2/jbb2015-20230517-01044.html) versus 2P NVIDIA Grace CPU Superchip (247,011 SPECjbb2015-MultiJVM max-jOPS, 61,383 SPECjbb2015-MultiJVM critical-jOPS, 144 total cores, https://www.spec.org/jbb2015/results/res2024q3/jbb2015-20240711-01295.html). SPEC® and SPECjbb® 2015 are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-281A: As of 07/12/2024, a 2P AMD EPYC™ 9654 system delivers a 3.36x SPECjbb2015-MultiJVM max-jOPS uplift versus a 2P NVIDIA Grace™ CPU Superchip system. Configurations: 2P AMD EPYC 9654 (828,952 SPECjbb2015-MultiJVM max-jOPS, 365,847 SPECjbb2015-MultiJVM critical-jOPS, 192 total cores, https://www.spec.org/jbb2015/results/res2023q2/jbb2015-20230419-01034.html) versus 2P NVIDIA Grace CPU Superchip (247,011 SPECjbb2015-MultiJVM max-jOPS, 61,383 SPECjbb2015-MultiJVM critical-jOPS, 144 total cores, https://www.spec.org/jbb2015/results/res2024q3/jbb2015-20240711-01295.html). SPEC® and SPECjbb® 2015 are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- SP5-256: In AMD testing as of 04/04/2024, a 1P AMD EPYC™ 9754 system (1 x 128C) running 16 MySQL v8.0.37 VMs using 16 vCPU/VM delivers ~1.57x the HammerDB v4.4 TPROC-C performance of a 2P Grace CPU Superchip system (2 x 72C) running 9 VMs using 16 vCPU/VM. Each instance ran one MySQL database with the schema created for 256 warehouses. All VMs were simultaneously loaded by an individual HammerDB client instance per VM. The workload was run for 10 minutes each time, and the aggregate of median New Orders Per Minute (NOPM) values was recorded across 5 runs per platform to compare relative performance. The HammerDB TPROC-C workload is an open-source workload derived from the TPC-C Benchmark™ Standard, and as such is not comparable to published TPC-C™ results, as the results do not comply with the TPC-C Benchmark Standard. AMD System configuration: CPU: 1 x AMD EPYC 9754 (128C, 256 MB L3) on an AMD reference system; RAM: 12 x 128 GB DDR5-4800; Storage: 6 * 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1Gbe; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVME SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVME SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2Ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used. TPC, TPC Benchmark and TPC-C are trademarks of the Transaction Processing Performance Council.

- SP5-257: In AMD testing as of 04/04/2024, a 2P AMD EPYC™ 9754 system (2 x 128C) running 32 MySQL v8.0.37 VMs using 16 vCPU/VM delivers ~2.16x the HammerDB v4.4 TPROC-C performance of a 2P Grace CPU Superchip system (2 x 72C) running 9 VMs using 16 vCPU/VM. Each instance ran one MySQL database with the schema created for 256 warehouses. All VMs were simultaneously loaded by an individual HammerDB client instance per VM. The workload was run for 10 minutes each time, and the aggregate of median New Orders Per Minute (NOPM) values was recorded across 5 runs per platform to compare relative performance. The HammerDB TPROC-C workload is an open-source workload derived from the TPC-C Benchmark™ Standard, and as such is not comparable to published TPC-C™ results, as the results do not comply with the TPC-C Benchmark Standard. AMD System configuration: CPU: 2 x AMD EPYC 9754 (128C, 256 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 * 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1Gbe; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVME SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVME SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2Ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used. TPC, TPC Benchmark and TPC-C are trademarks of the Transaction Processing Performance Council.

- SP5-258: In AMD testing as of 04/04/2024, a 2P AMD EPYC™ 9654 system (2 x 96C) running 24 MySQL v8.0.37 VMs using 16 vCPU/VM delivers ~2.17x the HammerDB v4.4 TPROC-C performance of a 2P Grace CPU Superchip system (2 x 72C) running 9 VMs using 16 vCPU/VM. Each instance ran one MySQL database with the schema created for 256 warehouses. All VMs were simultaneously loaded by an individual HammerDB client instance per VM. The workload was run for 10 minutes each time, and the aggregate of median New Orders Per Minute (NOPM) values was recorded across 5 runs per platform to compare relative performance. The HammerDB TPROC-C workload is an open-source workload derived from the TPC-C Benchmark™ Standard, and as such is not comparable to published TPC-C™ results, as the results do not comply with the TPC-C Benchmark Standard. AMD System configuration: CPU: 2 x AMD EPYC 9654 (96C, 384 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 * 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1Gbe; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVME SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVME SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2Ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used. TPC, TPC Benchmark and TPC-C are trademarks of the Transaction Processing Performance Council.

- SP5-253: In AMD testing as of 05/08/2024, a 1P AMD EPYC™ 9754 system (1 x 128C) running 16 MySQL v8.0.37 VMs using 16 vCPU/VM delivers ~1.42x the HammerDB v4.4 TPROC-H performance of a 2P Grace CPU Superchip system (2 x 72C) running 9 VMs using 16 vCPU/VM. Each instance ran one MySQL database at SF30. All VMs were simultaneously loaded by an individual HammerDB client instance per VM. The aggregate of median Queries per Hour (QphH) values was recorded across 3 runs per platform to compare relative performance. The HammerDB TPROC-H workload is an open-source workload derived from the TPC-H Benchmark™ Standard, and as such is not comparable to published TPC-H™ results, as the results do not comply with the TPC-H Benchmark Standard. AMD System configuration: CPU: 1 x AMD EPYC 9754 (128C, 256 MB L3); RAM: 12 x 128 GB DDR5-4800; Storage: 6 * 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1Gbe; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket); RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVME SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVME SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2Ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used. TPC, TPC Benchmark and TPC-H are trademarks of the Transaction Processing Performance Council.

- SP5-254: In AMD testing as of 05/08/2024, a 2P AMD EPYC™ 9754 system (2 x 128C) running 32 MySQL v8.0.37 VMs using 16 vCPU/VM delivers ~2.98x the HammerDB v4.4 TPROC-H performance of a 2P Grace CPU Superchip system (2 x 72C) running 9 VMs using 16 vCPU/VM. Each instance ran one MySQL database at SF30. All VMs were simultaneously loaded by an individual HammerDB client instance per VM. The aggregate of median Queries per Hour (QphH) values was recorded across 3 runs per platform to compare relative performance. The HammerDB TPROC-H workload is an open-source workload derived from the TPC-H Benchmark™ Standard, and as such is not comparable to published TPC-H™ results, as the results do not comply with the TPC-H Benchmark Standard. AMD System configuration: CPU: 2 x AMD EPYC 9754 (128C, 256 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 * 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1Gbe; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVME SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVME SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2Ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used. TPC, TPC Benchmark and TPC-H are trademarks of the Transaction Processing Performance Council.

- SP5-255: In AMD testing as of 05/08/2024, a 2P AMD EPYC™ 9654 system (2 x 96C) running 24 MySQL v8.0.37 VMs using 16 vCPU/VM delivers ~2.62x the HammerDB v4.4 TPROC-H performance of a 2P Grace CPU Superchip system (2 x 72C) running 9 VMs using 16 vCPU/VM. Each instance ran one MySQL database at SF30. All VMs were simultaneously loaded by an individual HammerDB client instance per VM. The aggregate of median Queries per Hour (QphH) values was recorded across 3 runs per platform to compare relative performance. The HammerDB TPROC-H workload is an open-source workload derived from the TPC-H Benchmark™ Standard, and as such is not comparable to published TPC-H™ results, as the results do not comply with the TPC-H Benchmark Standard. AMD System configuration: CPU: 2 x AMD EPYC 9654 (96C, 384 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used. TPC, TPC Benchmark and TPC-H are trademarks of the Transaction Processing Performance Council.

- SP5-268: In AMD testing as of DATE, a 1P AMD EPYC™ 9754 system (1 x 128C) running 16 NGINX™ v1.18.0 instances using 16 logical CPU threads per instance delivers ~1.27x the performance of a 2P NVIDIA Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each WRK v4.2.0 client created 400 connections fetching a small static binary file from its respective NGINX server. Each workload test was run for 90 seconds, and the aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 1 x AMD EPYC 9754 (128C, 256 MB L3) on an AMD reference system; RAM: 12 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-269: In AMD testing as of DATE, a 2P AMD EPYC™ 9754 system (2 x 128C) running 32 NGINX™ v1.18.0 instances using 16 logical CPU threads per instance delivers ~2.56x the performance of a 2P NVIDIA Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each WRK v4.2.0 client created 400 connections fetching a small static binary file from its respective NGINX server. Each workload test was run for 90 seconds, and the aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 2 x AMD EPYC 9754 (128C, 256 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-270: In AMD testing as of DATE, a 2P AMD EPYC™ 9654 system (2 x 96C) running 24 NGINX™ v1.18.0 instances using 16 logical CPU threads per instance delivers ~1.89x the performance of a 2P NVIDIA Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each WRK v4.2.0 client created 400 connections fetching a small static binary file from its respective NGINX server. Each workload test was run for 90 seconds, and the aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 2 x AMD EPYC 9654 (96C, 384 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-262: In AMD testing as of 03/28/2024, a 1P AMD EPYC™ 9754 system (1 x 128C) running 32 instances using 8 logical CPU threads per instance delivers ~1.13x and ~1.17x the Redis™ v7.0.11 GET and SET performance, respectively, with a composite average of ~1.15x the performance of a 2P Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each redis-benchmark 7.2.3 client created 512 connections setting/getting a key size of 1000 bytes from its respective Redis server. Each workload test was run for 10M requests, and the aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 1 x AMD EPYC 9754 (128C, 256 MB L3) on an AMD reference system; RAM: 1 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-263: In AMD testing as of 03/28/2024, a 2P AMD EPYC™ 9754 system (2 x 128C) running 64 instances using 8 logical CPU threads per instance delivers ~2.33x and ~2.26x the Redis™ v7.0.11 GET and SET performance, respectively, with a composite average of ~2.29x the performance of a 2P Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each redis-benchmark 7.2.3 client created 512 connections setting/getting a key size of 1000 bytes from its respective Redis server. Each workload test was run for 10M requests, and the aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 2 x AMD EPYC 9754 (128C, 256 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-264: In AMD testing as of DATE, a 2P AMD EPYC™ 9654 system (2 x 96C) running 48 instances using 8 logical CPU threads per instance delivers ~1.39x and ~1.68x the Redis™ v7.0.11 GET and SET performance, respectively, with a composite average of ~1.54x the performance of a 2P Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each redis-benchmark 7.2.3 client created 512 connections setting/getting a key size of 1000 bytes from its respective Redis server. Each workload test was run for 10M requests, and the aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 2 x AMD EPYC 9654 (96C, 384 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-259: In AMD testing as of 04/02/2024, a 1P AMD EPYC™ 9754 system (1 x 128C) running 32 Memcached™ v1.6.14 instances using 8 logical CPU threads per instance shared between the server and client delivers ~1.16x the performance of a 2P NVIDIA Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each client used 10 connections, 8 pipelines, and a 1:10 set:get ratio with its respective Memcached server using the Memcached_text protocol. The aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 1 x AMD EPYC 9754 (128C, 256 MB L3) on an AMD reference system; RAM: 12 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-260: In AMD testing as of 04/02/2024, a 2P AMD EPYC™ 9754 system (2 x 128C) running 64 Memcached™ v1.6.14 instances using 8 logical CPU threads per instance shared between the server and client delivers ~2.26x the performance of a 2P NVIDIA Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each client used 10 connections, 8 pipelines, and a 1:10 set:get ratio with its respective Memcached server using the Memcached_text protocol. The aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 2 x AMD EPYC 9754 (128C, 256 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-261: In AMD testing as of 04/02/2024, a 2P AMD EPYC™ 9654 system (2 x 96C) running 48 Memcached™ v1.6.14 instances using 8 logical CPU threads per instance shared between the server and client delivers ~1.97x the performance of a 2P NVIDIA Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Both server and client were run on the same system to minimize network latency. Each client used 10 connections, 8 pipelines, and a 1:10 set:get ratio with its respective Memcached server using the Memcached_text protocol. The aggregate of median requests per second (rps) values was recorded across 3 runs per platform to compare relative performance. AMD System configuration: CPU: 2 x AMD EPYC 9654 (96C, 384 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: SMT=ON; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-273: AMD testing as of 07/03/2024. The detailed results show the average uplift of the performance metric (TFLOPS) of this benchmark for a 2P AMD EPYC 9654 96-Core Processor powered system compared to a 2P Grace CPU Superchip powered system running select tests on open-source HPL. Uplifts for the performance metric normalized to the Grace CPU Superchip follow for each benchmark: matrix ~1.97x. System Configurations: CPU: 2P 144-Core Grace CPU Superchip; Memory: 2x 240 GB LPDDR5-8532; Storage: SAMSUNG MZTL23T8HCLS-00A07 3.84 TB NVMe; BIOS: 1.1A; BIOS Options: Performance Profile; OS: RHEL 9.4 5.14.0-427.18.1.el9_4.aarch64+64k; Kernel Options: mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance. CPU: 2P 192-Core AMD EPYC 9654 96-Core Processor; Memory: 24x 64 GB DDR5-4800; InfiniBand: 200Gb/s; Storage: SAMSUNG MZQL21T9HCJR-00A07 1.92 TB NVMe; BIOS: RTI1009C; BIOS Options: SMT=OFF, NPS=4, Performance Determinism Mode; OS: RHEL 9.4 5.14.0-427.16.1.el9_4.x86_64; Kernel Options: amd_iommu=on, iommu=pt, mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance, Disable C2 States. Results may vary based on system configurations, software versions, and BIOS settings.

- SP5-274: AMD testing as of 07/03/2024. The detailed results show the average uplift of the performance metric (TFLOPS) of this benchmark for a 2P AMD EPYC 9754 128-Core Processor powered system compared to a 2P Grace CPU Superchip powered system running select tests on open-source HPL. Uplifts for the performance metric normalized to the Grace CPU Superchip follow for each benchmark: matrix ~2.34x. System Configurations: CPU: 2P 144-Core Grace CPU Superchip; Memory: 2x 240 GB LPDDR5-8532; Storage: SAMSUNG MZTL23T8HCLS-00A07 3.84 TB NVMe; BIOS: 1.1A; BIOS Options: Performance Profile; OS: RHEL 9.4 5.14.0-427.18.1.el9_4.aarch64+64k; Kernel Options: mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance. CPU: 2P 256-Core AMD EPYC 9754 128-Core Processor; Memory: 24x 64 GB DDR5-4800; InfiniBand: 200Gb/s; Storage: SAMSUNG MZQL21T9HCJR-00A07 1.92 TB NVMe; BIOS: RTI1007D; BIOS Options: SMT=OFF, NPS=4, Performance Determinism Mode; OS: RHEL 9.4 5.14.0-427.16.1.el9_4.x86_64; Kernel Options: amd_iommu=on, iommu=pt, mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance, Disable C2 States. Results may vary based on system configurations, software versions, and BIOS settings.

- SP5-271: AMD testing as of 07/03/2024. The detailed results show the average uplift of the performance metric (Elapsed Time) of this benchmark for a 2P AMD EPYC 9654 96-Core Processor powered system compared to a 2P Grace CPU Superchip powered system running select tests on open-source Quantum ESPRESSO (QE) 7.0. Uplifts for the performance metric normalized to the Grace CPU Superchip follow for each benchmark: ausurf ~3.46x. System Configurations: CPU: 2P 144-Core Grace CPU Superchip; Memory: 2x 240 GB LPDDR5-8532; Storage: SAMSUNG MZTL23T8HCLS-00A07 3.84 TB NVMe; BIOS: 1.1A; BIOS Options: Performance Profile; OS: RHEL 9.4 5.14.0-427.18.1.el9_4.aarch64+64k; Kernel Options: mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance. CPU: 2P 192-Core AMD EPYC 9654 96-Core Processor; Memory: 24x 64 GB DDR5-4800; InfiniBand: 200Gb/s; Storage: SAMSUNG MZQL21T9HCJR-00A07 1.92 TB NVMe; BIOS: RTI1009C; BIOS Options: SMT=OFF, NPS=4, Performance Determinism Mode; OS: RHEL 9.4 5.14.0-427.16.1.el9_4.x86_64; Kernel Options: amd_iommu=on, iommu=pt, mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance, Disable C2 States. Results may vary based on system configurations, software versions, and BIOS settings.

- SP5-272: AMD testing as of 07/03/2024. The detailed results show the average uplift of the performance metric (Elapsed Time) of this benchmark for a 2P AMD EPYC 9754 128-Core Processor powered system compared to a 2P Grace CPU Superchip powered system running select tests on open-source Quantum ESPRESSO (QE) 7.0. Uplifts for the performance metric normalized to the Grace CPU Superchip follow for each benchmark: ausurf ~4.08x. System Configurations: CPU: 2P 144-Core Grace CPU Superchip; Memory: 2x 240 GB LPDDR5-8532; Storage: SAMSUNG MZTL23T8HCLS-00A07 3.84 TB NVMe; BIOS: 1.1A; BIOS Options: Performance Profile; OS: RHEL 9.4 5.14.0-427.18.1.el9_4.aarch64+64k; Kernel Options: mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance. CPU: 2P 256-Core AMD EPYC 9754 128-Core Processor; Memory: 24x 64 GB DDR5-4800; InfiniBand: 200Gb/s; Storage: SAMSUNG MZQL21T9HCJR-00A07 1.92 TB NVMe; BIOS: RTI1007D; BIOS Options: SMT=OFF, NPS=4, Performance Determinism Mode; OS: RHEL 9.4 5.14.0-427.16.1.el9_4.x86_64; Kernel Options: amd_iommu=on, iommu=pt, mitigations=off; Runtime Options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance, Disable C2 States. Results may vary based on system configurations, software versions, and BIOS settings.

- SP5-265: In AMD testing as of 04/16/2024, a 1P AMD EPYC™ 9754 system (1 x 128C) running 32 instances using 8 logical CPU threads per instance delivers ~1.60x the FFmpeg v4.4.2 raw-to-VP9 4K Tears of Steel encoding performance of a 2P Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Each FFmpeg instance transcoded a single input file with 4K resolution in raw video format on an NVMe drive into an output file with the VP9 codec on a separate NVMe drive. Multiple FFmpeg jobs were run concurrently on each system, and aggregate performance on each system was compared using the median total frames processed per hour across 3 runs. AMD System configuration: CPU: 1 x AMD EPYC 9754 (128C, 256 MB L3) on an AMD reference system; RAM: 12 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu® 22.04.1 LTS; Kernel: Linux® 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Tears of Steel (CC) Blender Foundation | mango.blender.org. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-266: In AMD testing as of 04/16/2024, a 2P AMD EPYC™ 9754 system (2 x 128C) running 64 instances using 8 logical CPU threads per instance delivers ~2.90x the FFmpeg v4.4.2 raw-to-VP9 4K Tears of Steel encoding performance of a 2P Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Each FFmpeg instance transcoded a single input file with 4K resolution in raw video format on an NVMe drive into an output file with the VP9 codec on a separate NVMe drive. Multiple FFmpeg jobs were run concurrently on each system, and aggregate performance on each system was compared using the median total frames processed per hour across 3 runs. AMD System configuration: CPU: 2 x AMD EPYC 9754 (128C, 256 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Tears of Steel (CC) Blender Foundation | mango.blender.org. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.

- SP5-267: In AMD testing as of 04/16/2024, a 2P AMD EPYC™ 9654 system (2 x 96C) running 48 instances using 8 logical CPU threads per instance delivers ~2.38x the FFmpeg v4.4.2 raw-to-VP9 4K Tears of Steel encoding performance of a 2P Grace CPU Superchip system (2 x 72C) running 18 instances using 8 physical CPU cores per instance. Each FFmpeg instance transcoded a single input file with 4K resolution in raw video format on an NVMe drive into an output file with the VP9 codec on a separate NVMe drive. Multiple FFmpeg jobs were run concurrently on each system, and aggregate performance on each system was compared using the median total frames processed per hour across 3 runs. AMD System configuration: CPU: 2 x AMD EPYC 9654 (96C, 384 MB L3 per CPU) on an AMD reference system; RAM: 24 x 128 GB DDR5-4800; Storage: 6 x 1.7 TB Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO; NIC: NetXtreme BCM5720 Gigabit Ethernet PCIe @ 1GbE; BIOS: RTI1006C; BIOS Options: default; OS: Ubuntu 22.04.1 LTS; Kernel: Linux 5.15.0-105-generic; OS Options: Default. NVIDIA System configuration: CPU: 2 x NVIDIA Grace CPU Superchip (72C, 228 MB L3 per socket) on a production system; RAM: 2 x 240 GB LPDDR5_SDRAM-8532; Storage: 3 TB NVMe SAMSUNG MZTL23T8HCLS-00A07 & 900 GB NVMe SAMSUNG MZ1L2960HCJR-00A07; NIC: Ethernet Controller 10G X550T, 2 ports; BIOS: 1.1; BIOS Options: Default; OS: Ubuntu 22.04.4 LTS; Kernel: Linux 5.15.0-101-generic; OS Options: Default. Tears of Steel (CC) Blender Foundation | mango.blender.org. Results may vary based on factors including but not limited to BIOS and OS settings and versions, software versions and data used.
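The endnotes above express relative performance as a ratio of aggregated median scores from concurrently running instances. The following is a minimal sketch of that arithmetic, not AMD's actual tooling. It assumes that the median is taken per instance across the 3 runs and then summed across instances (the endnotes do not state the order). It also assumes that the Redis "composite average" is an arithmetic mean. All function names are hypothetical. For elapsed-time results such as the Quantum ESPRESSO runs, lower is better, so the uplift is the Grace time divided by the EPYC time.

```python
from statistics import median


def aggregate_throughput(runs_per_instance: list[list[float]]) -> float:
    """Sum of each concurrent instance's median score (QphH, rps, frames/hour).

    Assumption: runs_per_instance[i] holds instance i's score from each run
    (3 runs in the endnotes). Taking the median per instance before summing
    is one reading of "aggregate of median values"; it is not confirmed.
    """
    return sum(median(runs) for runs in runs_per_instance)


def throughput_uplift(amd: list[list[float]], grace: list[list[float]]) -> float:
    """Relative performance for higher-is-better metrics, normalized to Grace."""
    return aggregate_throughput(amd) / aggregate_throughput(grace)


def elapsed_time_uplift(amd_seconds: float, grace_seconds: float) -> float:
    """Relative performance for lower-is-better metrics such as elapsed time."""
    return grace_seconds / amd_seconds


def composite_average(uplifts: list[float]) -> float:
    """Arithmetic mean of per-operation uplifts (assumed, e.g. Redis GET and SET)."""
    return sum(uplifts) / len(uplifts)
```

As a consistency check under the arithmetic-mean assumption, the SP5-264 GET and SET uplifts of ~1.39x and ~1.68x average to 1.535, which rounds to the stated ~1.54x. The SP5-262 values of ~1.13x and ~1.17x average to the stated ~1.15x.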
