We are using QEMU's Inter-VM Shared Memory (IVSHMEM) technology on an AMD Ryzen 9 5900X processor.

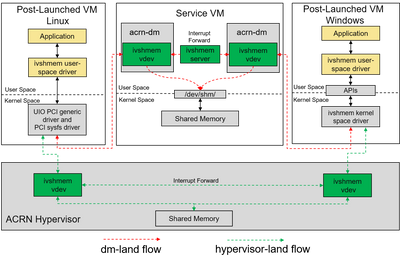

From QEMU's specification: IVSHMEM is designed to share a memory region between multiple QEMU processes running different guests and the host. In order for all guests to be able to pick up the shared memory area, it is modeled by QEMU as a PCI device exposing said memory to the guest as a PCI BAR. In a Linux VM, a UIO driver is used to map that memory into user space.
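For readers unfamiliar with the mapping step, here is a minimal sketch of how a guest process could map the shared region. It is not our benchmark code. It assumes the region is reachable through the PCI device's sysfs resource file (per the ivshmem specification, BAR2 is the shared memory) rather than through the UIO driver mentioned above, and the PCI address in the usage comment is hypothetical.

```python
import mmap
import os


def map_shared_region(path, size):
    """Map `size` bytes of `path` read/write and return the mmap object.

    `path` is whatever file exposes the shared memory in the guest,
    e.g. the sysfs resource file of the IVSHMEM PCI device (assumption).
    """
    fd = os.open(path, os.O_RDWR)
    try:
        return mmap.mmap(
            fd,
            size,
            flags=mmap.MAP_SHARED,
            prot=mmap.PROT_READ | mmap.PROT_WRITE,
        )
    finally:
        # The mapping remains valid after the descriptor is closed.
        os.close(fd)


# Hypothetical usage inside a guest (address and size are placeholders):
#   region = map_shared_region("/sys/bus/pci/devices/0000:00:04.0/resource2", 1 << 20)
#   region[:5] = b"hello"
```

The requested size must not exceed the size of the BAR, and the process needs permission to open the resource file.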

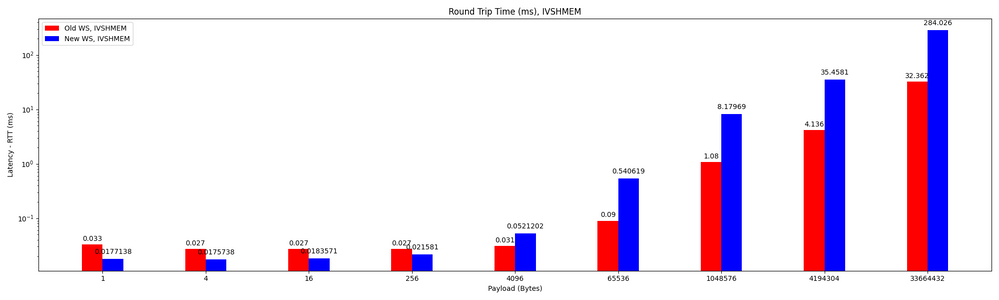

We benchmarked this technology by transferring blocks of data of different sizes between two Linux VMs, on both an AMD and an Intel processor. IVSHMEM supports interrupts, so our basic test consists of writing a block of data to the shared memory and sending an interrupt to the other VM; the other VM then copies the data out and notifies the sender with another interrupt.
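The shape of that ping-pong test can be sketched as follows. This is only an illustration of the protocol, not the benchmark itself: an anonymous `mmap` stands in for the IVSHMEM region, two pipes stand in for the doorbell interrupts, and a thread stands in for the second VM. The function name `round_trip_us` and its parameters are made up for this example.

```python
import mmap
import os
import threading
import time


def round_trip_us(block_size, iterations=200):
    """Return (median round-trip time in microseconds, data_intact flag)."""
    shared = mmap.mmap(-1, block_size)   # stand-in for the shared memory BAR
    ping_r, ping_w = os.pipe()           # stand-in for the interrupt A -> B
    pong_r, pong_w = os.pipe()           # stand-in for the interrupt B -> A
    payload = os.urandom(block_size)
    last_copy = [None]

    def responder():
        for _ in range(iterations):
            os.read(ping_r, 1)                     # wait for the "interrupt"
            last_copy[0] = shared[:block_size]     # copy the data out
            os.write(pong_w, b"\x01")              # notify the sender

    worker = threading.Thread(target=responder)
    worker.start()

    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        shared[:block_size] = payload              # write the block
        os.write(ping_w, b"\x01")                  # signal the other side
        os.read(pong_r, 1)                         # wait for the reply
        samples.append((time.perf_counter() - start) * 1e6)

    worker.join()
    for fd in (ping_r, ping_w, pong_r, pong_w):
        os.close(fd)
    shared.close()

    samples.sort()
    return samples[len(samples) // 2], last_copy[0] == payload


if __name__ == "__main__":
    # Sweep block sizes on both sides of the 4 KiB page size.
    for size in (1024, 4096, 16384, 65536):
        rtt, intact = round_trip_us(size)
        print(f"{size:>6} bytes: {rtt:8.1f} us (data intact: {intact})")
```

Taking the median rather than the mean keeps a few scheduling outliers from dominating the result for each block size.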

For this test, performance on AMD degrades rapidly once the block size exceeds the standard page size (4 KiB). See this plot of round-trip times on both processors, with Intel in red and AMD in blue. Note: the scale is logarithmic.

We have tried to understand these results, without any luck. We checked cache misses, the dTLB, and performance profiles; we also tried hugepages and pinning the processes to cache blocks near each other. Of course, AMD-V and AMD I/O are active. Main memory and other components are similar. Intel even beats the AMD WS when running on laptops and other low-performance processors. The results are almost identical across many Intel processors, such as:

- 11th Gen Intel(R) Core(TM) i7-11700K @ 3.60GHz

- 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80GHz

- 12th Gen Intel(R) Core(TM) i7-12700K

- 10th Gen Intel(R) Core(TM) i7-10510U CPU @ 1.80GHz
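For anyone who wants to reproduce the pinning step mentioned above, this is a rough sketch of restricting a process to the CPUs that share an L3 cache with a given core on a Linux host. It assumes that `cache/index3` in sysfs is the L3 cache, which is typical on x86 but should be confirmed via the `level` file next to it; the helper names are invented for this example. It pins the calling process, whereas in practice the QEMU processes (or their vCPU threads) would be the targets.

```python
import os


def parse_cpu_list(text):
    """Parse a sysfs CPU list such as '0-5,12-17' into a set of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        low, _, high = part.partition("-")
        cpus.update(range(int(low), int(high or low) + 1))
    return cpus


def l3_siblings(cpu):
    """Return the CPUs sharing an L3 with `cpu`, or None if unavailable."""
    # Assumption: index3 is the L3 cache on this machine.
    path = f"/sys/devices/system/cpu/cpu{cpu}/cache/index3/shared_cpu_list"
    try:
        with open(path) as f:
            return parse_cpu_list(f.read())
    except OSError:
        return None


def pin_to_shared_l3(cpu):
    """Pin the calling process to the CPUs that share an L3 with `cpu`."""
    allowed = os.sched_getaffinity(0)
    siblings = l3_siblings(cpu) or {cpu}
    # Fall back to the current mask if none of the siblings are usable.
    target = (siblings & allowed) or allowed
    os.sched_setaffinity(0, target)
    return target


if __name__ == "__main__":
    first_cpu = min(os.sched_getaffinity(0))
    print("pinned to CPUs:", sorted(pin_to_shared_l3(first_cpu)))
```

Passing another process ID instead of `0` to `os.sched_setaffinity` applies the same mask to that process, given sufficient privileges.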

Is there any design or implementation difference that makes AMD processors worse in this scenario? Are there any known issues with IOVA performance, or with memory handling in general?

Thanks,