Top tier AMD card performs worse than 2 generations older Nvidia

Hi,

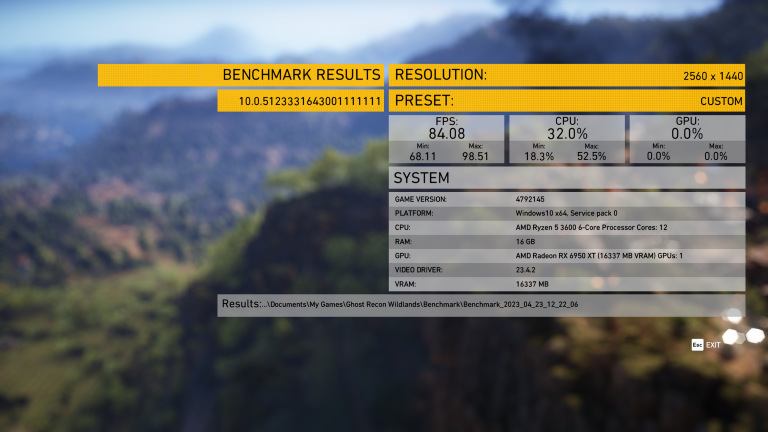

I am getting low performance with an XFX 6950XT card.

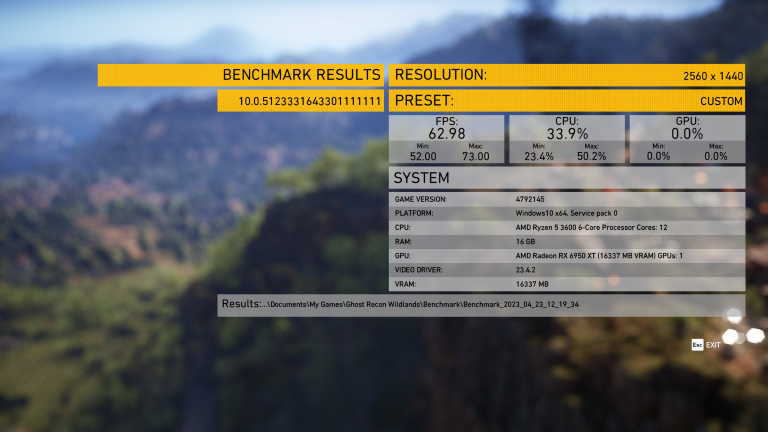

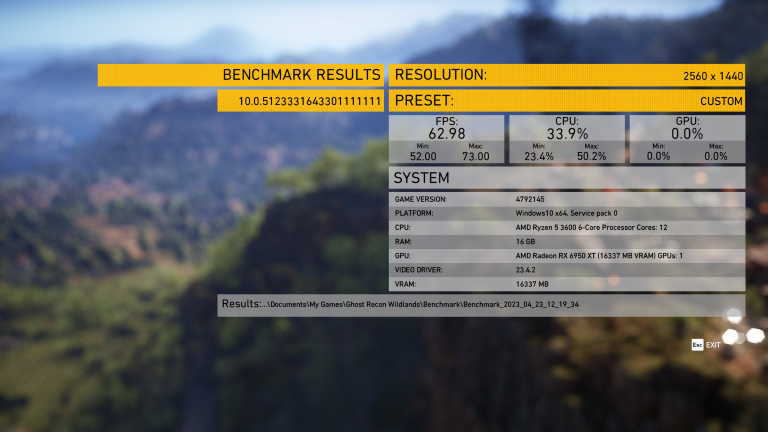

High settings, 1440p: ~70 fps (CPU usage ~50%, GPU ~45%, temps within norms), with stutters.

If I swap it for a 1080 Ti in the same machine, with High settings and the same scene, I get ~100 fps (CPU usage ~80%, GPU 100%). Smooth play.

Game: Ghost Recon Wildlands

I tried:

* Updating BIOS, chipset, drivers, Windows

* Switching off FreeSync

* Switching off all fancy settings in the Adrenalin drivers

My setup:

Ryzen 3600, 750W PSU

I know the Ryzen 3600 is a bottleneck for this card, BUT it should at least reach the same level of utilization (80%) as with the Nvidia card, shouldn't it? And given this is a more powerful card, I should get at least some FPS improvement, not a degradation in performance.

3DMark and Cinebench scores seem to match comparable systems.

Ghost Recon Breakpoint works better: 1080 Ti - 82 fps in the benchmark, 6950XT - 94 fps.

It looks like a unique combination of this game and drivers/settings? Any ideas?

@johnnyenglish - yes, all the other suggestions have been read, taken into consideration.

Nothing worked so far. Two cables or one, 750W or 1000W, DDU or no DDU, Gen3 or Gen4

Just 2 days and 100EUR waisted to eliminate those.

So, rather shooting in the dark and using deduction I have come to a conclusion if none of the suggestions are true, this means that the one that is left is true, however improbable (at least at first).

And the only conclusion left - AMD is garbage. Yes, I understand, I am writing in the AMD community and I can be cancelled, banned or swatted, so let me qualify this - AMD Radeon 6950XT is garbage compared to 1080TI in Ghost Recon Wildlands with High/Ultra settings. That's actually what in essence is the title of this thread - the answer was in front of our eyes all along!

Now, my next thought was - how do I get evidence to support that. Unlike many of us here I don't like to function on internet folklore. My thought process was - if this is the case and I have any luck proving - the only thing I am able to control is the settings of the game, because I wouldn't be able to get game source code or talk to developers. So I did exactly that - painstakingly switched on/off every setting in graphics and video tabs and running the benchmark.

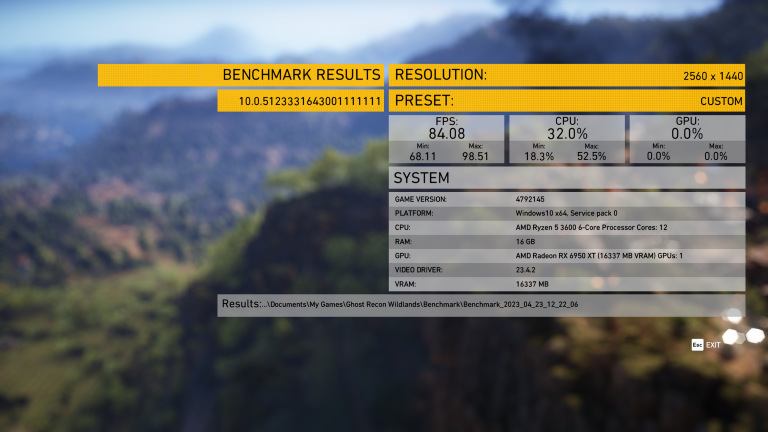

The result - "Turf Effects" and "Vegetation Quality" made all the difference. So when those are swithced of, instead of 40/70 fps, I am now getting 70/100, no stutters, just like @goodplay with his Ryzen 5 5600x. So it all make sense - Nvidia proprietary dx11 feature is used in an old game. Developers and AMD are not really keen on making it work with Radeon cards, specially if most people running high end cards would also have something like 5800 at least, which is able to chew through vegetation rendering with software mode when Radeon cards don't cut it.

In short this is a case of GPU manufacture favorism by game developers combined with weak CPU for modern times.

Default Ultra profile: turf effects On, ultra vegies

Custom Ultra profile: turf effects Off, low vegies, everything else ultra.

Thanks and happy communing !

Yeah, it's not an idea, it's just completely true: you are having an insane CPU bottleneck experience. Ryzen 3600X at 1440p... In my case, the first CPU capable of running my 7900 XTX without a bottleneck at the higher 3440x1440 (ultrawide) is actually the 7800X3D. Plus, use separate 8-pin cables, not a pigtail. I am also kinda sure the 1080 Ti is missing DX12, so you are missing some graphics quality compared to the 6950XT.

Please read carefully; maybe next time you will waste less time.

The 1080 Ti DOES support DX12. The game I am testing, Ghost Recon Wildlands, DOES NOT use DX12; it is a DX11 game.

So I am not losing any quality, it is **bleep** no matter which GPU.

Now, I don't know what you mean about running anything at 4K, or what your 7900 XTX has to do with anything. The card in question is a 6950XT and the resolution in question is 1440p.

Next, a bottlenecking CPU should reach its limits before it becomes the bottleneck, shouldn't it? Granted, not every game is capable of extracting 100% of the CPU. An example would be a game that is not using all the cores. But since I know this game is capable of pulling 80% out of my CPU (benchmark with the 1080 Ti), I expect it to reach at least 80% when it is bottlenecked. The fact that it doesn't actually suggests that the GPU is bottlenecking and not the CPU.
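
To make the "game that is not using all the cores" caveat concrete, here is a minimal Python sketch. The per-thread loads are made-up numbers, not measurements from this system, and the 95% cutoff is an arbitrary assumption; the point is only that an overall CPU figure near 50% can coexist with one saturated thread.

```python
from statistics import mean

def summarize_cpu(per_thread_load, saturated_at=95.0):
    """Return (overall %, busiest thread %, whether any thread looks saturated).

    per_thread_load: utilization of each logical CPU in percent.
    saturated_at: assumed cutoff for calling a thread "maxed out".
    """
    overall = mean(per_thread_load)
    busiest = max(per_thread_load)
    return overall, busiest, busiest >= saturated_at

# Hypothetical 12-thread CPU (a Ryzen 3600 has 6 cores / 12 threads):
# one game thread is pegged, the rest are moderately busy.
loads = [100.0] + [45.0] * 11
overall, busiest, saturated = summarize_cpu(loads)
print(f"overall {overall:.1f}%, busiest thread {busiest:.0f}%, saturated: {saturated}")
```

Per-thread readings from any monitoring tool could be fed in as `per_thread_load`; the overall figure alone does not settle which component is the limit.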

And lastly, if you want to check other sources, this benchmark

https://www.gpucheck.com/game-gpu/ghost-recon-wildlands/amd-radeon-rx-6950-xt/amd-ryzen-5-3600/ultra

captures my configuration exactly (CPU and GPU), reaching 146 fps with Ultra settings, where I get 70 with High.

Regarding the 8-pin connector, you were absolutely correct. That was the culprit and now I am getting the promised FPS. Just kidding. Aside from the fact that this is internet folklore, do you think insufficient power delivery would somehow manifest in the synthetic benchmarks I mentioned? I think it would ...

gpucheck is one of hundreds of (Amazon affiliate program?) supposed tech sites with garbage info.

I've played it at 1440p; it doesn't fully utilize the card, so I went to Ultra 4K VSR for a fairly stable 60 fps.

What FPS do you get at 1440p High/Ultra?

Min/max 71/101 in the Ultra benchmark; in game it was 72/86.

I am getting 40/70 in the Ultra benchmark.

Yeah, that's not good. I don't use any tweaks or OC; the monitor is set to 120 Hz (my preference).

I do get up to 120 fps in some areas; others go down to 72/86.

I ran the GRB bench (DX) and got min/max 101/120; in game mostly 115-120, with some drops to the 90s at 1440p.

Hi,

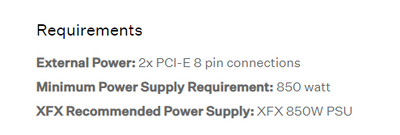

Your PSU is the minimum for a reference 6950XT, but for the XFX you will need to bump it up to 850 W.

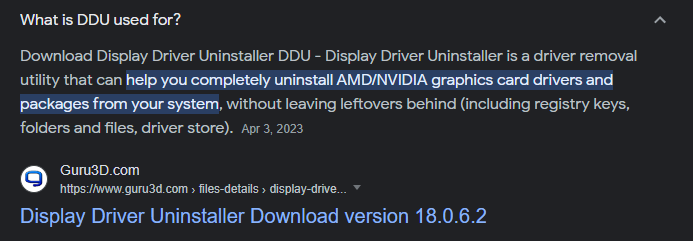

You also did not mention DDU, so I'm guessing the old Nvidia drivers may be causing some issues in your system.

At 1440p a CPU bottleneck is less of an issue. Why? I tested on a 2700X.

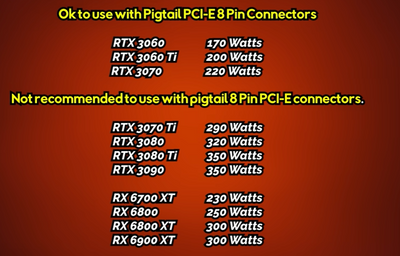

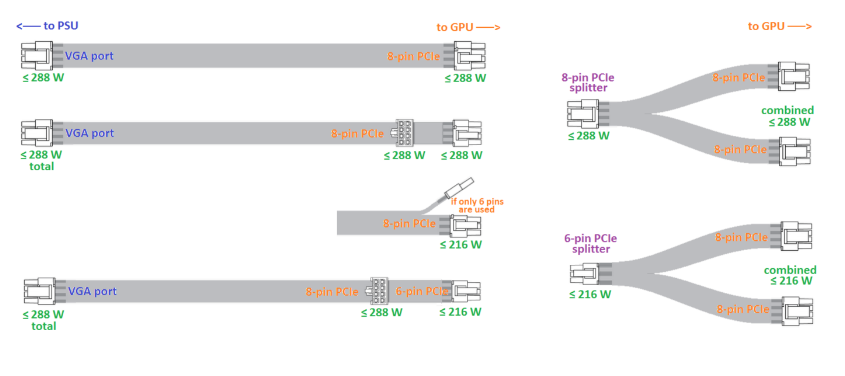

Do you really think that daisy chaining is internet folklore?

Long story short: it's not! On the majority of cards the differences will be little to almost nothing, but on an RX6800 and RX6950XT they are a bit more noticeable or outright hazardous, especially when pushing the OC. I already tested on mine, and if you don't believe my word, go have a look at tech tubers: JayzTwoCents saw some tiny differences as well, and that was on a 1080 Ti, let alone today's beasts.

There is also an official Seasonic recommendation not to daisy chain or pigtail (whatever they call it) on certain cards.

And finally, please be polite with other members even if you feel they do not add anything of value to you.

Good Luck

My apologies for being rude here; on that part you are right. I will try to contain my sharp words.

Regarding the pigtail cable, I tested with both a pigtail and dedicated cables. Absolutely no difference.

Could the Nvidia drivers really be the issue? Is this something documented? Tested? I would like to know more. This is the first intelligent suggestion in this thread.

But, I am afraid, the last. Let me explain.

Regarding the XFX recommendation, it is... a "recommendation", which usually means it must cover edge cases as well, like really power-hungry systems, so 850 W is fair.

In the meantime, my system is sipping power. My CPU's max TDP is only 65 W; add another 100 W for components (which is ridiculous). OK, round it up to 200 W, which is insane; this leaves 550 W for the GPU. Now, you know a lot about cables, and you added a picture which says top cards consume 300 W. How in the world is 550 W of headroom not enough? Is it less than 300 W? Even if XFX glued two 6950XTs together and it required 600 W (and thus 750 W is not enough), how could you deliver this over 2 PCI-E cables?
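
The arithmetic above can be written out as a small sketch. The 750 W, 200 W and 300 W figures come from this thread; the connector limits (150 W per 8-pin PCIe plug, 75 W from the slot) are the usual PCIe specification ratings, and the card having exactly two 8-pin plugs is an assumption based on the "2 PCI-E cables" mentioned above.

```python
PSU_WATTS = 750             # PSU rating from the first post
REST_OF_SYSTEM_WATTS = 200  # CPU + other components, deliberately rounded up
GPU_TYPICAL_WATTS = 300     # figure quoted from the picture for top cards

EIGHT_PIN_WATTS = 150       # PCIe spec rating per 8-pin connector
SLOT_WATTS = 75             # PCIe spec rating for the x16 slot

def gpu_budget(psu_watts, rest_watts):
    """Power left for the GPU after the rest of the system is served."""
    return psu_watts - rest_watts

def connector_limit(eight_pin_count):
    """In-spec power a card can draw through its 8-pin plugs plus the slot."""
    return eight_pin_count * EIGHT_PIN_WATTS + SLOT_WATTS

budget = gpu_budget(PSU_WATTS, REST_OF_SYSTEM_WATTS)
limit = connector_limit(2)  # assumption: two 8-pin plugs
print(f"GPU budget: {budget} W, typical draw: {GPU_TYPICAL_WATTS} W")
print(f"In-spec limit over two 8-pin plugs + slot: {limit} W")
```

This covers sustained draw only; it says nothing about short peaks above the average.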

It seems I am being rude again, but logic and math should also be criteria that show respect to each other, not only using nice words.

DDU is a widely used tool to clean up the driver mess (files and such) that could cause conflicts at the OS level. It may not help, but it's for troubleshooting.

The pigtail/daisy chain advice is a general recommendation; it may not hit you if you are not squeezing out all the juice, and that is one of your concerns. I've helped with some cases of intense crashing just by using a dedicated power cable for each 8-pin.

The CPU TDP is 65 W, but that is not the CPU power draw: the 2700X, with a 105 W TDP and PBO tweaks, had a 170 W power draw. The 3600 should hit 90 to 100ish watts at stock.

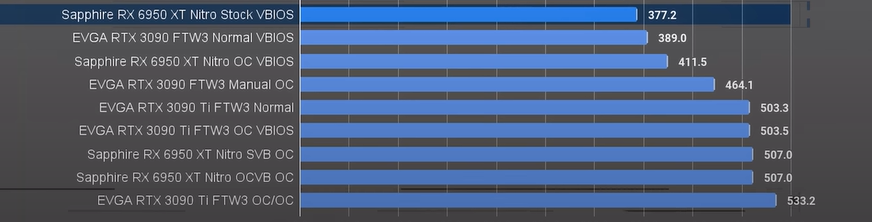

About the PSU, it's not really about average scores but more about stability under peak requirements, the so-called "transient spikes". I could not find any relevant data on transient spikes on Radeon, but below you have the averages. The Sapphire is not the XFX, but the power draw should be similar: you have 377 W on average, and if you even consider pushing the clocks, it will go up quickly.

For comparison, my RX6800 spikes at 350 W although it averages 250 W. I haven't measured the 6950XT but intend to do so soon.
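
As a rough sketch of the peak-versus-average point, the numbers above can be combined like this. The 1.4x spike ratio is simply the RX6800's 350 W / 250 W carried over to the 6950XT, which is an untested assumption; the 100 W for the rest of the system is also a placeholder, not a measurement.

```python
def estimated_peak(gpu_avg_watts, spike_ratio, cpu_watts, other_watts):
    """Estimate worst-case system draw if the GPU spikes above its average."""
    return gpu_avg_watts * spike_ratio + cpu_watts + other_watts

RX6800_SPIKE_RATIO = 350 / 250  # measured spike / average on the RX6800

peak = estimated_peak(
    gpu_avg_watts=377,               # Sapphire 6950XT average quoted above
    spike_ratio=RX6800_SPIKE_RATIO,  # assumption: same ratio applies
    cpu_watts=100,                   # upper end of the 3600 estimate above
    other_watts=100,                 # placeholder for board, drives, fans
)
for psu in (750, 850):
    print(f"{psu} W PSU: estimated peak {peak:.0f} W, margin {psu - peak:.0f} W")
```

Under these assumptions a 750 W unit is left with little margin on paper; real transients may be higher or lower.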

I'll try to keep digging for more help, but I'm pretty sure it could be software related or some strange hard restriction being applied.

Another good tip: I would also try switching to pBIOS or qBIOS if your card has dual BIOS, again, just for troubleshooting purposes.

Lastly, I would get MSI Afterburner and raise the power limit to 110% only, but not the clocks. Or do it in Adrenalin, it should be the same.

Good Luck

Crank up the options for the 6950XT and make it work harder.

Calling daisy chaining internet folklore is the reason why you can't even get your GPU running properly. People give you solutions, but you keep questioning them or plainly refusing help. The other guy already told you to use DDU on your Nvidia drivers and then install your AMD drivers. But of course you're gonna go around claiming internet folklore.

I think your issue is just one of those pieces of internet folklore and you're here just to troll.

Both of these suggestions have been eliminated and are not the cause of the issue. Tried DDU, helped diddly squat. Also tried dedicated cables - nada. Please read all my posts.

Re: pcie cabling/connections, from a safety (protecting your components) point of view.

Add an image (from another site) re the mentioned 288W,

In my experience, if there are power issues, the app will crash or the PC will shut down, not just run slower.

Running both (CPU/GPU) at approx. 50% utilization means the wait is on something else. More likely it is something like the GPU running in x4 or x8 mode (use GPU-Z to check), buggy Gen 4 PCIe (so force Gen 3), memory bandwidth issues, ReBAR being activated for the 6950XT while the CPU cannot handle it, ...
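
For a sense of scale on the x4/x8 and Gen 3/Gen 4 point, here is a small sketch of theoretical one-direction PCIe bandwidth. The per-lane figures are the nominal rates after 128b/130b encoding (about 0.985 GB/s for Gen 3 and 1.969 GB/s for Gen 4); real throughput is lower, and how much a given game depends on it is not something this calculation shows.

```python
# Nominal per-lane throughput in GB/s, one direction, after 128b/130b encoding.
GBPS_PER_LANE = {3: 0.985, 4: 1.969}

def link_bandwidth(gen, lanes):
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    return GBPS_PER_LANE[gen] * lanes

for gen in (3, 4):
    for lanes in (4, 8, 16):
        print(f"Gen {gen} x{lanes}: {link_bandwidth(gen, lanes):.1f} GB/s")
```

The link width and generation reported by GPU-Z can be plugged into `link_bandwidth` for comparison.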

Thank you for the suggestions.

Unfortunately, I tried ReBAR (on/off) and Gen 3 - no luck.

Most of the time that will be true: the GPU trips the PSU and the system crashes. In other situations, a daisy chain won't trip the PSU, as the wattage just won't go cleanly through the cable; it's hazardous and can crash the system, but there is a small chance that the GPU won't get the power it needs, or gets just enough to run slowly. Again, it's not the majority of cases, but it needs to be done as troubleshooting so we can move on to the next steps.

I still feel that if XFX stated 850 W as the minimum, then this should be checked too.

PCIe lanes are also a good way to go: the card could be in x4 mode. x8 shouldn't cut performance that much, but if you have 2 NVMe drives and the board manual states that populating a certain slot will cut the PCIe link to x8, well, it's worth checking.

Trying the card in another system would be one of the best troubleshooting steps right now.

Also see the other suggestions, but at this point, if it keeps giving you problems, accept the loss and open a warranty ticket.

Good luck

Daisy chaining is ruled out, forget about it already... I am running on two cables.

I will try a 1000 W PSU tomorrow; I'm almost certain it won't make a difference, since 3DMark is drawing 360 W and the scores are good.

Hi, like I told you before, be mindful of what you write in a community forum; keeping that posture will only lead to no one replying, or worse.

You already have an example above. Be humble when you seek help; no one here is obligated to give it. They, like me, are trying to help you out with no ulterior motives.

Have you even read the other suggestions?

Good luck

@johnnyenglish - yes, all the other suggestions have been read and taken into consideration.

Nothing has worked so far. Two cables or one, 750 W or 1000 W, DDU or no DDU, Gen 3 or Gen 4.

Just 2 days and 100 EUR wasted to eliminate those.

So, rather than shooting in the dark, and using deduction, I have come to a conclusion: if none of the suggestions are true, this means that the one that is left is true, however improbable (at least at first).

And the only conclusion left: AMD is garbage. Yes, I understand, I am writing in the AMD community and I can be cancelled, banned or swatted, so let me qualify this: the AMD Radeon 6950XT is garbage compared to the 1080 Ti in Ghost Recon Wildlands with High/Ultra settings. That, in essence, is actually the title of this thread - the answer was in front of our eyes all along!

Now, my next thought was - how do I get evidence to support that. Unlike many of us here I don't like to function on internet folklore. My thought process was - if this is the case and I have any luck proving - the only thing I am able to control is the settings of the game, because I wouldn't be able to get game source code or talk to developers. So I did exactly that - painstakingly switched on/off every setting in graphics and video tabs and running the benchmark.

The result - "Turf Effects" and "Vegetation Quality" made all the difference. So when those are swithced of, instead of 40/70 fps, I am now getting 70/100, no stutters, just like @goodplay with his Ryzen 5 5600x. So it all make sense - Nvidia proprietary dx11 feature is used in an old game. Developers and AMD are not really keen on making it work with Radeon cards, specially if most people running high end cards would also have something like 5800 at least, which is able to chew through vegetation rendering with software mode when Radeon cards don't cut it.

In short, this is a case of GPU manufacturer favoritism by game developers combined with a CPU that is weak for modern times.

Default Ultra profile: turf effects On, ultra veggies

Custom Ultra profile: turf effects Off, low veggies, everything else ultra.
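
The procedure described above (flip one setting at a time, rerun the benchmark, compare) can be sketched as follows. `run_benchmark` is a hypothetical stand-in for launching the in-game benchmark and reading the average fps, the `shadow_quality` setting is invented for illustration, and the fps numbers in `fake_benchmark` are made up purely so the example runs.

```python
def sweep_settings(baseline, low_values, run_benchmark):
    """Lower one setting at a time and report the fps gain versus baseline.

    baseline: dict of setting name -> current value.
    low_values: dict of setting name -> value to try instead.
    run_benchmark: callable taking a settings dict and returning average fps.
    """
    base_fps = run_benchmark(baseline)
    gains = {}
    for name, low in low_values.items():
        trial = dict(baseline, **{name: low})  # change exactly one setting
        gains[name] = run_benchmark(trial) - base_fps
    # Largest gain first: these settings cost the most performance.
    return sorted(gains.items(), key=lambda item: item[1], reverse=True)

def fake_benchmark(settings):
    """Made-up cost model standing in for the real in-game benchmark."""
    fps = 100.0
    if settings["turf_effects"] == "on":
        fps -= 15.0
    if settings["vegetation_quality"] == "ultra":
        fps -= 15.0
    if settings["shadow_quality"] == "ultra":
        fps -= 2.0
    return fps

baseline = {"turf_effects": "on", "vegetation_quality": "ultra", "shadow_quality": "ultra"}
low_values = {"turf_effects": "off", "vegetation_quality": "low", "shadow_quality": "low"}
for name, gain in sweep_settings(baseline, low_values, fake_benchmark):
    print(f"{name}: +{gain:.0f} fps")
```

Changing one setting per run keeps the attribution clean, at the cost of one benchmark run per setting; interactions between settings would need extra runs.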

Thanks and happy communing !

Nvidia and AMD have been "sponsoring" games like this for decades. Nvidia has the bigger dev team, so more "special" features. Remember NV PhysX? You still need that for some older games. I'm willing to bet that if the devs had multithreaded the feature better, it would work better on your CPU, but then they got a boatload of cash from NV to focus on NV performance.

Your emotional pitchforks are your weakness here, my friend. It would be fine to just accept the fact that your hardware is just not well matched, unless your GPU is somehow defective, which is very unlikely. Even GPU utilization can be kind of misleading: it just shows that all pipelines are in use, but it doesn't show how, and the engine you are running also matters. Most of the time I had a fully utilized GPU in Crysis Remastered with a 7900 XTX paired with a Ryzen 5600X, yet performance was horrible; after upgrading to the AM5 platform with a 7800X3D I have pretty much 40% more frames per second. This is how it works: pairing a serious GPU with an old, below-average CPU is just a bad idea if you are looking for maximum frame rates. That's just a fact; you can argue whatever you want, but it won't help you solve the issue. It's like the guy who was angry on the forum because his new 4090 gave him just 1 fps in World of Tanks compared to a 3080 10GB; well, he used an 8th-gen Intel Core i7, so the CPU was clearly the bottleneck. You basically have two frame rates, one for the CPU and another for the GPU, and the lower number is what you will really get. Talking about this as an AMD fan forum really isn't accurate; most of the time I was an Nvidia GPU user, I just buy the best offer for me. Offending someone who tries to help you will always just hurt you in the long run.
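
The "two frame rates" rule of thumb can be written as a one-line model. This is a simplification (real frame pacing is messier), and the fps caps below are illustrative numbers, not measurements.

```python
def effective_fps(cpu_fps_cap, gpu_fps_cap):
    """Delivered frame rate is limited by whichever side is slower."""
    return min(cpu_fps_cap, gpu_fps_cap)

def limiting_side(cpu_fps_cap, gpu_fps_cap):
    """Name the component that sets the frame rate."""
    return "CPU" if cpu_fps_cap < gpu_fps_cap else "GPU"

# Illustrative: a CPU able to prepare 70 frames/s feeding a GPU able to draw 150.
print(effective_fps(70, 150), limiting_side(70, 150))
# A faster GPU changes nothing while the CPU cap stays at 70.
print(effective_fps(70, 200), limiting_side(70, 200))
```

In this model the CPU cap itself can depend on the driver and on the settings in use, so it is not a fixed property of the processor alone.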

Well, I am not trying to offend anyone, it's just friendly banter. And a bit of poking fun at some of the suggestions, which, as this case proved (and I am sure so many others), were absolutely bugger all.

And the suggestions that did go in the right direction (weak system) were lacking any kind of hypothesis or rationale. "It's a bottleneck, your CPU is **bleep**, get over it". That did not help at all, since my question was posed with specifics in mind.

It did turn out that my CPU is **bleep**, but only when AMD is sharing a "fractal" with it

Here, here, step right up and get your answer: https://pc-builds.com/bottleneck-calculator

Be nice to the helpers here; they're not under any obligation to help anyone, it's free, gratis.

I can agree with almost everything, even the CPU pairing. If you go down the 1080p route, you will get a serious bottleneck of 50 to even 70 fps less.

But not at 1440p, which is what I said in my first post.

At 1440p or above, I can assure you that the difference between a 2700X and a 7950X is less than 30 fps.

I tried it with my former CPU (2700X) and my current CPU (7950X), with both an RX6800 and a 6950XT.

The issue, from what it looks like, is game optimization.

But one thing I cannot tolerate from another member is poor behaviour in a community forum.

I'm not an admin or moderator for that matter, but I will give fair warning, as I did, about where the conversation is heading. After that, I'll pretty much reply in the same manner.

: -)

I went from a 1080 Ti and Ryzen 3600 to a 6950XT, then swapped the 3600 for a 5800X3D. GR Breakpoint ran 50% better with all settings maxed at 1440p. This is on an ASRock X570 motherboard. Not sure where your bottleneck was, but 99.9% of the internet would agree the 6950XT is faster than the 1080 Ti, and so do I.

Now, if you asked me whether the 6950XT was a sensible upgrade in May of 2022, that's a whole different discussion.

Your CPU is probably the culprit. I went through the same thing with the same game. Get a better CPU. And get a minimum-compliant PSU while you're at it.