
X570: NVMe RAID0 very Slow Sequential Read with 2 x Intel P45x0 NAND SSDs - but SSDs perform fast individually (also at the same time) - but 2 x Optane RAID0 as fast as expected

Hello,

I've found a general motherboard manufacturer-independent compatibility issue with the combination of Intel DC P45x0 U.2 4TB SSDs (tested models P4500 and P4510 so far) and the AMD NVMe RAID feature with X570 chipsets.

A third party (Wendell from Level1Techs; cf. the full detailed forum thread there) can confirm the issue, so a personal user configuration error seems unlikely at this point.

Issue cliff notes:

- 2 SSDs are connected to an X570 chipset motherboard, one directly to CPU PCIe lanes, the second one gets its PCIe lanes through the X570 chipset.

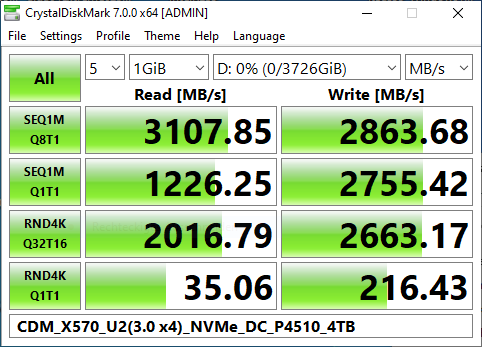

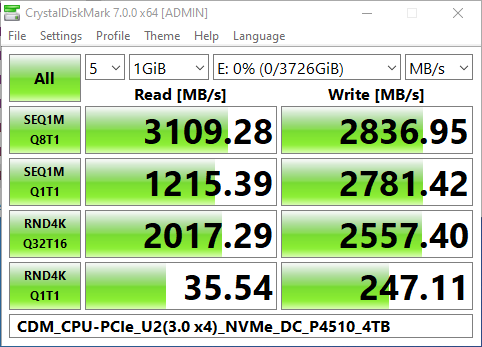

- In non-RAID NVMe operation the SSDs work fine with the expected full performance, even if you test them at the same time, so there is no PCIe bandwidth limit:

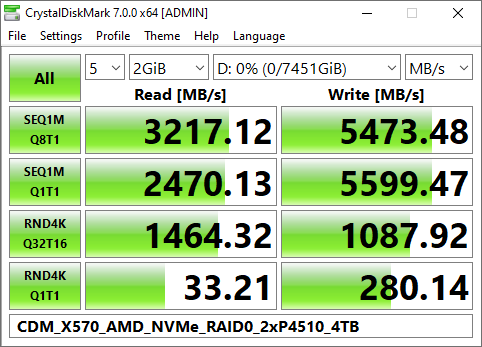

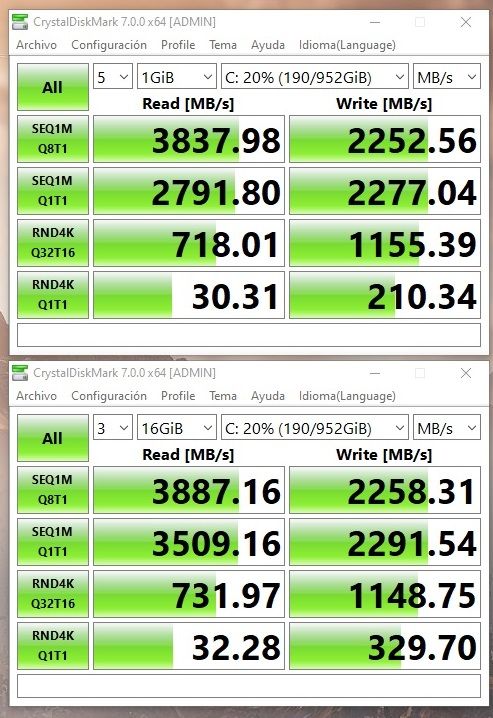

- After enabling the AMD NVMe RAID feature in the UEFI and configuring the drives as a RAID0 array (256 kB stripe size, read cache on), you get results like this:

As you can see, the sequential read result is very weak compared to a single drive (expected: between 5500 and 6000 MB/s).

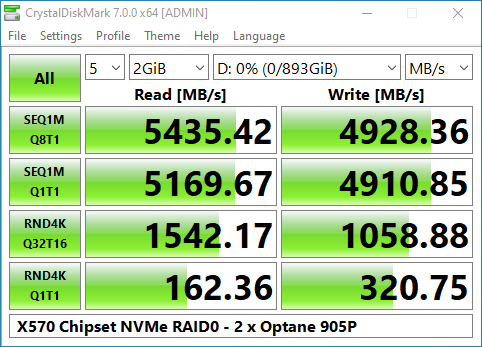

- I then verified that the CPU, the motherboard, Windows and CrystalDiskMark aren't limiting the read performance by connecting two Intel Optane 905P 480 GB SSDs to the very same PCIe interfaces and configuring them as an AMD RAID0 array with the exact same settings:

Here, the sequential read result scales to pretty much exactly 2x, as expected from RAID0 (the single Optane SEQ1MQ8T1 read result is a little above 2700 MB/s; I forgot to take a screenshot). A RAID0 with P4510 drives should be even faster since they have a higher SEQ1MQ8T1 read result (about 3100 MB/s).

- Tested with:

ASRock X570 Taichi, latest UEFI P3.03 (early access for trouble-shooting), 3700X, 2 x 32 GB ECC UDIMM DDR4-2666

ASUS Pro WS X570-ACE, latest UEFI 1302, 3900 PRO, 4 x 32 GB ECC UDIMM DDR4-2666

Windows 10 x64 Versions 1909 and 2004

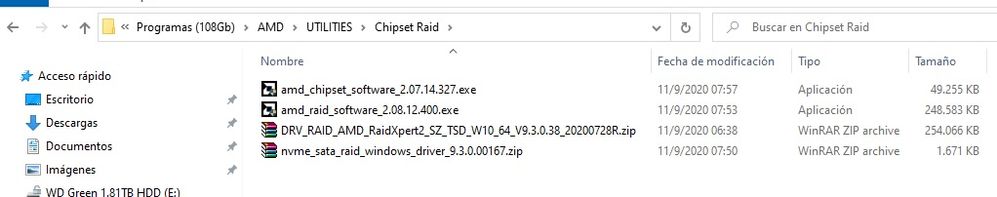

AMD chipset drivers 2.04.28.626

AMD RAID drivers 9.3.0.38

All SSDs with 0 % used space and configured after a secure erase

- The Intel SSDs have the latest firmware installed. Unfortunately, you cannot downgrade the SSDs' firmware according to Intel ("for security reasons"). Intel's tech support is also "looking into" the issue, but I didn't get the impression that they cared very much about it, so I hope that maybe someone on AMD's end could help to clear this up.

- ASRock's support thinks that this is something only AMD can address on the software side.
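To make the scaling comparison in the notes above easier to reproduce, here is a minimal Python sketch of the arithmetic. It assumes ideal RAID0 behaviour (sequential read equals single-drive throughput times the number of drives), which is an upper bound rather than a guarantee. The single-drive figures are the approximate ones quoted above; the function names are made up for illustration.

```python
# Approximate single-drive SEQ1MQ8T1 read results quoted in this post (MB/s).
OPTANE_905P_SINGLE = 2700  # "a little above 2700 MB/s"
P4510_SINGLE = 3100        # "about 3100 MB/s"


def ideal_raid0_read(single_mb_s: float, drives: int = 2) -> float:
    """Upper bound for RAID0 sequential read: single-drive rate times drive count."""
    return single_mb_s * drives


def scaling_efficiency(measured_mb_s: float, single_mb_s: float, drives: int = 2) -> float:
    """Fraction of the ideal RAID0 throughput that a measured result reaches."""
    return measured_mb_s / ideal_raid0_read(single_mb_s, drives)


if __name__ == "__main__":
    print("Optane 905P ideal RAID0 read:", ideal_raid0_read(OPTANE_905P_SINGLE), "MB/s")
    print("P4510 ideal RAID0 read:", ideal_raid0_read(P4510_SINGLE), "MB/s")
```

Passing a measured CrystalDiskMark result to `scaling_efficiency` gives a value close to 1.0 for an array that scales properly and close to 0.5 for a two-drive array that only reaches single-drive speed.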

Is there a way to establish contact with the software engineers that handle the AMD NVMe RAID feature to trouble-shoot this issue and hopefully resolve it with a driver or UEFI (module) update?

Thank you very much for the assistance!

Regards,

aBavarian Normie-Pleb


Your results show that you are near the saturation point for 4 lanes of PCIe 3.0.

If you want more bandwidth with X570, you will need to find native PCIe 4.0 hardware.

At present, SSD products span read speeds of 2000-3000 MB/s.


Each SSD is getting its own dedicated PCIe lanes (PCIe Gen3 x4, 1 x via X570 chipset, 1 x via CPU), so this unfortunately has nothing to do with my issue.

Also, the X570 chipset is connected to a Zen 2 CPU with a PCIe Gen4 x4 interface, meaning the chipset alone could handle two PCIe Gen3 x4 NVMe SSDs with close to full performance.

But, since only one SSD is using the chipset and the other is getting PCIe lanes directly from the CPU, this limitation doesn't apply at all to my case.
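As a rough sanity check of the bandwidth argument above, the link rates can be worked out in a few lines of Python. This is only a sketch: it uses the standard per-lane rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b line encoding, and it ignores packet and protocol overhead, so real-world throughput is somewhat lower. The function name is made up for illustration.

```python
def pcie_link_gb_s(gt_per_s: float, lanes: int) -> float:
    """Raw link bandwidth in GB/s after 128b/130b encoding (PCIe Gen3 and later)."""
    return gt_per_s * lanes * (128 / 130) / 8  # 8 bits per byte


GEN3_X4 = pcie_link_gb_s(8.0, 4)   # link of one Gen3 x4 NVMe SSD
GEN4_X4 = pcie_link_gb_s(16.0, 4)  # X570 chipset uplink to a Zen 2 CPU

if __name__ == "__main__":
    print(f"PCIe Gen3 x4: {GEN3_X4:.2f} GB/s")
    print(f"PCIe Gen4 x4: {GEN4_X4:.2f} GB/s")
    print(f"Gen3 x4 links that fit into the uplink: {GEN4_X4 / GEN3_X4:.1f}")
```

The Gen4 x4 uplink has twice the raw bandwidth of a single Gen3 x4 link, which is why two Gen3 x4 SSDs behind the chipset would still run at close to full speed.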


On my X570-A PRO my Intel 665p 2TB achieves very good speeds.

My SSD is connected directly to my R5 3600 processor.

I was disappointed that the bottom of the board was devoid of chips; adding more chips there would make for a 4TB SSD.


The issue I'm describing only appears when using more than one PCIe NVMe SSD in an AMD X570 system AND configuring them to be an AMD NVMe RAID0 array.

There seems to be a sort of traffic jam from the AMD NVMe RAID driver in Windows when sequentially reading large files from a RAID0 array from the Intel P45x0 SSDs.

What currently baffles everyone looking into this is not knowing what triggers it. As described in my initial post, two Optane NVMe SSDs in RAID0 reach >5300 MB/s sequential read, even though these SSDs should be slower than the P4510 in this particular benchmark.

This means that the AMD NVMe RAID driver is generally capable of delivering such throughput, but it acts up as soon as it is handling the P4510 4 TB SSDs.
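For readers unfamiliar with striping, here is a small Python sketch of how a sequential read is split across the members of a RAID0 array. It uses the textbook round-robin layout with the 256 kB stripe size from my configuration; how the AMD driver actually maps and queues requests internally is not known to me, so treat the layout and the function name as assumptions.

```python
STRIPE_SIZE = 256 * 1024  # 256 kB stripe size, as configured for the array
DRIVES = 2                # two-drive RAID0


def split_request(offset: int, length: int,
                  stripe: int = STRIPE_SIZE, drives: int = DRIVES):
    """Split a logical read into (drive, drive_offset, length) chunks.

    Assumes plain round-robin RAID0: stripe 0 on drive 0, stripe 1 on drive 1, ...
    """
    chunks = []
    end = offset + length
    while offset < end:
        stripe_index = offset // stripe
        within = offset % stripe                 # position inside the stripe
        drive = stripe_index % drives            # member that holds this stripe
        drive_offset = (stripe_index // drives) * stripe + within
        n = min(stripe - within, end - offset)   # do not cross a stripe boundary
        chunks.append((drive, drive_offset, n))
        offset += n
    return chunks


if __name__ == "__main__":
    # One 1 MiB sequential read, the block size used by the SEQ1M test.
    for chunk in split_request(0, 1024 * 1024):
        print(chunk)
```

With these settings a single 1 MiB read touches both drives twice, so the array only scales if the driver keeps both members busy in parallel.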


I have looked at servers which use slightly larger SSD products stuffed into a backplane. These are also PCIe based as the server is a 1U setup which suggests it is meant for database servers and busy web servers in the rack.

What I am not sure about is whether there is software RAID or what the server uses. Hardware RAID used to be needed, but now PCIe switches handle the SSDs more deftly.


AMD's and Intel's motherboard RAID features are all software-based, meaning the UEFI and the drivers run the show. The cause of the issue is somewhere in there.

There are ways to use high-capacity enterprise NVMe SSDs in normal desktop systems, too - I'm using this one:


That unit is for SATA SSDs, while modern enterprise SSD products are substantially larger.


Nope, just because SSDs use a 2.5" form factor does not mean that they are SATA. All current technologies are available as 2.5" drives - the height of the drives varies from 7 to 15 mm, though.

The P45x0 and Optane 905P NVMe SSDs I'm using have the 2.5" form factor with a U.2 interface for PCIe Gen3 x4 connections to the motherboard via SAS HD cables and M.2-to-U.2 adapters.

U.2 SSDs have a height of 15 mm - imagine two current standard 7 mm SATA SSDs stacked on top of each other with a massive aluminum casing to help get rid of the heat (controllers of U.2 SSDs are "a bit" more power-hungry when writing data to the NAND/3D XPoint storage chips).


I have a similar box I use with 2.5" hard disks to make new boot media etc for laptops or to clone hard disks


Yes, I also use such a bay for standard SATA drives since I find it quite useful to be able to easily swap drives without having to open up a system and remove components to get to an M.2 slot, for example.

This is the reason why I use these U.2 drives. I see the point of M.2 drives in laptops etc., but since I'm an old-fashioned dude who doesn't have a window on the PC case, I prefer easy access to components over minimalistic builds that look nice in photos.


U.2 drives are harder to leverage as they use the same 4 lanes of PCIe which tend to be in short supply with AM4.

I have a cable that can connect a U.2 drive to an M.2 slot which affords the ability to install the unit for use or file recovery etc.

Micron has a 30TB SSD which I have considered for the gaming box, but I ended up with the Intel 665p 2TB SSD


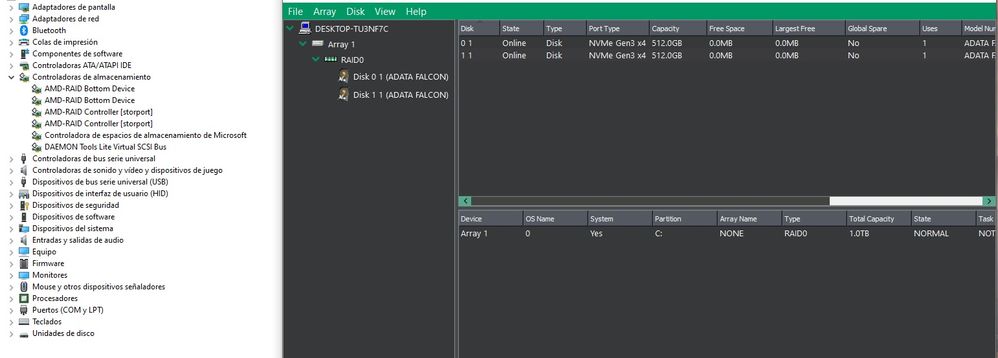

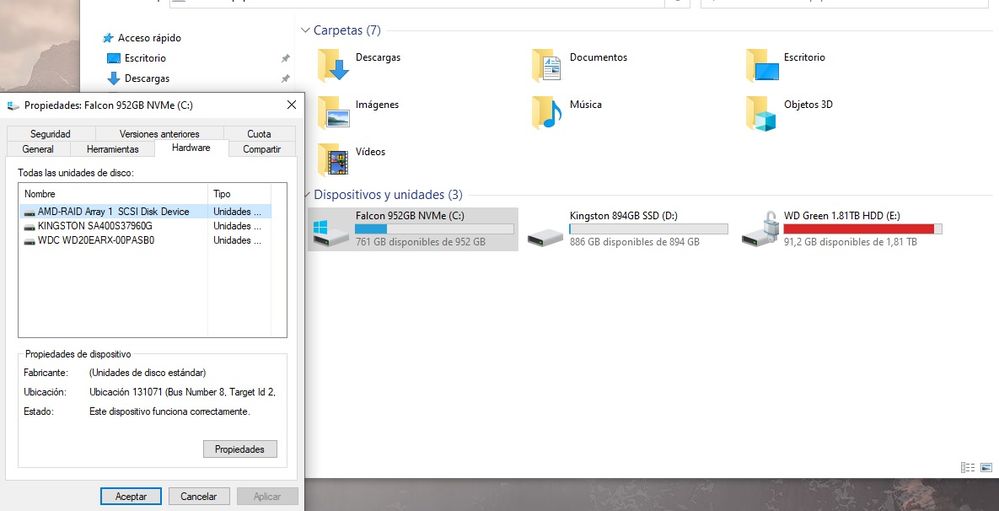

Hi there, I have the same issue: 2 x Adata Falcon 512GB NVMe PCIe 3.0 x4 in a RAID 0 array on an ASUS X570 TUF Gaming Plus (WIFI) with a Ryzen 5 3600XT (RAID 0 across the chipset and CPU slots). Read speeds in CrystalDiskMark are the same as for a single NVMe drive. The Adata Falcons are rated at 3500 MB/s read and 1500 MB/s write (DRAM-less), and the speeds in RAID 0 aren't great at all. What could it be? Is there a chance of getting a new and better RAID driver revision in the near future?

Also, is it possible that one of the NVMe drives is simply a bad performer, i.e. a bad drive?


Intel and Samsung are both known to use a RAM cache to improve performance.

DRAM-less SSDs have to depend on the controller to provide all of the bandwidth.