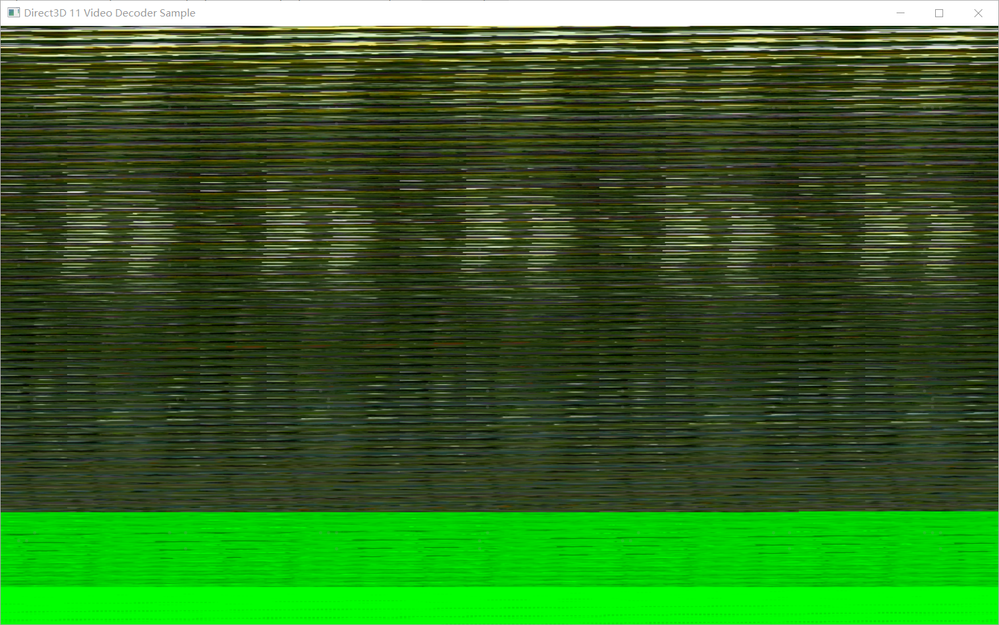

I want to use the D3D11 VideoProcessor to render decoded video, but I found that when the video's height is not a multiple of 16 (for example 1080 or 360), the rendered output is corrupted, as in the image above. Heights such as 720 or 4K render correctly.
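To make the "mod 16" observation concrete, here is a small sketch of the arithmetic. `IsAligned` and `AlignUp` are hypothetical helper names, not part of my renderer, and I am assuming 2160 as the 4K height.

```cpp
#include <cstdint>

// Hypothetical helpers, only to illustrate which heights are multiples of 16.
constexpr bool IsAligned(uint32_t value, uint32_t alignment)
{
    return value % alignment == 0;
}

// Rounds value up to the next multiple of alignment (alignment must be > 0).
constexpr uint32_t AlignUp(uint32_t value, uint32_t alignment)
{
    return (value + alignment - 1) / alignment * alignment;
}

// Heights that fail for me: 1080 and 360 are not multiples of 16.
static_assert(!IsAligned(1080, 16), "1080 is not a multiple of 16");
static_assert(!IsAligned(360, 16), "360 is not a multiple of 16");

// Heights that work for me: 720 and 2160 are multiples of 16.
static_assert(IsAligned(720, 16), "720 is a multiple of 16");
static_assert(IsAligned(2160, 16), "2160 is a multiple of 16");
```

If a driver padded the surface height to a multiple of 16, a 1080-row frame would occupy `AlignUp(1080, 16)` = 1088 rows, but I have not confirmed that this is what happens here.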

I also tried loading a 1080p BMP file, converting it to NV12, and copying it into a GPU Texture2D. The result is the same.

Here is my sample code. It is similar to DX11VideoRenderer from Windows-classic-samples.

By the way, I also tested DX11VideoRenderer with TopoEdit, and the 1080p video rendered normally. So I copied the frame texture from GPU to CPU, converted it to ARGB, and saved it to a BMP file. That BMP file looks similar to the image above.

I don't know why this happens. My Intel UHD 630 works fine, so maybe it has something to do with how the data is laid out. Is there a way to map NV12 data from a 1080p frame into a Texture2D buffer correctly?

    HRESULT CD3D11VideoProcessor::ProcessImagePresent(CWICBitmap& cWICBitmap)
    {
        HRESULT hr = S_OK;
        ID3D11VideoProcessorInputView* pD3D11VideoProcessorInputViewIn = NULL;
        ID3D11VideoProcessorOutputView* pD3D11VideoProcessorOutputView = NULL;
        ID3D11VideoDevice* pD3D11VideoDevice = NULL;
        ID3D11Texture2D* pInTexture2D = NULL;
        ID3D11Texture2D* pOutTexture2D = NULL;

        IF_FAILED_RETURN(m_pD3D11VideoContext == NULL ? E_UNEXPECTED : S_OK);

        try
        {
            IF_FAILED_THROW(m_pD3D11Device->QueryInterface(__uuidof(ID3D11VideoDevice), reinterpret_cast<void**>(&pD3D11VideoDevice)));

            D3D11_TEXTURE2D_DESC desc2D;
            desc2D.Width = cWICBitmap.GetWidth();
            desc2D.Height = cWICBitmap.GetHeight();
            desc2D.MipLevels = 1;
            desc2D.ArraySize = 1;
            desc2D.Format = DXGI_FORMAT_B8G8R8A8_UNORM;
            desc2D.SampleDesc.Count = 1;
            desc2D.SampleDesc.Quality = 0;
            desc2D.Usage = D3D11_USAGE_DEFAULT;
            desc2D.BindFlags = D3D11_BIND_RENDER_TARGET;
            desc2D.CPUAccessFlags = 0;
            desc2D.MiscFlags = 0;

            IF_FAILED_THROW(cWICBitmap.Create2DTextureFromBitmap(m_pD3D11Device, &pInTexture2D, desc2D));

            desc2D.Format = DXGI_FORMAT_NV12;
            IF_FAILED_THROW(m_pD3D11Device->CreateTexture2D(&desc2D, NULL, &pOutTexture2D));

            D3D11_VIDEO_PROCESSOR_INPUT_VIEW_DESC pInDesc;
            ZeroMemory(&pInDesc, sizeof(pInDesc));
            pInDesc.FourCC = 0;
            pInDesc.ViewDimension = D3D11_VPIV_DIMENSION_TEXTURE2D;
            pInDesc.Texture2D.MipSlice = 0;
            pInDesc.Texture2D.ArraySlice = 0;

            D3D11_VIDEO_PROCESSOR_OUTPUT_VIEW_DESC pOutDesc;
            ZeroMemory(&pOutDesc, sizeof(pOutDesc));
            pOutDesc.ViewDimension = D3D11_VPOV_DIMENSION_TEXTURE2D;
            pOutDesc.Texture2D.MipSlice = 0;

            // process to present
            D3D11_MAPPED_SUBRESOURCE MappedSubResource;
            UINT uiSubResource = D3D11CalcSubresource(0, 0, 0);

            ID3D11Texture2D* pARGBTexture = NULL;
            pInTexture2D->GetDesc(&desc2D);
            desc2D.BindFlags = 0;
            desc2D.CPUAccessFlags = D3D11_CPU_ACCESS_READ;
            desc2D.Usage = D3D11_USAGE_STAGING;
            desc2D.MiscFlags = 0;

            IF_FAILED_THROW(m_pD3D11Device->CreateTexture2D(&desc2D, NULL, &pARGBTexture));
            m_pD3D11DeviceContext->CopyResource(pARGBTexture, pInTexture2D);
            IF_FAILED_THROW(m_pD3D11DeviceContext->Map(pARGBTexture, uiSubResource, D3D11_MAP_READ, 0, &MappedSubResource));

            BYTE* src=new BYTE[desc2D.Width * desc2D.Height * 6 / 4];
            BYTE* src_y = src;
            BYTE* src_uv = src_y + desc2D.Width * desc2D.Height;

            libyuv::ARGBToNV12((BYTE*)MappedSubResource.pData, MappedSubResource.RowPitch,
                src_y, desc2D.Width,
                src_uv, desc2D.Width,
                desc2D.Width, desc2D.Height);

            m_pD3D11DeviceContext->Unmap(pARGBTexture, uiSubResource);
            SAFE_RELEASE(pARGBTexture);

            // create nv12 texture
            ID3D11Texture2D* pNV12Texture = NULL;
            desc2D.CPUAccessFlags = D3D11_CPU_ACCESS_WRITE;
            desc2D.Usage = D3D11_USAGE_STAGING;
            desc2D.MiscFlags = 0;
            desc2D.Format = DXGI_FORMAT_NV12;

            IF_FAILED_THROW(m_pD3D11Device->CreateTexture2D(&desc2D, NULL, &pNV12Texture));
            IF_FAILED_THROW(m_pD3D11DeviceContext->Map(pNV12Texture, uiSubResource, D3D11_MAP_WRITE, 0, &MappedSubResource));

            BYTE* dst = (BYTE*)MappedSubResource.pData;
            memcpy(dst, src, desc2D.Width * desc2D.Height * 6 / 4);

            m_pD3D11DeviceContext->Unmap(pNV12Texture, uiSubResource);
            SAFE_DELETE(src);

            m_pD3D11DeviceContext->CopyResource(pOutTexture2D, pNV12Texture);
            RenderNV12Texture(pOutTexture2D);

            ID3D11Texture2D* dxgi_back_buffer = NULL;
            hr = m_SwapChain->GetBuffer(0, __uuidof(ID3D11Texture2D), (void**)&dxgi_back_buffer);

            IF_FAILED_THROW(pD3D11VideoDevice->CreateVideoProcessorInputView(pOutTexture2D, m_pD3D11VideoProcessorEnumerator, &pInDesc, &pD3D11VideoProcessorInputViewIn));
            IF_FAILED_THROW(pD3D11VideoDevice->CreateVideoProcessorOutputView(dxgi_back_buffer, m_pD3D11VideoProcessorEnumerator, &pOutDesc, &pD3D11VideoProcessorOutputView));

            D3D11_VIDEO_PROCESSOR_STREAM stream_data;
            ZeroMemory(&stream_data, sizeof(stream_data));
            stream_data.Enable = TRUE;
            stream_data.OutputIndex = 0;
            stream_data.InputFrameOrField = 0;
            stream_data.PastFrames = 0;
            stream_data.FutureFrames = 0;
            stream_data.ppPastSurfaces = nullptr;
            stream_data.ppFutureSurfaces = nullptr;
            stream_data.pInputSurface = pD3D11VideoProcessorInputViewIn;
            stream_data.ppPastSurfacesRight = nullptr;
            stream_data.ppFutureSurfacesRight = nullptr;
            stream_data.pInputSurfaceRight = nullptr;

            IF_FAILED_THROW(m_pD3D11VideoContext->VideoProcessorBlt(m_pD3D11VideoProcessor, pD3D11VideoProcessorOutputView, 0, 1, &stream_data));

            hr = m_SwapChain->Present(1, 0);

            SAFE_RELEASE(pNV12Texture);
            SAFE_RELEASE(dxgi_back_buffer);
        }
        catch (HRESULT) {}

        SAFE_RELEASE(pOutTexture2D);
        SAFE_RELEASE(pInTexture2D);
        SAFE_RELEASE(pD3D11VideoProcessorOutputView);
        SAFE_RELEASE(pD3D11VideoProcessorInputViewIn);
        SAFE_RELEASE(pD3D11VideoDevice);

        return hr;
    }