- AMD Community

- Support Forums

- PC Drivers & Software


12bit issue for RGB444 4K 60hz (RX480, BenQ EW3270u, Windows 10)

Graphic Card: RX480

Radeon Driver: 19.8.1 (same with stable 19.5.1+)

Monitor: BenQ EW3270u

Connection cable: DisplayPort

OS: Windows 10 1903 (same behavior with 1809)

Problem:

In RGB444 / 4K / 60 Hz mode, I can't enable 12-bit color depth.

The AMD control panel shows the 12-bit option, but selecting it makes the screen blink (as if the driver tried to apply it), and the setting then comes back reverted to 10 bit.

Note that the behavior is exactly the same as it was for 10 bit in this older thread; the 10-bit issue was fixed in 19.5.1:

Windows 10 RGB444 4K 60hz 10bits mode issue

Note also that the screen is generally capable of 12 bit (it can be enabled successfully at 30Hz), and BenQ advertises this screen as DP1.4 (which should provide sufficient bandwidth).

---

Yes, but nonetheless:

- The Display specifies in its EDID that it can do 12 bit

- AMD Driver sees/displays the option

- When 12 bit is set at 30 Hz, the screen keeps working (meaning it can accept 12 bit). The problem only occurs at 60 Hz.

I am curious why you respond with this graph/info, given this thread (also about the previous 10-bit bug), where you noted that the same screen can run at 12 bit (at 30 Hz):

My monitor supports 10bit but cannot be enabled in Radeon setting

This could be a bug in either of two ways:

- Either it is a driver bug similar to the 10bit bug (meaning the screen + card can do it, but the driver has a bad assumption somewhere)

- Or it is physically impossible to have this screen do 12bit at 60Hz (at 4K), in which case it would be nice if the option didn't show up (I guess)

I do not need 12 bit, but I notice that other things misbehave, likely because they try to enable 12 bit: switching HDR on in Windows, or some players/games entering fullscreen. They then drop to 30 Hz, or the screen takes a long time to go blank and the setting to revert.

---

I've checked the EDID on my BenQ EW3270U, and it only supports 10 bits per color over the DisplayPort input, according to the EDID Basic Display Parameters block (byte 20 is 0xB5 = 0b10110101, which decodes to 1 = digital, 011 = 10 bit, 0101 = DisplayPort).

It does support 36-bit color (i.e. 12 bits per color component) over the HDMI input, according to the HDMI 1.4 Vendor-Specific Data Block (IEEE OUI 0x000C03) in the CEA/CTA-861 extension.
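The bit-field decoding of byte 20 described above can be sketched in a few lines of Python. This is a minimal illustration assuming the EDID 1.4 layout of the Video Input Definition byte (bit 7 = digital input, bits 6-4 = color bit depth, bits 3-0 = interface type); the table and function names are hypothetical.

```python
# EDID 1.4 byte 20, bits 6-4: bits per color component for a digital input
BIT_DEPTHS = {0b000: None, 0b001: 6, 0b010: 8, 0b011: 10,
              0b100: 12, 0b101: 14, 0b110: 16}  # 0b000 = undefined, 0b111 = reserved

# EDID 1.4 byte 20, bits 3-0: digital interface standard
INTERFACES = {0b0000: "undefined", 0b0001: "DVI", 0b0010: "HDMI-a",
              0b0011: "HDMI-b", 0b0100: "MDDI", 0b0101: "DisplayPort"}


def decode_video_input(byte20: int) -> dict:
    """Decode the EDID 1.4 Video Input Definition byte (offset 20)."""
    if not byte20 & 0x80:  # bit 7 clear = analog input, different layout
        return {"digital": False}
    return {
        "digital": True,
        "bits_per_color": BIT_DEPTHS.get((byte20 >> 4) & 0x07),
        "interface": INTERFACES.get(byte20 & 0x0F, "reserved"),
    }


print(decode_video_input(0xB5))
# {'digital': True, 'bits_per_color': 10, 'interface': 'DisplayPort'}
```

Note that this byte describes only one bit depth for one interface, which is why it cannot express the HDMI deep-color capabilities on its own.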

So the driver probably tries to use 12-bit on the DisplayPort input, which only supports 10-bit bpc.

Here are the contents of my EDID (use Entech MonInfo or Web Based EDID Reader to decode them):

00 FF FF FF FF FF FF 00 09 D1 50 79 45 54 00 00 26 1C 01 04 B5 46 27 78 3F 59 95 AF 4F 42 AF 26

0F 50 54 A5 6B 80 D1 C0 B3 00 A9 C0 81 80 81 00 81 C0 01 01 01 01 4D D0 00 A0 F0 70 3E 80 30 20

35 00 BA 89 21 00 00 1A 00 00 00 FF 00 48 39 4A 30 30 39 32 31 30 31 39 0A 20 00 00 00 FD 00 28

3C 87 87 3C 01 0A 20 20 20 20 20 20 00 00 00 FC 00 42 65 6E 51 20 45 57 33 32 37 30 55 0A 01 C8

02 03 3F F1 51 5D 5E 5F 60 61 10 1F 22 21 20 05 14 04 13 12 03 01 23 09 07 07 83 01 00 00 E2 00

C0 6D 03 0C 00 10 00 38 78 20 00 60 01 02 03 E3 05 E3 01 E4 0F 18 00 00 E6 06 07 01 53 4C 2C A3

66 00 A0 F0 70 1F 80 30 20 35 00 BA 89 21 00 00 1A 56 5E 00 A0 A0 A0 29 50 2F 20 35 00 BA 89 21

00 00 1A BF 65 00 50 A0 40 2E 60 08 20 08 08 BA 89 21 00 00 1C 00 00 00 00 00 00 00 00 00 00 BB
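A rough sketch of how the HDMI VSDB can be located in the dump above: walk the CEA/CTA-861 data block collection of the extension block (the second 128 bytes), find the vendor-specific block whose OUI is 0x000C03, and read its deep-color flags and Max_TMDS_Clock byte. It assumes the HDMI 1.4 VSDB layout (3-byte OUI, 2-byte physical address, then the flags byte, then the clock in 5 MHz units); the helper names are made up for illustration, and it does no checksum validation.

```python
# CTA-861 extension block: the second 128 bytes of the EDID dump above
CTA_EXT = bytes.fromhex(
    "02 03 3F F1 51 5D 5E 5F 60 61 10 1F 22 21 20 05 14 04 13 12 03 01 23 09 07 07 83 01 00 00 E2 00 "
    "C0 6D 03 0C 00 10 00 38 78 20 00 60 01 02 03 E3 05 E3 01 E4 0F 18 00 00 E6 06 07 01 53 4C 2C A3 "
    "66 00 A0 F0 70 1F 80 30 20 35 00 BA 89 21 00 00 1A 56 5E 00 A0 A0 A0 29 50 2F 20 35 00 BA 89 21 "
    "00 00 1A BF 65 00 50 A0 40 2E 60 08 20 08 08 BA 89 21 00 00 1C 00 00 00 00 00 00 00 00 00 00 BB"
)

HDMI_OUI = bytes([0x03, 0x0C, 0x00])  # OUI 0x000C03, stored least-significant byte first


def find_hdmi_vsdb(ext: bytes):
    """Return the payload of the HDMI Vendor-Specific Data Block, or None."""
    if ext[0] != 0x02:
        raise ValueError("not a CEA/CTA-861 extension block")
    dtd_start = ext[2]  # data blocks run from byte 4 up to the first detailed timing
    i = 4
    while i < dtd_start:
        tag, length = ext[i] >> 5, ext[i] & 0x1F  # 3-bit tag, 5-bit payload length
        payload = ext[i + 1 : i + 1 + length]
        if tag == 3 and payload[:3] == HDMI_OUI:  # tag 3 = vendor-specific
            return payload
        i += 1 + length
    return None


def decode_deep_color(vsdb: bytes) -> dict:
    """Decode the optional flags byte and Max_TMDS_Clock that follow the address."""
    flags = vsdb[5] if len(vsdb) > 5 else 0
    return {
        "dc_48bit": bool(flags & 0x40),
        "dc_36bit": bool(flags & 0x20),  # 12 bits per component
        "dc_30bit": bool(flags & 0x10),  # 10 bits per component
        "dc_y444": bool(flags & 0x08),   # deep color also allowed in YCbCr 4:4:4
        "max_tmds_mhz": vsdb[6] * 5 if len(vsdb) > 6 else None,
    }


print(decode_deep_color(find_hdmi_vsdb(CTA_EXT)))
# {'dc_48bit': False, 'dc_36bit': True, 'dc_30bit': True, 'dc_y444': True, 'max_tmds_mhz': 600}
```

On this dump, the flags byte is 0x38, so 30-bit and 36-bit deep color are advertised for HDMI, with a 600 MHz maximum TMDS clock.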

---

Thanks for your detailed analysis, but if nobody from AMD reads and fixes this...

Also specifically to your sentence:

> So the driver probably tries to use 12-bit on the DisplayPort input, which only supports 10-bit bpc.

What then do you make of it that enabling 12bit at 4k at 30Hz works?

And in the hopes that this eventually gets read by an AMD person:

My only goal is to get this inconsistency fixed. Either the fix is to really do 12 bit, or the fix is to not detect 12 bit as available. In the current state, there seems to be inconsistent redundancy: one part of the driver thinks "we can do it" while the other says "no, we can't".

The problem is that some tools/programs, or maybe even automatic switching to HDR, see that 12 bit is available and try to use it... and fail, with a long black screen followed by a revert/fallback to something bad (just as the 10-bit bug fell back to 30 Hz before it was fixed).

---

According to the display's manual, HDR only works at 1920x1080@60Hz and 4K@60Hz (with the disclaimer "Models with HDR feature only"). Based on other information, 12-bit color at 4K only works at 30 Hz, so that would pose a problem. I would say: if you use HDR, do not use 12-bit color.

---

Yes, obviously I won't choose it manually, because I know it doesn't work.

But as long as the driver says "hey, 12bit is available", some applications try to automatically switch to it, and fail.

Also, this bug is not directly related to HDR. Color depth is a separate setting, and the bug is with color depth.

The only way HDR plays into this is that applications can request a switch to HDR mode, which also tries to increase the color depth.

Like I said, I don't necessarily want 12 bit. I just wish this bug were fixed in some fashion, be it correctly reading what the screen can do or correctly going through with 12 bit, whichever is the right fix. Because some things switch automatically, it is very frustrating.

---

@pomo

>What then do you make of it that enabling 12bit at 4k at 30Hz works?

I suppose it works because 4K 30 Hz with 12-bit RGB 4:4:4 fits within the available display interface bandwidth. It looks like the display controller only supports HBR2 (i.e. DisplayPort 1.2) link rates, not HBR3, in spite of being advertised as DisplayPort 1.4, and HBR2 is not enough for 12-bit 60 Hz in RGB/YCbCr 4:4:4. You first have to select YCbCr 4:2:2 under Display > Pixel Format in the Radeon Settings control panel; then you will be able to enable 12 bpc in the Color Depth option above it. It is not actually possible to use 12 bpc with the RGB 4:4:4 or YCbCr 4:4:4 pixel format in 60 Hz mode, so it's a control panel bug, not a driver bug.
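The bandwidth argument can be checked with a little arithmetic. This sketch assumes a 4-lane DisplayPort link with 8b/10b coding (80% of the raw rate carries data) and uses the 533.25 MHz pixel clock of the EDID's 3840x2160 @60Hz detailed timing; it ignores protocol overhead such as secondary data packets, so treat the margins as approximate. The function names are hypothetical.

```python
# Raw per-lane DisplayPort link rates in Gbit/s
LINK_RATES_GBPS = {"HBR2": 5.4, "HBR3": 8.1}


def dp_payload_gbps(link_rate: str, lanes: int = 4) -> float:
    """Usable data rate after 8b/10b coding (80% of the raw link rate)."""
    return LINK_RATES_GBPS[link_rate] * lanes * 0.8


def video_gbps(pixel_clock_mhz: float, bits_per_pixel: int) -> float:
    """Uncompressed video data rate for a pixel clock and bits per pixel."""
    return pixel_clock_mhz * bits_per_pixel / 1000


CLOCK_4K60_MHZ = 533.25  # EDID detailed timing for 3840x2160 @60Hz

# 12-bit 4:4:4 carries 3 x 12 = 36 bits per pixel; 12-bit 4:2:2 averages 2 x 12 = 24
for label, bpp in [("12-bit RGB 4:4:4", 36), ("12-bit YCbCr 4:2:2", 24)]:
    need = video_gbps(CLOCK_4K60_MHZ, bpp)
    for rate in LINK_RATES_GBPS:
        fits = need <= dp_payload_gbps(rate)
        print(f"{label}: {need:.3f} Gbit/s on {rate}: {'fits' if fits else 'does not fit'}")
```

With these assumptions, 12-bit RGB at 60 Hz (about 19.2 Gbit/s) exceeds the roughly 17.28 Gbit/s HBR2 payload but would fit HBR3, while 12-bit YCbCr 4:2:2 fits HBR2, which matches the workaround described above.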

The actual reason why the VESA Basic Display Parameters block only reports 10-bit color is a limitation of the EDID format: it simply cannot specify supported color depths for every possible RGB/YCbCr encoding. That would require the DisplayID standard (block 0x26 Display Interface Features in DisplayID 2.0, or block 0x0F Display Interface Data in DisplayID 1.3), which this monitor does not support.

Anyway, the EW3270U uses a 10-bit MVA display panel (Innolux M315DJJ-K30; 8-bit with Hi-FRC according to some sources), so 12-bit color would make no visible difference.

---

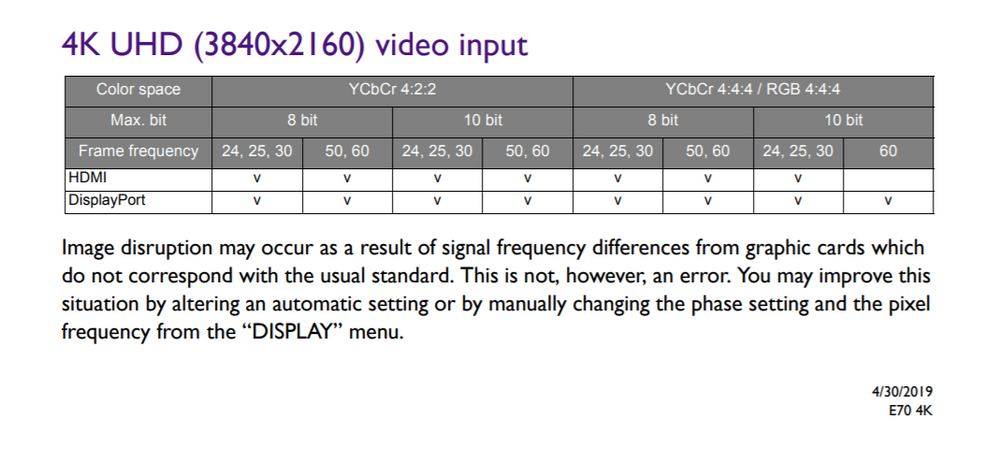

FYI, here are the relevant 4K modes for the EW3270U.

10- and 12-bit color 2160p60/p50 modes require chroma subsampling, since in RGB/YCbCr 4:4:4 they exceed the maximum TMDS clock of 600 MHz specified in the HDMI Vendor-Specific Data Block of the CEA-861 extension; however, 2160p30 with 12-bit RGB 4:4:4 stays within the HDMI 2.0 maximum of 14.4 Gbit/s (18 Gbit/s minus the 8b/10b coding overhead):

- CEA 2160p50 and 2160p60 modes (VIC 96 and 97) use a 594 MHz pixel clock (standard CEA-861 timings) and require 14.256, 17.82, or 21.384 Gbit/s for 8-, 10-, or 12-bit RGB/YCbCr 4:4:4 (24, 30, or 36 bits per pixel), while YCbCr 4:2:2 requires 9.504, 11.88, or 14.256 Gbit/s (2/3 of the bandwidth). These modes also support YCbCr 4:2:0 subsampling (1/2 of the bandwidth) at 7.128, 8.91, or 10.692 Gbit/s.

- CEA 2160p25 and 2160p30 modes (VIC 94 and 95) use a 297 MHz pixel clock and require 7.128, 8.91, or 10.692 Gbit/s for RGB/YCbCr 4:4:4, while YCbCr 4:2:2 requires 4.752, 5.94, or 7.128 Gbit/s (2/3 of the bandwidth).
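The HDMI figures in the list above follow from pixel clock times bits per pixel. A minimal sketch that reproduces them and compares each against the 14.4 Gbit/s HDMI 2.0 data limit; like the list, it treats 4:2:2 as 2/3 and 4:2:0 as 1/2 of the 4:4:4 rate and does not model how HDMI actually packs subsampled pixels onto the TMDS link. The names are illustrative only.

```python
HDMI20_DATA_GBPS = 18.0 * 8 / 10  # 18 Gbit/s TMDS minus 8b/10b overhead = 14.4 Gbit/s

# Bits per pixel as a multiple of bits per component, per pixel encoding
COMPONENTS_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}

# CEA-861 pixel clocks (MHz) and the encodings the list considers for each mode pair
MODES = {
    "2160p50/p60 (VIC 96/97)": (594.0, ["4:4:4", "4:2:2", "4:2:0"]),
    "2160p25/p30 (VIC 94/95)": (297.0, ["4:4:4", "4:2:2"]),
}


def hdmi_data_gbps(pixel_clock_mhz: float, bpc: int, encoding: str) -> float:
    """Uncompressed video data rate in Gbit/s for a given encoding and bit depth."""
    return pixel_clock_mhz * bpc * COMPONENTS_PER_PIXEL[encoding] / 1000


for mode, (clock, encodings) in MODES.items():
    for enc in encodings:
        for bpc in (8, 10, 12):
            rate = hdmi_data_gbps(clock, bpc, enc)
            verdict = "fits" if rate <= HDMI20_DATA_GBPS else "exceeds"
            print(f"{mode} {enc} {bpc}-bit: {rate:.3f} Gbit/s ({verdict})")
```

In this model, only 8-bit 4:4:4 fits at 2160p60, while every 2160p30 4:4:4 variant, including 12-bit, stays under the limit.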

10-bit 60 Hz and 12-bit 30 Hz VESA RGB modes fit within the DisplayPort HBR2 maximum of 17.28 Gbit/s (4 lanes at 5.4 Gbit/s, with the 8b/10b coding overhead removed), but 12-bit 60 Hz requires either HBR3 or 4:2:2 chroma subsampling:

- VESA 3840x2160 @60Hz mode uses a custom 533.25 MHz pixel clock (based on the VESA CVT formula) and requires a data rate of 12.798, 15.998, or 19.197 Gbit/s for 8-, 10-, or 12-bit RGB 4:4:4 (24, 30, or 36 bits per pixel), while YCbCr 4:2:2 requires 8.532, 10.665, or 12.798 Gbit/s (2/3 of the RGB bandwidth).

- VESA 3840x2160 @30Hz mode uses a custom 262.75 MHz pixel clock, which requires a data rate of 6.306, 7.883, or 9.459 Gbit/s for 8-, 10-, or 12-bit RGB/YCbCr 4:4:4; for YCbCr 4:2:2, it's 4.204, 5.255, or 6.306 Gbit/s (2/3 of the RGB bandwidth).
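The two pixel clocks quoted above come from the detailed timing descriptors in the EDID dump (base block offset 54 and extension block offset 63). A sketch that decodes the first 8 bytes of each descriptor and derives the refresh rate and the 4:4:4 data rates; it assumes the standard EDID 18-byte descriptor layout (little-endian pixel clock in 10 kHz units, 12-bit active/blanking counts whose upper nibbles share a byte), and the function and constant names are hypothetical.

```python
def decode_dtd(d: bytes) -> dict:
    """Decode clock and active/blanking sizes from an EDID detailed timing descriptor."""
    clock_khz = int.from_bytes(d[0:2], "little") * 10  # stored in 10 kHz units
    h_active = d[2] | ((d[4] & 0xF0) << 4)  # upper 4 bits live in byte 4, high nibble
    h_blank = d[3] | ((d[4] & 0x0F) << 8)   # upper 4 bits live in byte 4, low nibble
    v_active = d[5] | ((d[7] & 0xF0) << 4)
    v_blank = d[6] | ((d[7] & 0x0F) << 8)
    total = (h_active + h_blank) * (v_active + v_blank)  # pixels per frame incl. blanking
    return {
        "pixel_clock_mhz": clock_khz / 1000,
        "resolution": (h_active, v_active),
        "refresh_hz": clock_khz * 1000 / total,
    }


# First 8 bytes of the two 3840x2160 descriptors in the EDID dump
DTD_60 = bytes.fromhex("4D D0 00 A0 F0 70 3E 80")  # base block, offset 54
DTD_30 = bytes.fromhex("A3 66 00 A0 F0 70 1F 80")  # extension block, offset 63

for dtd in (DTD_60, DTD_30):
    t = decode_dtd(dtd)
    # 24, 30, 36 bits per pixel = 8-, 10-, 12-bit RGB/YCbCr 4:4:4
    rates = [t["pixel_clock_mhz"] * bpp / 1000 for bpp in (24, 30, 36)]
    print(t["resolution"], f"{t['refresh_hz']:.3f} Hz", f"{t['pixel_clock_mhz']} MHz",
          "4:4:4 Gbit/s:", [f"{r:.4f}" for r in rates])
```

Both descriptors use 160 pixels of horizontal blanking (reduced blanking), giving about 59.997 Hz at 533.25 MHz and about 29.981 Hz at 262.75 MHz.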