Poor performance of copying data between the CPU memory and GPU memory

Hello,

I'm a researcher developing Particle-in-Cell simulations in plasma physics using OpenCL with AMD's GPUs. Particle-in-Cell is an iterative method (iterating through time), which means we've got a "for" loop in which all kernels are enqueued for every time step.

Part of the algorithm is solving the Poisson equation, but in our case this is too simple an operation to be worth running on the GPU, so it should be done on the CPU. Thus, every time step we need to copy a small amount of data (400 floats, 1.6 kB in total) from GPU memory to CPU memory and back using the clEnqueueReadBuffer() and clEnqueueWriteBuffer() functions. However, despite the small amount of data, the copies cause a massive overhead (over 90% of the program runtime), rendering the whole program unusable. Mapping the buffers performs somewhat better, but it is still very slow.

I'm developing on Windows, and having discussed this with my colleagues who work with Linux, it appears the overhead doesn't exist on Linux. Switching to Linux is not desired, however, because some development tools we're using are unavailable for Linux.

I'm using the AMD Radeon Pro WX 9100 GPU, running the newest enterprise drivers. Any idea what could be causing this massive overhead? Could it be a driver-related issue?

Thank you for the help!

Thank you for reporting this. Could you please provide a minimal test-case that reproduces the issue?

Thanks.

Certainly. I wrote up a quick example which demonstrates this issue. I'm attaching the host code; a simple kernel is contained within. Hopefully this is helpful!

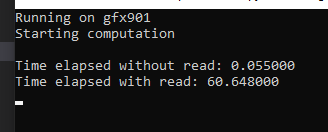

There are two loops: one with pure computation, and one with an extra read command to read some data from the GPU buffer. The code performs a simple vector addition on the GPU.

With the current setup, we're performing 1 million iterations for both loops, and each vector has 10 million elements. We're copying 400 floats from the GPU, which is 1.6 kB of data.

The first loop takes about 50 milliseconds to finish, while the second loop (with the extra read command) takes about 60 seconds to complete, making it over 1000x slower. Is this expected behavior?

Hardware setup:

CPU: Intel Core i7-9800X

RAM: 32GB DDR4-2666 memory

GPU: AMD Radeon Pro WX 9100, running with the latest enterprise driver

OS: Windows 10 Pro 1809

Thank you.
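For scale, the timings reported above can be turned into an average cost per iteration. The following is a minimal sketch in plain C, using the approximate figures from this post (about 50 ms and about 60 s for 1 million iterations each); the helper names `per_iteration_us` and `slowdown` are illustrative and not part of the attached test case.

```c
#include <stddef.h>

/* Average wall-clock cost of one loop iteration, in microseconds. */
static double per_iteration_us(double total_seconds, size_t iterations) {
    return total_seconds * 1e6 / (double)iterations;
}

/* Ratio of the slower loop's runtime to the faster loop's runtime. */
static double slowdown(double slow_seconds, double fast_seconds) {
    return slow_seconds / fast_seconds;
}
```

Calling `per_iteration_us(60.0, 1000000)` gives roughly 60 microseconds per iteration for the loop with the read, against roughly 0.05 microseconds for `per_iteration_us(0.05, 1000000)`, and `slowdown(60.0, 0.05)` gives a factor of about 1200. These are averages over the whole loop, so they only indicate the order of magnitude of the per-read cost.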

Thank you for providing the reproducible test-case. We will look into this and get back to you soon.

I suspect that the memory pinning cost of transferring the data from the GPU to the host is the main reason for this poor performance. Each clEnqueueReadBuffer call inside the loop needs to perform pinning, and that takes time. As the "AMD OpenCL Programming Optimization Guide" says:

1.3.1.1 Unpinned Host Memory

This regular CPU memory can be accessed by the CPU at full memory bandwidth; however, it is not directly accessible by the GPU. For the GPU to transfer host memory to device memory (for example, as a parameter to clEnqueueReadBuffer or clEnqueueWriteBuffer), it first must be pinned (see section 1.3.1.2). Pinning takes time, so avoid incurring pinning costs where CPU overhead must be avoided.

When unpinned host memory is copied to device memory, the OpenCL runtime uses the following transfer methods.

• <=32 kB: For transfers from the host to device, the data is copied by the CPU to a runtime pinned host memory buffer, and the DMA engine transfers the data to device memory. The opposite is done for transfers from the device to the host.

To avoid the pinning cost, use pre-pinned host memory for the data transfer. Here are a couple of ways to achieve this:

Option 1 - clEnqueueReadBuffer()

deviceBuffer = clCreateBuffer()

pinnedBuffer = clCreateBuffer(CL_MEM_ALLOC_HOST_PTR or CL_MEM_USE_HOST_PTR, COPY_SIZE)

void* pinnedMemory = clEnqueueMapBuffer( pinnedBuffer )

for (int i = 0; i < N; i++) {

clEnqueueReadBuffer( deviceBuffer, pinnedMemory )

Application reads pinnedMemory.

...

}

clEnqueueUnmapMemObject( pinnedBuffer, pinnedMemory )

Option 2 - clEnqueueCopyBuffer() on a pre-pinned host buffer

deviceBuffer = clCreateBuffer()

pinnedBuffer = clCreateBuffer(CL_MEM_ALLOC_HOST_PTR or CL_MEM_USE_HOST_PTR, COPY_SIZE)

for (int i = 0; i < N; i++) {

clEnqueueCopyBuffer( deviceBuffer, pinnedBuffer )

void *memory = clEnqueueMapBuffer( pinnedBuffer )

Application reads memory.

clEnqueueUnmapMemObject( pinnedBuffer, memory )

}

For more details, please see the section "Application Scenarios and Recommended OpenCL Paths" in the "AMD OpenCL Programming Optimization Guide".

Thanks.
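As a quick check of which transfer class from the quoted guide excerpt applies here, the size of the per-step copy can be compared against the "<=32 kB" limit. This is a minimal sketch in plain C; the names `STAGING_LIMIT_BYTES`, `transfer_bytes` and `uses_staged_copy` are illustrative, and reading "32 kB" as 32 * 1024 bytes is an assumption, since the excerpt does not spell out the exact byte count.

```c
#include <stdbool.h>
#include <stddef.h>

/* Assumption: "32 kB" in the quoted guide excerpt means 32 * 1024 bytes. */
#define STAGING_LIMIT_BYTES ((size_t)32 * 1024)

/* Size in bytes of a transfer of `count` single-precision floats. */
static size_t transfer_bytes(size_t count) {
    return count * sizeof(float);
}

/* True when an unpinned transfer of this size falls in the "<=32 kB"
 * class, where the CPU copies through a runtime pinned staging buffer. */
static bool uses_staged_copy(size_t bytes) {
    return bytes <= STAGING_LIMIT_BYTES;
}
```

With 4-byte floats, `transfer_bytes(400)` is 1600 bytes, so `uses_staged_copy(transfer_bytes(400))` is true: the 400-float copy discussed in this thread is well inside the smallest transfer class.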

Yes, this is working! Now I'm getting 0.7 seconds on the loop which involves the repeated reading operation, which is very manageable. Thank you very much, I missed the topic of the pinned memory entirely when analyzing this earlier.

There is one issue I'm observing: test_buffer gets filled with 0s instead of the actual values. However, this is most likely an unrelated issue, so I'm marking your answer as correct.

Thank you again!