Optimizing data transfer with APU (best way to test zero-copy?)

I finally have my APU test system (I paid for it!):

- CPU: AMD Ryzen 5 2400G
- MB: ASRock X470 Fatal1ty Gaming mini-ITX
- RAM: G.Skill DDR4-3200 CL14, 2x16 GB
- OS: Windows 10 Pro
- IDE and compiler: Visual Studio 2017 Community

Basic benchmark:

https://imgur.com/a/i9k9Xvm

As it turns out, the exact same OpenCL code runs *slower* on the APU than on an RX 480 (HD 7950 not tested).

Here is my setup; I would appreciate any ideas on how to find the bottleneck.

The operation:

- From the host I created an array `A` of 200e6 single-precision floats. Two more containers, `B` and `C`, of the same size are also created on the host.

- Three cl_mem buffers are created with the `CL_MEM_USE_HOST_PTR` flag, using pointers to the three containers above: `d_A` with `CL_MEM_READ_ONLY`, and `d_B` and `d_C` with `CL_MEM_WRITE_ONLY`.

- One additional cl_mem, `d_temp`, is created as temporary storage without the host-pointer flag. It uses `CL_MEM_READ_WRITE`.

- No mapping is done at all, since all operations are carried out by the GPU alone. (Is this even correct? It seems to contradict many use cases of `USE_HOST_PTR`.)

- Two kernels are run:

  - Kernel 1 is a scaling operation: `d_temp = k*d_A`.

  - Kernel 2 reads `d_temp` and creates the outputs `d_B = d_temp*cos(global_id*k)` and `d_C = d_temp*sin(global_id*k)`.

- When the operations finish, the buffers are freed on the GPU.

With the above, the RX 480 took around 0.40 s, but the APU took up to 0.62 s. I was expecting the APU to be faster, since the RX 480 is limited by the PCI-E bus.

(The total transfer is 800 MB host-to-device and 1600 MB device-to-host. Should I really expect a shorter time from the RX 480?)
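The question above can be answered roughly with arithmetic: a lower bound on bus time is the bytes moved divided by the sustained bandwidth. This sketch assumes the transfers are serialized and that `d_temp` never crosses the bus on the discrete card. The 12 GB/s used in the usage note is an assumed effective rate for PCI-E 3.0 x16 (the theoretical figure is about 15.75 GB/s per direction), not a measurement from this thread.

```c
#include <assert.h>

#define N_ELEMS         200000000.0  /* 200e6 floats, as in the post */
#define BYTES_PER_FLOAT 4.0

/* Bytes that must cross PCI-E on a discrete GPU:
   A goes host->device, B and C come back device->host.
   d_temp stays in VRAM, so it is not counted. */
double pcie_bytes_for_test(void)
{
    double one_buffer = N_ELEMS * BYTES_PER_FLOAT;  /* 800 MB */
    return one_buffer + 2.0 * one_buffer;           /* 2.4 GB */
}

/* Lower bound on time: bytes / bandwidth, with bw_gbps in GB/s (1e9 bytes/s) */
double transfer_seconds(double bytes, double bw_gbps)
{
    assert(bw_gbps > 0.0);
    return bytes / (bw_gbps * 1e9);
}
```

`transfer_seconds(pcie_bytes_for_test(), 12.0)` gives 0.2 s, so under that assumption about half of the 0.40 s measured on the RX 480 would be spent on the bus alone.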

I suspect I haven't done something needed to enable zero-copy, although I did make sure the 4 KB alignment and 64 KB buffer size requirements were met.
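One way to rule out the layout part is to allocate the host arrays so both conditions hold by construction, and to check them before calling `clCreateBuffer`. This sketch reads the post's requirements as "pointer aligned to 4 KB, size a multiple of 64 KB" and uses C11 `aligned_alloc`; the constants and helper names are hypothetical. Meeting the layout does not by itself prove that the runtime chose a zero-copy path. Note that Visual Studio 2017 does not provide `aligned_alloc`; `_aligned_malloc`/`_aligned_free` are the MSVC equivalents.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define ZC_ALIGN   4096u            /* 4 KB pointer alignment from the post */
#define ZC_GRANULE (64u * 1024u)    /* 64 KB size granularity from the post */

/* Round a byte count up to the next multiple of ZC_GRANULE */
size_t round_up_to_granule(size_t bytes)
{
    return (bytes + ZC_GRANULE - 1) / ZC_GRANULE * ZC_GRANULE;
}

/* Allocate a host buffer that satisfies both conditions.
   C11 aligned_alloc requires size to be a multiple of the alignment,
   which any multiple of 64 KB is. Release it with free(). */
void *alloc_zero_copy_candidate(size_t bytes, size_t *out_size)
{
    assert(ZC_GRANULE % ZC_ALIGN == 0);
    size_t size = round_up_to_granule(bytes);
    if (out_size != NULL)
        *out_size = size;
    return aligned_alloc(ZC_ALIGN, size);
}

/* 1 if the pointer/size pair meets both conditions, else 0 */
int meets_zero_copy_layout(const void *p, size_t size)
{
    return ((uintptr_t)p % ZC_ALIGN == 0) && (size % ZC_GRANULE == 0);
}
```

The returned pointer would then be passed as `host_ptr` to `clCreateBuffer` together with `CL_MEM_USE_HOST_PTR`, using the rounded size.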

Another guess: although I have now removed the PCI-E bus limit, with the APU I am limited by RAM bandwidth, which is at most 40 GB/s. Still, I expected the time to be shorter.

Your comments are appreciated. If I wasn't clear somewhere and you don't mind looking at the code, let me know and I will gladly share it.
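The RAM-bandwidth guess can be checked the same way. On the APU every buffer, including `d_temp`, lives in system RAM, so the DRAM traffic is larger than the PCI-E traffic on the discrete card. This sketch assumes each element is read or written exactly once (no cache reuse) and takes the 40 GB/s quoted above as the sustained rate; the helper names are hypothetical.

```c
#include <assert.h>

#define ONE_BUFFER_BYTES (200000000.0 * 4.0)  /* 200e6 floats = 800 MB */

/* DRAM traffic for the two kernels when all buffers are in system RAM */
double apu_dram_bytes(void)
{
    double k1 = ONE_BUFFER_BYTES          /* kernel 1 reads d_A     */
              + ONE_BUFFER_BYTES;         /* kernel 1 writes d_temp */
    double k2 = ONE_BUFFER_BYTES          /* kernel 2 reads d_temp  */
              + 2.0 * ONE_BUFFER_BYTES;   /* kernel 2 writes d_B, d_C */
    return k1 + k2;                       /* 4.0e9 bytes in total   */
}

/* Lower bound on time: bytes / bandwidth, with bw_gbps in GB/s (1e9 bytes/s) */
double min_seconds(double bytes, double bw_gbps)
{
    assert(bw_gbps > 0.0);
    return bytes / (bw_gbps * 1e9);
}
```

This gives 4.0 GB and about 0.1 s, well under the 0.62 s measured, which suggests raw bandwidth alone does not explain the APU time. Under the same model, fusing the two kernels so that `d_temp` is never stored would cut the traffic to 2.4 GB.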

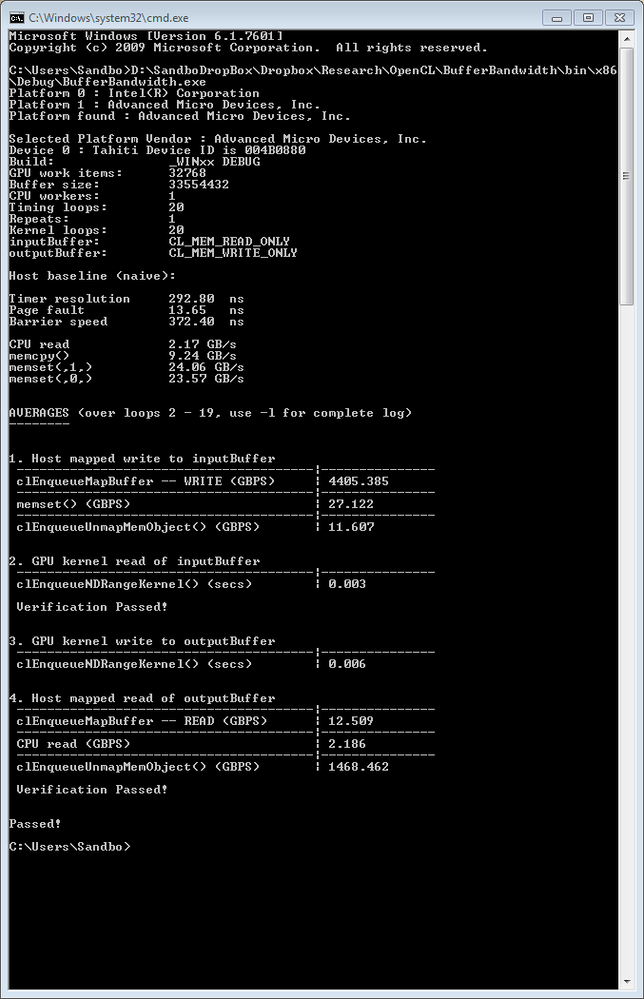

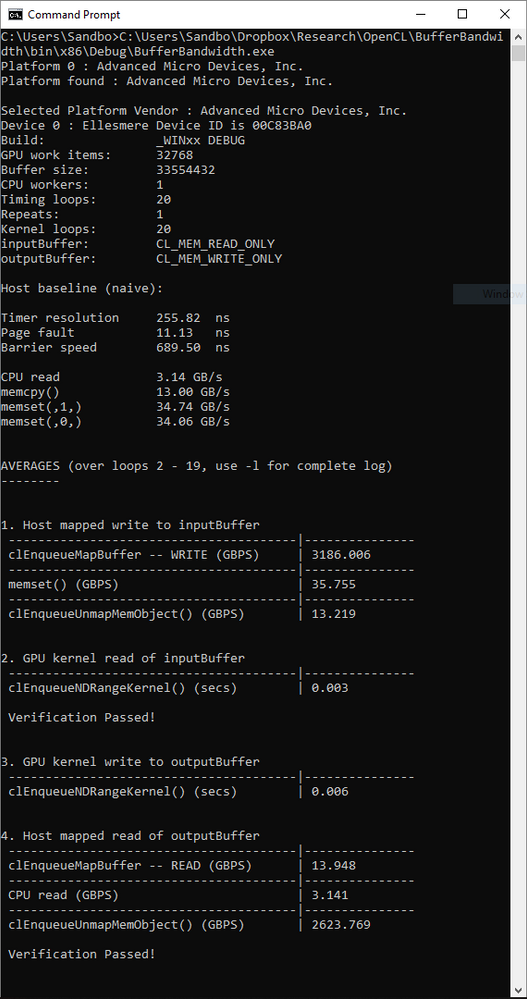

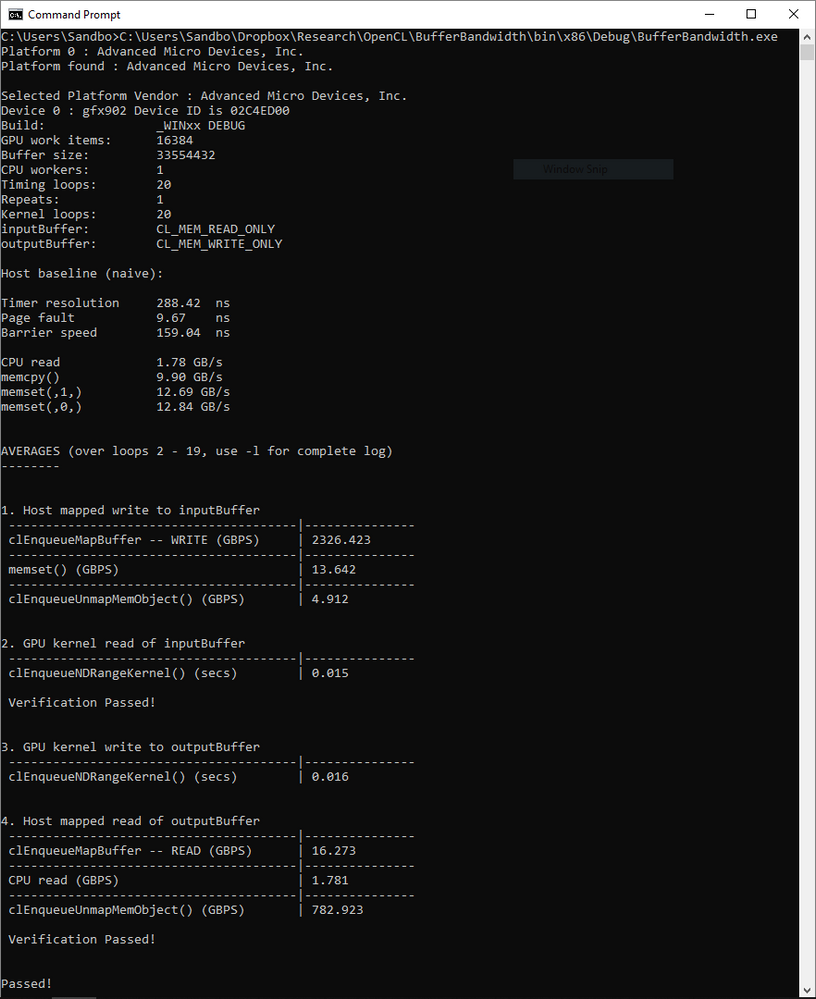

Using the BufferBandwidth sample that comes with AMD SDK 3.0, I found that host-to-device writes are excessively slow on the APU compared with the two discrete GPU platforms. Platforms tested:

- AMD HD 7950 @ PCI-E 3.0 x16
- RX 480 @ PCI-E 3.0 x16
- Vega 11 @ Ryzen 5 2400G (only 4.912 GB/s for unmapping)

Judging from the memset values alone, CPU/host performance seems better on the discrete GPU platforms than on the APU. If a zero-copy buffer is used as the input buffer (host to device), I think the map/unmap values should improve on the APU. Please run the sample with the argument "-if 3" (i.e. CL_MEM_USE_HOST_PTR) and check. Use the argument "-h" for more options.

Regards,

Thanks, dipak. Indeed, after I later rewrote the APU version with zero-copy code, the APU took significantly less time with the exact same code and test data: APU (0.25 s) vs dGPU (0.4 s).

I am not sure about the memory transfer, though: the APU system is brand new, with DDR4-3200 CL14 2x16 GB, which is much faster than the other two.

The RX 480 platform has an i7-6700K with DDR4-3000 CL15 2x8 GB, and the 7950 one should have DDR3-1600 CL7 4x8 GB. That part alone puzzles me. Could it be because AMD systems have slightly worse memory performance? (Sorry if that sounds offensive, this being an AMD forum, lol.)

Maybe if this is solved I will see a further improvement.
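The "faster RAM" comparison can be made concrete with the usual peak-bandwidth and CAS-latency formulas. This sketch assumes all three systems run dual-channel (standard for AM4 and for the i7-6700K platform; the channel count of the HD 7950 system is not stated, so dual-channel is an assumption there). These are theoretical peaks and say nothing about how much of that bandwidth the integrated GPU actually reaches; the function names are hypothetical.

```c
#include <assert.h>

/* Theoretical peak DRAM bandwidth in GB/s:
   transfers per second x 8 bytes per 64-bit channel x number of channels */
double dram_peak_gbps(double mega_transfers, int channels)
{
    assert(mega_transfers > 0.0 && channels > 0);
    return mega_transfers * 1e6 * 8.0 * channels / 1e9;
}

/* First-word CAS latency in ns: CL cycles at the I/O clock,
   which runs at half the MT/s rate (DDR), so ns = CL * 2000 / MT/s */
double cas_latency_ns(int cl, double mega_transfers)
{
    assert(mega_transfers > 0.0);
    return cl * 2000.0 / mega_transfers;
}
```

With these formulas DDR4-3200 gives 51.2 GB/s peak versus 48.0 GB/s for DDR4-3000 and 25.6 GB/s for dual-channel DDR3-1600, while CAS latency is 8.75 ns, 10 ns and 8.75 ns respectively, so on paper the APU system's memory is not the weaker one.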

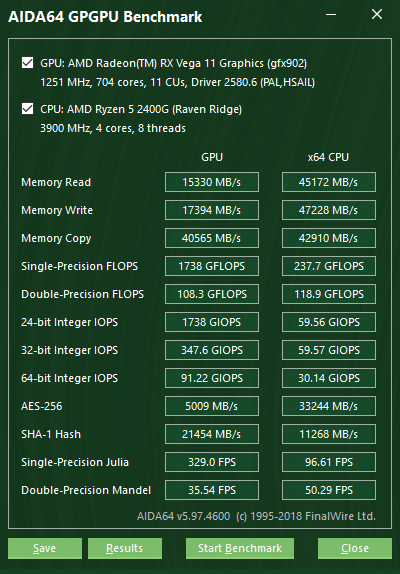

If I may ask one more question: what makes memory read/write slow in the AIDA64 GPGPU test?

Their website (GPGPU Benchmark | AIDA64) says the read/write figures measure how fast host-device transfers are, which, as I understand it, should be the same as copy for APUs.

I don't know how the benchmark program measures read/write bandwidth. The exact numbers may vary depending on multiple factors such as buffer type, buffer size, and transfer method.

To experiment, you can run the BufferBandwidth sample with the command-line option "-ty" to select a different transfer method. For example, "-ty 3" reports bandwidth for clEnqueue[Read/Write] with pre-pinned memory.

Could it be a busy-wait or spin-wait loop that accesses memory (atomically?) and steals memory bandwidth from the integrated GPU? How demanding is a spin-wait on RAM while the GPU is also copying data from/to RAM?

I assume the benchmark uses profiling commands and, to increase measurement resolution, spin-wait style synchronization.