- AMD Community

- Communities

- Developers

- OpenCL

- Re: Memory bandwidth anomaly

OpenCL

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Memory bandwidth anomaly

Hi,

Recently I did some tests about GDDR5 memory bandwidth.

In a few words:

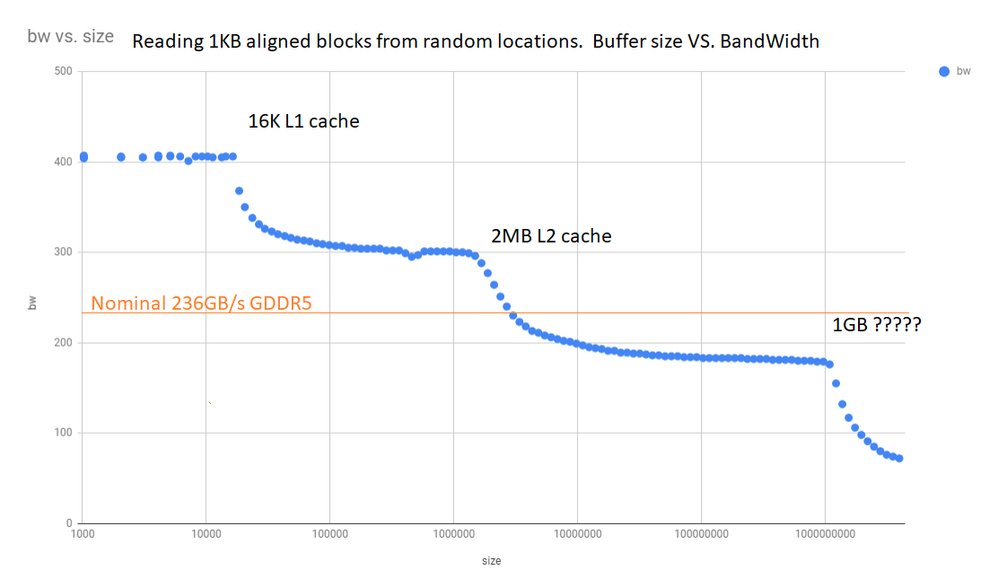

It launches numberOfCUes*4 waveFronts. Each WF is reading 1024 bytes from a random aligned location in a large buffer.

The whole kernel reads 100GB total. The ideal bandwidth is 1.850GHz*256bit/8*4=236.8GBps

I'm varying the buffer size from 1KB to 4GB and got the following results:

You can easily spot to 2 cache levels on it. But I've found a third something above 1GB.

Anyone has an explanation what is that?

I have 8GB and I only tested this up until 4GB, but it seems like if I use the whole memory, the bandwidth will settle at 1/3 of the nominal bandwidth.

This behavior with PC memory would be unacceptable for sure. But what's the case with graphics memory?

Thank You for the answers!

Solved! Go to Solution.

- Labels:

-

OCL Performance and Benchmark

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi there!

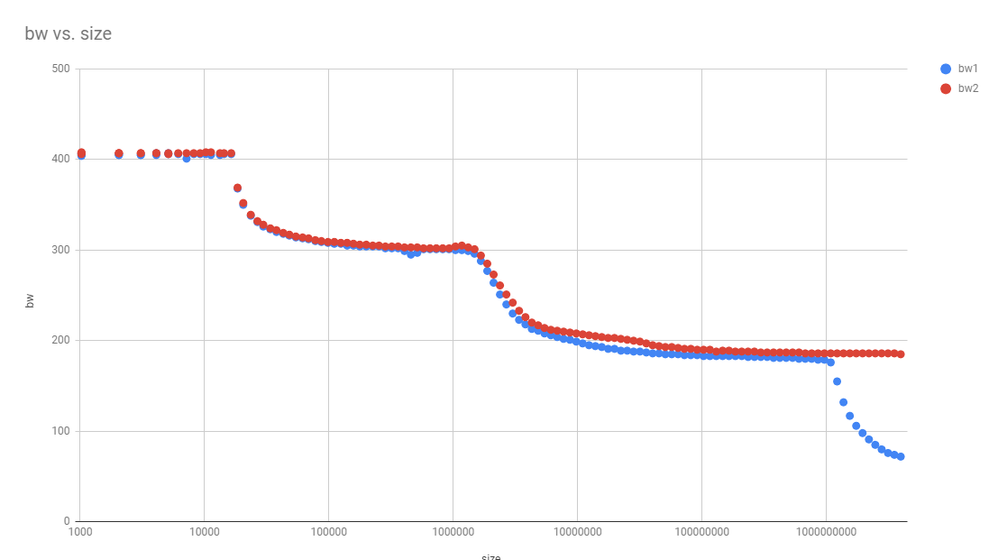

Just to venture a guess, could it be the TLB thing?

So, the default page size is 4K (I think), and if you read memory at random, you'll be hitting different pages a lot. The page will either be in the TLB or not; if not, it will incur a significant slowdown as it'll have to update the TLB, and that's a very slow operation on the CPUs as well. I think this has been an issue in the mining community a while ago, then AMD shipped so-called blockchain drivers that increased the page size to 64K (again, I think...), and everything started to work fine for them after that. As far as I know, there are two TLB levels, so there should or could be another step in your bandwidth graph somewhere.

For instance, see this release, and look for the large page info -> https://support.amd.com/en-us/kb-articles/Pages/AMDGPU-Pro-Driver-17.40-for-Linux.aspx

If you're on Windows, the Crimson or Adrenaline drivers should provide a switch between the graphics and compute workloads. For example, see this page -> https://support.amd.com/en-us/kb-articles/Pages/DH-024.aspx I assume the compute workloads mean large pages, though I can't be sure.

I'm sorry if the cause of your problem is different and/or if I'm wrong, as I don't want to give you bad advice, but I think running your test with large pages is worth a shot.

Finally, would you mind sharing the model of your card and your memory benchmark kernel?

Best,

sp314

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi there!

Just to venture a guess, could it be the TLB thing?

So, the default page size is 4K (I think), and if you read memory at random, you'll be hitting different pages a lot. The page will either be in the TLB or not; if not, it will incur a significant slowdown as it'll have to update the TLB, and that's a very slow operation on the CPUs as well. I think this has been an issue in the mining community a while ago, then AMD shipped so-called blockchain drivers that increased the page size to 64K (again, I think...), and everything started to work fine for them after that. As far as I know, there are two TLB levels, so there should or could be another step in your bandwidth graph somewhere.

For instance, see this release, and look for the large page info -> https://support.amd.com/en-us/kb-articles/Pages/AMDGPU-Pro-Driver-17.40-for-Linux.aspx

If you're on Windows, the Crimson or Adrenaline drivers should provide a switch between the graphics and compute workloads. For example, see this page -> https://support.amd.com/en-us/kb-articles/Pages/DH-024.aspx I assume the compute workloads mean large pages, though I can't be sure.

I'm sorry if the cause of your problem is different and/or if I'm wrong, as I don't want to give you bad advice, but I think running your test with large pages is worth a shot.

Finally, would you mind sharing the model of your card and your memory benchmark kernel?

Best,

sp314

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's it, Thank You very much!

I'm using the RX470 on Win10 64, ver 18.9.2

And here's the small kernel: 4 dword nicely aligned reads per stream, and a little math in a loop.

uint nextRandom(uint a){ return (int)a * (int)0x8088405 + 1; }

__attribute__((reqd_work_group_size(64, 1, 1)))

__kernel void kernel1(uint loopCnt, uint bufCnt, __global uint* buf){

uint gid = get_global_id(0);

uint lid = gid & 0x3f;

uint seed = gid>>6;

uint sum = 0;

uint blockCnt = bufCnt/256;

for(uint iter=0; iter<loopCnt; iter++){

seed = nextRandom(seed);

uint addr = (((ulong)seed*blockCnt)>>32)*256;

for(uint i=0; i<256; i+=64)

sum += buf[addr+i+lid];

}

buf[gid] = sum;

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, cool, I'm glad it worked for you. Though, one minor thing. May I suggest that you use uint seed = (gid >> 6) + 1; instead of uint seed = gid >> 6; ?

So, your PRNG is x = 0x808stuff * x + 1. With seed = gid >> 6, wave 0 will start with seed 0, and produce the sequence of pseudo-random numbers 1,a,b,c,d... in the main loop. Wave 1 will start with seed 1, and produce the sequence a,b,c,d... Essentially, wave 0 is using the same sequence, just trailing behind wave 1. So it could be that wave 1 is pulling the data into the caches, and wave 0 is reusing that data from the caches instead of hitting the memory.

Now, I don't know if this is happening because the waves could quickly diverge due to the timing and what not, and in any case the effect is minor, but I thought that since I've spotted this thing, I should mention it, just in case.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Indeed, it's a good point!

Not in this current test with 8192 streams, but in general one should be cautious with this simple RNG ![]()

(This is how security risks born, haha)