Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Re: GCN ISA Assembler

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

GCN ISA Assembler

Hi All

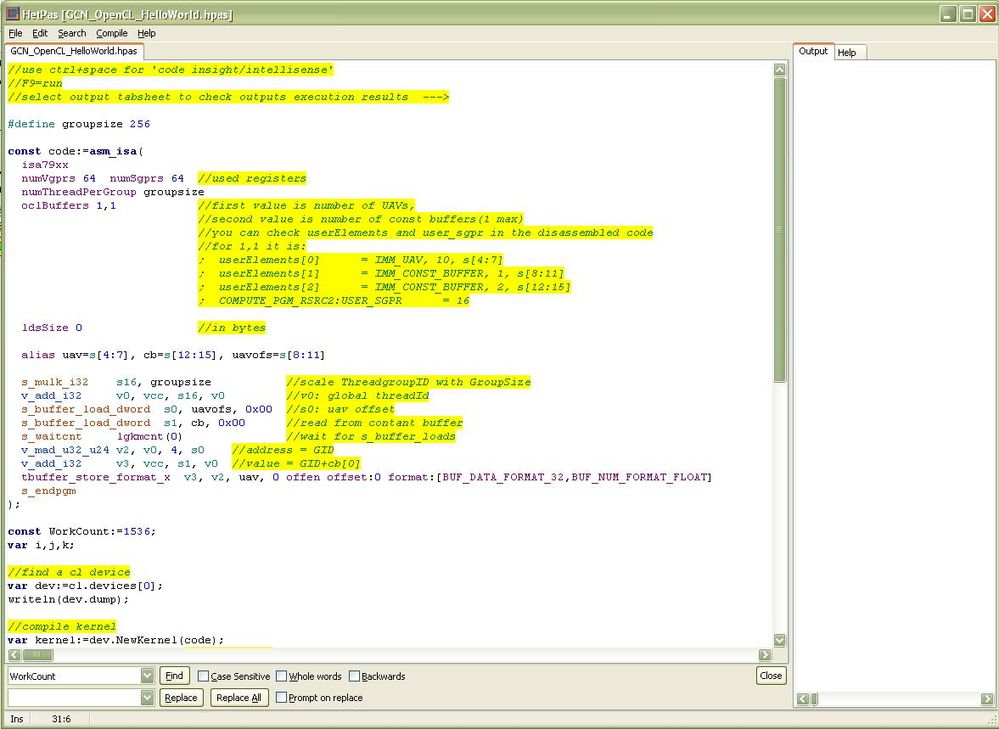

I'm proudly presenting the first preview release of my GCN ISA Assembler / AMD_IL errorchecker / scripter IDE featuring syntax_highlighting and code_insight (ctrl+space) for fast assembly development.

Here you can download it and get more info -> http://realhet.wordpress.com/2012/11/14/hello-world/

Note that this is a spare time project, full of bugs, don't plan to do anything serious with it, and use it on your own risk only!

Hardware I was able to test it on already: HD4850, HD5770, HD6970:CAL + AMD_IL; HD7970:CAL,OpenCL + GCN_ISA, I can only hope it works on other devices too.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey realhet,

that is really cool! I'll definitely play around with that over the weekend!

This can finally be a response to the missing mul24_hi in OpenCL. Do you already have some examples of how much faster some problem runs in an ISA implementation compared to OpenCL (e.g. your Mandelbrot)?

I think I could make good use of the HW carry and interleaved s_mul_u32.

What would be the best approach to move from an OpenCL implementation to ISA? Take the compiled result of my kernels and start optimizing? Or is it possible to mix OpenCL code with just a few ISA-ASM functions?

Exciting !!!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I really hope that it will work on your system too, not just here ![]()

>missing mul24_hi

Also you can inline codes that my assembler doesn't knows with DD (define dword) instruction.

>Examples on ISA vs. OpenCL

No, I haven't. But I can try to port that mandel demo to OpenCL.

I think there is a way to force OpenCL to compile to that 4 instruction main loop:

v_mul_f32 v0, X, X

v_mad_f32 Y2, X, Y, halfCy mul:2 ; yn=2xy+Cy

v_mad_f32 X, Y, Y, -v0

v_add_f32 X, -X, Cx ; xn=x^2-y^2+Cx

The only extra things here are the mul:2 output modifier and the neg() input modifiers. But those are exists on any hardware, so I think Ocl use it. (Unfortunately I can't try it now, I gotta wait for a few weeks until I have hd7xxx access again)

But I'm kinda lame in OpenCL, It would be no representative test if I try to optimize it haha.

>interleaved s_mul_u32

Actually I've found out that you don't have to strictly interleave one by one. For example the instruction scheduler can handle situations like 8 v instructions followed by 4 s instructions. The key is the long term ratio between V and S instruction dwords (2dword instructions eats more). The more S you use, the more threads it will need and then you have to lower register usage down to 84 or even 64 to get full V alu utilization.

>OpenCL -> ISA

Well I really don't know... I've came from OpenGL's fragment shader a long time ago, then I've found early OpenCL on HD4850, it was kinda unoptimal in those beta times. I saw what AMD_IL code it makes and I decided to write the AMD_IL code myself. Then finally GCN came out a year ago, and I decided to fall one more level deeper ![]()

>OpenCL with few ISA.

That's quiet impossible. You know, even we could mark a special part in OCL source and then patch it later with ISA, then how would we know what register is what variable and stuff. Not mention that opencl unrolls a lot -> duplicating repetitive code -> and that code will be optimized globally by the AMD_IL compiler.

Although thinking in GCN ISA is fun IMO: For example whenever you do an IF, you have to think in 64bit SRegs and bitwise operations. I'ts like an x86 with 2048bit SSE that comes with very flexible memory read/write instructions ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Congratulations realhet! ![]()

I thought you were probably working on something like this but I was really surprised to see the integrated development environment, which looks very nice.

I've been developing a somewhat different set of tools for working with GCN. It uses an ANCII C compiler ported to GCN and separate full assembler so that the entire GCN/hardware environment can be exposed at the C level. Something OpenCL is unlikely to ever to do. Users work from an ordinary source file with sections for Opencl C, ANSII C, GCN, and AMDIL. Later, I'll try to post some examples somewhere.

I'm not sure I'm as brave as you to open it for public use, but I maybe I'll try. Like you said, there will always be a lot of bugs first time out, but I think most people around here understand that. Congrats again and good luck.

BTW I tried to compile one of your examples but it was unable to open the a temporary file (precompile_out or something like that) in the C root dir. Do I need to do anything special? Could it be a windows permission/path thing?

drallan

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thx for positive vibes!

Sorry, I forgot to say that it writes some temp files in the C:\ dir (I have UAC disabled cause I still use XP mainly lol). Also if you feed it an OpenCL source (with NewKernel()) it will redirect it's temp files into the C:\ .

It's really cool that you also made a solution that you can use all the languages (host and gpu) from a single file ![]() I'm looking forward to see your examples.

I'm looking forward to see your examples.

And the reason I've published this is that I've reached to the end of a job, and I have no more fear that someone will beat me at GCN asm on that particular field. My actual project is my own realtime video decoding/processing/VJing and stuff, so everyone feel free to beat me in that, I don't care and up for the challenge. ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I've tested HetPas a bit. On my machine with an HD5770 it works well (of course except the GCN part 🙂 ).

On my box with an HD7850, it crashes during startup. As hetpas is stripped code, I could not find out anything meaningful about why it dies (OK, it dies in a push, so obviously the stack pointer is bad, but I could not see when that happened).

I'm afraid it can be because I have to access this machine with remote desktop. Maybe I'll try teamviewer or something ... It'll be a while until I can get to the console. Normally, OpenCL applications have no problem with remote desktop on AMD (unlike nvidia).

If you could create a version of hetpas that is not optimized & stripped that much, then maybe I could find out what's going wrong. Or if you still happen to have the debug symbols of the build on your website ...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi and thx for checking it!

As you requested, I've tried to attach debug infos, and a stackTracer, but oddly Delphi's linker threw an internal error. Maybe I'm using generics too excessively or something. (I never included debug info before because I usually tested it inside the IDE and that needs only dcu files). The problematic part can be somewhere in 50k lines ![]()

So the best I can do is to put a detailed function/line map file near the exe file so at least I can investigate the problems location from the exception's address. (Please redownload the zip from the website before you try it again)

Remote desktop: I've tried it with VNC Viewer only. But thats really weird why it throws an error with the most official remote assistance software. Note that, when the IDE starts it does nothing GPU related at all, only static linking cal's and opencl's dlls.

HD7850: That's a big question mark for me, because the only hardware I was able to test was Tahiti, so there's a chance that my current attempt to inject ISA into OpenCL's elf will fail on the smaller GCN chips. In a few weeks I'll have access to a HD7750, and I'll check. Hopefully the only difference will be the chip target id's, because the chips only differs in no. of CUes and DoublePrec units/CUes (as I think).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hmm, not sure if that is correct, as I did some address magic in order to get from the runtime addresses to the addresses in the map file.

Do you have something called SelfTest, which can be related to a line number 2844 (het.Objects.pas)? Is there anything that could cause an access violation?

In the machine code above the exception, I see a "call 0058D8E4", which I translated to het.Objects.TSelfTest.SetName.

Does that help somehow? BTW, the problem does not depend on RDP, it hapens the same way when running the UI directly.

I the followed the program by single-stepping. The call chain of the abort is as follows:

System.InitExe

System.StartExe

System.InitUnits

in InitUnits there is a loop that appears to initialize static objects. The initialization of object 0x68 throws the exception. The call address was 0x006401D0, (translated 23F1D0) which is not in your map.

So it appears something very basic is missing on this machine. Do you require any frameworks/tools/addons/engines/whatever?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thx again for testing! ![]()

Finally I've tracked down that debuginfo problem. (There was a WindowPlacement properti I saved to the ini file, I did it with a class_helper, and when I made it published in the mainform, then the linker dropped that internal error. Sad that class_helpers can't contribute to the Runtime Type Information, on which my script lang is pretty much based on.)

I've uploaded the new exe, and it became 7MB bigger, so there is working debuginfo in it.

Also made a change: When the selftest fails it will ask you If you want to continue anyways(bad choice), or just check the exception information and exit.

This error you've discovered in your machine is very weird. It's in the heart of the system, so if that test fails, then all other thing could fail also (like the cl/decive/kernel/buffer object hierarchy). This is my own oop framework which does automatic obejct lifetime management, also automatically casts notifications of object/property changes.

"Do you require any frameworks/tools/addons/engines/whatever?"

Not at all, it only needs an XP environment and the cal,cl dll's from the Catalyst driver.

I can only think that one of those uncommon things are blocked on your system:

- It sometimes writes some temp files into the 'C:\' (for example the source file after macro precompilation)

- It uses WriteProcessMemory to be able to notify about property changes. (Replaces empty property.setter functions with custom code) Also there are some Variant related patches like case insensitive = operator for strings. <- Maybe your system hates self-modifying code.

The TSelfTest.SetName() function is an example of this:

In the code is just an empty function:

procedure TSelfTest.SetName(const Value: ansistring);begin end;

And in the executable it is patched automatically to became this:

procedure TSelfTest.SetName(const Value: ansistring);

begin

if FName<>Value then begin

FName:=Value;

Notification(FieldIdentifier);

end;

end;

So after patch, in your debugger you can see a jmp instruction instead of an empty function.

Can you pls specify the system you tried to run it on?

(All I know that It runs on: Intel core2+winXP-32, AMD Athlon2+win7-64, Intel core(1)+win7-64, I'll ask more friends to try and hopefully we can reproduce the error)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Self-modifying code, evil-evil! I did not know that there is still any OS out there allowing for that, but as you have a list of platforms where it works, there may be options to configure that.

With the new binary, I get the SelfTest failed popup. Continuing brings up the UI, with an empty, grey left side. Anyway I can load any of the examples into it. Compiling adds "Compiling OK (0.001 sec)" to the status line. Trying to run the code locks up HetPas, and strange enough, also all other GPU computing applications (I was running a few trial-factoring programs).

My System is a Xeon X5650 (hex-core) 2.66GHz, hyperthr. enabled, 6GB, HD7850, W7SP1-64, UAC disabled, DEP enabled.

OK, as DEP (Data Execution Prevention) almost sounds like prohibiting self-modifying code, I disabled it, rebooted, and voila:

elapsed:0.0940783619880676 for GCN_OpenCL_mandel

elapsed: 5.347 ms

Cycles (including latency): 600 for GCN_OpenCL_latency_test

elapsed:0.000502757262438536 for GCN_OpenCL_Fibonacci_recursive

Really cool! Now I have something to play with ... and you can document that HetPas does not work with DEP enabled ![]() .

.

I'll try to find some more time soon to test my own kernels. Is HetPas creating binary kernel files that can be used by OpenCL's clCreateProgramWithBinary to load it into "normal" OpenCL programs? My ideal workflow (given that AMD does not want to support GCN-ASM) would be to write/use my normal OpenCL kernels, let it compile, try to optimize the resulting ASM, and finally use the optimized binary kernel ... some day.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm happy that this had been solved. I thought it was something extra-weird bug in my framework.

On Windows you can read/write in another process's space too, that's evil haha. But this DEP feature can be very useful for web servers against code injection I guess.

So It works on 7850, It's great ![]() Maybe I can assume that every GCN card will do.

Maybe I can assume that every GCN card will do.

>Is HetPas creating binary kernel files that can be used by OpenCL's clCreateProgramWithBinary to load it into "normal" OpenCL programs?

Of course! It generates OpenCL elf files with the help of the official OpenCL compiler. Your ISA code and the specified parameters (LDS size, VRegCount, etc.) are patched into the latest OpenCL elf image. So if there will be slight changes in the official elf, it will hopefully follow that.

Your 'ideal workfow' is kinda supported, I made the compiler to be 100% compatible with the AMD-Disassembler's output, though there are some instruction encodings that I did not implemented yet (images, some double-float ops,...).

For those unsupported instructions you can use then 'dd' command to inline literal code dwords. (dd 0x1234567, 0x7438278, ...)

Another restriction is at kernel parameters:

__kernel proc(__global *int a, __global *int b, __global *int c,__constant *int k){};

I support only this type of parameter config. You can use 0..n __global buffers and 0 or 1 constant buffers as the last parameter.

You must specify this with the oclBuffers [noOfGlobalBufs], [noOfConstBufs] command.

Some day I'll update the help-file to include GCN_ISA stuff. Until that Ctrl+Space is the biggest help while coding.

Anyways, good luck with it! ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi realhet,

I had a lot of trouble with my OpenCL code, so optimizing the GCN ISA was out of question for a while 😞

Getting on top of them, I'm coming back to hetpas. Do you have a version with more complete instruction set? Not that I'm as far as really missing some, and I already experimented with the DD instruction ... but I thought I ask ...

A more difficult issue is the kernel parameter list. My one typically looks like that:

__kernel void cl_barrett32_77_gs(__private uint exponent, const int96_t k_base,

const __global uint * restrict bit_array,

const uint bits_to_process, __local ushort *smem,

const int shiftcount, __private int192_t bb,

__global uint * restrict RES, const int bit_max64,

const uint shared_mem_allocated // only used to verify assumptions

#ifdef CHECKS_MODBASECASE

, __global uint * restrict modbasecase_debug

#endif

)

Of course I can rearrange the global* to the beginning. But do I need to put my const (and other) __private parameters into __constant memory? And even worse, I have this __local parameter where the host needs to define how big it is. Hmm, I could try and pass some -Dshared_size=xxx to the compiler and define the shared mem inside the kernel ... need to check.

Bdot

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello!

There are some new encodings:

I had to work with LDS, so there are:

ds_*_b32, ds_*_b64, ds_*x2_b32, ds_*x2_b64

I'm not sure about ds_atomics tho'.

But the bigger change was in the macro preprocessor, I implemented some NASM goodies:

#define dsValueCnt 36

#assign dsStride dsValueCnt*4

Macro definition with #macro and #end (C-style multiline #define still works)

#macro smemRead(dwaddr,value)

enter

s_temp smemAddr

s_mov_b32 smemAddr, dwaddr

s_lshl_b32 smemAddr, smemAddr, 2

s_add_i32 smemAddr, smemAddr, uavofs

s_buffer_load_dword value, uav, smemAddr

s_waitcnt lgkmcnt(0)

leave

#endm

New local register allocation:

v_temp_range 2..84 //first you can define a free range of registers of bot types

s_temp_range 8..11,14..104

then you can alloc:

s_temp x,y,z

s_temp retaddr[4] align 2 //this allocates an array, thet is aligned to 2 dwords and can be accessed with [constant index]

You can define variable scopes with enter/leave: 'leave' unallocates all the s_temps and v_temps you allocated after 'enter'. Watch out for #macro_parameter and temp name collisions, these are just macroes, not functions.

There is a repetitive macro too:

__for__(i:=0 to 30, Inner(CLen,i) ) expands to Inner(CLen,0) Inner(CLen,1) ... Inner(CLen,30)

Still there is 'alias' instruction: you can map aliases to registers with it.

alias uav=s[4:7], TID=v0, LTID=v1, GrpID=s12, uavofs=s13

v_mov_b32 LTID, v0

s_mul_i32 s1, GrpId, groupsize

...

My last project used 700 lines of code just for a 20 instruction inner loop. It would be quiet impossible without automatic register allocation. If I were chosen the way 'map registers manually', then I still be fighting with bugs probably ![]()

----------------------------------------------------------------------------------

Kernel parameters:

Right now it is in the form:

__global anything* you can have zero or many of this

followed bye __constant anything* you can have 0 to 1 of this.

oclBuffers 2,1 means 2 uavs and 1 const buffers.

This is this simple because inside the kernel, you have to access the buffers in your code too.

The 'oclBuffers 1,1' configuration is simple:

; userElements[0] = IMM_UAV, 10, s[4:7]

; userElements[1] = IMM_CONST_BUFFER, 1, s[8:11]

; userElements[2] = IMM_CONST_BUFFER, 2, s[12:15]

; COMPUTE_PGM_RSRC2:USER_SGPR = 16

uav:

read a qword from s[8:11] : 0 (s[8:11] is a resource const) this is the forst __global's base offset

read/write from res s[4:7] offseted with the base offset

cb:

simply read/write res s[12:15]

If you use 2 __globals, this will be changed a lot. You'll have to debug that how OpenCL does it.

More __globals are not a problem but you'll have to load an array of resource constants from a given res const first. If there is more than 3 IMM_ buffers then it will pack the uavs into an IMM_prt_buffer (or wathever).

Also if you play with parameters, then then USER_SGPR will change too (in this sgpr residet the ThreadGroupIndex at kernel start).

Kernel domain range:

Because the generated kernel doesn't use the 'domain parameter buffer' (this way it can have 1 uav and 1 CB without PRT_Buffers (indirect reads)) you have to calculate the thread indices manually:

There is a linear WorkGroupGroupId in COMPUTE_PGM_RSRC2:USER_SGPR (you can see in the disasm, mostly s12 or s16).

And there is always V0 which contains then ThreadId inside the WorkGroup.

__private: I don't know what is it. If these are expicit registers, then feel free to use any of the allocated regs. You can specify used regs for kernel with numVgprs 64 numSgprs 64. Vgprs64 is ideal, Vgprs84 is mediocre, Vgprs128 is ok, and at Vgprs128+ you really have to watch out for S and V instruction interleaving. Sgprs can be 105 at maximum, I didn't noticed any slowdown because of the S-regs.

__local: -> ldssize [bytes]

And yes, you have to put all the constants on a single constant buffer (trust me, It's easier to pack it on the HOST side than reverse engineer how OCL interleaves them with other domain/uav_base_offset/etc data ![]() ). On the kernel side individual constant parameters are loaded the same way as you pack them into a single buffer (with s_buffer_loads), but on my side, my prog just can't handle complicated headers like this. So the param order is: [uav[, uav[, uav[, ...]]]], [cb]

). On the kernel side individual constant parameters are loaded the same way as you pack them into a single buffer (with s_buffer_loads), but on my side, my prog just can't handle complicated headers like this. So the param order is: [uav[, uav[, uav[, ...]]]], [cb]

Good luck with GCN asm! ![]()

PS: Oh! I added GCN minihelp: you can press F1 on a GCN keyword in the editor, and get a small description on the complicated ones. Or you can browse available instructions in the help pane. And use Ctrl+Space for coding (if you haven't use already).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

If anyone interested, I've made a small post explaining a simple GCN HelloWorld program.

This example implements and runs this simple OpenCL kernel:

__kenel test(__global int *uav, __constant int *cb)

{

int gid = get_global_id(0);

uav[gid] = gid + cb[0];

}

You can check it here -> http://realhet.wordpress.com/2013/06/10/gcn-hello-world-example/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Very good article, thanks ! Your explanation on how registers are used for buffer resources is of first interest for me, I had given up on this for weeks (since my question on SO didn't get any answer).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, You're welcome!

I'm not sure if I mentioned Table 8.5 in the ISA manual. That's the info on that 128bit Buffer Resource Descriptor.

In cases like __global int* it is quiet simple: There's a 48bit base offset in it, and a stride of 0, also the size is simply set to $FFFFFF00 (not much effort to protect the memory ![]() ) The last interesting field in it is data format which is 32bit.

) The last interesting field in it is data format which is 32bit.

In your SO example: s[4:7] is this big flat resource for your parameter.

s[8:11] is a small resource: it contains dword offsets for all the parameters.

The things are getting complicated when you use 3 or more parameters: there will be a 64bit pointer to an array of resources and/or offsets passed to the kernel at startup. (even there are as many as 16 user elements, OpenCL will use only 3 of them).

Anyways, if you understand s_load, s_buffer_load, buffer_load/store, tbuffer_load/store instructions then you can 'decode' that how the parameters work in your kernel. (FYI: s_load is the only one that works with 64bit absolute address, all else are using 128bit buffer resource descriptors)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the clarification ! But all those offsets are a bit confusing me.

In my particular example, after the first s_buffer_load, s0 contains the base offset of the "data" parameter, which only depends on the data type, is it correct ? If it's the case, why isn't the offset seamlessly added to the base address directly in the buffer resource descriptor ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know why is it that redundant.

Here's (__global int *a,*b,*c,*d): That's 4 Buffer Resource and 4 offsets total. And you have a 64bit address s[2:3] for the Buffer Resources, and a separate Buffer Resource (s[4:7]) for the offsets.

a=dwx4(s[2:3],0x50) ofs dw(s[4:7],0)

b=dwx4(s[2:3],0x58) ofs dw(s[4:7],4)

c=dwx4(s[2:3],0x60) ofs dw(s[4:7],8)

d=dwx4(s[2:3],0x68) ofs dw(s[4:7],0xC)

I agree, that 4 Buffer Res would be enough... Maybe this enables to pass pointers (that point inside specific buffers) to the kernel, not just whole buffers, but as I know, there is no such thing in OpenCL.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks (again) for your answers !

realhet wrote:

Maybe this enables to pass pointers (that point inside specific buffers) to the kernel, not just whole buffers, but as I know, there is no such thing in OpenCL.

Sub-buffers may be one of the reasons to manage things like this. But I don't see how the compiler could be aware of it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi realhet,

Thank you for your developing HetPas!

Do you know how HetPas could be made to work with the Cat14.x drivers? As many users are updating their drivers, this incompatibility is becoming an issue for me ...

I noticed that Cat14.4 does write -save-temps ... is that what was needed for the disasm to work?

Do you have plans to build a version adjusted to Cat 14.x? Is it possible at all?

Thanks,

Bdot

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Bdot,

You're welcome!

I had checked what's with 14.6beta, and it turned out that the driver developers changed the way parameters (buffers) are passed to the kernel. It's improved and uses less instructions and less vregs for my small testcase. It need some time but unfortunately my current job doesn't involve gpu programming, so thats why it is stuck at 13.4. Btw I wonder if 13.4 supports the new R290 cards. Maybe not, and then this is indeed a problem... But sooner or later I gonna have time off, and then I wanna do some hobby programming on GCN, so I'll probably have time to understand how the new elf works.

"I noticed that Cat14.4 does write -save-temps ... is that what was needed for the disasm to work?"

The problem is with -save-temps -fno-opencl -fno-il -fno-llvmir combination. It produces an ELF that only contains the binary executable. And you are unable to load this type of elf and disasm it, unless you are using an older catalyst (below 13.4 for example 12.10 is great). So you can see disasm for the opencl test, but no disasm for the mandelbrot example which is written in asm, and it has no higher level sources included in the elf file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi All,

If anyone interested, there is a new version of HetPas accessible on my site.

The most important improvement that it is now compatible with Catalyst 14.6, 14.7, 14.9 and 14.12. You can generate binaries using any of these versions that can be executed on any of these versions. Also it works with the recent R9 cards now.

I've also made a case study on implementing an alternative crypto-currency (Groestl) in GCN ASM.

You can read the series of blog-posts here: Implementing Groestl hash function in GCN ASM | HetPas

Although in the end it turned out while using appropriate OpenCL compiler(14.7) and with some black magic the OCL version became so fast that I approximate the final performance improvement over OpenCL only 10%. But it was a fun project for my holidays and I learned much from it. Hope someone can learn from it too. In the series of blog-posts I go through the most obvious optimizations to reach a noticeable speedup, and finally it resulted that those techniques can also be used in optimizing the OpenCL kernels.

My next hobby project will be a rope simulation with a little twist. I hope I will get to make it in this year, haha.

(Sorry about the lack of 'hello world' examples, they are still broken on the latest drivers. They need Catalyst 13.4.)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello realhet, awesome work you're doing here!

I'd love to look at it in detail and get some GCN ISA as well... the CL compiler is borderline random sometimes.

I would like to ask if you can take a look at my GRS-MYR implementation. Users have reported various interesting things with it... the cool thing is that I really wrote it for clarity over performance. I was expecting a very minor loss of speed....

Instead most users (7800 up, 280 up) reported a huge drop in rate... users with low end cards happen to churn along great at like 5x the speed! It seems to me GCN cores are not always the same but AFAIK CapeVerde and Tahiti are the same design. Do you have any theory on why could this be happening?

As a last note: I'm currently inclined to believe the T-table approach might be not optimal for GPUs.The nvidia folks seem to have a bitslices groestl implementation which increased perfomance by 3x.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I've checked your code: it's the same 8 table lookup thing. So I guess the same things should be applied:

- VGPRS<=128

- CodeSize<=32KB (for the main loop)

- Table lookups can be balanced between LDS and L1. 3x LDS and 1x L1 is the sweet spot.

CapeVerde vs. Tahiti scalability issue. I don't know... This thipe of thing doesn't use any shared resources, the code just can run on the CUs alone. So it should be scalable without a problem. Give a lot of workitems to it...

Bitslice: I checked it a bit and if I see it well, you just can't avoid the lookups. Those are the bottlenecks now, and that's why I can only slightly outperform a well optimized ocl code with asm. Maybe the lookpus can be faster as they don't need to be 64bit... Can you tell exact speeds on that NVidia bitslice approach? Current T-Table ocl is 33MH/s on the R9 290x. And the asm well be like 36 MH/s when somebody implements the first/last round optimizations.

As you said 'NVidia', I've heard about that awesome 3 operand bitwise logic instruction: all the 16*16 logic operand combinations can be selected by an immediate parameter. Maybe the NVidia version uses that too. Currently we have the only 3 operand bitwise operation that is BFI (a&b | ~a&c).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I know it is the same. It has been written to be the same in theory but it has this strange behavior (it results in 7750 beating 7850 and NV 750Ti).

The program I distribute adjusts worksize to both CU count and nominal driver clock, resulting in a number of hashes which is always 64n.

I also have several other variants (including mixed LDS/L1 which produced the very same results as yours); none seem to be considerably better than this on my hardware.

Unfortunately, I haven't got much from my users. Some of them don't even have proper English, I cannot really blame them. Most of this community seems to be very jealous of their data, and the few data they give is usually incomplete.

I cannot tell if their speedup stems from the operation you mention but I've heard NV has a swizzle instruction which is awesome for this kind of things. I hope next GCN will have it as well (if not on Tonga already) because for those simple algorithms it seems to be a far cry from LDS sharing.

Thank you very much for your time!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

GCN has swizzle too. (If you meen between the workitems of a wavefront)

I had the idea to try that with LiteCoin so that wat the LDS could be the bottlenect kinstead of the MEM and the math could be realized somewhat paralel using ds_swizzle instruction. As it is an ASIC territory it doen't worth it except for learning/experimenting purposes.

Small card/big card problem: Are you kernel launches taking at least 50ms? If you're around the minimum number of workitems it is risky because the LDS is 'randomizing' execution times. So I think it is better to have a few million *n instead of 64n workitems. Long running kernels always produce the best performances. This is only problematic when you have to process realtime data, but in mining it is not a problem.

And start with the biggest issue first: First VGPRS opt as going down from 150 down to 128 can run 2x faster. Then InstructionCache hits can add let's say 50%. and only after that the LDS/L1 balance became important.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

realhet wrote:

GCN has swizzle too. (If you meen between the workitems of a wavefront)

I used the wrong term. They refer it as "shuffle" I think. But yes, I mean permuting private registers transversally across work items in the same wavefront. Is it exposed in OpenCL? I think I've missed it completely. Perhaps it is in CL2?

realhet wrote:

I had the idea to try that with LiteCoin so that wat the LDS could be the bottlenect kinstead of the MEM and the math could be realized somewhat paralel using ds_swizzle instruction. As it is an ASIC territory it doen't worth it except for learning/experimenting purposes.

I also did the same. My data does not support the idea of scrypt being memory-bound (regardless of either bandwidth or latency). My readings in CodeXL gave me ~95% VALUBusy if memory serves. This seems to go well with its reputation of being "too hot". If memory serves, my bandwidth measurements were ~30%. Everything I attempted to lower latency usage (at the expense of ALU) resulted in lower performance which I consider typical behavior of ALU-bound scenarios. Most of those measurements with GAP 2 if memory serves.

realhet wrote:

Small card/big card problem: Are you kernel launches taking at least 50ms? If you're around the minimum number of workitems it is risky because the LDS is 'randomizing' execution times. So I think it is better to have a few million *n instead of 64n workitems. Long running kernels always produce the best performances. This is only problematic when you have to process realtime data, but in mining it is not a problem.

This is not under my control. My software does not target core miners but rather occasional users. It is focused on keeping the system responsive so I target ~30ms per dispatch. Most are much lower. Testing is performed usually at ~100ms.

Grs-myr kernel under (14.9 I think) consumed 104/20 VGPR/SGPR and took 16.08 KiB. VALUBusy was around 30%. I'm afraid I don't understand your terminology... shouldn't it be small enough?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, and I wish you a happy new year ![]() !

!

Please check out my GCN Quick Reference Guide here -> GCN Quick Reference Card | HetPas

(Almost) every instruction is listed. GCN3 features are highlighed with red color.

Big thanks to AMD for the ISA manuals, and to matszpk for the amazing ISA encoding documentation here -> ClrxToc – CLRadeonExtender

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you realhet. I wish you happy new year too.

I have small affair. I found yet another error in the AMD GCN ISA manual: Just one opcode is not correct (I verified it): DS_WRITE_SRC2_B64 is not 204, but 205 (like in GCN 1.2).

Opcode 204 just hangs up GPU ![]() . That's all. Thank you for attention.

. That's all. Thank you for attention.

EDIT: I forgot about: opcode for DS_WRITE_SRC2_B32 is incorrect too. It should be 141. Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you heard the news? ![]()

Finally there will be an officially supported way to inject asm into the gpu.

I really hope they'll also implement the DD instruction to be able to compile any machine code.

So no more ELF patching/hacking will be needed when a new driver comes out.