PC Graphics


New RX 6800 very impressive!

I had been having issues running Far Cry 5, and just general grief, for a while now. I was on the fence about whether to go "green" or try a 6000 series card after all the prior driver issues, error 18s, etc. So I bought the Gigabyte RX 6800. It was not exactly a deal at about $200 over MSRP, but not a rip-off either. It is a BEAST! I regretted not having enough to buy the XT until I installed this.

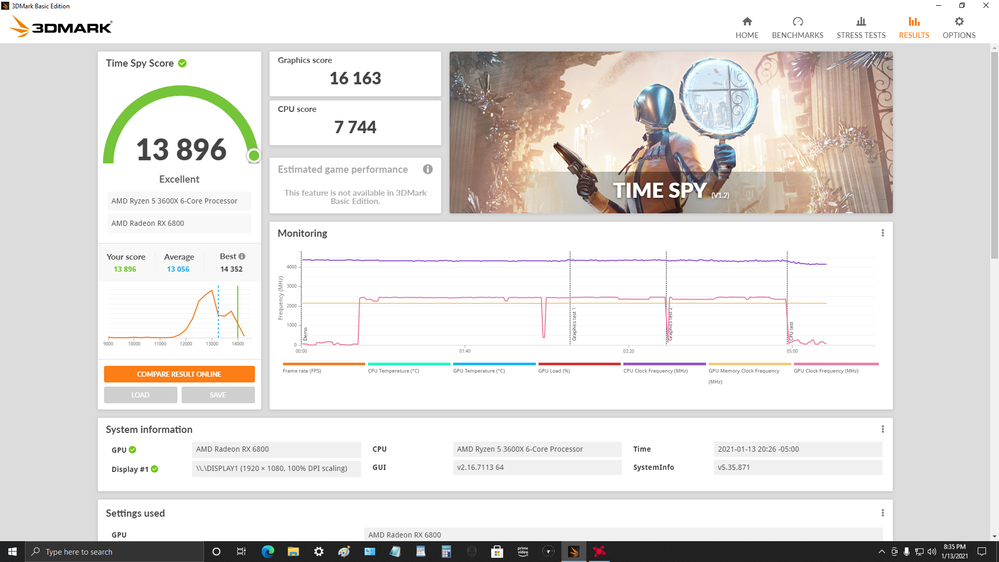

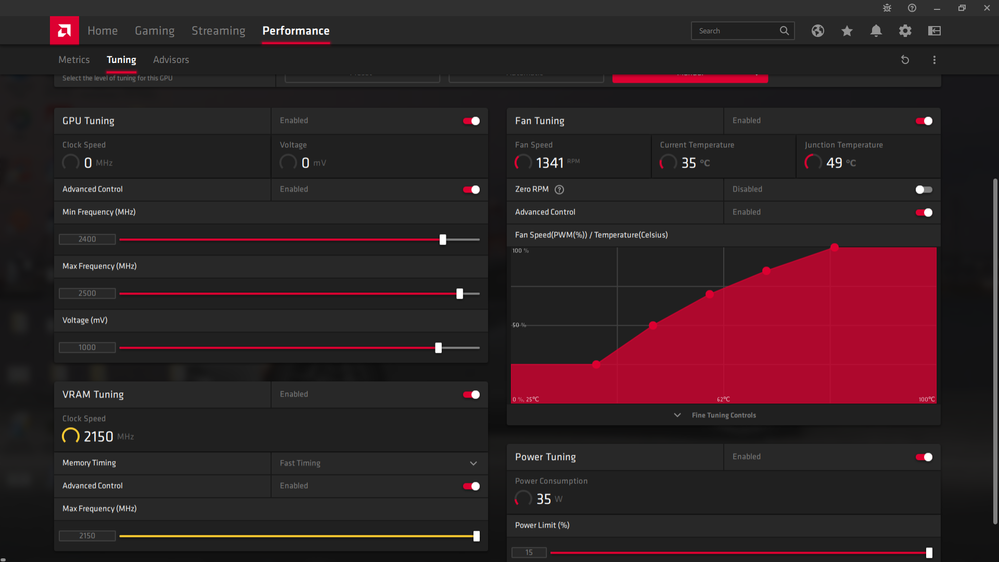

Overclocking was a snap. The 20.2.1 driver works fine. Amazingly, I have no complaints, none! I'm not saying all "partner" cards or reference models will run this well, but mine is set to 2400 MHz min / 2500 MHz max GPU clock, 2150 MHz VRAM clock and 1.00 V, and it passes 3DMark Time Spy with incredible numbers. If I lower the VRAM clock, the card performs worse. I mention that because some reviews put the VRAM ceiling at 2100 MHz; not in my case. It never pegs those settings anyway in testing or games: the GPU hits 2501 MHz or so and the VRAM runs fairly steadily at 2140-2148 MHz. Temps are around 70°C under full load.

An XT model doesn't have nearly as much "headroom" for overclocking, as they come running pretty "hot" out of the box. See attached results.

---

I got the 6800 XT... nothing to complain about lol (except 2 or 3 bugs in 1 or 2 games, but other games don't have them, so overall stability is excellent; of course the drivers and the card are new, and I'm not a child whining about every little thing that doesn't go my way NOW, as I often see on forums lol). So it's pretty impressive, coming from a Radeon VII, which was already good at 4K, but with the latest games I was beginning to have to lower some settings... but not anymore with the 6800 XT :)

Base model from AMD => OC to 2650 MHz, 2100 MHz RAM and undervolt set to 1030 mV (on air!! this is crazy!)

For now I play AC Valhalla at > 60 FPS, 4K ultra.

Finished Cyberpunk at 4K ultra with FidelityFX CAS set from min 80 to 100, at 45 to 60 FPS.

Playing BF V at 4K ultra with resolution scale set to 120%.

Project CARS 2, NFS Heat, etc.

No GPU crash seen so far after one month of gaming (sometimes the new game AC Valhalla crashes, but nothing very annoying; I just restart the game and continue). Besides that, the other games I play have never crashed.

Yes, these RX 6000 cards are pretty strong 4K gaming cards.

---

I can hit 2600, but it wasn't stable at 1.00 V, and I'm trying to keep temps down while getting superior performance. If I goosed it even to the max voltage, it might do 2600, but it's not worth any aggravation since it runs so well where it's at. It's basically a reference card in a Gigabyte box; they set the base clock a few MHz higher than a "stock" card, that's all. I also only play at 1080p, so FPS is incredible.

As you found out, there's no OC "headroom" on the XT, and there's only "headroom" on the non-XT because they left the clocks so low. Smoke and mirrors, lmao! It's like my old RX 5600 XT running as well as, if not better than, the RX 5700 XT; it was just too low on VRAM for some titles. The nice thing is I can resell it for more than I paid for it right now, which I might do. I might also just hang onto it in case this card acts up. Did you move the min clock up to within 100 MHz of the max clock? If not, do it; it smooths the card out and boosts overall FPS.

---

Mmh, in fact I stopped trying to OC after seeing performance decrease above 2650, but mine can do 2700 and maybe more. I think it would need an unlocked BIOS for that to be efficient: unlock the TDP and power limit a bit and it would be easy to go above 2800 (if that was unlocked too lol, but at least 2800 easily)...

My Radeon VII was good for that: 1800 MHz base clock, OC @ 2188 with 1.29 V (watercooled) and +77% power limit. That card was fun too.

---

If you have a base XT, you need a better cooler to go higher. It's built like mine: no flow-through cooler, a block of aluminum and a metal backplate with no thermal pads. I saw the teardowns of both of these "reference" cards. Lackluster in the cooling department, and worse for the XT. The VII was basically this card, minus the better memory handling. That was a very good card not long ago.

World's First 7nm Gaming GPU | Radeon™ 7 Graphics Card | AMD

---

:) My 6800 XT is watercooled, and I get the same OC as on air, 2650... The only difference is that now it's 70-75° junction temps, whereas on air it was 100-105° junction temps. About thermal pads on the backplate, I'm not sure about their effectiveness. When I was mounting my waterblock I searched for info or tests about that, saw the YT teardown videos etc., and found no data except guys on OC forums talking about maybe a very minor improvement, when not none or worse; maybe no backplate at all can even be better with case airflow... reality vs. some YT reviewers/influencers trying to make "content" ;) If it really changed something, they would have put some on; that's my view lol

But to go higher in frequency, it's really a matter of BIOS unlocking. The stock cooler is really sufficient. I had the WC loop from my VII that I could reuse, so I did, but in reality I didn't get any throttling without it, so...

---

The only case I have observed where it isn't as good is one game which bucks the trend: an indie game I'm playing, "Workers and Resources Soviet Republic".

With all the stats on screen from Afterburner, RTSS and HWiNFO, I can see how it performs with the CPU and the GPU in terms of load, clocks, fans etc., and then FPS.

That game uses my 9900K @ 5 GHz reasonably well but hardly makes use of the GPU. However, it does perform better in terms of FPS on the 1080 Ti than on the 6800 XT. That is consistent.

That is a bit disappointing at the moment, as it is my "go to" game.

Nvidia 1080 Ti...

AMD 6800 XT Red Devil LE...

Neither overclocked. GPU usage is low, as expected, but the 1080 Ti does seem the better performer in that title.

Others perform as expected.

The only issue in these times is, of course, price and availability.

I couldn't seem to place an image within the forum, only a link to it.

---

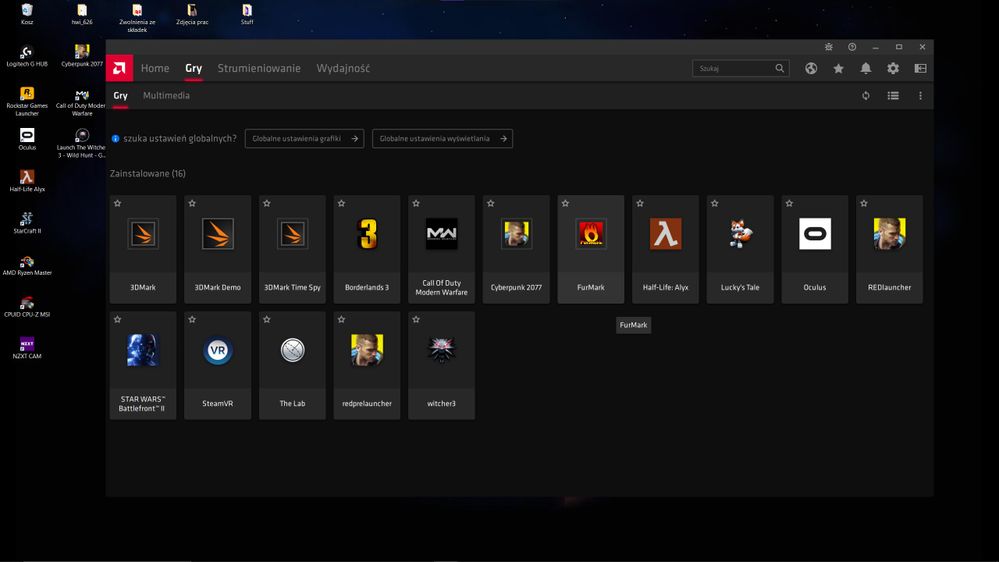

That game seems a little CPU-bound, but you really should play with the OC settings in Adrenalin to get the most out of the card. By sliding the min/max GPU clocks to within 100 MHz of each other, you'll notice a huge difference. I'd also recommend setting the OC to "Automatic", then selecting "Undervolt GPU" to find the lowest voltage it can run at; write it down. Switch to manual OC and enter that number or slide the bar to that voltage. Unlock all the settings so you can move the min GPU clock to within 100 MHz of your max. Slide the "Power Limit" to max; this only gives more power if it's needed under load. Turn off the "Zero RPM" fan setting. You can leave the stock fan curve or move it up a bit. Here's a pic of my settings; yours would be different. These changes really wake the card up, no matter which 6000 card you have.
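The rules of thumb in the post above can be written down as a quick self-check. This is a hypothetical sketch: Adrenalin has no Python interface, and the `ManualTune` class and its field names are invented for illustration. It only encodes the advice given here (min clock within 100 MHz of max, Zero RPM off), using the 2400/2500 MHz, 2150 MHz VRAM and 1000 mV values from the first post.

```python
from dataclasses import dataclass


# Hypothetical model of the manual tuning values described above.
# Field names are illustrative only; Adrenalin exposes these as sliders,
# not as a Python API.
@dataclass
class ManualTune:
    min_clock_mhz: int
    max_clock_mhz: int
    vram_clock_mhz: int
    voltage_mv: int
    zero_rpm: bool

    def problems(self) -> list:
        """Return deviations from the forum advice (an empty list if none)."""
        issues = []
        gap = self.max_clock_mhz - self.min_clock_mhz
        if gap < 0:
            issues.append("min clock is above max clock")
        elif gap > 100:
            issues.append(f"min/max gap is {gap} MHz; advice is <= 100 MHz")
        if self.zero_rpm:
            issues.append("Zero RPM is still enabled")
        return issues


mine = ManualTune(2400, 2500, 2150, 1000, zero_rpm=False)
print(mine.problems())  # → []

stock_like = ManualTune(500, 2500, 2000, 1025, zero_rpm=True)
for issue in stock_like.problems():
    print(issue)
```

The second example uses the 500 MHz floor and 1025 mV figures mentioned elsewhere in the thread as a stand-in for stock behaviour; it is illustrative, not a measured default.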

---

Hi mackbolan, I am a noob at overclocking, but I have an RX 6800 too and wanted to give it a try. Why did you undervolt the card? I didn't understand that. And why force it to a minimum of 2400 MHz?

I only did the basics here: I put memory and max power to maximum and increased the max MHz by 7%. My card can reach 2400 MHz and is running well, no crashes.

---

Forcing the min GPU clock to within 100 MHz of the max stops the frequency fluctuation and voltage spikes that could cause a random reboot. So mine is minimum 2400 / maximum 2500 MHz at 1.00 V, VRAM was stable at 21

---

Firstly, thanks very much for what you posted about overclocking the card. I did try overclocking it, and also managed to overclock the memory by 100 MHz.

However, with what you have written I now have a more methodical way of doing it. Again, many thanks; I'll give that a go, perhaps more so with other games. A manual fan curve seems more appropriate.

As for the game noted... yup, it has elements of being more CPU-demanding than GPU-demanding, a bit like Cities: Skylines. But I have noticed the following: with the 1080 Ti it is as though the GPU takes on more of the rendering and is able to run at much higher frame rates than the 6800 XT can come close to.

It is as though the drivers themselves aren't able to work fully with the game engine and support that rendering in hardware, so it is offloaded to the CPU, a bit like the part of the Time Spy benchmark that does CPU rendering.

Overclocking the 6800 XT does nothing for that game, as the GPU load is already pretty low.

A very opposite experience earlier today was running the game Medieval Dynasty. With that one, and GPU-Z open on my second monitor, you can see the GPU load up to 99%, the clocks at full, the power draw high and the FPS just hitting 100 or so. That game benefits from overclocking.

So the Workers game is not being utilised correctly or enough by the 6800 XT with the latest drivers. Whether that gets improved is, I suppose, up to AMD. I have reported it, but it is an indie game, if that matters.

Coming back to the overclocking and what you wrote, I would not have thought of undervolting as doing anything other than making things unstable! I guess you always have your fans running, low when not gaming, rather than stopped, with a manual fan curve.

With the Nvidia card I had a 2D and a 3D profile for the clock speeds.

With the 6800 XT, do you set it per game? You wouldn't want those clock settings permanently applied, would you?

---

I run with those settings all the time, no issues at all. Yes, the fans run all the time; that's much better than idling at 60°C with no load (70°C junction with no load) as it did at stock. No driver will fix a CPU-intensive game; only the game dev can do that, and I wouldn't count on it. For example, Far Cry 5 shows "Ryzen" on the splash screen, yet Intel CPUs run it better, as did Nvidia cards up until this RX 6800. Now I can run that game with full textures and "Ultra" everything at 1080p, my preferred gaming resolution, and never dip under 100 FPS. Without full textures but on "Ultra", it's around the 130 FPS mark. I think you run an Intel CPU, and that factors in: I run an X570 board, which allows the card to use PCIe 4.0, giving me extra bandwidth for the VRAM, plus a highly tuned 3600X with highly tuned 16GB of 3600 RAM with custom sub-timings. The 1080 Ti is a PCIe 3.0 card and would pair better with your setup, yet in more GPU-demanding games the RX 6800 will pull ahead regardless of slot generation. Basically, a matching AMD chipset/CPU/GPU will yield better results, even in a CPU-bound title, than Intel with this card. If you plan on sticking with Intel, to benefit from PCIe 4.0 you need a Z490 chipset and an LGA 1200 board; then compare the 1080 Ti to the RX 6800, a much fairer comparison. Although to use SAM, you need an AMD 5000 series CPU and a 500 series chipset. This RX 6800 in my setup at 1080p will most definitely keep up with or pass the 1080 Ti, but a fairer competition would be an RX 6800 XT or RX 6900 XT, the latter preferred, though that card is meant to trade blows with the RTX 3090. This setup is meant to go against the RTX 3070 but keeps up with or passes the RTX 3080, and it's not even the XT.

Call me a "fanboy" or whatever, but AMD paired with AMD usually works best these days. If using Nvidia, I would want a PCIe 4.0 card like the RTX 3080, since it has 10GB of VRAM. It won't be long before AMD has a better answer to DLSS and ray tracing, probably via a driver update for the 6000 series cards.

---

Thanks a lot for the reply. I will try your settings to see what happens here. I always thought voltage should be as high as possible for better performance; I never would have imagined it is better to undervolt.

How much performance did you get with your overclock? From the games I played, it seems I gained 6 to 7% performance with my simple OC. I play in 4K; I don't know if that changes anything regarding OC and the performance gained.

---

The cards come with too much voltage to make sure they all operate more or less the same. So if one has poor silicon, the extra voltage might stabilize it without the issue being noticed by the end user. It's basically a way to avoid RMAs, and it's done within reason, so that if the card failed to operate at 1.025 V, they would RMA it. More voltage doesn't always equal better performance; it depends on what you're trying to OC. For example, a Ryzen CPU doesn't like high voltage for a long time. Intel CPUs don't like a whole lot either, but more will sometimes enable a higher clock. More voltage always equals more heat, and heat eventually erodes the part. With most GPUs built today, Nvidia included, the less voltage the better for achieving the most stable yet highest clock speed possible. The "old" days of feeding more voltage to gain maximum performance are gone. We work the other way: from factory-high down to low.
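The "more voltage equals more heat" point follows from the usual first-order model of CMOS switching power, P ≈ αCV²f: at a fixed clock, dynamic power scales with the square of the voltage. A minimal sketch, assuming that model only (it ignores leakage current, which also rises with voltage and temperature), comparing 1.025 V against the 1.00 V used earlier in the thread:

```python
def relative_dynamic_power(v_new_mv: float, v_old_mv: float,
                           f_new_mhz: float, f_old_mhz: float) -> float:
    """Ratio P_new / P_old under the first-order model P ~ C * V^2 * f.

    Capacitance and activity factor are assumed unchanged, so they cancel.
    Leakage power is not modelled.
    """
    return (v_new_mv / v_old_mv) ** 2 * (f_new_mhz / f_old_mhz)


# Same 2500 MHz clock, 1000 mV instead of 1025 mV.
ratio = relative_dynamic_power(1000, 1025, 2500, 2500)
print(f"{ratio:.3f}")  # → 0.952, i.e. roughly 5% less switching power
```

Real cards also adjust clocks and voltage on their own under the power limit, so treat this as a direction, not a prediction of measured board power.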

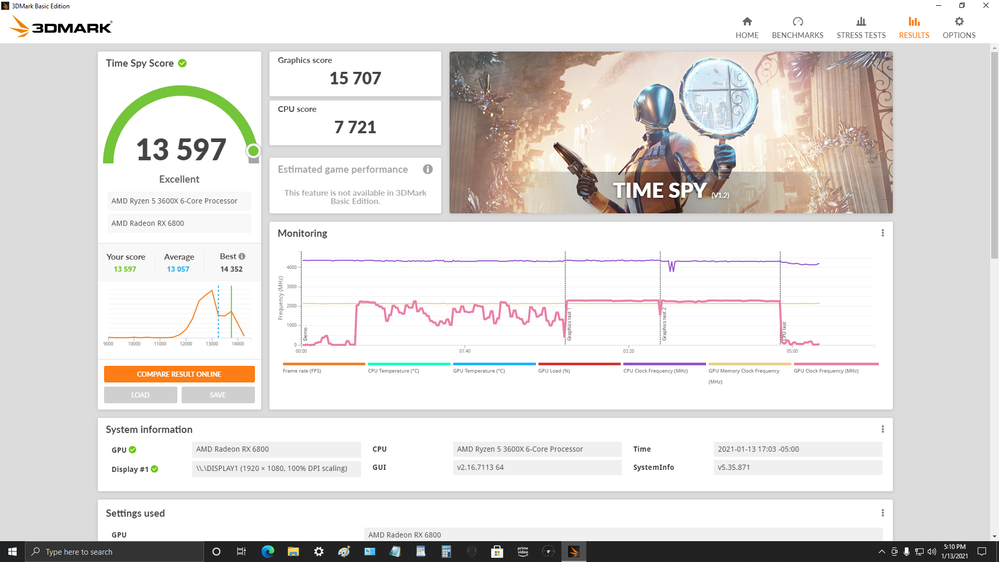

I don't know what the percentage gain is. Look at the original pic of my 3DMark Time Spy score here, then the one at the beginning of this post. You'll notice the clock is much straighter in the 1st pic, post-OC, vs. this stock test, where the clock is inconsistent. The OC results in better frame times and smoother gaming overall. FPS depends on the title, and I didn't run any games pre-OC, so I'm not really sure what I gained there. With FreeSync on in BF4, Ultra, 1080p, the average FPS is 174-178 via Radeon readings. With my RX 5600 XT I was at 154.7-164 FPS. So 20-30 FPS more? But it's much smoother, and with the OSD on I see the FPS at 200 more often than anything less; FreeSync smooths out any tearing. Temps run around 50-70°C depending on what's being rendered. Far Cry 5 was the biggest leap, since I now get a constant 100+ FPS with all the "eye candy" up, where the RX 5600 XT was at 95-100 on "high" settings and medium texture levels. I still need to try more games, but I'm sure this won't disappoint.
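For a rough number on the BF4 comparison, one can take the quoted ranges at face value (an assumption: each range is treated as the low and high average FPS on that card) and compute the worst-case, best-case and midpoint gain:

```python
def fps_gain(old_lo: float, old_hi: float, new_lo: float, new_hi: float):
    """Return (worst-case gain, best-case gain, midpoint gain in %)."""
    worst = new_lo - old_hi  # slowest new run vs. fastest old run
    best = new_hi - old_lo   # fastest new run vs. slowest old run
    old_mid = (old_lo + old_hi) / 2
    new_mid = (new_lo + new_hi) / 2
    pct = (new_mid - old_mid) / old_mid * 100
    return worst, best, pct


# RX 5600 XT: 154.7-164 FPS, RX 6800: 174-178 FPS (BF4, Ultra, 1080p)
worst, best, pct = fps_gain(154.7, 164, 174, 178)
print(f"{worst:.1f} to {best:.1f} FPS more, about {pct:.1f}% at the midpoints")
# → 10.0 to 23.3 FPS more, about 10.4% at the midpoints
```

Averages alone say nothing about frame-time consistency, which is the smoothness gain described in the post.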

---

I have posted in the General section asking why I don't get the option to add images to my posts or replies...

Anyway... I ran a Time Spy bench with my "new" settings...

I applied a 2400 MHz min and 2500 MHz max clock.

1100 mV. Memory overclocked to 2100 MHz. Power limit raised to its max value.

As yet the fan profile is still on auto. I did try it on manual and also tried removing the Zero RPM option. What I do not like is that you can't manually lower the fan to a very low level. I noticed that also applied to your manual curve. Seems a little odd?

You can see on that Time Spy bench that the clock speeds are less erratic than when left on automatic.

Overall it seems a worthy performer :)

EDIT: Does anyone know how to assign, or what the shortcut key is for, the "Metrics Overlay" (not the ALT+R key for the full overlay)?

---

Nice score! Now lock your CPU cores to an all-core OC to stop them hopping all over the place, and you'll find the Soviet game running faster. Notice how your CPU line is all wonky? An i9-9900K hits 5 GHz no problem; in fact, your BIOS probably has a setting to make it a one-click operation. It does not void your Intel warranty; I checked, because I was going to build with that same CPU. Intel even has a $20 overclock insurance deal where they'll replace the CPU for any reason.

You can hit "Delete" to remove the current hotkey combo and click on the field again to set a new one. Why you are missing the "add photo" icon, I have no idea. I'm using Edge, so if you're using another browser or a browser security plug-in, it may block that option.

---

I tried your settings on my RX 6800 and they worked perfectly; I gamed on it for 2 hours with no crash. I noticed my performance is now 10% higher compared to stock clocks. The only thing that concerns me now is the junction temperature: I saw it reach 99 degrees sometimes, but most of the time it stays at 90, while the GPU is just 68°C. Is such a high junction temperature normal?

---

That is some crazy OC, and I don't believe it should be used as a daily setting. Things have limits, and it's not good for hardware to toast it every time you game. Clocks will drop over time on the same settings, and thermal pad degradation will be much faster at such temps.

Dunno if anyone has noticed, but even when you set a voltage value, the GPU ignores it at higher OC. So when you put something like 2350 MHz with 950 mV, it will skyrocket to the 1025 mV limit. So just because someone changes it to a lower value doesn't mean the GPU actually runs with those settings.

---

The OC is not "crazy". AMD's software doesn't allow for "crazy", nor does the card's BIOS. Thermals for me and others are lower with this OC than at stock. Stock was 60°C idle and 90°C+ in games, because the cooler can't bring temps down quickly enough when the fans only start at 50°C or so. By that temp, you want to be spinning at around 40-50% on a reference card. These don't have the best coolers to start with if you have the basic "box" design without any flow-through at the rear of the card, like on some "partner" cards that come with an OC out of the box.

It doesn't ignore the voltage under load. If you see it going to 1.025 V, that's not the max; it's simply the power limit kicking in as needed. Thermal pad degradation happens over time, a lot of time, unless you're running the card outside its temp spec. That is nearly impossible, because the card will throttle and most users would notice performance going down and back off the voltage or clocks.

I also think you're comparing the 6000 series to the 5000 series or earlier designs, and that's apples to oranges. Even with MSI's tool you can't go beyond AMD's limits, unlike older cards where you could tick a box to go over the limits.

Solved: 6800 XT Safe Temperature Range? - AMD Community

110°C is TjMax; obviously we want to stay lower, and 70-80°C is far from baking anything. It looks like GPU throttling starts at 90°C. I never get past 80°C, and even that temp doesn't stay fixed while gaming. Temps go up and down, even in demanding titles, from 50-80°C, more often hovering around 67°C. That's both junction and core. Not all cards are equal; that's why I said "try" my settings if you have a similar card. By no means is anything I posted a danger to the GPU or thermal pads.

Running a card at 500 MHz and ramping it to 2500 MHz while in game is worse. The voltage spikes higher and the clocks are all over the place, causing stutter and whatnot. Some systems reboot from the PSU tripping its surge protection. So I consider this a workaround until AMD fixes the drivers for these 6000 series cards. You don't buy these for economy. It's like buying a Bugatti, expecting good gas mileage and using it to go to the grocery store. These are enthusiast to high-end cards for serious gamers, not Roblox players.

---

Just a remark: AMD GPUs throttle at 110... so whatever the temps, you can't get over 110, and 110 is absolutely safe for Tjunction.

If you have Tjunction at 90 with an OC, it's excellent, even more so if it's lower than that (on air).

As I said, my Tjunction with the 6800 XT watercooled and the "little" OC I can do (2650, pretty decent given that things are locked) is 70-80, which is fresh and cool for Tjunction and would leave plenty of thermal room to OC further.

With the Radeon VII we were not so tightly locked: I could OC until I reached 105-110 Tjunction (with watercooling too, up to 2188 MHz, starting from 1800), and that even corresponded to the max frequency I could get stable, with approximately +0.25 V! Beyond that it's not worth going further if the card throttles, or you need a better cooling system (at one point I swapped my old radiator for a new, larger one and gained nearly 3 to 5°).

---

Did you turn off "Zero RPM" for the fan? Possibly raise the fan curve for temps over 50°C. At 70°C you should really be running the fans at 80%. However, you're well within spec. I see 70-80°C junction in games at 1080p. 1440p or 4K will stress the card more, so a more aggressive fan curve should help. Getting the junction temp down a little should also get you more than 10% over stock. The biggest gains by far are at 1080p, though.
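A manual fan curve is just a handful of (temperature, fan %) points with linear interpolation between them. The sketch below uses hypothetical points loosely based on numbers in this thread (a 35% floor, 40-50% by 50°C, 80% at 70°C); the 30°C and 90°C endpoints are assumptions, and the curve editor in Adrenalin may interpolate differently.

```python
from bisect import bisect_right

# Hypothetical (temperature in °C, fan %) points, sorted by temperature.
CURVE = [(30, 35), (50, 45), (70, 80), (90, 100)]


def fan_percent(temp_c: float, curve=CURVE) -> float:
    """Linearly interpolate fan speed; clamp outside the curve's range."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # index of first point hotter than temp_c
    (t0, p0), (t1, p1) = curve[i - 1], curve[i]
    return p0 + (p1 - p0) * (temp_c - t0) / (t1 - t0)


for t in (25, 50, 60, 70, 95):
    print(f"{t}°C -> {fan_percent(t):.1f}%")
```

A steeper segment between 50°C and 70°C makes the fans react harder to load spikes, at the cost of noise.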

---

I did try an all-core full overclock without SpeedStep etc. It made no difference in FPS for that particular game. I didn't think it would, but I do agree that the solution for that one will lie either with the devs, whom I am in contact with, or even with AMD and their drivers, although that's unlikely.

Running the card with the core clocked at 2400 to 2500 has no effect at all when it is just in Windows; check the core clock then. I agree that using the software that forms part of the official drivers to set the values you noted will do no harm. And you are right regarding the max temp these cards are able to run at. If anything, undervolting the card as you and I have been able to do might have a positive effect on its longevity.

I will probably turn off Zero RPM for the fan; I'll see about that. As it is, by doing so I'm obliged to accept a minimum of 35% of full fan speed running all the time.

That value is hard-coded in the BIOS. I understand the BIOS can be modified etc. and that my card has a dual-BIOS switch, but that is something I'm not concerned with. A pity that there is a need for that, but there is.

---

If you have the dual BIOS, just turn on the power! Those fans barely make noise at 35% and should be no more than a "whoosh" at worst. I can't even hear my fans at 80%. Of course, they are more or less the AMD fans, nothing fancy. Remember, with a PCIe 3.0 board you're missing tons of VRAM bandwidth. It's significant because it affects the entire PCIe bus, from CPU to RAM latency to GPU memory bandwidth and more. It's not like moving from 2.0 to 3.0; this is a big jump, like USB 3.1 going to 10 Gbps from the 5 they had.
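For reference, the raw slot bandwidth can be worked out from the signalling rate: PCIe 3.x runs at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding. A minimal sketch of that arithmetic for an x16 slot, per direction and before packet/protocol overhead. Note that this is the link between the CPU and the card, which is separate from the card's own on-board GDDR6 bandwidth.

```python
# Per-lane signalling rates in gigatransfers per second (1 bit per transfer per lane).
RATES_GT_S = {"PCIe 3.x": 8.0, "PCIe 4.0": 16.0}


def pcie_gbytes_per_s(gt_per_s: float, lanes: int = 16) -> float:
    """Per-direction link bandwidth in GB/s (10^9 bytes) after 128b/130b encoding.

    Packet and protocol overhead are not subtracted, so real transfers are lower.
    """
    return gt_per_s * (128 / 130) * lanes / 8  # divide by 8: bits -> bytes


for gen, rate in RATES_GT_S.items():
    print(f"{gen} x16: {pcie_gbytes_per_s(rate):.2f} GB/s per direction")
# → PCIe 3.x x16: 15.75 GB/s per direction
# → PCIe 4.0 x16: 31.51 GB/s per direction
```

So a 4.0 slot doubles the link bandwidth; how much that matters in a given game depends on how much data actually crosses the bus each frame.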

---

One thing I have realised when it comes to PCs and noise: what the user considers loud or acceptable is very subjective. It is not just about dB; we all differ in what we are willing to listen to.

In that respect, my 9900K overclocked to 5 GHz plus this GPU running as it is gives me a good balance between performance and low noise. I do understand how the PC as a whole could be improved by what you suggest, or I thought I did a little; you have explained it much better, though, thanks for that. Although I didn't really want anything more tempting me to go out and spend another load of money; my new Dell 165 Hz FreeSync Pro monitor and this card were expensive enough!

Yeah, I understand how well these cards would complement an AMD-focused build, in terms of motherboard and a Ryzen chip. If I hadn't got this 9900K running at 5 GHz, that might be something I would consider. You can see from the Time Spy bench that the PC is running fine. Spending the kind of money needed to make any change is simply too much, especially in these times, when we're rarely able to get anything at RRP. Then again, even being able to buy a GPU like I just did is a challenge. I shudder a little to think what I paid for this card compared to what they were supposed to be priced at. My goodness, the challenge wasn't just the mental gymnastics I needed to accept the pricing, but actually grabbing one in stock before it vanished from my basket before I could pay for it!

I don't know, but I just hope that one day prices might stabilise a little better than where they are now. There are many factors, though, suggesting we might have to get used to some increases beyond what was once RRP, or even MRRP for the AIB cards and other hardware. Sad times if correct.

I am most impressed with both the drivers and the GPU so far.

It did make me smile, perhaps a coincidence, but as I carefully watched the GPU drivers being installed I noticed some files labelled "ccc" etc., which reminded me of the ATI Catalyst Control Center of many years ago. lol

---

Correct. For me, 1500 RPM is what I can stand, so it's around 1300-1400, which means stock 2250 MHz with 89% voltage (900 mV). If not for the spikes, it would probably be stable at 850 mV, but in games it crashes after some time. So I'm waiting for a working curve editor in MSI Afterburner. I'm sure it's capable of 820 mV with stock power, but those goddamned power spikes are killing stability.

To be honest, I'm a bit disappointed with the drivers. They are much more user-friendly than Nvidia's, but under the hood it's a mess.

---

I had always been a user of Afterburner + RTSS + HWiNFO64. Between them I would get great statistical information; I posted a screenshot earlier in the thread.

But, as you know, AB can't yet control much on the 6800 cards. The creator hasn't got his sample card yet, and an update should be out soon.

However, I still had those three utilities installed but not started (they were disabled from running in the system tray), and yet I still found an issue with Wattman (I think that's what it's called) where my Power Tuning and VRAM settings would go back to their defaults after the PC was switched off and restarted. After removing them (perhaps it was only the AB software), on a fresh boot all my settings are now where they should be, without those two being reset.

I'm very new to these drivers, and at first I set an individual profile for each game. Now I have set a global profile that seems to be working as I like. I also like the Metrics overlay that can be enabled by a shortcut. It's not as comprehensive or detailed as those three utilities, but it is OK.

For me, the Nvidia Control Panel has not changed in a very long time; I always unselected GeForce Experience when installing. It worked, though, and served me well for many years.

What makes you think of them as a mess, and in what way?

---

It's probably motherboard/PSU related (motherboard and BIOS more likely). I have noticed that Wattman settings reset when I restart the computer, and it sounds like something is going on with PCIe power-off. Maybe check the BIOS for power-off options, "on power loss" and other options in the advanced tabs.

For me it's a performance and optimization mess. Some games work much better than others. Maybe it's related to the game's own optimization, but it's weird that a 2080 Ti gets 200-210 FPS in Warzone (at least on the select screen) while the RX 6800 pulls only 130-140 FPS in the same scene. In the game itself it's 160 vs. 100 FPS in similar places, so there is definitely something wrong. On the other hand, Cyberpunk is rocking 60-70 FPS on the RX compared to 55-60 on the RTX.

And yes, for me Afterburner is a must-have; it's as perfect as it gets for voltage and power control, nothing can beat it. I know frame times probably won't be as smooth with it, but still, I prefer stability over frame times.

---

ah, I understand you.

I did have some reservations when moving over to Red Team card due concerns similar to what you have expressed in relation to some game differences.

I think that it was in my first post within this thread that I noted, with disappointment, my initial findings with the game Workers and Resources Soviet Republic. I have played that game a lot and have always monitored both the CPU and GPU usage and overall FPS performance. Being a city builder type of game the FPS is not absolute, in terms of determining of how well it runs. The CPU, perhaps IPS (Instructions per second) still contributes to that overall feel. However FPS is still a factor and can be measured.

With my 1080Ti I would often cap the FPS to 100, that way I would not put the GPU under too much load and so not be too loud with the fans. If I did not cap it I could run at around 120 FPS on a certain sized city. On a new map the FPS would go beyond the Gsync range of the monitor, at 165hz. With that setup, and my 9900k at 5Ghz all was well.

The same city with the same CPU and now with the 6800XT Red Devil (overclocked or not) and the performance is overall significantly lower. Yup the GPU load is very low , it gives me the impression that the GPU is not picking up correctly and much of the rendering is being done by the CPU and not the card. It is not as tho the GPU load is high, as noted it is in fact low.

As noted with the 1080Ti and that game it ran at a significantly higher FPS consistently where the 6800XT is not struggling as in overloaded, per se, but is really not able to come close to the FPS that the 1080Ti did.

It is as tho the games engine / driver at a low level are not working with the GPU to optimise the games potential. Depending on what particular fence you sit on you can point to the Devs of the game as needing to ensure that it works as it should with the AMD card or with AMD to offer wider support with certain types of game engines and how they are utilised.

To hedge my bets, I have contacted both.

I do remember having these feelings in the past when I had an AMD/ATI card. Back then it was titles like Total War and Civilization-type games.

Check whether the game was automatically added in the Games tab. I had a huge problem with Witcher 3, where I was getting a ridiculous 60 FPS at 1080p; after manually adding the game to the Games tab, it started working like a charm at 150 FPS (110 with ENBSeries). I had promised myself never to buy an AMD GPU... damn me and the RTX shortages. I had a 3070 on order, but after 2 weeks it was still out of stock where I had ordered it, so I canceled, and an RX 6800 showed up as available to collect the same day. I had already been 2 weeks without a GPU/computer, since I had sold my 2060 Super for almost the same price I bought it for, aaand the Cyberpunk release was 2 days later.

I do wish the icon were there for me to post screenshots, ah well.

Yes, it is within the game's settings, as such....

This is a screenshot from the 1080Ti showing a scene. The card is limited to 100 FPS for fan noise etc....

Now I'm using the Metric Overlay, only it doesn't seem to show up when taking an F12 screenshot in Steam. But on the EXACT same screen I get 40 FPS.

That is a real performance difference: going from a capped 100 FPS down to 40.

I'll try to see if I can get that screenshot with the overlay showing.

Point taken on Nvidia stock; zero 3080s for me. The prices are crazy whatever you buy.

I was very lucky to be able to get this Red Devil 6800XT LE. The first one got snatched from my basket while I was waiting for my payment to go through. By chance I checked later and they had added another: right place at the right time. Sad times really.

Turn off Anti-Lag, for sure!

Did that, and now....

Still half of what I know the 1080Ti was capable of, and that 100 FPS was capped. I tried to match the screens as closely as I could. You can see the FPS in the top right.

This might not be a popular thing to write, but I do not think I would see this with an Nvidia card. It is just the way it sometimes is: certain games perform as you would expect on either Green or Red cards and not as well on the other. A broad generalisation, I know, but I have found that it has merit.

It's unfortunate if the game you are playing is one of the bad ones.

Yes, I did raise my fan speed. It is running at 2850 RPM with the GPU at 67 °C, so the GPU itself is perfectly cool; only the junction temp is high. I did some testing and found that even with the RX 6800 at default settings, the junction temp already reaches 90 °C and the GPU reaches 77 °C, so I guess reaching 100 °C junction and 70 °C GPU with an OC is not such a big deal. My RX 6800 is the reference model, I run it with my case open, and I have an air conditioner in my room, so the ambient temp is around 21 °C.

I also noticed that my GPU runs at 99% usage all the time, and the power draw stays around 233 W. When you play at 1080p, do you get 99% usage all the time too?

Another question: do you guys know if AMD supports VRR? I can't find any option to enable it in the driver. I found the FreeSync option, but my TV doesn't support FreeSync; it does support VRR.

Before my RX 6800 I was using a GTX 1070 Ti, and the RX 6800 pulls double, sometimes even triple, the FPS of the GTX. It's very strange that you guys are getting worse FPS with the RX 6800 than with the GTX 1080 Ti. I have always played at 4K, so maybe that's the difference.

@mackbolan777 wrote: Did you turn off "Zero RPM" for the fan? Possibly raise the fan curve for temps over 50 °C. At 70 °C you should really be running the fans at 80%. However, you're well within spec. I see 70-80 °C junction in games at 1080p. 1440p or 4K will stress the card more, so a steeper fan curve should help. Getting the junction temp down a little should also get you more than 10% over stock. The biggest gains are at 1080p by far, though.
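To make the fan-curve advice concrete, here is a minimal sketch of how a piecewise-linear fan curve maps GPU temperature to fan duty. Only the 70 °C to 80% point comes from the advice above; the other curve points and the `fan_duty` helper are hypothetical, and Radeon Software's own fan tuning may interpolate differently.

```python
# Hypothetical fan curve as (GPU temperature in °C, fan duty in %).
# Only the 70 °C -> 80 % point comes from the post; the rest are assumptions.
FAN_CURVE = [(30, 20), (50, 40), (70, 80), (90, 100)]


def fan_duty(temp_c: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate fan duty (%) for a temperature, clamped at both ends."""
    if temp_c <= curve[0][0]:
        return float(curve[0][1])
    if temp_c >= curve[-1][0]:
        return float(curve[-1][1])
    # Find the segment that contains temp_c and interpolate along it.
    for (t0, d0), (t1, d1) in zip(curve, curve[1:]):
        if t0 <= temp_c <= t1:
            return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)
    raise ValueError("curve points must be sorted by temperature")


if __name__ == "__main__":
    for t in (40, 60, 70, 85):
        print(f"{t} °C -> {fan_duty(t):.0f} % fan")
```

A steeper segment above 50 °C is what "raise the fan curve for temps over 50c" amounts to: the fans ramp harder once the card warms up.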

@BrainsCollector @Vimes @Xpaulo360

IW (game engine) - Wikipedia. That is the legacy of the COD game engine, and it favors Nvidia cards. A little "trick" I used for NFS Shift 2 was to download just PhysX from Nvidia, and then the game ran OK. Not saying this will work, but it might even work in that Soviet game. At worst it does nothing. Knowing the game engine is extremely important if you're seeing huge differences in performance from game to game. Some research into your game and the engine it uses can help you make better decisions about what hardware to buy, or whether something like adding PhysX alone will help. The games you are talking about are written in C+, C++, and Python, meaning they are CPU-intensive because of the mathematical computations involved. All "open world" MMOs are tough to play on any mid-range system. These game devs make it look easy when it's really not for the average rig. Cyberpunk is a good example of an obvious blight in a game's engineering; there is no fix for that one until the devs fix it. That's my game engine "101" course.

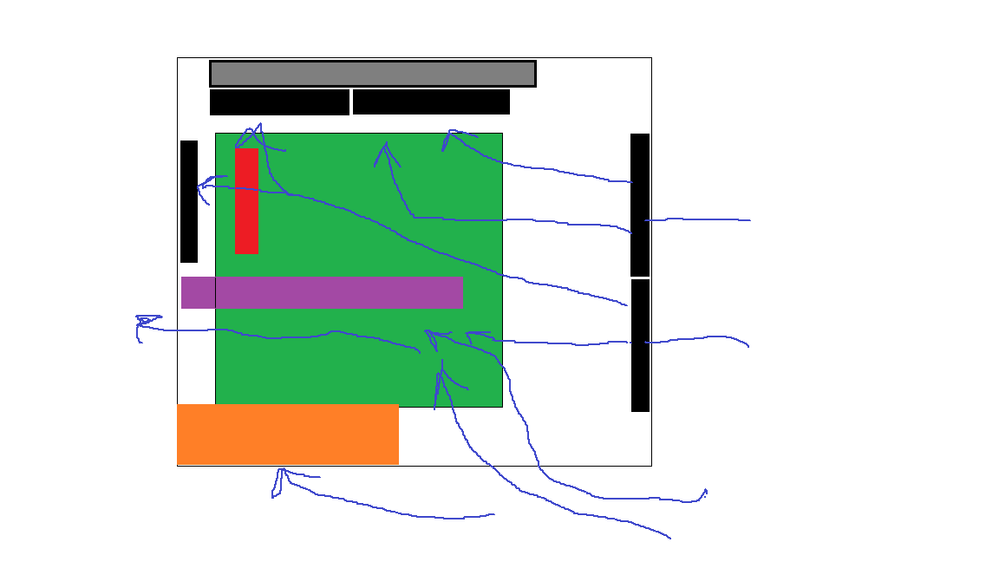

Now for running "open case": that is sub-optimal. Although one might think that running an A/C or pointing a fan directly at the card would help cool it, all it does is stagnate the airflow over other components. Your case should have slightly positive pressure and move air strongly from front to rear. A piece of tissue or napkin held over your intake and exhaust is a good way to check: it should stick hard to the intake and be blown fairly strongly at the exhaust. If you pop the side off while the PC is on and the fans speed up, you have it right. Running a side intake or a bottom fan as intake is a good plan. In general, have more intake than exhaust. Internal air at 26-30 °C is fine; it means that air is absorbing the heat and moving it out of the case. If the internal air is always cold, either you're in a freezer or you have no airflow, which actually makes parts like the VRM, south bridge, and RAM run hotter, because their heatsinks are designed for front-to-rear airflow. See my diagram for my Fractal R4; the side fan is not shown.
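The "more intake than exhaust" rule of thumb can be roughed out by comparing the summed airflow ratings of the intake and exhaust fans. This is only a sketch: the CFM figures and the `case_pressure` helper are hypothetical, and real case pressure also depends on fan static pressure, dust filters, and restrictive panels, so the tissue test above remains the practical check.

```python
# Hypothetical rated airflow per fan in CFM (cubic feet per minute).
# Real values depend on your fans, their speed, and filters; these are made up.
intake_cfm = {"front_top": 40.0, "front_bottom": 40.0, "side": 35.0}
exhaust_cfm = {"rear": 45.0, "top": 30.0}


def case_pressure(intake: dict, exhaust: dict) -> str:
    """Classify case pressure from the difference in total rated airflow."""
    net = sum(intake.values()) - sum(exhaust.values())
    if net > 0:
        return f"positive (+{net:.0f} CFM)"
    if net < 0:
        return f"negative ({net:.0f} CFM)"
    return "neutral (0 CFM)"


if __name__ == "__main__":
    print(case_pressure(intake_cfm, exhaust_cfm))  # positive (+40 CFM)
```

Slightly positive pressure means air leaks out of gaps rather than being drawn in through them, which also helps keep dust out.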

A 100 °C junction temp is hot but not over the 110 °C limit. I would advise putting the side back on and adding a side fan, or increasing cold-air intake as noted above. These "partner" cards, like the Red Devil, have different fans and a higher factory OC, so they tend to run hot. That model in particular does, and it has far noisier fans than a reference card. Supposedly they move more air, so you should be able to run them slower with the same effect. From reviews, the Red Devil runs hotter than most other cards. The cooler has a strange boxy design and the thing is thick. I would think you'd definitely want good front/side airflow to that card.

VRR would be the same as FreeSync in AMD for the most part. I did find this article about the RX 6800 and VRR:

VRR with RX 6800 XT and LG B9 not working. : Amd (reddit.com)

HDTVs make terrible monitors in general.

Yup indeedy, as I mentioned in my second post in this thread, on page 2: "drivers and game engine".

With the 1080Ti back in, all is good, as are my other games that were having some issues.

I had similar problems around 2008. At that time it wasn't indie titles but games considered AAA, such as Total War (IIRC it was Medieval II), and the card I had was the toasty ATI 4870. I also seem to remember some pretty bad texture issues in Civ IV, IIRC.

I also posted in another thread in the Graphics section, where many people are having poor performance in another particular game.

I understand you thought that maintaining a 5 GHz overclock on the CPU would speed the game up. I didn't think it would, and sadly it made no difference.

As noted by someone else in that other thread....

Nvidia seems to be much better when it comes to that. Kind of a disappointment, even though the 6000 series performs well in most AAA titles. I guess non-AAA titles don't sell GPUs, so they don't focus on those at all!

....I think there is an element of truth in that. It is not solely down to AMD to resolve; it is also down to some game engines and other factors simply preferring one architecture over another. As a consumer, you just have to hope that the games you play now, and will play in the future, are well served by the hardware you buy. If not, you have to make some tough choices.

Did you try adding PhysX? I found out it's still available as a separate download from the Nvidia driver. PhysX has even been open-sourced, although it is still Nvidia's. Might help, won't hurt.

I have a very old case here and no longer have its side panels. The case is white (the 90s kind of white that is now more yellow than white, lol). It has no fans in it, and I don't point a fan at the GPU. I only mentioned the A/C because where I live 30 °C is normal, so at least my PC doesn't run in a very hot ambient. When I have some spare money I will buy a new case.

Regarding VRR, I have a C9 TV, and I guess I will need to wait for AMD to make VRR work, because it works on the PS5 and the new Xbox but not on the RX 6000 series. I tried using CRU too, but it only worked for about 10 minutes before I got a "no input signal" message, so I had to reboot into Safe Mode to undo what I did in CRU.

How to enable Freesync on non freesync monitor or TV - YouTube

This guy has the same TV as you and a full tutorial on how to enable FreeSync using CRU and Nvidia G-Sync. It sounds weird, but I watched it and it does work for the C9. I think you ran out of bits; there is one part in the video that shows how to delete unused refresh rates in CRU to free up enough bits.

VRR is supposed to work with the 6000 series cards, but I haven't found any links showing it actually working yet. It is primarily a console-oriented feature, so it might be hit or miss. You can try "Enhanced Sync" in the Radeon software; I hated it because it caused a lot of lag. Alternatively, try capping the frame rate in the Radeon software at your maximum refresh rate, or use an in-game FPS limiter.

Unless a TV specifically says it supports FreeSync or G-Sync, you're on your own to figure out how to make it usable. HDTVs typically make horrible monitors if you want more than 120 Hz, and most only allow 60 Hz. In those cases, putting up with some screen tearing or using in-game FPS limiters is the best option. Be sure to use a cable rated for the latest HDMI version as well; most HDTVs lack DisplayPort inputs, and adapters are a bad idea with AMD. Sadly, VRR on your C9 is supported via Nvidia, but only with RTX 2000 series cards.

Yes, I watched the same video and did the same thing he did. It actually worked, and I could enable FreeSync in the AMD driver, but after about 10 minutes it stopped working and my screen went black. After that, the only way to get a signal again was to boot into Safe Mode.

All my life I have used V-Sync in my games, and I think it works fine: no screen tearing, at least, and when you have the horsepower to lock at 120 FPS it is very smooth. My TV is 120 Hz, so 120 FPS is what I need. The problem is getting 120 FPS at 4K; even with an RTX 3090 you can't do that in most games, which is very sad.

I know Nvidia works because my TV is G-Sync compatible, but I was really disappointed with the 8 or 10 GB of VRAM on those cards. At 4K a lot of games already use 10 or 11 GB of VRAM, so I don't know why Nvidia thinks only 10 GB is fine. Another thing is that where I live the RTX 3070 is a lot more expensive than the RX 6800, which really makes no sense. As for me, ray tracing doesn't matter at all; it won't work at 4K no matter what video card you have. They say "DLSS is a game changer, you get 4K and high FPS", but that's a lie. I have seen DLSS in a lot of games, and you lose a lot of image quality and sharpness using it. 4K is 4K; no upscaling will reach that level of image quality. If you use a 55-inch TV 4 feet from your face, the benefits of 4K are very clear, and DLSS doesn't get there.

OK, so you can cap your FPS at 120 in the driver, or per game if the game has that setting. 120 FPS at 4K is very hard to achieve, and you could probably leave all frame limiting off on that HDTV. If you do use a frame limit, go slightly higher than the refresh rate; even 144 is OK, because manufacturers tend to fib a little and the refresh rate tends to run slightly past the advertised figure. When you're running at 120 FPS or higher, FreeSync or VRR does little. It's when the game's FPS drops, say to 50 FPS in a cut scene, that it smooths out stutter. FreeSync and other frame control options truly benefit those with a refresh rate of 90 Hz or lower. I have a 185 Hz 1080p monitor, and FreeSync doesn't seem to matter one way or another. I think it might help in cut scenes, but otherwise it doesn't seem to do anything. FreeSync stops holding the FPS back past 90, so I'm usually above that.
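The frame-time arithmetic behind this advice can be sketched as follows. The 40 to 120 Hz window is an assumed example range chosen for illustration, not a published spec for the C9 or any other display, and `frame_time_ms` / `in_vrr_window` are hypothetical helpers. The idea is that adaptive sync can only match the refresh rate to the frame rate while the frame rate stays inside the display's variable-refresh window.

```python
# Assumed example VRR window in Hz; check your own display's real range.
VRR_MIN_HZ = 40
VRR_MAX_HZ = 120


def frame_time_ms(fps: float) -> float:
    """Milliseconds available to render each frame at a given frame rate."""
    return 1000.0 / fps


def in_vrr_window(fps: float, vrr_min: float = VRR_MIN_HZ, vrr_max: float = VRR_MAX_HZ) -> bool:
    """True if the frame rate falls inside the display's variable-refresh window."""
    return vrr_min <= fps <= vrr_max


if __name__ == "__main__":
    # 50 FPS mimics a cut-scene dip; 144 FPS mimics a cap set above 120 Hz.
    for fps in (40, 50, 100, 120, 144):
        status = "inside" if in_vrr_window(fps) else "outside"
        print(f"{fps:>3} FPS -> {frame_time_ms(fps):5.2f} ms per frame, {status} the VRR window")
```

The same arithmetic explains why the earlier drop from a capped 100 FPS to 40 FPS feels so different: each frame goes from 10 ms to 25 ms.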

GPU usage during a game is normally at 99%; outside a game, no. I forgot to answer that part. BTW, feel free to point a desk fan into your case at a 45-degree angle, blowing front to rear, since you have that type of case.