General Discussions (AMD Community Support Forums)


AMD fires back at 'Super' NVIDIA with Radeon RX 5700 price cuts

AMD unveiled its new Radeon RX 5700 line of graphics cards with 7nm chips at E3 last month, and with just days to go before they launch on July 7th, the company has announced new pricing. In the "spirit" of competition that it says is "heating up" in the graphics market -- specifically NVIDIA's new RTX "Super" cards -- all three versions of the graphics card will be cheaper than we thought.

The standard Radeon RX 5700, with 36 compute units and speeds of up to 1.7GHz, was originally announced at $379 but will instead hit shelves at $349 -- the same price as NVIDIA's RTX 2060. The 5700 XT, which brings 40 compute units and speeds of up to 1.9GHz, will be $50 cheaper than expected, launching at $399. The same goes for the 50th Anniversary Edition: with a slightly higher boost speed and stylish gold trim, it will cost $449 instead of $499.

That's enough to keep them both cheaper than the $499 RTX 2070 Super -- we'll have to wait for the performance reviews to find out if it's enough to make sure they're still relevant.
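A quick sketch of the price arithmetic in the announcement, using only the prices quoted above (the dictionary and function names are made up for illustration):

```python
# (originally announced price, launch price) in USD, as quoted in the article.
RX_5700_PRICES = {
    "Radeon RX 5700": (379, 349),
    "Radeon RX 5700 XT": (449, 399),
    "Radeon RX 5700 XT 50th Anniversary": (499, 449),
}

RTX_2070_SUPER = 499  # the comparison price quoted in the article


def price_cut(announced: int, launch: int) -> tuple[int, float]:
    """Return the cut in dollars and as a percentage of the announced price."""
    cut = announced - launch
    return cut, 100.0 * cut / announced


for name, (announced, launch) in RX_5700_PRICES.items():
    cut, pct = price_cut(announced, launch)
    gap = RTX_2070_SUPER - launch
    print(f"{name}: ${announced} -> ${launch} (-${cut}, -{pct:.1f}%), "
          f"${gap} below the RTX 2070 Super")
```

The cuts come out to $30, $50 and $50, and every card lands below the $499 RTX 2070 Super.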


---

GTX 1650 SUPER has 4 GB of GDDR6 Memory.

---

If you look at the numbers in TechSpot's RX 5500 XT review, the 5500 XT 8GB and the GTX 1650 Super are effectively the same in performance, and you can see the very large jump from the 1650 to the 1650 Super (37%). That is about the average uplift AMD claims the 5300 will have over the 1650, which is reasonable, especially if clocks can be raised to 5500 levels. That should be possible, given it's the same GPU and has an 8-pin power connector.

The one saving grace for it is that there is likely a much larger stockpile of RDNA GPUs than Turing GPUs, especially since there has been no mention of a GTX 1650/1660 replacement yet. That will likely change once RDNA2 cards launch and enough dies accumulate that didn't bin high enough to reach RTX 3060 levels of performance...

Now if it's priced at $100, that's a whole different ball game, but anything north of $125 is going to make it another dust collector.

---

I think they really should have put 4GB VRAM on it.

I think they also need something that can run on the 75 W PCIe slot alone, with no additional 6-pin or 8-pin PCIe power connector.
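For reference, here is a rough sketch of the power-budget arithmetic behind the 75 W question, assuming the nominal PCI Express limits for graphics cards: 75 W from the x16 slot, 75 W per 6-pin and 150 W per 8-pin connector. It ignores how the slot's 75 W is split across the 12 V and 3.3 V rails, and any transient spikes, so treat it as a back-of-the-envelope check rather than a compliance test. The names are illustrative.

```python
# Nominal PCIe power limits for graphics cards, in watts.
SLOT_W = 75        # x16 graphics slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector

# Candidate auxiliary connector setups, smallest budget first.
CONFIGS = [
    ((), 0),
    (("6-pin",), SIX_PIN_W),
    (("8-pin",), EIGHT_PIN_W),
    (("8-pin", "6-pin"), EIGHT_PIN_W + SIX_PIN_W),
    (("8-pin", "8-pin"), 2 * EIGHT_PIN_W),
]


def connectors_needed(board_power_w: float) -> tuple[str, ...]:
    """Return the smallest aux connector setup that keeps board power within the nominal limits."""
    for connectors, aux_w in CONFIGS:
        if board_power_w <= SLOT_W + aux_w:
            return connectors
    raise ValueError(f"{board_power_w} W exceeds the configurations listed here")
```

For example, `connectors_needed(75)` returns an empty tuple (slot power only), while anything up to 150 W needs just a single 6-pin.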

---

I'm betting they used an 8-pin PCIe connector so AIBs can make highly overclocked models, since it is the same GPU as the 5500, and the last thing they want is another publicized "AMD Violates PCI-SIG Spec" hassle. I don't think we will see a 75 W version. To quote the Tom's Hardware review of the 5500 XT:

Lower clocks won't shave off 40 W, and a cut-down GPU won't perform well enough in 2020, compared to an iGPU, to matter, especially with Renoir out. Not even Turing GTX was that efficient...

Also, correct me if I'm wrong, but don't "eSports" people want 144 or 250FPS because they die if they don't?

---

Well, it is good to see anything new at the bottom end, while AMD has been absent at the top end. Both have had nothing but refreshes at the bottom, so I applaud a low-end product that can game at 1080p 60 FPS, even if it won't be long-lived as new titles come out. The current low end has been too low end.

---

I meant to add that this product having less than 4 GB is pretty sad. If it had 6 GB it would be a super card at the low end.

---

I agree with running off PCIe slot power. It is what made the 1050 Ti as popular as it was. I'd like to see 6 GB on that card vs. the 3 GB, even if it drove the price up a little. It would then have a huge market of people who could game with it without needing new power supplies or new cases to fit a bigger card.

---

It does make you wonder what could be done at the low end with the RDNA2 architecture, though. Is power consumption low enough compared to RDNA that a low-power card could be possible? Even if they can't quite get to 75 watts, having to pull just a little more from a 6-pin could make most off-the-shelf or home theater systems upgradeable to such a card without needing a new power supply.

One of the things I am really wondering about with RDNA2 is audio. It isn't mentioned much, but there are a ton of complaints about RDNA cards used in home theaters. RDNA has some huge issues with audio compatibility, stability, and even playing without skips in the sound.

Have you guys heard this addressed at all with RDNA2?

---

I haven't heard anything confirmed about it, though it's reasonable to assume there will be -some- efficiency improvements, unless AMD is pulling another Vega 64 and pushing it to the very limit.

There is also the possibility that AMD is purposely being extremely tight-lipped, not running any web benchmarks or anything, and that RDNA2 is extraordinarily powerful, more than 50% faster than the 2080 Ti, and they want to drop the hammer like they did with the HD 4870, along with debuting a brand new software development team with a commitment to quality that matches or exceeds nVidia's. That's a one-in-a-billion chance, but who knows.

---

Vega 64, R9 Fury... more of the same.

---

I like the optimism. Unfortunately, by comparison, when the 4870 came out I would say that ATI had a pretty good run of products and reliable drivers. Hoping for better drivers to arrive with RDNA2 is probably unrealistic. If that were the plan, I can't imagine why the drivers wouldn't already be seriously improving, unless this means a whole new, separate driver going forward. I doubt that, though. My guess is we will get good new hardware and the same old drivers. Maybe once it's released the team can really concentrate on better drivers. However, if RDNA2 doesn't launch with them, it will cause a lot more ill will in the community.

---

AMD have already made the jump to 7nm and added more power gating to Navi in an attempt to drop the power consumption.

I am not sure how they expect to improve power efficiency on Big Navi (RDNA2) by "50%" and beat the Nvidia Turing RTX 2080 Ti on TSMC 12nm, never mind these newer Nvidia cards on a smaller (7nm?) process node, based on looking at Gears 5 performance.

Many reviews I read quote the AMD "TDP" for Navi RX5700XT and state that it is "not that far behind" Nvidia Turing 2070 Super.

I disagree.

Drop the RTX 2070 Super or RTX 2080 down to match the RX 5700 XT's FPS, or just raise the power target and set maximum fan speed on the RX 5700 XT to try to catch the 2070 Super, and then see what the performance/power consumption difference is.

---

Okay, I have to share this for grins. I have an old Phenom II 1090T setup. It had a 1050 Ti in it that I wanted to take and put in a compact i5 setup my son was using, but I built him a new R5 3600 setup a couple of weeks ago that has an RTX 2060. The 1050 Ti had originally been in that system, so I was returning it to that setup.

So, on the 1090T setup, I uninstalled the driver, ran DDU, and put my R9 380X back in the system. I loaded a late-2019 driver and set it up in my shop to replace an old three-core Phenom on AM2.

Stupid me, I thought I would play with the 2020 driver on it. So I ran DDU again and installed the driver. It installed fine, or so I thought. It was running after the install, but the next time I restarted, the screen went to a solid color, sort of green, and the system never booted again. The card doesn't work, and either the motherboard or the CPU shot craps. All I get is a single beep. No display, even with everything unplugged.

I found it funny, because I have even doubted the people who claim "the driver killed my computer."

I realize it is an old computer, but there isn't a single leaking capacitor or anything like that on that board.

I pretty much decided it wasn't worth trying to trace it down. I did put that 380x in another pc to test it. It is dead now.

All I know is it all worked fine. Loaded the 2020 driver and dead computer.

---

I have an XFX R9 390X OC Black Edition.

It is running on Hybrid Adrenalin 2019 19.12.1 + 20.8.3 Driver.

There is one problem though.

The fan control is completely broken for the R9 390X on any Adrenalin 2019 or Adrenalin 2020 driver.

If I leave the card on Automatic Fan control the fans remain off.

If I use the GPU as a Blender accelerator or for gaming, I have to make sure I set the fans to Manual and 100%.

If I do not do that, the GPU heats up to a very high temperature, then the fans kick in and the GPU downclocks.

Even the manual control for the fan speed is broken though.

The set RPM does not match the reported RPM. There is also a hysteresis effect in the fan input speed.

I reported that to AMD ages ago via the AMD reporting form, for both the Adrenalin 2019 and 2020 drivers.

Still broken.

Maybe the same issue killed your R9 380X?
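For comparison with the symptoms described above (fans staying off in Auto, set RPM not matching reported RPM), this is roughly how automatic fan control is expected to behave: a temperature-to-duty curve plus a small, intentional hysteresis band so the fans don't toggle on and off around the start temperature. It is a hypothetical sketch with made-up thresholds (`FanController`, `start_c`, `hysteresis_c` and the rest are illustrative names), not AMD's driver logic.

```python
from dataclasses import dataclass


@dataclass
class FanController:
    """Hypothetical auto fan control: linear temperature-to-duty curve plus a hysteresis band."""
    start_c: float = 50.0      # fans spin up at or above this temperature
    full_c: float = 85.0       # fans run at 100% at or above this temperature
    min_duty: float = 30.0     # duty cycle (%) once the fans are spinning
    hysteresis_c: float = 5.0  # fans stay on until temp drops this far below start_c
    running: bool = False

    def update(self, temp_c: float) -> float:
        """Return the fan duty cycle (%) for the current GPU temperature."""
        if temp_c >= self.start_c:
            self.running = True
        elif temp_c < self.start_c - self.hysteresis_c:
            self.running = False
        if not self.running:
            return 0.0
        if temp_c >= self.full_c:
            return 100.0
        # Linear ramp from min_duty at start_c to 100% at full_c; inside the
        # hysteresis band (below start_c but still running) hold min_duty.
        frac = max(0.0, (temp_c - self.start_c) / (self.full_c - self.start_c))
        return self.min_duty + frac * (100.0 - self.min_duty)
```

With these defaults the fans start at 50 °C, keep spinning at 47 °C because of the hysteresis band, and stop once the GPU drops below 45 °C.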

---

Wow, that sure would explain it. I don't know how to prove it, or whether any of the powers that be would care. It just sucks, because while that system is no good for current games, it is fine for anything from a few years back, and it was still great for browsing and streaming.

It is good to have a logical explanation, though. Thanks for the reply.

---

So did you watch the RTX3000 series launch?

Obviously Nvidia marketing and we need to wait for benchmarks and analysis.

They claim 2.7x shader performance, 1.7x RT Core performance and 2.7x Tensor Core performance.

They have built the GPUs on a Samsung 8N NVIDIA custom process and use Micron GDDR6X memory.

They claim a 1.9x Performance / Watt improvement.

They are claiming ~ 1.6x - 1.8x performance in "RTXon" games like BFV.

Interesting to me, they are claiming > 2x performance in Blender's Cycles.

Not much discussion on basic Rasterising performance and many questions to answer.

Some new technology, such as RTX IO, to get data quickly from the SSD into GPU VRAM using PCIe Gen 4.

A new low-latency technology called Nvidia Reflex. It sounds like Nvidia want a marketing tick to match "Radeon Anti-Lag", which only shows a minimal, barely perceptible benefit at low refresh rates (<= 60 FPS).

New high refresh rate G-Sync displays for eSports.

New Nvidia Broadcast App which uses AI to perform Noise Removal, Blur Background, Replace Background, Green Screen, Auto Zoom and Tracking.

I am watching the details about the new GPUs and the strange push-pull fan design next.

Obviously we need to wait for real reviews and benchmarks, but it looks like Big Navi will have to be something really special to even compete.

---

No, I am at work, so I have not seen any of it yet. Will look tonight. So, 8nm. I thought they confirmed 7nm last week. Just shows you, don't take too much as gospel until it releases.

---

Awesome, I see the 3070 will be $500! So many were saying it would rise to $600.

---

RE: Just shows you, don't take too much as gospel until it releases.

Correct, just what I have been saying. I do not watch tech YouTubers' prediction videos because they are yo-yo clickbait.

---

If those prices hold, yes, that looks like a great price for 2080 Ti-level performance.

---

I already love how Control looks at 1440p. I would love to run it with RTX on High at 4K, and that 3070 would likely do it.

---

Figure custom 3070s will likely be close to $600 if not $650, and this is all assuming AMD decides to price-match nVidia again. It would be very nice to have a price and performance war again, where the consumer wins and not the bank books...

---

I am sure plenty will, but EVGA and Zotac typically have a $500 card that usually still has better cooling and clock speed than reference. They are typically devoid of RGB. Looking at their sites, they both have such a card but no MSRP yet, plus EVGA has another with all the RGB bling.

---

EVGA especially; their KO range of cards were no doubt hot sellers, but I do hate the product segmentation, especially in the nVidia camp, where every AIB will have half a dozen models of the same card separated only by stock clocks and cooler... Let's just hope XFX is done with their moronic "THICC" series...

---

I can see the top end having a few models: a watercooled RGB model, an RGB model, and a plain reference-priced model.

I would think 3 SKUs should be enough. You are right, though; I think some have had 6 or 7 models of the same series.

---

The only technical aspects I do not like about the RTX 3090/3080/3070, from what I see so far, are the Total Graphics Card Board Power of 350/320/220 W and the physical size (> 40 mm, 2 slot) of the cards.

Not as bad as Vega 64 Liquid (TDP = 345 W, but with a power slider of +50%...), and AIBs will likely introduce AIO liquid-cooled versions.

---

With M.2 becoming much more common, though, 2.75-slot coolers are going to be the new normal, since the first PCIe slot has 3 full slots of space, especially on higher-end motherboards.

---

Yes I have seen that.

If you use an ADT-Link M.2 to PCIe x16 adapter, you still need to be able to get the cable out, so > 2 slots is still not ideal.

Nvidia are still supporting SLI on the 3090... not sure what motherboards will support that though.

---

Does NVLink require anything beyond the NVLink bridge? If not, then it will be supported by all motherboards with two or more PCIe slots.

---

"So did you watch the RTX3000 series launch?

Obviously Nvidia marketing and we need to wait for benchmarks and analysis.

They claim 2.7x shader performance 1.7x RT-Core performance and 2.7x Tensor Core Performance.

They have built the GPUs on Samsung 8n Nvidia Custom Process and uses Micron GDDR6X memory.

They claim a 1.9x Performance / Watt improvement.

They are claiming ~ 1.6x - 1.8x performance in "RTXon" games like BFV.

Interesting to me, they are claiming > 2x performance in Blenders Cycles.

Not much discussion on basic Rasterising performance and many questions to answer"

Interesting stuff going on here. First and foremost.

"They claim a 1.9x Performance / Watt improvement."

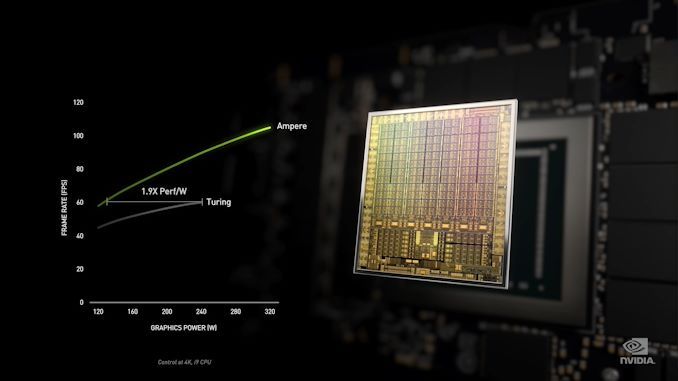

Probably not, judging by the chart they displayed at the reveal.

It is typical to compare GPUs at a fixed level of power consumption, not a fixed fps. If you compare 160W on both curves and look at the performance uplift it is more like 1.4X. But since there are no standards for this type of thing I guess they can measure it however they want.
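To make the iso-power versus iso-performance point concrete, here is a small sketch that computes a perf/W ratio both ways from two performance-vs-power curves. The curves are invented illustrative points, not values read off NVIDIA's chart; the point is only that the same pair of curves yields different "perf/W" figures depending on where you measure.

```python
from bisect import bisect_left

# Illustrative (board power in W, FPS) points, sorted by power, with FPS
# rising as power rises. Invented for this example; NOT read off NVIDIA's slide.
OLD_GPU = [(100, 30.0), (200, 50.0), (250, 60.0)]
NEW_GPU = [(100, 45.0), (130, 60.0), (200, 75.0), (320, 90.0)]


def _interp(points, x, xi, yi):
    """Linearly interpolate the yi-th field at x, where x is the xi-th field of each point."""
    xs = [p[xi] for p in points]
    if not xs[0] <= x <= xs[-1]:
        raise ValueError(f"{x} is outside the curve's range")
    i = bisect_left(xs, x)
    if xs[i] == x:
        return points[i][yi]
    x0, y0 = points[i - 1][xi], points[i - 1][yi]
    x1, y1 = points[i][xi], points[i][yi]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def fps_at(curve, watts):
    """FPS the curve reaches at a given board power."""
    return _interp(curve, watts, 0, 1)


def watts_at(curve, fps):
    """Board power the curve needs to reach a given FPS."""
    return _interp(curve, fps, 1, 0)


def perf_per_watt_gain_iso_power(old, new, watts):
    """Perf/W ratio with both GPUs at the same power: FPS_new / FPS_old."""
    return fps_at(new, watts) / fps_at(old, watts)


def perf_per_watt_gain_iso_perf(old, new, fps):
    """Perf/W ratio with both GPUs at the same FPS: W_old / W_new."""
    return watts_at(old, fps) / watts_at(new, fps)


print(f"iso-performance at 60 FPS: {perf_per_watt_gain_iso_perf(OLD_GPU, NEW_GPU, 60.0):.2f}X")
print(f"iso-power at 160 W: {perf_per_watt_gain_iso_power(OLD_GPU, NEW_GPU, 160):.2f}X")
```

With these made-up curves, a matched 60 FPS gives 250 / 130, about 1.92X, while a matched 160 W gives about 1.58X. Both are legitimate perf/W ratios; they just answer different questions.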

"Not much discussion on basic Rasterising performance and many questions to answer"

This is also interesting, given the size of the dies and the number of CUDA cores they claim. It doesn't look like the dies are big enough to physically hold 2X the number of shaders. And in fact, they don't.

This is from a Q&A session on Reddit.

- "Could you elaborate a little on these doubling of CUDA cores?

[Tony Tamasi] One of the key design goals for the Ampere 30-series SM was to achieve twice the throughput for FP32 operations compared to the Turing SM. To accomplish this goal, the Ampere SM includes new datapath designs for FP32 and INT32 operations. One datapath in each partition consists of 16 FP32 CUDA Cores capable of executing 16 FP32 operations per clock. Another datapath consists of both 16 FP32 CUDA Cores and 16 INT32 Cores. As a result of this new design, each Ampere SM partition is capable of executing either 32 FP32 operations per clock, or 16 FP32 and 16 INT32 operations per clock. All four SM partitions combined can execute 128 FP32 operations per clock, which is double the FP32 rate of the Turing SM, or 64 FP32 and 64 INT32 operations per clock.

Doubling the processing speed for FP32 improves performance for a number of common graphics and compute operations and algorithms. Modern shader workloads typically have a mixture of FP32 arithmetic instructions such as FFMA, floating point additions (FADD), or floating point multiplications (FMUL), combined with simpler instructions such as integer adds for addressing and fetching data, floating point compare, or min/max for processing results, etc. Performance gains will vary at the shader and application level depending on the mix of instructions. Ray tracing denoising shaders are good examples that might benefit greatly from doubling FP32 throughput.

Doubling math throughput required doubling the data paths supporting it, which is why the Ampere SM also doubled the shared memory and L1 cache performance for the SM. (128 bytes/clock per Ampere SM versus 64 bytes/clock in Turing). Total L1 bandwidth for GeForce RTX 3080 is 219 GB/sec versus 116 GB/sec for GeForce RTX 2080 Super.

Like prior NVIDIA GPUs, Ampere is composed of Graphics Processing Clusters (GPCs), Texture Processing Clusters (TPCs), Streaming Multiprocessors (SMs), Raster Operators (ROPS), and memory controllers.

The GPC is the dominant high-level hardware block with all of the key graphics processing units residing inside the GPC. Each GPC includes a dedicated Raster Engine, and now also includes two ROP partitions (each partition containing eight ROP units), which is a new feature for NVIDIA Ampere Architecture GA10x GPUs. More details on the NVIDIA Ampere architecture can be found in NVIDIA’s Ampere Architecture White Paper, which will be published in the coming days.."

So, there are actually physically 0.5X the number of shaders in Ampere as listed. The datapaths in each SM allow either 64 FP32 + 64 INT32 operations per clock, just like Turing, or 128 FP32. So in an FP32-heavy workload, the second datapath runs FP32 instead of INT32, doubling FP32 issue per cycle. That's significant because it will never be a straight 2X increase in performance: there is overhead in programming the engine to decide when to use 64 + 64 or 128. Depending on how well that works, you could get close to having physically double the number of shaders. Of course, in workloads that include a high number of integer instructions, the engine will perform exactly like Turing.

So NVidia are getting a little creative with how they are marketing a FP32 "core".
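The instruction-mix argument can be sketched with an idealized per-SM model based on the datapath description in the quoted Q&A: Turing issues up to 64 FP32 and 64 INT32 operations per clock side by side, while a GA10x SM has 64 FP32-only lanes plus 64 lanes that do either FP32 or INT32. The model assumes perfect scheduling and ignores memory bandwidth, caches and occupancy, so real games would land below these numbers; the function names are illustrative.

```python
def turing_sm_cycles(fp32_ops: float, int32_ops: float) -> float:
    """Idealized clocks for one Turing SM: 64 FP32 and 64 INT32 lanes run side by side."""
    return max(fp32_ops / 64, int32_ops / 64)


def ampere_sm_cycles(fp32_ops: float, int32_ops: float) -> float:
    """Idealized clocks for one GA10x SM: 64 FP32-only lanes plus 64 lanes that
    run either FP32 or INT32 each clock. All work shares 128 lanes, but INT32
    can only use the shared lanes, so it is capped at 64 per clock."""
    return max((fp32_ops + int32_ops) / 128, int32_ops / 64)


def ampere_speedup(fp32_ops: float, int32_ops: float) -> float:
    """Per-SM, per-clock speedup of GA10x over Turing for a given instruction mix."""
    return turing_sm_cycles(fp32_ops, int32_ops) / ampere_sm_cycles(fp32_ops, int32_ops)


for int_per_100_fp in (0, 25, 50, 100):
    speedup = ampere_speedup(100, int_per_100_fp)
    print(f"{int_per_100_fp:>3} INT32 per 100 FP32: {speedup:.2f}x")
```

In this model the speedup is 2.00x with no INT32 work, 1.60x at 25 INT32 per 100 FP32, 1.33x at 50, and 1.00x at a 1:1 mix, which lines up with the point above that integer-heavy code behaves like Turing.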

---

RE: It is typical to compare GPUs at a fixed level of power consumption, not a fixed fps. If you compare 160W on both curves and look at the performance uplift it is more like 1.4X. But since there are no standards for this type of thing I guess they can measure it however they want.

An RTX 2080 OC or Reference model runs at 225 W Total Graphics Card Power Input.

An RTX 2080 Ti OC or Reference model runs at 260 W Total Graphics Card Power Input.

A normal AIB RTX 2080 Ti model has a reference spec of 250 W Total Graphics Card Power Input.

Since that performance-versus-power curve is given at the 250 W point for Turing, it looks like they are showing an RTX 2080 Ti versus Ampere running the same game at 4K 60 FPS.

It seems perfectly sensible to me.

Most 4K displays are 60Hz, so 60 FPS is a good point to compare.

Why would you even look at the 160W point on that graph at all?

---

RE: So NVidia are getting a little creative with how they are marketing a FP32 "core".

No they are not.

They have changed the architecture.

So what.

I do not see Nvidia hiding what they have done.

They have explained the architecture.

---

"Why would you even look at the 160W point on that graph at all?"

If you look at any part of the graph other than the part they picked, the improvement is less than 1.9X, which is the definition of cherry-picking. At best an average user could see up to 1.9X performance per watt; in actuality, what is observed will be far less. NVidia could have used the term "up to" or given their performance/watt as an average, but they didn't. Hence, disingenuous. AnandTech discusses it at length as well.

NVIDIA Announces the GeForce RTX 30 Series: Ampere For Gaming, Starting With RTX 3080 & RTX 3090

---

One thing I liked about the way they released this is that there was no name-calling or, frankly, any mention of the competition at all.

They only compared against themselves. It is obvious that the CUDA architecture has changed, that a doubling of CUDA cores will not equal a doubling in performance, and that it can't process what it used to in one pass.

As you said it doesn't matter. It's just a change and it's okay for architecture to change.

We will just have to see when cards are in hand how that translates.

On another note, did you see Steve's video at Gamers Nexus? He pretty much ripped Intel a new exit hole over how sour they were about AMD. It was pretty funny, and disturbingly accurate.

---

They haven't changed the architecture at all.

Turing RTX 2080 Ti: 4352 CUDA cores.

Can it do 4352 INT32 instructions per clock? Yes.

Can it do 4352 FP32 instructions per clock? Yes.

Ampere RTX 3080: 8704 CUDA Cores.

Can it do 8704 INT32 instructions per clock? No, only 4352.

Can it do 8704 FP32 instructions per clock? Yes, but only if there are no INT32 instructions, otherwise limited to 4352.

"I do not see Nvidia hiding what they have done.

They have explained the architecture."

Their marketing material sure makes it seem like this is an apples-to-apples core count increase over Turing, which is not what is happening. Again, it would have been more transparent to list the CUDA core count as 4352 for the RTX 3080, with a maximum FP32 throughput of 8704.
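The counts in this post follow directly from the SM counts. A minimal sketch, assuming 68 SMs for both cards (derived here from 4352 / 64 and 8704 / 128, not taken from a spec sheet) and the per-SM lane counts from the quoted Q&A; the function names are illustrative:

```python
def turing_peaks(sm_count: int) -> dict[str, int]:
    """Per-clock peaks for a Turing GPU: each SM has 64 FP32 and 64 INT32 lanes."""
    return {
        "fp32_max": 64 * sm_count,
        "int32_max": 64 * sm_count,
        # FP32 and INT32 lanes are separate, so INT32 work doesn't take FP32 lanes.
        "fp32_with_int32_saturated": 64 * sm_count,
    }


def ampere_ga10x_peaks(sm_count: int) -> dict[str, int]:
    """Per-clock peaks for a GA10x GPU: 64 FP32-only + 64 FP32/INT32 lanes per SM."""
    return {
        "fp32_max": 128 * sm_count,
        "int32_max": 64 * sm_count,
        # If INT32 fills the shared lanes, only the FP32-only lanes remain.
        "fp32_with_int32_saturated": 64 * sm_count,
    }


rtx_2080_ti = turing_peaks(68)
rtx_3080 = ampere_ga10x_peaks(68)
print("RTX 2080 Ti:", rtx_2080_ti)
print("RTX 3080:   ", rtx_3080)
```

Both cards end up with the same 4352 INT32 lanes, and the same 4352 FP32 lanes once INT32 work saturates its lanes; only the FP32-only peak doubles to 8704.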

---

I guess it comes down to how big a change you consider an architecture change.

For instance, one of the big changes RDNA has over GCN is how compute is handled.

AMD considers that a new architecture. I would call it more of a change to the current architecture.

Not really that dissimilar to what Nvidia is doing, other than that we won't really understand for sure what they have done until cards come out.

---

What is a CUDA core on:

Pascal

Turing

Ampere

---

I guess I don't really understand why the distinction is necessary? Games are primarily FP32 because... that is what developers have always done. So even if the second FP32 datapath is only utilized half the time, it is still a tremendous achievement.

---

Nvidia changed the architecture to have two datapaths.

Datapath 1 can do FP32.

Datapath 2 can do FP32 or INT32.

NVIDIA's Ampere SM Detailed and the Reason why the RTX 3080 is Limited to 10GB of Memory | Hardware ...

Pascal had Datapath 1.

Turing had Datapath 2.

These new Gaming GPUs have a mix of the two.

---

TU116: When Turing Is Turing… And When It Isn’t - The NVIDIA GeForce GTX 1660 Ti Review, Feat. EVGA ...

On Turing:

" NVIDIA broke out their Integer cores into their own block. Previously a separate branch of the FP32 CUDA cores, the INT32 cores can now be addressed separately from the FP32 cores, which combined with instruction interleaving allows NVIDIA to keep both occupied at the same time. Now make no mistake: floating point math is still the heart and soul of shading and GPU compute, however integer performance has been slowly increasing in importance over time as well, especially as shaders get more complex and there’s increased usage of address generation and other INT32 functions. This change is a big part of the IPC gains NVIDIA is claiming for Turing architecture."