AMD Announces Vega II for February 7th: $699, ~30% performance improvement vs Vega 64

https://www.tomshardware.com/news/amd-ryzen-7nm-cpu-radeon,38399.html

So it's going to cost 100% more than the RTX 2060, yet only perform about 30% better, or cost over 75% more than Vega, yet deliver 30% more performance... So much for getting somewhat excited about Vega II coming to light. I wonder how thick a coating of dust these will collect in the warehouses, as there is -no- reason to purchase them: at $700 you're higher than the RTX 2070, and if you're a content creator you should just go with Vega. Worse yet, there's no pressure on nVidia, so prices will remain sky high.
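A rough sketch of that price-versus-performance arithmetic in Python. The RTX 2060 and Vega 64 prices below are assumptions (they are not stated in the post, only implied by the "100% more" and "over 75% more" figures), and the 30% uplift is the announced claim, not a measured result.

```python
def premium_pct(new_price: float, old_price: float) -> float:
    """Percent by which new_price exceeds old_price."""
    return (new_price / old_price - 1.0) * 100.0


VEGA_II_PRICE = 699.0    # from the announcement headline
RTX_2060_PRICE = 349.0   # assumption: launch MSRP
VEGA_64_PRICE = 399.0    # assumption: typical price at the time
PERF_GAIN = 1.30         # claimed ~30% uplift over Vega 64

premium_vs_2060 = premium_pct(VEGA_II_PRICE, RTX_2060_PRICE)
premium_vs_vega = premium_pct(VEGA_II_PRICE, VEGA_64_PRICE)

# Performance per dollar relative to Vega 64 (1.0 would mean equal value).
value_vs_vega = PERF_GAIN / (VEGA_II_PRICE / VEGA_64_PRICE)

print(f"Price premium vs RTX 2060: {premium_vs_2060:.0f}%")
print(f"Price premium vs Vega 64:  {premium_vs_vega:.0f}%")
print(f"Perf per dollar vs Vega 64: {value_vs_vega:.2f}x")
```

Under those assumed prices, the card would deliver noticeably less performance per dollar than Vega 64, which is the point the post is making.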

Meh, kind of disappointing with this one. I was hoping its performance would be beyond the 2080 Ti, but it only matches the 2080. I'll wait for the new Navi GPUs that are supposedly going to be announced later this year before I spend my money. I'm not all that excited for the Ryzen 3000 either. I'd rather have a 7nm 16-core, 32-thread CPU, if not higher.

Well, supposedly Ryzen 3000 matches the Intel 9900K in terms of single-threaded IPC. That's a full 32% faster than first-generation Ryzen (1000 series) and 23% faster than Ryzen Plus (2000 series), which is a good reason to be excited IF it holds true. Given that AMD demoed it live at CES with Task Manager shots, the claim holds a fair bit of credence. Ryzen 3000 will also be chiplet-based (CPU die + I/O die) like EPYC, so programs which depend on memory access should also perform much better. As someone with a 1800X, I am definitely interested.

I wonder if this is just reference GPUs or if there will be custom ones available too.

As a Content Creator / Developer... the Radeon VII is exceptional value, with 16GB of HBM2 for $700. As a Gamer, however, I'd rather have an 8GB Variant that cost $500; at that price it suddenly becomes an attractive Enthusiast / Performance Gaming Card.

With this said, the rumours are that only 20,000 will be available for launch, with a further 40,000 for the full production run, because AMD decided to switch to a "Full Ramp" on Navi after Engineering Samples came back above expectations. If that is true, the original plan was likely to release and segment Vega 20 across the Enthusiast / Performance / Prosumer Market (so we likely would've also seen an 8GB Variant replacing the current Vega 64 and possibly a cut-down version replacing the Vega 56, at the $500 and $400 Price Points respectively). Still, as it stands, what we'll ultimately see is Navi as the Mainstream.

Now they've essentially denoted "Mid-2019" as the release of Ryzen, and Lisa Su also mentioned Navi 7nm as part of said "Future Announcements closer to Launch"... and we know May is their 50th Anniversary. So while I did originally suggest they'd keep with the Late-March / Early-April launch (in line with previous years), I think what we'll likely see is a massive announcement at GDC (in March) that covers the entire Ryzen and Radeon (Navi) 3000-Series, with a May Launch of essentially all of their Catalogue (keep in mind May-June is when they've historically launched their APUs, with Ryzen with Graphics being no different).

The rumours for said products also are very close to my original predictions, in both price-points and estimated performance; plus we know that the 500-Series Chipset (X570) Boards are going to be shipping in Feb / Mar... which will actually be good to be able to get the Motherboards and see some BIOS Revisions prior to the CPU / APU launches.

•

It of course is very frustrating not to see the Radeon 3070 and 3080 at least announced, even if nothing was revealed beyond the MSRP and suggested performance.

Because if my predictions and the rumours (of which there are 3 that align very closely) are true, then we should be looking at the Radeon 3080 being $250 MSRP with RX Vega 56 / GTX 1070Ti / RTX 2060 Performance, whereas those Cards are $350 MSRP.

Now here's the thing: the GTX 1070Ti, at least in terms of Performance within the GTX 10-Series Product Stack, is Fantastic Value (for an Enthusiast GPU, and relatively speaking). I mean, you're getting "Close" to GTX 1080 Performance ($500) for about $100-150 less.

Yet as a Mainstream (GeForce xx60) Card, which is what the RTX 2060 is, that same performance is suddenly $50-100 overpriced and sits in a weird spot: it's very close to the Next-Tier in terms of Traditional Gaming Pipelines, with only a single-digit performance difference in the RTX Gaming Pipeline to its bigger brother, the RTX 2070.

So it's in this weird area: within the RTX 20-Series Product Stack it's Good Value, but in terms of the Generation and the Market Segment it's supposed to be for, it's actually quite bad due to being overpriced.

As such, IF (as the rumours suggest) the above is true for the Radeon 3080, then its mere announcement would mean NVIDIA would have to drop the RTX 2060's price to match, which would mean either taking the hit of selling at a loss or trying to do PR Damage Limitation, as their main selling point (which focused on its being "Good Performance Value") would instantly disappear.

Keep in mind that Turing Architecture GPUs are BIG (and Expensive) Processors to produce, with less than ideal Yields and Quality.

This means they're going to be expensive... NVIDIA already knew they'd be selling on a small margin, as opposed to the big margins they have been enjoying, especially with the GTX 10-Series.

•

What AMD really need to do is, well, what they're doing to Intel right now with Ryzen... basically forcing NVIDIA into a position where they just look considerably worse value (from a Consumer Perspective), while also doubling down on GPUOpen for Developers with an "Open Source" Catalogue that matches GameWorks in "Plug-and-Play" integration and provides AMD Optimisation.

Force NVIDIA into their Margins... into their Ecosystems... and most important CONTROL that Ecosystem.

People are mentioning this in regards to the G-Sync Compatible certification, where AMD essentially won out on the Adaptive Sync Standard. But at the same time, their refusal to focus on Branding and Quality Control with, say, a "FreeSync Basics" and "FreeSync Premium"... well, it's giving NVIDIA an "In" to pull a Microsoft-style "Embrace, Extend, Extinguish". And that's EXACTLY what NVIDIA will do, via charging for said G-Sync Certification, something that will be given only to the Premium Adaptive Sync Displays, which are already more expensive. So what's an extra $50-100 per Certification and Branding License, when at the same time they have THEIR branding all over the Premium Products?

•

Now the last thing I will note is that the Forza Horizon 4 demonstration of Ryzen 3000 + Radeon VII was actually quite telling in regards to the performance.

Sure, AMD themselves are denoting RTX 2080 Performance; but note how their Demonstration was 86 - 120 FPS at 4K HDR, which is RTX 2080Ti (86 - 100 FPS) performance territory in said game and quite handily beating the RTX 2080 (73 - 84 FPS).

+35% over Vega 64 in Battlefield V (DX12) … actually places it at ~72FPS (2160p) / ~168 FPS (1080p) Avg. Vs. the RTX 2080(Ti) ~67 (84) FPS (2160p) / ~148 (182) FPS (1080p)
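The Battlefield V projection above is just a baseline scaled by the claimed uplift. A quick sketch of that arithmetic, using only the figures quoted in the post; the Vega 64 baseline is not given there, so it is back-calculated here from the projected numbers, and the variable names are purely illustrative.

```python
UPLIFT = 1.35  # AMD's claimed +35% over Vega 64 in Battlefield V (DX12)

# Figures quoted in the post (average FPS).
projected_radeon_vii = {"2160p": 72.0, "1080p": 168.0}
rtx_2080 = {"2160p": 67.0, "1080p": 148.0}
rtx_2080_ti = {"2160p": 84.0, "1080p": 182.0}

# Vega 64 baseline implied by the projection (not stated in the post).
implied_vega_64 = {res: fps / UPLIFT for res, fps in projected_radeon_vii.items()}


def lead_pct(a: float, b: float) -> float:
    """Percent by which a leads b (negative means a trails b)."""
    return (a / b - 1.0) * 100.0


lead_over_2080 = {r: lead_pct(projected_radeon_vii[r], rtx_2080[r]) for r in rtx_2080}
lead_over_2080_ti = {r: lead_pct(projected_radeon_vii[r], rtx_2080_ti[r]) for r in rtx_2080_ti}

for res in ("2160p", "1080p"):
    print(f"{res}: implied Vega 64 {implied_vega_64[res]:.1f} FPS, "
          f"vs 2080 {lead_over_2080[res]:+.1f}%, vs 2080 Ti {lead_over_2080_ti[res]:+.1f}%")
```

On these numbers the projection lands between the RTX 2080 and the 2080 Ti at both resolutions, but it is only as good as the slide claim it is scaled from.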

And if as noted above, the rumour is true they deliberately did a Limited Run to switch to a Navi Full-Ramp... this would strongly suggest that Navi is likely to be even more impressive.

I'm somewhat excited to see the Reviews from Gamers Nexus and the like in the near future, because while what AMD showed off was fairly "Out-of-Context" Benchmarks with obviously very little in the way of Apples-to-Apples Comparisons, the projections based on their slide claims do suggest that they might actually be underplaying how good it could be for gaming.

It seems unlikely that an 8GB variant was ever in the works. Vega VII actually has fewer stream processors and compute units than Vega 64 and Fury X, but achieves a 30% increase partly due to higher clocks and partly due to the vastly improved pixel-pushing power of the ROPs and memory bandwidth. Vega VII has twice the number of ROPs and memory channels compared to Vega 64. Cut that to 8GB, and you lose two memory channels, leaving you with a slightly upclocked Vega 60.

Vega VII became viable when NVidia left the price/performance of their upper GPUs unchanged with the Turing launch. The RTX 2070 replaced the over 2 year old GTX 1080 with similar price/performance, while the RTX 2080 replaced the GTX 1080 Ti, again at a similar price/performance. The 2080 Ti was introduced at an entirely new price/performance level. Vega VII can then drop in at the RTX 2080 level for a similar price, while Vega 64 and 56 continue to be priced between the RTX 2070 and RTX 2060.

The very fact that the Vega VII and RX 590 exist at all is a good indication that Navi is still a ways away. It seems unlikely that AMD would bother rounding out the product stack around the Vega 64/56 only to disrupt that a quarter later with an RTX 2070 level GPU priced at $250.

All that being said, I wouldn't expect too much from Navi. It is, at the end of the day, still a GCN product. AMD had likely milked all the performance out of GCN that they could during their time on the 28nm process node. The HD 7970 and R9 Fury X were both on the same process node, and both GCN products. Vega 64 was essentially IPC-identical to Fury X, with a similarly clocked Vega 64 performing the same as the Fury X. We got a 30% improvement from the die shrink and improved power delivery for higher clock speeds. Now again we have another die shrink, with slightly higher clocks, but vastly increased ROPs and memory bandwidth at the expense of compute units and stream processors. The net gain? Again about 30%. So I really don't think we'll see significant IPC improvements or compute unit / stream processor gains until Arcturus.

Hi

I think the Radeon VII is good for Compute and Content Creators, and it is good to see an RX Vega 64 7nm refresh at last. I like the triple fan cooler and I hope the GPU is ~ 2 slot 40mm wide so I can fit a number of them in a PC case.

I think it is dead in the water as a high end gaming GPU versus Nvidia RTX 2080 GPU.

I think 4 banks of HBM2 with 2GB capacity would be fine on Radeon VII for memory bandwidth and performance and HBCC should address any need for > 8GB VRAM.

HBM2 is only available in 4GB modules as far as I know, but if they were made in 2GB modules with 2 1GB stack high instead of 4 1GB stack high then they should also be able to be clocked faster, giving higher performing memory.

The Fury X would perform faster these days if HBM overclocking wasn't disabled...time that was fixed now.

I own both R9 Fury X and RX Vega 64 Liquid and they perform ~ the same if I set the RX Vega 64 Liquid to run at the same GPU and CPU clocks.

Any performance increase I see with RX Vega 64 Liquid is due to higher GPU CLK and MEM CLK Frequency in most applications.

In some cases like Forza Horizon 4 DX12 I am convinced AMD don't bother to optimise the R9 Fury X/Fury/Nano Drivers at all, which is why the RX580 4GB is now faster than a Liquid Cooled R9 Fury X on that title. Memory capacity is not the issue.

Seems to me the base and boost clocks of the Radeon VII are similar to RX Vega 64 Liquid.

I am hoping AMD will allow DX11 Crossfire between RX Vega 64 Liquid and Radeon VII.

Sure I would end up with effective 8GB Vram but who really needs 16GB for gaming?

I hope at least AMD make sure the RX Vega 64 Liquid GPU and Radeon VII can run together in DX12 MultiGPU.

I own both RX Vega 64 Liquid and RTX 2080 OC cards now. They cost me ~ same price taking into account BFV game deal with the RTX 2080 OC.

I bought the RTX 2080 OC reluctantly but the RX Vega 64 Liquid is disappointing versus the RTX2080 OC.

The RTX 2080 OC I own is a 2 slot wide 40mm GPU, with a basic 2 fan cooler.

It beats the overclocked/undervolted RX Vega 64 Liquid hands down on everything I have tested so far with Adrenalin 18.12.1 driver without even overclocking the Nvidia GPU.

It is about 30% faster than RX Vega 64 Liquid in DX12.

I am glad to see I will be getting a FreeSync enabled driver for the RTX2080 OC soon, so I will still be able to use FreeSync on my monitor if I need to use it.

AMD Adrenalin Driver 2018 - 2019 stability is really bad for me and features like Radeon Chill and Automatic Overclocking need serious attention.

I am convinced that some work on Radeon Chill could really improve gaming power consumption and keep gaming performance reasonable as well.

Automatic Overclocking results in an instant PC System hang and Black Screen and Audio Buzz with the RX Vega 64 Liquid, starting from default power mode settings.

The RTX 2080 OC has an automatic overclocking feature as well. It works fine on the same PC, does not crash, and gives me another 3-5% performance depending on the options I set.

Bye.

AMD claims Vega II will match or beat the RTX 2080, though it's going to draw near 300W to do it... Still, $700 is way too much, especially with the high power draw and lack of ray tracing.

https://www.tomshardware.com/news/amd-radeon-vii-specs-pricing,38414.html

I don't see the missing ray tracing as a loss, since it's not really a usable feature in the 2080 anyway. In the 2080 Ti, it might be somewhat usable.

Aren't those 3 games really friendly for AMD GPUs?

It's more for people who buy a card for the long term, this is the high end realm, not the $200 mainstream realm, so something which may be commonplace in a year (you know how deep nVidia is involved in nearly every game studio) factors in considerably to the decision, especially with a card which demonstrates 60 and 75fps capability at 4K with full details, so there's even less of the "Well I'll buy it now and game at reduced quality until the next card is released" factor. Even if it's a minor part, the power consumption figures are also another factor, especially in warm climates and high electrical rates, especially when this card costs exactly the same as the RTX 2080 so there's no value pro to add to the list. If nVidia decides to axe $50 or $100 off the 2080 to appease the people who only see raw FPS numbers, AMD really won't be able to counter that since Vega II is tied to very expensive 16GB HBM2, which is the main reason Vega 64 and 56 continue to sell at such prices.

Those are all very good points. NVidia just removed one of the biggest reasons to get an AMD GPU when they announced that they will support FreeSync in some fashion. When I browse and read reddit/forums/whatever, the only reasons given to buy AMD are FreeSync and "nvidia sucks". I see this as a significant blow.

From the comeback AMD made with the HD 4000 series until last year, AMD was pretty much trading blows with nVidia, so the choice for most people was usually the less expensive model of the two, which was usually AMD. Then the cryptocurrency boom hit and blew up prices at the same time Raja stonewalled on Navi and pushed out an inferior Vega, and Lisa Su reorganized RTG to devote 75% of resources to the semicustom market, leaving nVidia virtually untouched at the mid and high end with solid perks of the 1070/Ti/1080 over Vega, such as lower power consumption and a lower price for either a higher performing or -very- slightly lower performing model. The RX 580 and GTX 1060 are the only matchup where they still trade blows, but both are finding themselves less and less able to sustain 60fps as detail levels increase.

The only GOOD side is that Lisa Su is being really smart with Navi and not rushing it, with next generation XBOX and PS5 depending on CHEAP, efficient GPUs which can deliver a steady 60fps at 4K they can't afford to give them a reason to potentially switch to an AMD/nVidia combination, not after having a lock all these years, though it also helps that both Intel and nVidia have a history of slacking off performance and innovation without the presence of a competitor. Still, let's hope she is going...

and not...

And AMD can go out and buy themselves a new headquarters since the former CEO sold it on a leaseback agreement.

Hi,

I will be testing the Nvidia FreeSync Driver as soon as it is released on an RTX2080 OC. I will let you know if it works.

Bye.

I just purchased an LG 4K monitor with FreeSync because the Acer monitor my wife had failed and actually caused Windows to not boot up. I gave my newer (3 y/o) Acer monitor to my wife; it is still good even though a part of the IPS panel is starting to go bad.

The LG has AMD Freesync which I thought I wouldn't be able to use since I have a Nvidia GTX 1070. Now you are saying that Nvidia is coming out with a GPU driver that will be able to use Freesync?

That would be great. I will be able to use my Freesync Monitor and see how it works.

Thanks for the update.

EDIT: I just "enabled" FreeSync in my monitor menu and I get video from my Nvidia GPU card connected via DisplayPort, but I lose my desktop and just see the background image and working taskbars, with no desktop icons or gadgets. I believe that this isn't normal. Maybe when the FreeSync Nvidia driver comes out on January 15, FreeSync will work correctly when "enabled" in my monitor menu.

Hi

I am still waiting for the Nvidia Driver. FreeSync is supposed to be supported on GTX 10 and RTX 20 series GPUs. The Driver is due for public release on January 15th as far as I understand it.

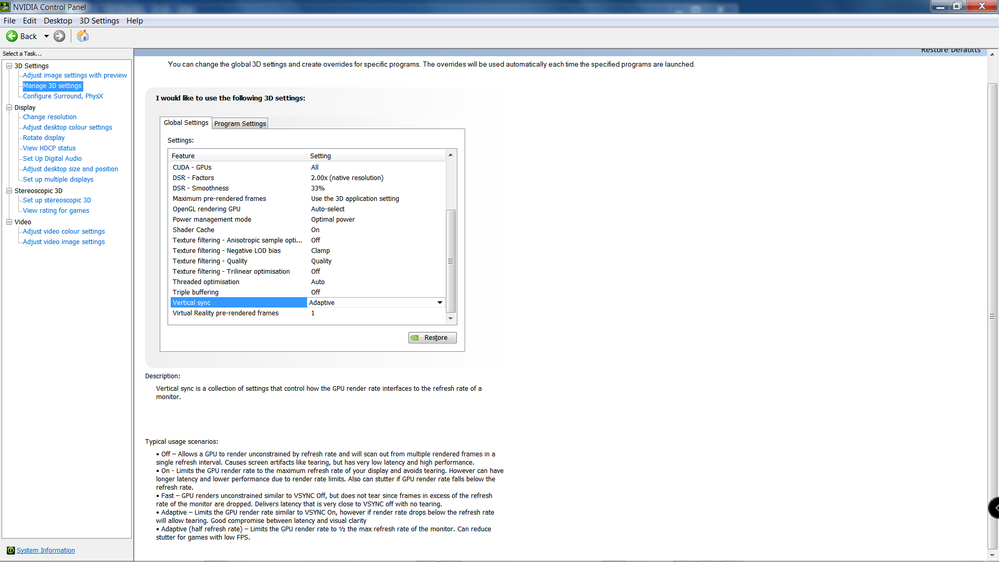

Your Nvidia GPU should have some options to help with lack of FreeSync Support depending on your situation:

See:

And also there is a good article on those options on AnandTech.

Search for "Fast Sync & SLI Updates: Less Latency, Fewer GPUs" with Google and you will find the link.

I was about to purchase new 4K Monitors for my RX Vega 64 Liquid and RTX2080 GPU's so the fact that both will support FreeSync Monitor is great news to me.

I will keep you posted when I get the driver installed.

Bye.

Thanks. I will be sure my Nvidia Control panel has "Adaptive" enabled for Vsync.

Thanks to you I learned that Nvidia will now start supporting FreeSync on monitors. I bought my LG 4K monitor knowing it was FreeSync compatible, but resigned to the fact that my Nvidia GPU card wouldn't be able to use it. That wasn't a concern at the time when I purchased the new monitor, but now it is icing on the cake if I am able to use FreeSync. It is a plus.

With Adaptive, vsync is enabled when FPS >= monitor refresh rate. When below it, vsync is off, so you can get tearing, especially with a 60hz refresh monitor.
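The rule described above fits in a few lines of Python. This is only an illustration of the behaviour as stated in the post, not NVIDIA's actual driver logic; the function names and the 60 Hz default are made up for the example.

```python
def adaptive_vsync_enabled(fps: float, refresh_hz: float) -> bool:
    """Rule as described in the post: vsync is on at or above the refresh rate, off below it."""
    return fps >= refresh_hz


def describe(fps: float, refresh_hz: float = 60.0) -> str:
    """Human-readable summary of what the rule implies for a given frame rate."""
    if adaptive_vsync_enabled(fps, refresh_hz):
        return f"{fps:.0f} fps on {refresh_hz:.0f} Hz: vsync ON (output synced to refresh)"
    return f"{fps:.0f} fps on {refresh_hz:.0f} Hz: vsync OFF (tearing possible)"


for fps in (45, 60, 90):
    print(describe(fps))
```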

You don't really get tearing when FPS is below the monitor's refresh rate. Tearing occurs when there is information from multiple frames in a single screen draw, and occurs when the frame engine delivers frames in excess of the monitor's refresh rate.

When frames drop below the refresh rate, you can get stuttering if the drops are relatively severe.

Adaptive sync in the nvidia control panel is different. It's just normal vsync when at or above the monitor refresh rate, and off when below it, as I mentioned. With Gsync/FreeSync, you won't get tearing in the Gsync/FreeSync range. If not utilizing one of those technologies, you most certainly do get tearing when below the refresh rate of the monitor, because with vsync off, the buffer can be overwritten in the middle of the screen refresh. With the fps above the refresh rate of the monitor, you are more likely to notice frame skipping.

I think you are referring to adaptive vsync. Adaptive sync (no "V") is the term for the open standard variable refresh tech that underlies AMD FreeSync, and now Gsync Compatible monitors as well. As for tearing below the monitor refresh rate, that is what triple buffering is for. You will still notice the stuttering however, especially if changes in frame rate are quite large during gameplay.

It is worth pointing out that it isn't as though AMD cards are missing ray tracing. Ray tracing is done via Microsoft DXR, an extension of DX12, which uses the already existing DX12 graphics and compute engines. NVidia simply added the RTX extensions to drive DXR over their dedicated RT cores. AMD could, in the driver, enable DXR ray tracing over the standard compute engine. Any DX12 capable GPU is capable of running DXR.

This data is actually pretty interesting.

The supposed Radeon VII is shown producing a Firestrike score of 27,400. The reference Vega 64 produced a result around 22,400. That is about a 22% increase. However, there are users with the Vega 64 Liquid edition that post scores over 28,000. Depending on the overclocking headroom on the Radeon VII, it may not be that much faster than a liquid Vega 64.
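For reference, the percentages behind those Firestrike scores work out as follows. A small sketch using only the three scores quoted above; the variable names are illustrative, and "over 28,000" is treated as exactly 28,000.

```python
def pct_change(new: float, old: float) -> float:
    """Percent change going from old to new."""
    return (new - old) / old * 100.0


RADEON_VII = 27_400        # leaked score quoted in the post
VEGA_64_REFERENCE = 22_400
VEGA_64_LIQUID_OC = 28_000  # "over 28,000" per the post, taken as a floor

gain_over_reference = pct_change(RADEON_VII, VEGA_64_REFERENCE)
gap_to_liquid = pct_change(RADEON_VII, VEGA_64_LIQUID_OC)

print(f"Radeon VII vs reference Vega 64: {gain_over_reference:+.1f}%")
print(f"Radeon VII vs Vega 64 Liquid (OC): {gap_to_liquid:+.1f}%")
```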

I don't base any decision on that worthless piece of garbage known as 3DMark. It's a meaningless synthetic benchmark that does not reflect real world usage. I HATE that the Unigine benchmarks fell out of favor. Heaven, Valley, and Superposition use a much more realistic load level, and don't shove "BUY ME!!!!!!" and adverts in your face the way 3DMark does with the free version.

I need to stop being lazy and do a round of testing with Superposition with my Fury Nano with the current driver set and the first Crimson release, see if there is such a large performance difference between old and new drivers that some sites are showing in their tests, where the Fury Nano under-performs an RX 580.

Hi,

RE: I need to stop being lazy and do a round of testing with Superposition with my Fury Nano with the current driver set and the first Crimson release, see if there is such a large performance difference between old and new drivers that some sites are showing in their tests, where the Fury Nano under-performs an RX 580.

Have you seen that the R9 Fury X is performing worse than RX 480 4GB on some titles?

Please take a look at Forza Horizon 4 and Resident Evil 2 if you can.

I looked at the Forza Horizon 4 benchmark. A GTX780Ti, with only 3GB of GDDR5, can get through that benchmark - I do see low memory warnings but the benchmark completes. A 4GB HBM R9 Fury X or Fury or Nano completes the benchmark fine, with no low memory warnings, but runs poorly versus an RX480 4GB or RX580 8GB. Why should that happen?

I have looked at Resident Evil 2 demo. That title does request a ridiculous amount of VRAM. I would have to purchase the title to test the real game.

Another one you might want to look at if you have an R9 Fury X is to use Sapphire Trixx + the last AMD Driver that allowed HBM overclocking (shortly before RX Vega 56/64 were launched) and test the Batman Arkham Origins demo. I see a +6.6% performance improvement by overclocking the HBM memory on an R9 Fury X. No explanation has been given for why I cannot overclock HBM anymore with AMD Drivers.

I think the R9 Fury X/Fury and Nano GPU's are deliberately neglected these days, which sends out a great message to people who bought those previously premium top end AMD GPU's. They were only launched latter part of 2015.

Thanks.

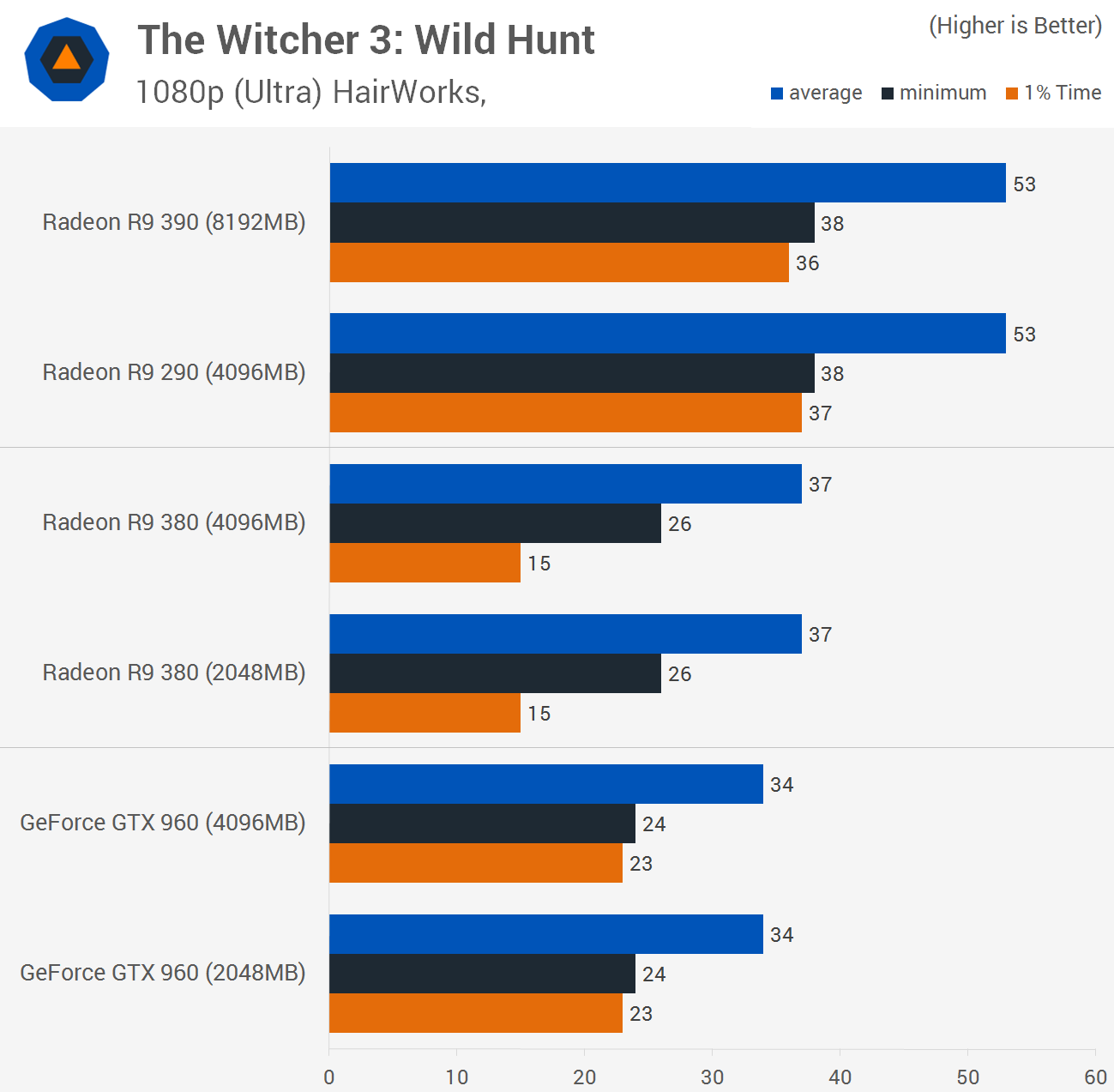

Worse than that, check out these three images, taken about a year apart from the Fury Nano, RX 480, and RX 580 reviews in The Witcher 3, a game which does not benefit from more than 4GB VRAM at 1920x1080 max details as shown by Techspot (bottom). The R9 Nano performs worse than an RX 580, RX 480, and even the R9 390, and as you said, the more powerful Fury X is trumped by them too.

amdmatt you're an engineer, can you shed any light on this issue?

Why do you think the Nano is underperforming? In the original image, the Nano is running at 58.5fps in Witcher 3 and this is the only time the Nano appears on the charts. The following chart shows the RX 480 running at 65.3 fps so you assume it is running better than the Nano?

What is telling here is the R9 390X and the GTX 980, the only GPUs to appear on both charts. The R9 390X ran at 55.9 fps in the original chart, below the Nano. In the second chart, it has miraculously jumped up to 67.4 fps. Wouldn't that data indicate that something (driver, game optimizations) led to improved fps for the entire line? Wouldn't you expect the Nano to still be above the R9 390X? The GTX 980 showed a similar progression between the two charts.
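One way to make that argument concrete is to use the R9 390X as an anchor and rescale the Nano's old result by the same gain. This is a back-of-the-envelope sketch, assuming (and it is only an assumption) that driver and game updates lifted the Nano by the same ratio as the 390X; the function name is illustrative.

```python
def rescale(fps_old_chart: float, anchor_old: float, anchor_new: float) -> float:
    """Scale an old-chart result by the gain the anchor card shows between charts."""
    return fps_old_chart * (anchor_new / anchor_old)


# Witcher 3 figures quoted in the posts above.
R9_390X_OLD = 55.9   # first chart
R9_390X_NEW = 67.4   # second chart
NANO_OLD = 58.5      # first chart (the only chart the Nano appears on)
RX_480_NEW = 65.3    # second chart

nano_estimate = rescale(NANO_OLD, R9_390X_OLD, R9_390X_NEW)

print(f"390X gain between charts: {R9_390X_NEW / R9_390X_OLD:.3f}x")
print(f"Nano rescaled to second chart: {nano_estimate:.1f} fps (RX 480: {RX_480_NEW} fps)")
```

Under that assumption the Nano would still sit above the RX 480 on the newer chart, which is why comparing numbers across the two charts directly is misleading.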

I do think the Radeon VII has been compared to Vega 64 Reference card in the AMD announcement, not an RX Vega 64 Liquid.

3DMark Firestrike scores can depend on what values are used in AMD Settings, what processor is used etc.

3DMark Firestrike is a DX11 test at 1080p.

I think these GPUs should be tested at 2K/4K, preferably in DX12, to compare them better.

So tests like Firestrike Ultra for DX11 and TimeSpy Extreme for DX12 would be a better way to compare the GPU's in 3DMark, ensuring default AMD Settings are used and the cards are tested on exactly the same system.

There are other benchmarks that could be used to compare the cards, many others exist.

Individual benchmarks are just indicators of how GPU's and drivers perform on those particular benchmarks and the results may not be reflected in performance on a game or other application.

We will all soon find out how much difference in performance there is based on independent reviews from tech press.

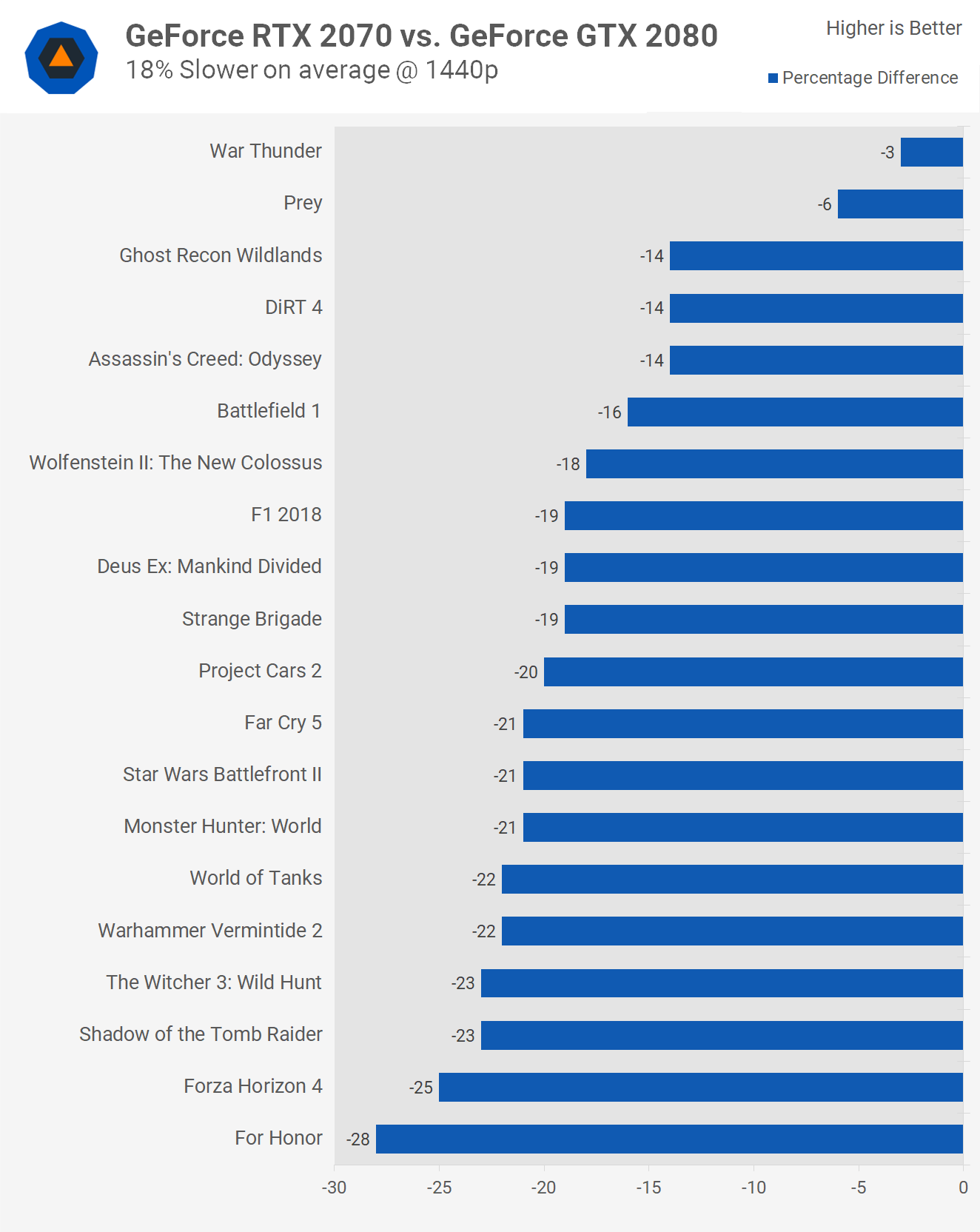

There are several problems. One is that it has no FP64 performance, so it's not a prosumer card like you'd slot in a Threadripper system for serious professional work then serious gaming. Two is that there are RTX 2080 models for $700, and as they are both more efficient and support ray tracing, they are cheaper to run and have more future proofing ability. And third, and the BIGGEST problem, is the $500 RTX 2070, and -possibly- the $400 Vega 64 reference models. As Techspot showed, the RTX 2070 is only 18% slower on average than the RTX 2080 (and Vega 64 is only about 25% slower than the 2080), but the 2070 is about 30% less expensive and the Vega 64 reference is about 43% less expensive. Plus we know nVidia is gouging the market because there is no competition from AMD, so these prices can change overnight if Vega II really does trump the RTX 2080.
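To put rough numbers on that value argument, here is a small sketch using the list prices and the Techspot-derived performance gaps quoted in the post. The dictionary layout and function names are illustrative, and real street prices will of course differ.

```python
# Relative performance is normalised to the RTX 2080 (1.00), per the post's figures.
cards = {
    "RTX 2080": {"price": 700.0, "rel_perf": 1.00},
    "RTX 2070": {"price": 500.0, "rel_perf": 0.82},             # ~18% slower
    "Vega 64 (reference)": {"price": 400.0, "rel_perf": 0.75},  # ~25% slower
}
baseline = cards["RTX 2080"]


def discount_pct(card: dict, ref: dict) -> float:
    """How much cheaper card is than ref, in percent."""
    return (1.0 - card["price"] / ref["price"]) * 100.0


def value_vs(card: dict, ref: dict) -> float:
    """Performance per dollar of card relative to ref (1.0 = same value)."""
    return (card["rel_perf"] / card["price"]) / (ref["rel_perf"] / ref["price"])


for name, card in cards.items():
    print(f"{name}: {discount_pct(card, baseline):.1f}% cheaper, "
          f"{value_vs(card, baseline):.2f}x perf per dollar vs RTX 2080")
```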

As far as those benchmarks go, they were done at different times, which could mean different versions of game.

I think this one is showing what you expected. Lies, Da** lies, and benchmarks.

---

On another note, buying a 2080ti is like buying a bare bones upgraded PC. About the only place it makes a little bit of sense is for 4k gaming, which is a tiny fraction (1.3%) of the gamer market, according to steam stats (64% at 1080p). The 2080ti still seems to have high failure rates if you read reviews and follow the nvidia 20-series forum. I can't believe so many are buying this grossly overpriced GPU.

Vega 64 pricing is a joke as you mentioned, but you can probably blame HBM. NVidia is priced competitively where it has competition, and now they support FreeSync, which removes one of the most compelling reasons to consider AMD. But when you consider how few people actually use a resolution where AMD can't compete, it's not as dire as it looks for AMD. The real battle is at 1080p and potentially 1440p.

That is the territory of the 2070, Vega, 1060, 480, 580 type of cards, where the best value wins. The 2080 is basically a slightly faster 1080 Ti. So I still see the VII as good news, because it appears that AMD will finally compete at the 1080 Ti level. It's progress. If someone wants to buy a new GPU, they have a choice between AMD and NVidia all the way up to the 2080 level. Ray tracing is a Microsoft DirectX API feature, not yet another NVidia proprietary feature. So AMD can support it.

I think a lot of people are jumping the gun. Vega II hasn't been released to the public yet. Don't forget, Vega 64 was billed as the Titan Killer. The competition would be a good thing, but Nvidia is better positioned to drop prices if they have to. Vega II should have been released two years ago.

Technically Vega II is just a rebadged Radeon Instinct MI50 with FP64 disabled. It really shouldn't be released at all, but AMD needs a high margin, high end card, no matter how power hungry or deafeningly loud it will be, until Navi is released in around July.


And to get back on topic, it seems that Tom's Hardware has confirmed it: Vega II is fast, but it's also hot and loud, as it's not using a liquid cooler (likely due to cost) but a 3 fan cooler that dumps hot air back into the case through the top. If it's aiming for the RTX 2080 in terms of performance, and the price is equal, which we know it will be as AMD has announced it at $700, same as an RTX 2080, then the power draw and noise produced become important factors.


I'm surprised they didn't advise people to "Just Buy it".

I would have liked to see a liquid cooled version, like the Vega 64 Liquid and Fury X, also available to those who prefer them and want more performance.

But those are even more expensive to make, more complex, and fitting one of them in a PC case can be a problem, never mind 2.

Regarding "Hot and Loud", let's see the benchmark data from multiple reviewers.

This article actually says this:

"Now that Nvidia’s reference design employs axial fans, it’s open season for AMD to kick waste heat back into your chassis as well."

I think having AMD reference designs like this is an improvement, not a step backwards.

If you have a good PC case with proper ventilation you should be O.K.


RE: Technically Vega II is just a rebadged Radeon Instinct MI50 with FP64 disabled. It really shouldn't be released at all, but AMD needs a high margin, high end card, no matter how power hungry or deafeningly loud it will be, until Navi is released in around July.

I think that AMD may have a number of manufactured dies that do not meet the electrical specification needed to go in a Radeon Instinct MI50, so they either destroy them or sell them in a high end gamer or prosumer GPU.

I think selling them to consumers is the better option.

At least people who look for an AMD GPU that can perform at RTX2080 level may have an option now.

I have not seen the latest reviews about the FP64 situation yet. Its FP64 performance has very likely been limited to less than that of a Radeon Instinct MI50, but it depends by how much.

It may have significantly better FP64 performance than Vega 64.

RE: no matter how power hungry or deafeningly loud it will be

We need to wait for reviews.

The power consumption figures do look high.

However, that cooler on the reference design looks a vast improvement to me.

Triple fans, and more specifically, a Vapor Chamber cooler - only seen on the Sapphire Nitro+ Limited Edition Vega 64 and 56?

It looks like the card might be able to fit in a real 2 slot space without blocking more than 1 additional PCIe slot.

That cooler might perform well enough to keep noise levels down.

I look forward to seeing full reviews, launch pricing, and availability of this GPU in the next few days.

No matter what happens though ... I think driver stability on AMD high end GPUs (RX Vega 64, 56, Fury X, Fury, Nano) needs improving now.


After spending this not even 3 years ago, and seeing it decline in performance in benchmarks from AMD's trusted third party reviewers, to a point where it performs worse than slower cards, my next card may be nVidia out of spite, and I haven't used an nVidia card since 2004.

And I'm also the same person who, by choice, bought the ill performing X800GTO, X1950GT (twice, one AGP, one PCIe when I upgraded to a motherboard which did not support AGP), and HD 2900Pro.


Hi,

I understand your point. I could not even get hold of an R9 Nano at launch for the MSRP.

I own a number of R9 Nanos now, some used, some bought new on sale.

I used to run them in DX12 MultiGPU / DX11 Crossfire, but I also purchased R9 Fury Xs second hand and I mostly run those instead now.

At least I didn't spend more than 250 on any of the Nanos.

Two R9 Fury Xs cost me 350 + some time repairing one of them.

Buying Fiji GPUs now in 2019 doesn't make sense, since a new RX Vega 56 is 300 + free games and a Vega 64 is 400 + 3 free games now.

However I bought a new RX Vega 64 Liquid and it has been so unstable I took it out of my PC and bought a new, 'low end', Palit RTX2080 OC.

RE: my next card may be nVidia out of spite, and I haven't used an nVidia card since 2004.

We have something in common here. The last new Nvidia GPU I bought was in 2014, a GTX 780 Ti. It still runs great and is fine for 1080p. I use it as my main GPU in one of my PCs.

Today the Nvidia GPUs, at the same price point, are simply better and their drivers are far more stable from what I see.

I am about to test this RX Vega 64 Liquid in a new Ryzen build, and if it still causes crashes/PC hangs/a corrupted Windows 10 OS it is getting returned under warranty and I will see if I can get a refund. From what I see though, I may get told that Vega crashing is normal behavior.

Bye.


Pretty similar experience for me. My last NVidia GPU was the GTX 8800 in 2006. While I didn't pick up the Radeon cards you mentioned, I did pick up the original Phenom and several Bulldozer based processors despite the vastly superior Intel processors at the time. I have never actually built a system using Intel, but those original Phenom processors were probably some of the worst I dealt with from a quality standpoint. Phenom II was vastly superior.


RE: I think this one is showing what you expected. Lies, Da** lies, and benchmarks.

For one example I looked at in detail, the R9 Fury X, Fury, and Nano are definitely reported as underperforming versus the RX 580 4GB in Forza Horizon 4 on the same benchmark setup and date. In that case it is not because of memory capacity.

There is an increasing trend where the RX 580 4GB performs better than the R9 Fury X in new benchmarks.

Also, requests for fixes / bug reports RE: Adrenalin drivers for the R9 Fury X are ignored.


I think that Forza Horizon 4 uses a lot of newer features not found in older GPUs. Tonga and Fiji, for example, are strongly tessellation bound and can have poor performance if a game engine leans heavily into that. Polaris and Vega improved the tessellation performance and so don't suffer the same fate.

It is interesting how in that title the Polaris GPUs also beat out the GTX 980 Ti, and the Vega 64 Liquid runs between the GTX 1080 Ti and RTX 2080