Is 20GB VRAM Necessary in Future Gaming?

Hello AMD community,

As we continue to see advancements in gaming technology, it is natural to question what future gaming requirements might look like. One topic that comes up frequently is the necessity of having 20GB VRAM or more for gaming purposes. While some high-end graphics cards currently offer this amount of VRAM, is it really necessary for future gaming?

I am curious to hear your thoughts on this matter. Do you think that 20GB VRAM will become a necessary requirement for gaming in the future, or do you believe that 20/24GB VRAM is overkill? Are there any particular factors or developments in the gaming industry that might make 20GB VRAM more necessary in the future?

Personally, I am on the fence about this topic and would love to hear some insights from others in the AMD community. Let's start a discussion and see where it takes us!

When I take a look at some badly optimized AAA games like Hogwarts Legacy or Forspoken, the answer is yes!

I've been playing Dead Space Remake at max settings with RTAO at 3840x1080, and according to AMD's own Adrenalin monitoring software, I'm using about 10GB already. So 8GB of VRAM is fast becoming the bare minimum. As for Returnal and Forspoken, I ain't too concerned, as my rigs have 32GB of system RAM and my cards have 24GB and 16GB. Those games don't interest me, but yes, I can see a time when 12GB becomes the bare minimum, even for 1080p gaming. With 16GB of VRAM or more, one cannot be blamed for feeling more confident about the longevity of their card compared with 8GB and 10GB cards.

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2

Maybe 20GB is overkill at the moment, but in two years' time it will be the sweet spot. My advice if you are buying a graphics card today: minimum 8GB for 1080p, 12GB for 1440p and 16GB for 4K. Ideally, go for a 12GB or 16GB card.

The RX 6750 XT or RX 6800 are great cards.

My opinion on the matter is that the more VRAM, the better.

As per this comment from a google search:

VRAM's purpose is to ensure the even and smooth execution of graphics display. It is most important in applications that display complex image textures or render polygon-based three-dimensional (3D) structures. People commonly use VRAM for applications such as video games or 3D graphic design programs.

I agree with the rest of the guys. In short, the more VRAM (and RAM) the better. So yes, I believe 20GB of VRAM will eventually become the norm, just as capacities have grown for other components.

For example, think of:

Storage:

We went from HDDs to SSDs, and now to M.2 SSDs.

From 540MB to 2TB!

RAM:

From 4GB to 16GB of RAM, today's understood "standard" for gaming.

Display:

From 17" to 34" ultra-wide (curved)

Refresh rates from 45Hz to 160Hz+

Overall, I believe the "minimums" will continue to grow as games become more and more demanding (and immersive). Some will say that it's the software that drives the hardware's growth. If you believe it, as I do, then tomorrow's games (developers) are going to require more VRAM naturally.

As a gamer, I expect tomorrow's games to be visually stunning and smooth (no lag, no tearing, high FPS, high refresh rates, photorealistic, etc.). The GPU, and its VRAM, will have to continue to "grow" to deliver these features.

Your biggest fan!

CPU: AMD Ryzen 7 5800X3D GPU: AMD Radeon RX 6800XT

The way technology is advancing in both computer hardware and software, tomorrow's games will probably equal today's movie-studio CGI quality, which will be super realistic.

I want 100GB...just cuz. I want the best specs around....just me?

Nah mate, not only you. To be honest, I'm dreaming of "socketed GPUs", where you just get a GPU mobo with a set amount and type of memory (e.g. 40GB of HBM2) but can upgrade the chip later down the line, for example by pulling the card out, taking off the cooler and plonking a new chip into an LGA socket or something, repasting and stuff being a given.

I mean, we had naked CPU dies on sockets for a long time, and everyone who ever repasted a GPU was dealing with naked dies as well, so "damaging the chip" is not even an argument against it. Maybe latency is, though 😞

Look at recent titles like Company of Heroes 3: it hogs up to 11GB of VRAM on medium texture detail and high settings, leading to crashes and frame drops from a full VRAM buffer on my 6700 XT, while "ultra" is only available with more than 16GB of VRAM.

Yes, 20GB will eventually become absolutely necessary in games coming out in the future - especially with HDR, high resolutions and refresh rates getting more and more mainstream lately.

Probably not “necessary” but worth having. Just remember that we got 8 gigs back in 2015, and with Polaris even in the midrange. Now, almost 7–8 years later, you see current offerings still starting at 8 gigs. So we could build and sell an RX 570 8GB, but we can't do 16GB for the upcoming midrange SKUs? Well, at least I hope they come with 16GB. Yeah, I'm looking at you, Radeon 7800 and 7700...

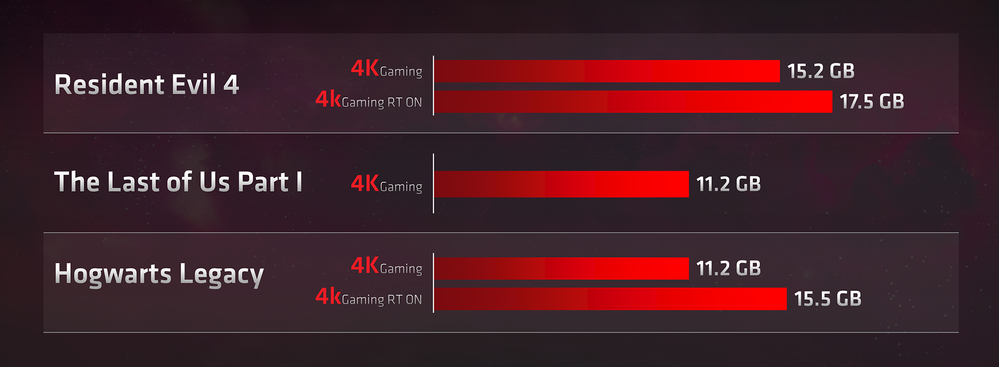

However, with games like Hogwarts, TLOU P1 and many more in the next years I consider 8 at the minimum, 16 in the midrange and 20 for the top dogs as needed depending on quality settings and resolution.

HardwareUnboxed did warn its viewers that while the RTX 3070/3070 Ti were excellent cards, due to their 8GB of VRAM, their usefulness for future gaming at high res + max setting + RT would be kneecapped by insufficient VRAM.

Actually, I feel for the owners of these cards, even the RTX 3080 10GB, who might have gotten their cards back when they were priced at ridiculous levels. Recent games like Hogwarts Legacy, RE4R, Forspoken, and TLOU Pt 1 are foreshadowing what future games may require: 12GB would become the new 8GB standard for mid-range cards.

Mid-range cards like the RX 7800 XT should be armed with 16GB of VRAM. I honestly think nVidia pulled another crap move by arming the RTX 4070 Ti with just 12GB of VRAM, and it looks like the RTX 4070 will have the same. Yes, that is 50% more VRAM than their predecessors, but like them, these are very capable cards that could do with more VRAM. It looks like the same nVidia early-obsolescence masterplan for these cards, much like the RTX 3070/3070 Ti.

Just look at the RTX 4070 Ti 12GB's competitor in both price and performance: the RX 7900 XT has 20GB of VRAM and would have better longevity than the RTX 4070 Ti. Even Intel's Arc A770 has 16GB of VRAM, and has a better chance of lasting longer than the RTX 3080 10GB/3080 12GB/3080 Ti 12GB, and obviously longer than the RTX 3070/3070 Ti.

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2

Is 20GB VRAM Necessary in Future Gaming?

I think definitely yes. We are already near the 20 GB border if you play games at native 4K resolution with ray tracing (RT) on.

From the blog article "Building an Enthusiast PC" by Matthew Hummel:

Games keep getting more demanding for resources. There are games that gladly use several cores at the same time, if it's available, and don't really work well with just 16 GB main RAM -- even if you don't play it in 4K resolution. Star Citizen (Alpha) is such a game and more are coming. So I believe we have arrived in that future already. The next graphic tech invention, after RT, will maybe push us over 20 GB Video RAM usage in 4K gaming.

20GB is still more than you'll need 99% of the time when gaming. However, if you're planning on playing brand new AAA titles at 4K, you're going to start seeing more of a need for it. If you're just planning on playing at 1080p, 12GB should get you by for a couple more years, but 16GB will ensure that your card won't be obsolete by the time you're tempted to buy a new one.

I was commenting about the VRAM buffer issue in other forums, and I'm taken aback that nVidia fanboys who own the RTX 3060 Ti/3070/3070 Ti aren't furious about their purchases, and especially at nVidia. nVidia knew that 8GB of VRAM isn't quite enough for newer games going forward, yet chose to put 8GB on these relatively powerful cards that are capable of more. They defend their purchases by saying it's okay to reduce texture quality, image quality and even RT to run within the 8GB VRAM buffer... What about nVidia's much-vaunted PQ and RT performance? Suddenly it's okay to reduce PQ and do without RT just to NOT saturate the 8GB VRAM buffer?

Basically, this is planned early obsolescence: no matter how fast these cards are, once the VRAM buffer is saturated, performance will tank, as exemplified by some recent AAA games. Heck, had I gotten one of these cards, I'd be pretty upset... but then, I'd be even more upset had I gotten an RTX 3080 10GB, as games are perhaps beginning to exceed that buffer size too.

Below is a screenshot of Dead Space Remake. There is traversal hitching and very mild stuttering here and there, but as a whole, the game runs pretty well. I have FreeSync set at 120Hz, and the game is pretty darn smooth 99% of the time. I cropped the screenshot by removing part of the right side of the screen. Note the 'Mem': 11.2GB is allocated and 10.05GB is utilized. The game was originally running at 3840x1080 natively, max graphics settings + RTAO.

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2
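
To put the figures in the post above next to common card sizes, here is a minimal Python sketch. The 11.2GB allocated and 10.05GB utilized values come from the post; the list of card capacities and the `headroom` helper are illustrative assumptions. Games often scale their allocation to the VRAM that is available, so a negative number here suggests pressure on the buffer rather than a guaranteed failure.

```python
# Figures reported in the post (Dead Space Remake, 3840x1080, max settings + RTAO).
allocated_gb = 11.2
utilized_gb = 10.05

# Illustrative card capacities (an assumption, not taken from the screenshot).
card_sizes_gb = [8, 10, 12, 16, 24]


def headroom(capacity_gb: float, demand_gb: float) -> float:
    """Return spare VRAM in GB; negative means demand exceeds capacity."""
    return capacity_gb - demand_gb


for size in card_sizes_gb:
    vs_used = headroom(size, utilized_gb)
    vs_alloc = headroom(size, allocated_gb)
    print(f"{size:>2} GB card: {vs_used:+.2f} GB vs utilized, {vs_alloc:+.2f} GB vs allocated")
```

On these numbers, only cards with 12GB or more keep a positive margin against both the utilized and the allocated figure.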

Well, I do have an RTX 3070, but I never play newly released games; for example, I just got started on GTA V. I wait years before getting games and even longer before getting newer GPUs. I play GTA V pretty much maxed out at 2560x1440 at 165Hz. It is the most visually impressive game I have played recently, and 8GB of VRAM is more than enough. IMO the game has totally awesome visuals, with no stutter, lag or other issues whatsoever. By the time I decide to buy this current generation of games and upgrade my GPU, there will be even newer cards with more VRAM and better specs than the latest and greatest now. I am a casual gamer and it takes me time to work through games. By the time I get to a game, almost all of its kinks have been worked out, and by then I have a newer GPU that is more than capable of handling it fully maxed out, most likely with no issues. This has worked out for me for years, and IMO, for a casual gamer like me, it is the best way to go.

Over the years I've gone through a number of GPUs. From the earliest cards like the 3dfx Voodoo, or the old ATI Rage/Xpert cards, up to the HD4350 I had my first foray into OC with... Then my R7 250 with a whole GIGABYTE of GDDR5 (back when most cards were still using GDDR3) and on to my RX570 with 4GB of GDDR5. That was considered mid-range a few years ago, and you could still get Nvidia GPUs with 2 or 3 GB of VRAM. The performance floor has been rising for years, and will continue to rise. Unlike consoles, which have a new iteration every 5 to 7 years, PC gaming has been a never-ending arms race since the beginning. What is bleeding edge now will be mid-range in 2 years, outdated in 3.5 and obsolete in 5.

My recently installed RX6800XT has been mind-boggling for visuals since I'm still locked to 72fps at 1080p due to not having a monitor upgrade. The card barely spools up. Pushing high framerates with the visuals at or near max at 1440p will probably not make this thing work hard. I simply don't have the budget for 4K, unless someone surprises me with a 55" TV to hang on the wall. Then I can see myself playing a few games from the La-Z-Boy. But will it cut the mustard in a couple of years? Probably not. But if I don't have an upgrade path set by then, I'll do what I've always done in the past: Lower the settings until it's playable. If some of my games stop supporting my hardware, then I'll upgrade.

What I would love to see is a GPU "kit" that you can upgrade, much like what we do with the rest of our rigs. Sell me a PCB with the VRMs, I/O and power connector in place. Have it kitted out with a socket for the GPU, and slots for VRAM. Let me figure out my own cooling solution. Have those PCB designs supported for 5-6 years, and then you can bombard us with new modules every year or two. If you cheaped out on your PCB, you have a smaller window of options, just like you do with a PC MoBo.

@Axxemann wrote:

What I would love to see is a GPU "kit" that you can upgrade, much like what we do with the rest of our rigs. Sell me a PCB with the VRMs, I/O and power connector in place. Have it kitted out with a socket for the GPU, and slots for VRAM. Let me figure out my own cooling solution. Have those PCB designs supported for 5-6 years, and then you can bombard us with new modules every year or two. If you cheaped out on your PCB, you have a smaller window of options, just like you do with a PC MoBo.

This is a very good idea. I wonder why no GPU makers have done this yet? Maybe they're afraid of getting too many customers, because this would have crowds storming the gates to buy. And just think about the customer loyalty ... Until the competitors copy the idea, of course. 🙂

That idea may sound great and all, but the GPU makers won't allow that, especially nVidia, because it'd put their planned early obsolescence masterplan (due to insufficient VRAM buffer) in jeopardy. How else are they gonna get their customer base to upgrade if this were possible?

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2

@MikeySCT wrote:

That idea may sound great and all, but the GPU makers won't allow that, especially nVidia, because it'd put their planned early obsolescence masterplan (due to insufficient VRAM buffer) in jeopardy. How else are they gonna get their customer base to upgrade if this were possible?

Yeah, you have a good point there. Their agreements with 3rd-party GPU makers/OEMs probably forbid such implementations today.

If they allowed OEMs to do this, I guess Nvidia would then have to offer something unique and desirable in every new GPU core generation to get customers to upgrade, or simply forbid OEMs from allowing the GPU core itself to be replaced. They could also rearrange the pin connections for every new generation to make it impossible. Even then it'd be hard to sell complete cards, since users could just spice up their old card with more VRAM and stuff. Knowing how Nvidia operates in the market, they'd probably try to block this type of user customization in hardware, like they did with crypto mining on their consumer GPUs.

IIRC, some of the AIBs were allowed to do this, that is, double the capacity of their cards. The GTX 680 came with standard 2GB VRAM, but the AIBs were allowed to double that capacity to 4GB.

But this is all solidly in the past and nVidia will NEVER, EVER allow this any more. It runs contrary to their early obsolescence masterplan. I mean, imagine a 16GB VRAM RTX 3070 Ti, or an RTX 3080 16/20GB, powerful cards that have sufficient VRAM on call, why upgrade to a 12GB RTX 4070?

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2

@MikeySCT wrote:

But this is all solidly in the past and nVidia will NEVER, EVER allow this any more. It runs contrary to their early obsolescence masterplan. I mean, imagine a 16GB VRAM RTX 3070 Ti, or an RTX 3080 16/20GB, powerful cards that have sufficient VRAM on call, why upgrade to a 12GB RTX 4070?

I agree with that. And when you mention Nvidia's masterplan, I get an image in my head of a man with a white cat on his lap, stroking the cat gently, and the cat has a diamond necklace around her neck. Not sure why I get that association though. 😜

If Nvidia won't do it, maybe AMD will? 😁

S3, Matrox and some others used to offer upgradeable memory. I had an S3 Trio and a ViRGE with modules upgraded from 2MB to 4MB.

Interesting, I didn't know that. 👍 So it has been done before, although at a smaller level.

I had my little Riva TNT 16MB boy back in the day. What a powerful tool.

Well, the hardware requirements for Gollum came out recently, and among other things it wants 32 gigs of RAM (XD!)

Like many have said before, optimization is the key. When devs are counting on turbo features like DLSS instead of code review, then soon enough even 20 gigs may not be enough.

Are you talking about RAM, or VRAM? As for system RAM, I think a 16GB minimum will soon be a thing of the past, as even now when I play some games, I see total system RAM usage hitting 14GB - 15GB. Even DSR, which isn't known to be a system RAM hog, is chewing up 13.3GB in the screenshot I'd posted. RAM usage is already coming close to 16GB with certain games, and some, like Returnal, require more than 16GB for higher resolution gameplay.

As for VRAM, 20GB is absolutely fine. In a Moore's Law is Dead vid I'd seen, he interviews a game dev who said that basically 16GB is fine for now, though it doesn't hurt to have more. It's 12GB of VRAM that I believe will be an issue, sooner rather than later.

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2

@MikeySCT wrote:

As for system RAM, I think 16GB minimum would soon be a thing of the past as even now when I play some games, I see system total RAM usage hitting 14GB - 15GB. Even DSR which isn't known to be a system RAM hog, is chewing up 13.3GB from the screenshot I'd posted. Already RAM usage is coming close to 16GB with certain games, like Returnal require >16GB for higher resolution gameplay.

I agree. Therefore I'd advise at least 32 GB for gamers building/buying a new PC today. I went all out and bought 128 GB for my new PC. It's not all for gaming though. I do other things with my PC too, like programming, video and image editing, and running VM instance(s).

As for gaming, I play Star Citizen (SC) mostly, and that demands more than 16 GB of RAM. I'm not certain how much VRAM it demands, but it's still an Alpha, so it's under development and unoptimized. However, I also hate closing down other programs when playing games, such as the browser and development tools. The Chrome browser alone uses about 12 GB with lots of open tabs. I'm also planning to use PrimoCache with a 64 GB cache for my 2 TB gaming disk. That's half of my main RAM. I haven't tried it yet, but I think it may speed up a game like SC, which loads assets from disk heavily.

32GB is, or will soon be, the new '16GB' standard for more and more gaming rigs. After upgrading my main rig to 32GB and building my 2nd rig, I decided to go 32GB on that one as well. And savvy gamers will realize that 12GB is barely enough for newer games, for now, even at 1440p (or even for new so-called 'optimized' games at 1080p).

The new '8GB' VRAM standard is 16GB of VRAM, and some are gonna learn that the hard way, just as those who'd gotten the RTX 3070 series are beginning to realize. I was surprised to find that MSI actually had a 20GB RTX 3080 Ti... but nVidia canceled this product line. That card would actually still be quite useful now...

DaBeast02 - AM4 R9 5900X | GB X570S Aorus Elite AX | 2x 16GB Patriot Viper Elite II 4000MHz | Sapphire Nitro+ RX 6900 XT | Acer XR341CK 34" 21:9 FS | Enermax MAXREVO 1500 | SOLDAM XR-1 | Win11 Pro 22H2

@MikeySCT wrote:

The new '8GB' VRAM standard is 16GB of VRAM, and some are gonna learn that the hard way, just as those who'd gotten the RTX 3070 series are beginning to realize.

I wouldn't know what problems the RTX 3070 users are experiencing, since I don't buy new Nvidia cards. 😁 I did buy a used GTX 1080 Ti though, because it was cheap, but that was some time ago. The guy I bought it from had already bought an RTX 2080, so the damage was already done, so to speak. 😅

It'll be exciting to see what AMD's next-gen GPU is going to be like. I have a hunch it's going to be better than the competitor, which is struggling with power requirements in the current generation. AMD has much better efficiency in performance/watt and therefore has more headroom for the next gen, I believe.

I agree with you about the new standard being 16 GB of VRAM. I think that's the minimum a new GPU should have today, unless one wants to upgrade again soon or only plays games at 1080p. Personally, I want to run games in 4K if they provide it, and then 16 GB of VRAM can be too little. Resident Evil 4 in 4K uses 17.5 GB of VRAM, for example. Not that I plan to play that game, but anyway.

@petosiris @MikeySCT Good morning guys!

I was having a conversation with one of my colleagues (he's in the Ryzen Product Marketing Team) a couple of days ago, and he mentioned/believes that by the end of this year, the new standard for RAM is going to be 64GB. I argued that it will take a little longer, probably by the end of 2025 given the current AM5 install base in the US.

Your biggest fan!

CPU: AMD Ryzen 7 5800X3D GPU: AMD Radeon RX 6800XT

@Sam_AMD wrote:

Good morning guys!

I was having a conversation with one of my colleagues (he's in the Ryzen Product Marketing Team) a couple of days ago, and he mentioned/believes that by the end of this year, the new standard for RAM is going to be 64GB. I argued that it will take a little longer, probably by the end of 2025 given the current AM5 install base in the US.

Good morning, Sam!

That's interesting about main memory. I bought 32 GB RAM when I built a PC 12 years ago so I don't doubt many people will need 64 GB soon.

What more did he say about the future? Spoilers are welcome. 😛

Had 16GB and got along real nice, but 32GB feels better.

I feel ambivalent about 32GB versus 64GB.

There is no doubt that 64GB can be very useful for small-time creators like me. As for gaming, it will take a bit longer for 64GB to become standard, although DDR5 is getting into the affordable zone.

When doing productivity work, the memory occupancy shown in Task Manager can be misleading if you don't dig deeper. Here is what I mean.

While at 16GB I had 60% to 80% occupancy depending on workload. At 60% you may think: "OK, I don't need more". You would be wrong! Doing the same thing with 32GB, I now sit at 50% to 70%, so the system is using more because more is available to be used. Remember that Windows still uses a swap file/page file. If you really want to see your system scream, disable it and check it out. 😁
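
The percentages above are easier to compare once converted to absolute figures. A minimal Python sketch, using only the numbers quoted in this post (the `used_gb` helper name is made up for illustration):

```python
def used_gb(total_gb: float, percent: float) -> float:
    """Return memory in use (GB) for a given installed total and occupancy %."""
    return total_gb * percent / 100.0


# Figures quoted in the post: (installed GB, low occupancy %, high occupancy %)
observations = [(16, 60, 80), (32, 50, 70)]

for total, low, high in observations:
    lo_gb = used_gb(total, low)
    hi_gb = used_gb(total, high)
    print(f"{total} GB installed: {lo_gb:.1f} to {hi_gb:.1f} GB in use")
```

So 60% to 80% of 16GB is about 9.6 to 12.8GB, while 50% to 70% of 32GB is about 16.0 to 22.4GB: the percentage went down, but the same workload is holding noticeably more memory.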

This kind of reminds me of when I had 128MB on Windows 98 and turning off the page file actually increased gaming performance.

As for office work, 16GB is still very generous for the time being. Dell doesn't even give us the option of 32GB on some laptops yet, at least on the basic 12th-gen Intel models.

For heavier office workloads like Revit, 32GB is so slim that we are waiting for the 64GB Precision models to test it out.

Let's wait and see how this rolls out.

About:

"This kind of reminds me of when I had 128MB on Windows 98 and turning off the page file actually increased gaming performance.

As for office work, 16GB is still very generous for the time being. Dell doesn't even give us the option of 32GB on some laptops yet, at least on the basic 12th-gen Intel models."

I couldn't agree with you more! I remember the 128MB days. Gosh, let's go back a bit more, to the days when we had to update CONFIG.SYS and AUTOEXEC.BAT... remember? (DOS days) LOL.

Your biggest fan!

CPU: AMD Ryzen 7 5800X3D GPU: AMD Radeon RX 6800XT

@petosiris Hi!

I have 32GB in my gaming rig (it's an AM4 system), and I'm happy with my reference RX 6800 XT (16GB VRAM). In fact, when I built it, I purposely future-proofed it so that I don't have to upgrade it for a while (3 years or so). Although I'm itching to build a full-blown AM5 monster so that I can pass my current rig to my boy. We'll see...

About my colleague and whether he said anything about the future. To tell you the truth, not much, we didn't talk about our roadmap although I can tell you the entire team is excited about our 3D tech being the "pillar" of our new Ryzen CPUs.

Your biggest fan!

CPU: AMD Ryzen 7 5800X3D GPU: AMD Radeon RX 6800XT

@Sam_AMD wrote:

I have 32GB in my gaming rig (it's an AM4 system), and I'm happy with my reference RX 6800 XT (16GB VRAM). In fact, when I built it, I purposely future-proofed it so that I don't have to upgrade it for a while (3 years or so).

I did the same with my latest build, thinking ahead and future-proofing it somewhat because I don't like to build new PCs too often. Also, the technology in both CPUs and GPUs is jumping ahead in, as we say in my country, 7-mile leaps. So I can sit on the fence for at least one or two more generations now.

Although I'm itching to build a full-blown AM5 monster so that I can pass my current rig to my boy. We'll see...

Good idea. I'm sure he'll be ecstatically happy with that. 🙂

About my colleague and whether he said anything about the future. To tell you the truth, not much, we didn't talk about our roadmap although I can tell you the entire team is excited about our 3D tech being the "pillar" of our new Ryzen CPUs.

Yeah, me too. I think we all are waiting for the 3D tech to be included in all AMD CPUs, once the heat problem is solved once and for all. And maybe we get 3D tech in GPUs too ... sometime. 😉

While we're talking about marketing, I was kind of dismayed when the painter code names were abandoned on CPUs in favor of "Granite Ridge" and "Strix Point". There are many more famous painters AMD could use, I think. The Ryzen logo has a circle that looks like it's painted with a broad brush too, so I think painter code names would have been fitting a little longer. But done is done, so I'll just have to accept the "ridge", "point", "peak" and whatever comes next. 😅

Yeah, me too. I think we all are waiting for the 3D tech to be included in all AMD CPUs, once the heat problem is solved once and for all. And maybe we get 3D tech in GPUs too... sometime.

Heat has always been the thorn in the side of system architects, HD designers, SoC Eng, EEs, etc. Who knows where we would be today if we didn't have to worry about heat, heat dissipation, conduction, etc? I would be surprised if we do put 3D in our GPUs. Do you remember AMD's HBM? That to me was already revolutionary.

I have to ask you, about your avatar, is that a WWII pilot?

Your biggest fan!

CPU: AMD Ryzen 7 5800X3D GPU: AMD Radeon RX 6800XT

@Sam_AMD wrote:

Heat has always been the thorn in the side of system architects, HD designers, SoC Eng, EEs, etc. Who knows where we would be today if we didn't have to worry about heat, heat dissipation, conduction, etc? I would be surprised if we do put 3D in our GPUs. Do you remember AMD's HBM? That to me was already revolutionary.

Yes, I remember HBM, and HBM2 later. HBM was indeed revolutionary. AMD was the main inventor, together with Samsung and Hynix. But then AMD stopped using it, for some reason. I heard that Nvidia's Hopper GPU will use Hynix's HBM3 with 819 GB/s of bandwidth. Maybe AMD will too, instead of GDDR6(X), next time? Exciting times!
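
For context on where a figure like 819 GB/s comes from: peak memory bandwidth is bus width times per-pin data rate, divided by 8 to convert bits to bytes. The Python sketch below assumes a 1024-bit interface per HBM3 stack running at 6.4 Gb/s per pin; those two inputs are not stated in the post and should be checked against the HBM3 specification, and the function name is illustrative.

```python
def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: (bits per transfer * Gb/s per pin) / 8 bits per byte."""
    return bus_width_bits * pin_rate_gbps / 8.0


# Assumed HBM3 per-stack parameters (not from the post): 1024-bit bus, 6.4 Gb/s per pin.
hbm3_stack_gbs = peak_bandwidth_gbs(1024, 6.4)
print(f"HBM3, one stack: {hbm3_stack_gbs:.1f} GB/s")
```

Under those assumptions the result is 819.2 GB/s per stack, which matches the number quoted above; a GPU with several stacks would multiply that accordingly.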

I have to ask you, about your avatar, is that a WWII pilot?

Close. It's actually a WWI bomber pilot. I wrote about my avatar in a reply to johnnyenglish earlier today, actually. I can repeat it here. I chose this avatar years ago because ...:

"[...] I admire the spirit, courage and the gentleman's style of these guys. As officers they had manners (which seems to be lost forever in time today) and even had visiting cards (to hand out to ladies they courted). Those were the days ... Except for the war, of course."

Did you know that the first bomber pilots in WWI had the bombs lying between their feet in the cockpit and dropped them by hand on the enemy? The enemy soldiers, in turn, lay on their backs on the ground and fired their rifles up in the air to try to hit the planes... Haha. Because of the lack of proper tech, planes didn't have much impact at all during the First World War. That's also one of the main reasons why WWI was a trench war with mostly locked positions and syrup-slow advances. The other main reason was the machine gun, which mowed down most advancing foot soldiers. Much the same situation as in Ukraine today, because planes don't play a big role there either ATM.

The WWI planes got machine guns too, but the earliest implementations led to the guns destroying the propeller(!) until a smart guy (or gal?) figured out that instead of the pilot deciding when the bullets are fired, the propeller itself should manage the trigger mechanism, so the bullets go between the propeller blades. The pilot just presses the trigger in the cockpit and the propeller does the rest. 🙂

The tank was also invented in WWI by the English army. They tried to keep it a secret, so they called it "a tank" so that soldiers and others would think it was a simple water tank, although a new version with wheels on a belt.

Tbh I really do hope AMD gives us a 7800XT with 16 GB and 7700 with 12/10 GB option. I am not going to pay above €300 to get a new 8 GB card.

My lineup suggestion would be:

- 7900 XTX 24 GB - ~900 €

- 7900 XT 20 GB - ~750 €

- 7900 non XT 20 GB - ~599 €

- 7800 XT 16 GB - ~479 €

- 7700 XT 12 GB - ~349 €

- 7700 non XT 10 GB - ~299 €

- 7600 XT 8 GB - ~249 €

- 7600 non XT 8 GB - ~199 €

- 7500 non XT 6 GB - ~149 €

Remember when we had Hawaii with 8 GB ages ago, or even the Vega Frontier Editions with 16 GB? Not to mention the epic value kings with Polaris and 8 GB?

So sad that these times are gone.

With the way the 7840U laptop APU is performing, I can see the 7500 getting scrapped before it ever hits production. AMD just has to figure out how to get desktop caliber performance out of it while having it slot into an existing AM5 socket. At this point, I'm thinking it has to do with power delivery.