AMD Community / Red Team / Gaming Discussions


How to easily get a lot more performance from your CPU than overclocking, without overclocking

Before I start, you have to ask yourself one question: "Where does a CPU get its performance from?"

If your answer is "From the clock speed, of course" or "From the number of cores, of course", then I would contend that you are wrong.

You see, it really doesn't matter how many things are doing nothing or how fast those things are doing naff-all, and this is the problem you will mostly be confronted with when gaming and/or streaming. It is also the reason why games will not run optimally, no matter how much you overclock or how many cores are added to the system.

Let's begin at the beginning. Windows of the NT family (which is all that has survived) is a pre-emptive multitasking Operating System. This means that all the processes running on the system (and a game is a process, usually with a number of threads) each get a certain time slice of CPU attention before they are suspended and another process gets its turn.

Older versions of Windows (Windows 3.x and earlier) used cooperative multitasking, whereby a process kept the CPU's attention until it voluntarily relinquished it. The problem with this is that when a process hangs, it never gives the CPU back, so the whole system is left in a hung state with no way to terminate the offending process.

To get back to the question of where the CPU gets its speed from: the answer is from the data, and the more fluidly and consistently the CPU can get the data, the faster it can process it.

I hear you say, "OK, Captain Obvious, so what's your effing point?"

As CPUs got faster and faster, and storage and the data bus lagged ever further behind, a way had to be found to circumvent this imbalance. Making storage fast enough was not a viable option then, and even now, in the days of NVMe M.2 drives, it still isn't.

Thus the concept of Cache was born.

Cache is basically an area of RAM built into the CPU which runs at close to the same clock speed as the CPU. Whilst the CPU is working on data in the Cache, the Cache itself is loading the data the CPU is most likely to need next. When it needs data, the CPU always looks in the Cache first; if it doesn't find what it needs there, it requests it from comparatively very slow memory (RAM), or, not finding it there either, has to fall back on the positively slothlike storage.
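To make the hit/miss idea concrete, here is a minimal Python sketch of a cache with least-recently-used (LRU) eviction. It is a toy under simplifying assumptions (fully associative, one entry per address, no prefetching); real CPU caches are set-associative and use hardware-specific replacement policies, and the class name `LRUCache` is purely illustrative.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with least-recently-used (LRU) eviction."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> None, least recently used first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Look up one address; return True on a hit, False on a miss."""
        if address in self.lines:
            self.lines.move_to_end(address)  # now the most recently used line
            self.hits += 1
            return True
        # Miss: in a real CPU this is where the slow trip out to RAM happens.
        self.misses += 1
        self.lines[address] = None
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        return False


cache = LRUCache(capacity=4)
for addr in [1, 2, 3, 1, 2, 3]:
    cache.access(addr)
print(cache.hits, cache.misses)  # 3 3
```

The first pass over addresses 1-3 misses every time (the cache starts cold); the second pass finds everything already loaded.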

So the CPU is happily chomping and digesting the data it gets from the Cache - problem solved?

Well, remember when I said that Windows was a pre-emptive Operating System? This means that other processes get a time slice of CPU attention, and of course those processes load their own data into the Cache. The Cache is set-associative, meaning it can hold disparate data from different sources at the same time; this does not however mean that a new process will not overwrite data vital to another process, like a game which is running. When that process gets its time slice again, it can find that its data has been evicted from the Cache and will have to go and retrieve it again from memory or storage, slowing that process down very, very significantly.
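The eviction scenario described above can be sketched as a toy simulation. The function `game_hit_rate` and its parameters are hypothetical: a "game" re-reads a small working set every time slice, and between its slices another process touches some lines of its own in the same LRU cache. The numbers illustrate the mechanism only; they are not measurements of any real CPU.

```python
from collections import OrderedDict


def touch(cache: OrderedDict, capacity: int, addr: int) -> bool:
    """Access one line in an LRU cache; return True on a hit."""
    if addr in cache:
        cache.move_to_end(addr)
        return True
    cache[addr] = None
    if len(cache) > capacity:
        cache.popitem(last=False)  # evict the least recently used line
    return False


def game_hit_rate(capacity: int, game_lines: int, bg_lines: int, slices: int) -> float:
    """Fraction of the game's accesses that hit when each game time slice
    (re-reading its whole working set) is followed by a background time
    slice that touches `bg_lines` fresh lines of its own."""
    cache = OrderedDict()
    hits = accesses = 0
    bg_addr = 1_000_000  # background data never overlaps the game's data
    for _ in range(slices):
        for addr in range(game_lines):  # the game's time slice
            hits += touch(cache, capacity, addr)
            accesses += 1
        for _ in range(bg_lines):  # another process's time slice
            touch(cache, capacity, bg_addr)
            bg_addr += 1
    return hits / accesses


print(game_hit_rate(capacity=8, game_lines=6, bg_lines=0, slices=10))  # 0.9
print(game_hit_rate(capacity=8, game_lines=6, bg_lines=2, slices=10))  # 0.9
print(game_hit_rate(capacity=8, game_lines=6, bg_lines=4, slices=10))  # 0.0
```

With an 8-line cache and a 6-line game working set, a background footprint of 2 lines leaves the game's hit rate untouched, while 4 lines drops it to zero. That cliff is a property of strict LRU with a cyclic access pattern; real hardware degrades less abruptly, which is why the size of the effect in a real game has to be measured rather than assumed.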

Of course Micro$haft would not be Micro$haft if it didn't actually go out of its way to kick you in the nuts whilst you were gaming.

The Operating System has a mechanism known as "Task Switching" whereby it will take a process waiting for its time-slice on one core and assign it to a completely different core. What this means is that the process will then have absolutely NO data in the Cache and have to go back to either memory or storage to fill it up again, which is incredibly wasteful of CPU time.
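A similarly hedged toy model of the migration argument: a thread re-reads its working set every time slice and, optionally, every few slices it is moved to a core whose private cache holds none of its data. It only models per-core (L1/L2) caches; on Ryzen, a move within the same CCX can still find data in the shared L3. The name `hit_rate` and its parameters are illustrative assumptions.

```python
from collections import OrderedDict
from typing import Optional


def touch(cache: OrderedDict, capacity: int, addr: int) -> bool:
    """Access one line in an LRU cache; return True on a hit."""
    if addr in cache:
        cache.move_to_end(addr)
        return True
    cache[addr] = None
    if len(cache) > capacity:
        cache.popitem(last=False)  # evict the least recently used line
    return False


def hit_rate(capacity: int, game_lines: int, slices: int,
             migrate_every: Optional[int] = None) -> float:
    """Hit rate of a thread re-reading `game_lines` lines each time slice.

    migrate_every=None: the thread stays pinned to one core.
    Otherwise, every `migrate_every` slices it lands on another core whose
    private cache is cold for it (modelled as a fresh, empty cache).
    """
    cache = OrderedDict()
    hits = accesses = 0
    for s in range(slices):
        if migrate_every and s > 0 and s % migrate_every == 0:
            cache = OrderedDict()  # moved to a different core: cold cache
        for addr in range(game_lines):
            hits += touch(cache, capacity, addr)
            accesses += 1
    return hits / accesses


print(hit_rate(capacity=8, game_lines=6, slices=10))                   # 0.9
print(hit_rate(capacity=8, game_lines=6, slices=10, migrate_every=2))  # 0.5
```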

So as you can see, the performance of a game depends not so much on the speed of the CPU or the GPU as on how often the CPU scores a Cache hit as opposed to a Cache miss. And no amount of overclocking can get around this fundamental obstacle.

So that's it? You are screwed? You are at the mercy of random chance and a lucky streak of Cache hits for performance?

Luckily, no. There is something built into the NT family of Operating Systems which guarantees that you will have that lucky streak of Cache hits nearly all the time, thus greatly increasing the performance of your CPU.

This is called "Affinity", and you can set it by opening Task Manager (on the Details tab in Windows 10), right-clicking a process and choosing "Set affinity".

What this does is allow you to dictate which Cores of your CPU each process may use.
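Under the hood, Windows represents a process's affinity as a bitmask in which bit n set means logical CPU n may be used; this is what the Win32 `SetProcessAffinityMask` function takes, and what the `start /affinity` command in a command prompt expects as a hexadecimal number. A small Python sketch of building such masks (`affinity_mask` is a hypothetical helper, not part of any library):

```python
def affinity_mask(cpus) -> int:
    """Return a Windows-style affinity bitmask: bit n set = logical CPU n allowed."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu  # set the bit for this CPU
    return mask


background = affinity_mask([0, 1])  # CPU 0 and CPU 1
game = affinity_mask(range(2, 6))   # CPU 2, 3, 4 and 5

print(hex(background))  # 0x3
print(hex(game))        # 0x3c
assert background & game == 0  # the two groups share no CPU
```

For example, `start /affinity 3C game.exe` (where `game.exe` stands in for your game's executable) would launch it restricted to CPUs 2-5. Note that with SMT enabled, Windows typically numbers the two hardware threads of each physical core consecutively, so CPU 0 and CPU 1 are then usually the same physical core.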

Ah, so problem solved?

Yeah, you wish. The thing is that you have to set the Affinity of every single process individually, which is a very time-consuming grind, and then, to top it all off, when you reboot you lose all the settings you just made.

There is, however, a utility you can download for free called Process Lasso:

https://bitsum.com/

It is one of the few utilities I have found that let you manipulate the Affinity of processes easily and very quickly.

Do NOT mess about with the priority of any processes - this is a recipe for disaster.

With Process Lasso, you can click on the top running process, scroll down to the bottom process and shift-click it to select them all, then right-click and set the affinity for all of the selected processes at once.

Because these are the system and background processes, they only need two cores, so you would assign them to CPU 0 and CPU 1.

If you then load the game, you can assign the game to as many Cores as you want (except of course CPU 0 and CPU 1).

Nearly all games use no more than four Cores, so assigning more Cores to the game can actually be counterproductive (see task switching above).

The thing is, though, that because the game now has exclusive use of the Cores you assigned it, it also has exclusive use of the Cache associated with those cores and cannot be interrupted by other processes.

You want to play a game and stream?

Easy: assign the game to its Cores, then give the streaming software some of the Cores you have left over.
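The core split described above can be sketched as a small planning helper. The helper names (`plan_affinity`, `pin`), the role names and the consecutive-block layout are assumptions for illustration. On Windows, applying the plan is what Task Manager or Process Lasso does; the `pin` helper instead uses Python's `os.sched_setaffinity`, which exists only on Linux and some other Unix systems, to show the same idea.

```python
import os


def plan_affinity(n_cpus: int, background: int, game: int, stream: int = 0) -> dict:
    """Split logical CPUs 0..n_cpus-1 into disjoint, consecutive groups.

    Background processes get the lowest CPUs, then the game, then the
    (optional) streaming software.
    """
    if background + game + stream > n_cpus:
        raise ValueError("not enough CPUs for the requested split")
    plan = {
        "background": set(range(0, background)),
        "game": set(range(background, background + game)),
    }
    if stream:
        plan["stream"] = set(range(background + game, background + game + stream))
    return plan


def pin(pid: int, cpus: set) -> None:
    """Restrict one process to the given CPUs (stdlib call, Linux-only)."""
    if not hasattr(os, "sched_setaffinity"):
        raise NotImplementedError("os.sched_setaffinity is not available on this OS")
    os.sched_setaffinity(pid, cpus)


# An eight-core CPU with SMT off: 2 background, 4 game, 2 streaming.
plan = plan_affinity(n_cpus=8, background=2, game=4, stream=2)
print(plan)
# {'background': {0, 1}, 'game': {2, 3, 4, 5}, 'stream': {6, 7}}
```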

I helped build and configure a Ryzen 7 2700X system for a member of the Discord server I moderate, who wanted to play Fortnite.

Leaving the system as it was out of the box, with only Ryzen Master installed, we ran Fortnite and got 90-135 FPS.

I then disabled SMT (so the 2700X ran with eight cores/eight threads) and ran Fortnite again; the result was a bit better, at 105-150 FPS.

Then, using Process Lasso, I assigned all running processes to CPU0 and CPU1 and assigned Fortnite to CPU2, CPU3, CPU4 and CPU5, which left two cores, CPU6 and CPU7, untouched.

The result was a steady 200-250 FPS, which is on par with what you would get with an i9-9900K overclocked to 5GHz.

He was using the stock AMD cooler for the Ryzen 2700X and not once did it spin up to the point of becoming audible.

---

While interesting, this data does raise a number of questions.

"What this means is that the process will then have absolutely NO data in the Cache and have to go back to either memory or storage to fill it up again"

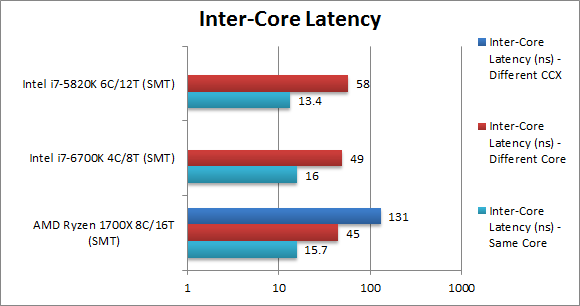

My understanding is that each CCX has a level 3 cache shared by all cores within that CCX, as well as a private level 2 cache per core. If a thread moves to a core within the same CCX, the cached data can be copied from the level 2 cache of the original core to level 3. This does introduce some latency, but not nearly as much as copying between CCXs. The CCXs share no cache, but data can be copied between them over the Infinity Fabric. The Infinity Fabric operates at the speed of memory, which introduces more latency than same-CCX cache copies.

What is interesting is that in the example you gave, you saw a big performance bump in Fortnite, but you haven't removed any of the latency associated with Ryzen. By assigning the game to four cores, you can still have core-to-core latency, as its threads can run on any one of those four cores. Beyond that, CCX1 is likely Core(0-3) and CCX2 is Core(4-7), so by assigning the game to cores (2-5) you are still split between CCXs and should see identical cross-CCX latency, because you still have an even 50% split of cores across the two CCXs.
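The CCX arithmetic here can be checked with a few lines of Python. This assumes, as the post does, that a Ryzen 7 2700X with SMT disabled exposes cores 0-3 on the first CCX and cores 4-7 on the second (numbered 0 and 1 below); `ccx_of` and `ccx_split` are hypothetical helpers.

```python
from collections import Counter

CORES_PER_CCX = 4  # assumption: 2700X = 2 CCXs x 4 cores, SMT disabled


def ccx_of(core: int) -> int:
    """CCX index (0 or 1) of a physical core under the assumed numbering."""
    return core // CORES_PER_CCX


def ccx_split(cores) -> dict:
    """How many of the given cores fall on each CCX."""
    return dict(Counter(ccx_of(c) for c in cores))


print(ccx_split(range(2, 6)))  # {0: 2, 1: 2}: cores 2-5 straddle both CCXs
print(ccx_split(range(4, 8)))  # {1: 4}: cores 4-7 stay on one CCX
```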

So I am curious: where is the observed performance improvement coming from?

---

Whilst your statement, "My understanding is that each CCX shares a level 3 cache with all cores", is true, you forgot the part where I mentioned that Windows from NT onwards is a pre-emptive multitasking OS.

So sure, the game gets to load its data into the Cache. However, when the next process gets its time slice (take a look in Task Manager at just how many processes are running on your system even at "idle"), and the next one after that, and the next one after that, ad nauseam, then by the time the game gets the CPU's attention again there can be precious little left of the data it had loaded into the Cache.

I apologise if that didn't come across clearly enough in the original post.

It has nothing to do with removing latency; it has to do with the game you are running having as much of its data available in the Cache as possible, so that it can crunch numbers rather than chew cud.

---

Isn't that effectively what Game Mode does in Windows 10: prioritize the game's threads over everything else? In the data I have seen, Game Mode made virtually no difference in performance when only standard background tasks were running. It did make a difference if another CPU-heavy process was also running in the background, as it prioritizes the game so heavily that the background processes grind to a halt.

---

buggzluva, this is a really interesting topic for a YouTube video, or at least a review of some kind. Does disabling SMT on Ryzen processors help games in 2019? What about locking game processes to fixed cores and background processes to others? Do software applications like "Process Lasso" or "Project Mercury" offer any tangible benefits? And if so, are they more effective than the "Game Mode" built directly into Windows 10?

---

I am not trying to be snide, but I would advise you to go back and carefully read what I wrote.

The "Game Mode" in Windows 10, at best, tries to limit the number of times a game is switched away from the core or cores it was using (as far as I can tell), and fails pretty much miserably at it.

When the game loses its CPU time slice, the other processes are free to evict its data not only from the L3 Cache but also from the L1 and L2 Caches, and it is Cache MISSES that will cost you gaming performance. Every Cache miss costs you a lot of clock cycles.

As you probably know, the L3 Cache is a victim cache, and the CPU will only look there if it cannot find the data/instructions it needs in its L1 and L2 Caches.

The fact that I configured the game to run on Cores 2, 3, 4 and 5 means that it straddles both CCXs and can retrieve data/instructions from both L3 Caches if it doesn't have what it needs in L1 or L2. Of course, for some games it might be better to configure it for Cores 4, 5, 6 and 7.

To you this may all seem shiny and new, but I was using "Set Affinity" twenty-odd years ago in the days of WinNT 4.0 (I honestly cannot remember whether it was already there in the 3.x versions up to 3.51), when "multi-core" meant having two or more physical CPUs in your system; only we called it SMP (Symmetric MultiProcessing) back then.

Especially if you had a test system running Microsoft Exchange and SQL, you had to assign each of them its own CPU, otherwise the system ran like a dog.

So this is not new. And you know what? Anyone who wants to fry their system for a few measly FPS by overclocking it to hell and gone: be my guest.

I actually tried to anticipate, in the original text, interjections such as the ones you have made in your posts. The post was originally a lot longer, and I cut quite a bit of verbiage so that it would be easier to read.

I did however think that I had kept the post comprehensible - obviously in your case I failed in that endeavour and I apologise for that.

---

The issue isn't that the post isn't comprehensible, but that there is little, if any, empirical evidence to support any tangible gains from Process Lasso, Project Mercury, or Windows Game Mode.

You argue that there are a lot of processes in Windows, and there certainly are, but the cache would only be "dirtied" up by the processes if they are consistently writing decent chunks of data even while the system is idle. Are they? To this day, I haven't seen any substantial data set pointing to software such as "Process Lasso" or "Project Mercury" providing any tangible benefit in a large subset of games.

Your speculation that applications would benefit from process affinity is exactly that. One anecdotal observation concerning Fortnite hardly warrants a universal endorsement of this type of approach (which you gave in the initial title). Since you have been fixing affinities for 20 years, you must have volumes of data that speak to its efficacy? If not, then I renew my call for a review to fully suss out the benefits in a much larger data set. As things stand, there doesn't appear to be sufficient data to make any sort of recommendation for or against.

---

Now I see where you are making your mistake.

What I am talking about has bugger all to do with Process Lasso.

What I am alluding to is a part of the Windows OS, and has been since WinNT 4.0, which came out in 1996.

Process Lasso is not a program that makes what I stated possible, it merely makes it a LOT easier to manipulate the feature which is BUILT INTO THE OPERATING SYSTEM!!!

You don't need to use Process Lasso or any software whatsoever.

You can do it all by hand - if you are a complete and utter masochist.

You really didn't read my post did you?

You just saw "Process Lasso" and made up your own little story.

---

"You just saw "Process Lasso" and made up your own little story."

I think you are getting a little tripped up on "Process Lasso". Allow me to correct my previous post.

"The issue isn't that the post isn't comprehensible, but that there is little, if any, empirical evidence to support any tangible gains from setting process affinity.

You argue that there are a lot of processes in Windows, and there certainly are, but the cache would only be "dirtied" up by the processes if they are consistently writing decent chunks of data even while the system is idle. Are they? To this day, I haven't seen any substantial data set indicating that defining process affinity for applications yields tangible benefits.

Your speculation that applications would benefit from process affinity is exactly that. One anecdotal observation concerning Fortnite hardly warrants a universal endorsement of this type of approach (which you gave in the initial title). Since you have been fixing affinities for 20 years, you must have volumes of data that speak to its efficacy? If not, then I renew my call for a review to fully suss out the benefits in a much larger data set. As things stand, there doesn't appear to be sufficient data to make any sort of recommendation for or against."

"and this is the problem you will mostly be confronted with when gaming and/or streaming" Here you use the word mostly, which implies that the majority of applications will benefit from assigning process affinity. I have seen no data to suggest that this is true.

The issue at large here is that you provide a solution to a problem, without first verifying that the problem even exists. Allow me to quote again.

"this does not however mean that a new process will not overwrite data vital to another process"

It also doesn't mean that it does. The entire argument is based on the premise that background processes overwrite critical data, and that they do it a lot. If that weren't true, setting process affinities for specific cores would be ineffectual. But does it get overwritten a lot? You provide no data to that effect, yet you claim both that this is the problem PC users will "mostly" encounter, and that setting process affinities will net a user "a lot more performance" than overclocking. What data are you using to make those claims? Is the anecdote about Fortnite in the original post the extent of the empirical data that exists?

---

Back in the day, the "Set Affinity" command had very limited use and was only used by people like me who worked with WinNT SMP servers.

When multi-core CPUs started to become common, that knowledge was either not known, or had been forgotten, and, for a number of years after multi-core CPUs came into common use, there wasn't really the need to use it.

I used Fortnite as the example because it was the most recent time I had done this for someone else.

And if you had read the post then you would have seen that I expressly stated:

"Do NOT mess about with the priority of any processes - this is a recipe for disaster."

which led me to believe that you had not bothered to read the post when you mentioned "Project Mercury".

From the way you have spoken about it, it is obvious that you don't, or rather didn't have a clue about the concept of how setting Affinity greatly improves gaming performance, and probably STILL don't grasp it.

---

"From the way you have spoken about it, it is obvious that you don't, or rather didn't have a clue about the concept of how setting Affinity greatly improves gaming performance, and probably STILL don't grasp it."

And what I am saying is that I'm not convinced the claim that setting Affinity greatly improves gaming performance is actually true. And since you make it so unequivocally, you must have data verifying that claim. Not conceptual arguments, mind you, but actual empirical data.

---

You are either trolling or being incredibly obtuse.

It is not the fact that I am assigning the game to the cores 2-5 with the set Affinity, but rather that I am assigning EVERY OTHER RUNNING PROCESS AWAY FROM THOSE CORES by limiting them to Core 0 and Core 1.

If you just apply two braincells to the concept you will see how this greatly benefits the game.

Which part of that concept do you not understand?

---

I see how it would conceptually benefit a game. I don't see much data that it in fact does, data you seem to be going to great lengths not to provide.

---

hehe

btw, there are tools that can increase I/O time for specified threads, which will increase the data rate for your CPU.

But it doesn't matter: when running games, your CPU has so much to handle that an SSD is fast enough to keep it supplied with work.

Laptop: R5 2500U @30W + RX 560X (1400MHz/1500MHz) + 16G DDR4-2400CL16 + 120Hz 3ms FS

---

So let's leave the raw transfer speed of the SSD, RAM and Cache out of the discussion (Cache is a lot faster than RAM, which is a lot faster than an SSD) and look purely at the intrinsic latency of each component:

- L1 Cache is about 200 times faster than RAM.
- L3 Cache is about 20 times faster than RAM.
- RAM is about 1,500 times faster than an SSD.
- RAM is about 100,000 times faster than a mechanical hard drive.

So as you can see, a CPU is a lot better off getting its data from the Cache than from RAM, never mind an SSD or, God forbid, a hard drive.
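One standard way to see why hit rates matter so much is the average memory access time (AMAT): the L1 hit time plus the L1 miss rate times the cost of going further down the hierarchy. The sketch below plugs in the ratios from this post, taking RAM latency as 100 arbitrary units (so L1 = 0.5 and L3 = 5), skips L2 for brevity, and uses invented miss rates; the function name `amat` is illustrative.

```python
def amat(t_l1: float, miss_l1: float, t_l3: float, miss_l3: float, t_ram: float) -> float:
    """Average memory access time for L1 and L3 caches in front of RAM (L2 omitted)."""
    return t_l1 + miss_l1 * (t_l3 + miss_l3 * t_ram)


T_RAM = 100.0       # RAM latency in arbitrary units (assumption)
T_L1 = T_RAM / 200  # "L1 Cache is about 200 times faster than RAM"
T_L3 = T_RAM / 20   # "L3 Cache is about 20 times faster than RAM"

# Hypothetical miss rates: the game's data mostly survives in L3...
warm = amat(T_L1, 0.05, T_L3, 0.20, T_RAM)
# ...versus other processes having evicted most of it from L3.
cold = amat(T_L1, 0.05, T_L3, 0.60, T_RAM)

print(f"{warm:.2f} {cold:.2f}")  # 1.75 3.75
```

With these made-up numbers, raising the L3 miss rate from 20% to 60% roughly doubles the average access time even though the clock speed never changed. Real miss rates for a given game would have to be measured.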

---

I have to agree here. Even with advancements in non-volatile storage, an NVMe SSD is still by far the bottleneck in a system when compared to cache or RAM. I'm going to try Process Lasso out and see what results I get. The issue I currently have is that most of my games are GPU-bound at 1440p (144Hz), so I'll have to find a title that really taxes the CPU to gauge the benefits. Anthem launches on Friday, and the demo was extremely CPU-heavy on my system. I'll give it a try this weekend and see what gains I get in FPS.

---

I think I know where some misunderstandings are coming from.

I posted the original post on another forum and someone replied to me:

"I have not tested this product. but it looks good

thanks for the review"

To which I replied:

"AAARRRRGGGHHH!!

It was not a review of the utility; it is simply the tool I found, available for free, that lets you easily do what I am trying to teach people in the post.

The utility is a part of the post, not the reason for it."

---

Right, and to be clear, I'm not saying assigning background processes away from an application doesn't have a large impact, I just haven't seen any numbers on review sites or real world scenarios.

I am going to try it out with Anthem. Anthem does seem to be fairly CPU-bound in places, so I want to run a long free-play section with all processes allowed to use all cores, and then again with the background processes assigned to specific CPUs. It does appear, however, that Anthem makes use of more than four threads, so I need to be careful not to hamstring its performance by being overly conservative.

---

The reason for that is because it is essentially forgotten knowledge.

It's not secret, it's just that when NT 4.0 first came out, it basically didn't support any games whatsoever, you could get some games to run on it, but they were few and far between.

Also back then "Multi-Core" systems were server systems that had two or more physical CPUs in them.

The reason for my post is because this knowledge has been almost forgotten, and now it is very relevant to the mainstream of users and especially gamers.

You have to savour the irony of it all though.

---

As I replied to someone else on another forum with regard to their inquiries after they improved their performance by following my suggestion:

"You will also find that you can get better results using what I suggested than any OverClocking could ever give you, if you are comparing my way to the OverClocking way.

I would also suggest that if you do OverClock, then my way will leverage your OverClock to a far higher degree, because you are limiting the number of Cores churning out heat, and thus your overall energy budget (and with it your heat output) will be lower.

So it is not an, "Either-or" but rather an, "In conjunction with".

I should clarify this, every 10 degrees Celsius that the temp of your CPU rises means that 4% more energy needs to be pumped into it just to keep the same performance.

So if you can keep the total temp of your CPU down whilst you are OverClocking your entire system (and the parts of the system that are not putting your system under load are not adding to your energy budget although they are equally OverClocked), then the added penalty of the 4% energy input per 10 degrees (and thus added heat generation) can be mitigated."

---

I've got my R7 1800X, Radeon VII system hooked up to a 1080p TV to ensure that I am in fact CPU bound. I'll run some freeplay (20 minutes or so), using the Radeon performance monitor to record FPS. I'll do that first with all processes set to cores 0-15, and then I'll separate background processes to their own two cores while assigning 4 (or possibly the other 6) to Anthem, and compare the runs. I need to make the runs long enough to capture the variable weather effects that are fairly CPU intensive.
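For comparing runs like these, a small summary of each run's frame times helps. The export format of the Radeon performance monitor isn't described here, so this sketch simply assumes a list of per-frame times in milliseconds. "1% low" is defined in different ways by different tools; here it is taken, as an assumption, to be the FPS equivalent of the mean of the slowest 1% of frames. `summarize` is a hypothetical helper.

```python
from statistics import mean


def summarize(frame_times_ms):
    """Return (average FPS, '1% low' FPS) from per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("no frames recorded")
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    slowest_first = sorted(frame_times_ms, reverse=True)
    k = max(1, len(slowest_first) // 100)  # slowest 1% of frames, at least one
    low_fps = 1000.0 / mean(slowest_first[:k])
    return avg_fps, low_fps


# Hypothetical run: 99 frames at 10 ms plus one 50 ms stutter.
avg, low = summarize([10.0] * 99 + [50.0])
print(f"avg {avg:.1f} fps, 1% low {low:.1f} fps")  # avg 96.2 fps, 1% low 20.0 fps
```

Averaging over total time (rather than averaging per-frame FPS values) keeps long frames from being under-weighted, which matters when a run includes CPU-heavy moments such as weather effects.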

Hopefully I'll have time to do the tests this weekend, but with more snow on the way, I may be digging myself out again.

---

So how did your experiment go?

And when you did the gaming test, did you get rid of SMT?

---

Hey!

Actually, the testing with Anthem didn't go well at all, but that was primarily due to Origin being stuck in "offline" mode, and I can't seem to resolve the issue. I have moved on to testing a few other titles: Devil May Cry 5, Destiny 2 and Far Cry 5. I haven't been turning SMT off, but I can certainly do a run with it disabled as well.

---

Because of the way the L3 Cache is configured on Ryzen, you will get the best results with SMT turned off.

Each Thread gives you about 0.65 of a Core's worth of performance, and considering that games in general don't use more than four cores/threads, you would be hobbling the game to 2.6 Cores' worth of performance.
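Spelled out, the 2.6 figure follows from the post's own rule of thumb (each SMT thread is worth about 0.65 of a core) if the game's four threads end up sharing two physical cores, compared with four threads on four physical cores with SMT off. This is the post's estimate, not a measured value:

$$
\underbrace{4 \times 0.65}_{\text{SMT on: 4 threads on 2 cores}} = 2.6
\qquad \text{vs.} \qquad
\underbrace{4 \times 1.0}_{\text{SMT off: 4 threads on 4 cores}} = 4.0
$$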

It would however be interesting to see what the difference in performance would actually be.

---

Thanks for the info! I'll have results this weekend, likely with Anthem included. It turns out, my PC had a static IP so that it can consistently be found by other computers on the network (media server). Turns out, Origin really doesn't like that setting. I am really not a fan of all these proprietary launchers.

---

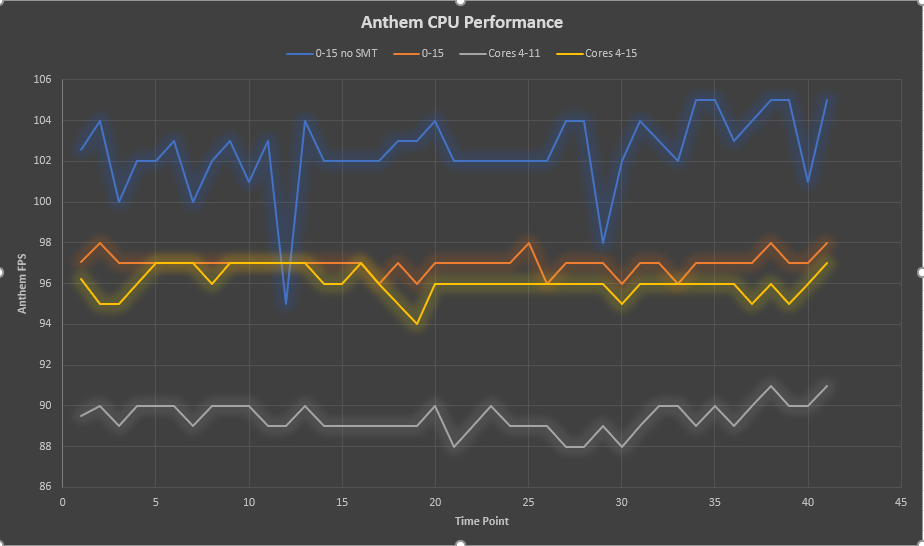

So I played around with this for a bit in Anthem. I positioned myself in the Fort Tarsis bazaar to avoid changes in environmental detail. Settings were set to medium at 1440p, running on a Radeon VII.

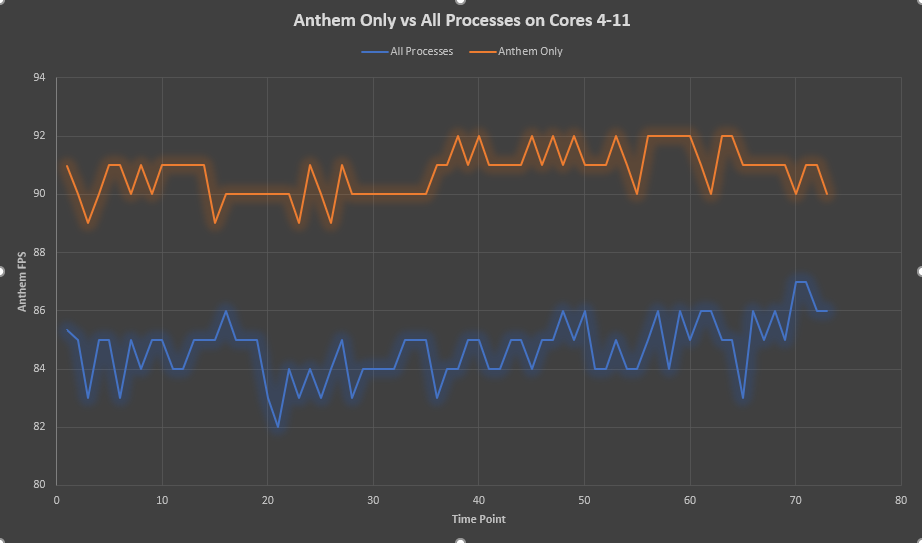

I ran the performance monitor with all processes on all cores (0-15). I repeated that with SMT disabled. I then set all other processes to cores 0-3 and Anthem to cores 4-11. I then repeated that experiment but with Anthem set to cores 4-15. The results are shown below.

Anthem is a title that really loves CPU cores. As shown, moving all other processes to their own dedicated cores and giving Anthem 4 cores to work with resulted in the lowest performance. Giving Anthem two more cores to work with improved my FPS by an average of 7fps (89 vs 96) or 8%. However, giving Anthem the other two cores and allowing all processes to be executed on any core resulted in a 1fps gain on average (97). So in the end, allowing all processes access to all cores yielded marginally higher frame rates than 6 cores dedicated to Anthem and 2 to everything else.

Allowing all processes to remain on all cores, but disabling SMT, led to a further jump in performance of 5fps (97 to 102), or 5%.
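The percentages quoted in this post can be recomputed from the reported average frame rates. This sketch only redoes that arithmetic; `pct_gain` is an illustrative helper, and the FPS values are exactly the ones given in the post.

```python
def pct_gain(before: float, after: float) -> float:
    """Percentage change going from `before` to `after`."""
    return (after - before) / before * 100.0


# Average FPS reported for Anthem in this post (Fort Tarsis bazaar, 1440p medium).
runs = {
    "others on 0-3, Anthem on 4-11": 89,
    "others on 0-3, Anthem on 4-15": 96,
    "all processes on all cores": 97,
    "all processes on all cores, SMT off": 102,
}

baseline = runs["all processes on all cores"]
for name, fps in runs.items():
    print(f"{name}: {fps} fps ({pct_gain(baseline, fps):+.1f}% vs. all cores)")

print(f"{pct_gain(89, 96):.1f}%")   # 7.9%, reported as 8%
print(f"{pct_gain(97, 102):.1f}%")  # 5.2%, reported as 5%
```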

So at least for Anthem, any benefit gained by isolating background processes is less than the performance gained by simply having more cores available to the game.

The fact that Anthem uses so many cores does make it difficult to assess exactly what the benefit of isolating background processes with Process Lasso is. To determine that, I again ran my test with only Anthem on cores 4-11 and all background processes on cores 0-3. I then repeated the run with all processes on cores 4-11. The only difference in performance, then, should come from the background processes running on the cores Anthem is trying to use.

Removing background processes from the cores Anthem runs on netted me about 7% more performance in this test. So while for Anthem it is favorable to let the game use all cores, if this system had more than eight cores, it would be possible to gain additional performance using Process Lasso.

---

The best way to help CPU performance? You know, the basics: RAM and SSD. After that, each task can be improved in a different way, no matter what circus of settings you build (beyond the general Windows optimizations already done). There are different ways to help the CPU reach better performance; the optimization for Adobe Illustrator or Forza Horizon is not the same as the settings for Adobe Premiere or Corel Photo-Paint. You will have noticed that different settings give better CPU performance when you play Slime Rancher or Counter-Strike, and likewise with other programs like Photoshop. Optimization starts in the BIOS and goes all the way to the preferences and settings in your game or software. (o0)!