Performance Analysis of HPC Workloads: AMD EPYC™ with 3D V-Cache vs. Intel® Xeon® CPU Max with HBM

4th Gen AMD EPYC™ processors with AMD 3D V-Cache™ technology stack additional SRAM directly on top of the compute die, thereby tripling the total L3 cache size to as much as 1,152 MB per processor, compared to a maximum of 384 MB for standard AMD EPYC 9004 processors. This supersized cache can relieve memory bandwidth pressure by storing a large working dataset very close to the cores using that data, which can significantly speed up many technical computing[1] workloads. These new processors continue the high-performance legacy established by 3rd Gen AMD EPYC processors with AMD 3D V-Cache technology and add notable 32-core and top-of-stack processor performance uplifts. AMD 3D V-Cache technology is fully transparent to the host operating system, with no special requirements or code changes needed to realize the additional performance.

AMD EPYC 9004 processors with AMD 3D V-Cache technology include the cutting-edge technologies found in general purpose 4th Gen AMD EPYC processors, including “Zen 4” cores built on 5nm process technology, 12 channels of DDR5 memory with supported memory speeds up to 4800 MT/s, up to 128 (1P) or 160 (2P) lanes of PCIe® Gen5 delivering 2x the transfer rate of PCIe Gen4, 3rd Gen Infinity Fabric delivering 2x the data transfer rate of 2nd Gen Infinity Fabric, and AMD Infinity Guard technology that defends your data while in use.[2] These new processors are socket compatible with existing 4th Gen AMD EPYC platforms.

The 300+ world records earned by AMD EPYC 9004 Series processors are a testament to AMD’s relentless pursuit of performance leadership with industry-leading energy efficiency[3] and optimal TCO[4]. The industry has responded to these efforts: A rich and growing ecosystem of full-stack solutions and partnerships leverage the cutting-edge features and technologies offered by AMD EPYC processors to enable faster time to value for customers’ current and future needs.

Let’s take a deep dive into how 4th Gen AMD EPYC processors with AMD 3D V-Cache technology are delivering market-dominating performance across a broad spectrum of modern workloads. All results shown are based on AMD testing. This blog will showcase superb performance at both the 32-core and per-processor levels by comparing the following:

- 32-core: The 32-core AMD EPYC 9384X versus the 32-core Intel® Xeon® CPU Max 9462 in both High Bandwidth Memory (HBM) and Cache modes.

- Top of stack: The 96-core AMD EPYC 9684X versus the 56-core Intel Xeon CPU Max 9480 in both HBM and Cache modes.

First, I need to describe the three modes supported by Intel Xeon CPU Max processors.

Intel Xeon CPU Max Processor Modes

Intel Xeon CPU Max processors must run in one of three “memory modes”: HBM Only, Flat, or Cache. AMD tested 4th Gen AMD EPYC processors against Intel Xeon CPU Max processors running in HBM Mode and Cache Mode. Flat Mode requires specific application compatibility that would narrow the number of benchmarks we can compare and is therefore not included in this set of experiments.

- In Cache mode, the HBM memory acts as a “Last Level Cache” prior to applications accessing system main memory and no additional consideration is required.

- In HBM Mode, the HBM is a substitute for the node’s main memory and limits us to 64GB per socket.

Let’s see how AMD EPYC processors fared versus Intel Xeon CPU Max processors in a series of test cases. Some of the test results shown below indicate “Insufficient RAM,” meaning that the workload did not fit into HBM memory.
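As a rough illustration of the capacity limit, the sketch below checks whether a working set fits into HBM when a node runs in HBM Mode. The 64GB-per-socket figure comes from the text above; the function names and the sample working-set sizes are hypothetical, and the check ignores operating system and runtime overhead, so real headroom is smaller.

```python
# Capacity check for HBM Mode, using the 64 GB-per-socket limit stated in the text.
HBM_GB_PER_SOCKET = 64


def fits_in_hbm(working_set_gb: float, sockets: int = 2) -> bool:
    """Return True if the working set fits in the node's total HBM capacity.

    Simplification: ignores OS and runtime overhead.
    """
    return working_set_gb <= HBM_GB_PER_SOCKET * sockets


def run_status(working_set_gb: float, sockets: int = 2) -> str:
    """Mirror the 'Insufficient RAM' label used in the result charts."""
    return "OK" if fits_in_hbm(working_set_gb, sockets) else "Insufficient RAM"


if __name__ == "__main__":
    for size_gb in (96, 128, 200):  # hypothetical working-set sizes
        print(f"{size_gb:>4} GB on a 2P node: {run_status(size_gb)}")
```

A 2P node therefore tops out at 128GB in HBM Mode, which is why larger models in the results below show no HBM data point.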

Memory Bandwidth and HBM Limitations

AMD observed some key takeaways when testing applications on Intel Xeon CPU Max processors running in HBM mode:

- The limited capacity of HBM mode causes some benchmarks to fail on a single (2P) system.

- Cache mode requires RHEL 9.x releases that are not officially supported by many ISVs.

- Getting the most performance from Intel Xeon CPU Max processors requires all cores to be performing memory operations in order to see a performance uplift.

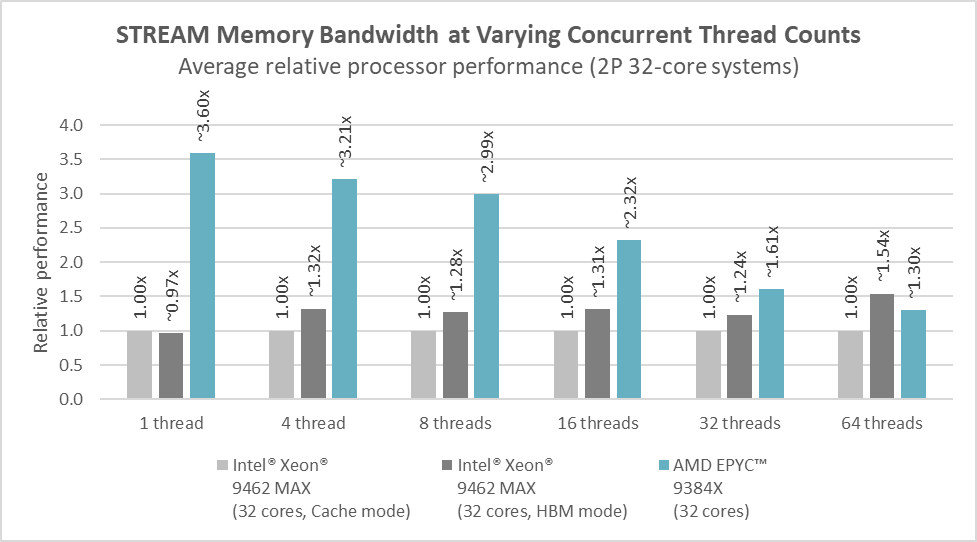

AMD EPYC processors can seamlessly utilize all installed cache and system memory. Micro-benchmarks test a single component or task using simple, well-defined metrics such as elapsed time or latency and are typically used to test individual software routines or low-level hardware components, such as the CPU or memory. The STREAM micro-benchmark is a simple, synthetic benchmark program that measures sustainable main memory bandwidth in MB/s and the corresponding computation rate for simple vector kernels.
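For readers unfamiliar with STREAM, the sketch below shows the four vector kernels and the byte accounting behind the MB/s figure. It is a pure-Python illustration only: the official benchmark is written in C and Fortran, and interpreter overhead means the rates printed here say nothing about real memory bandwidth. The function name and array size are hypothetical.

```python
import time

# Bytes moved per element, assuming 8-byte doubles as STREAM does:
# Copy and Scale read one array and write one; Add and Triad read two and write one.
BYTES_PER_ELEMENT = {"copy": 16, "scale": 16, "add": 24, "triad": 24}


def run_stream_once(n, q=3.0):
    """Run Copy, Scale, Add, and Triad once; return (MB/s per kernel, final arrays)."""
    a = [1.0] * n
    b = [2.0] * n
    c = [0.0] * n

    def copy():
        c[:] = a                                   # c = a

    def scale():
        b[:] = [q * x for x in c]                  # b = q * c

    def add():
        c[:] = [x + y for x, y in zip(a, b)]       # c = a + b

    def triad():
        a[:] = [x + q * y for x, y in zip(b, c)]   # a = b + q * c

    rates = {}
    for name, kernel in (("copy", copy), ("scale", scale), ("add", add), ("triad", triad)):
        start = time.perf_counter()
        kernel()
        elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero reading
        rates[name] = BYTES_PER_ELEMENT[name] * n / elapsed / 1e6  # 1 MB = 10**6 bytes
    return rates, (a, b, c)


if __name__ == "__main__":
    rates, _ = run_stream_once(1_000_000)
    for name, mb_per_s in rates.items():
        print(f"{name:>5}: {mb_per_s:10.1f} MB/s")
```

Each kernel streams through arrays far larger than any cache in a real run, which is what makes the result a measure of sustainable main memory bandwidth rather than of compute throughput.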

Scaling tests require manually setting the number of threads and placement to ensure even distribution of resources when all cores aren't allocated to a job. AMD tested a number of thread combinations, equally distributing them between NUMA domains wherever possible. (Each system tested has 8 NUMA domains, 1 thread ran on a single CPU, and 4 threads were evenly split between 2 CPUs).
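The placement rule described above can be sketched as a round-robin that alternates between sockets before reusing a NUMA domain. This is only one way to get an even spread; the post does not say which tool or exact policy AMD used. The socket-major domain numbering (domains 0-3 on the first CPU, 4-7 on the second) and the function names are assumptions.

```python
from collections import Counter


def place_threads(num_threads, sockets=2, domains_per_socket=4):
    """Assign each thread a NUMA domain, alternating sockets first.

    Assumes socket-major numbering: domains 0..3 on socket 0, 4..7 on socket 1.
    """
    # Visit order interleaves sockets: 0, 4, 1, 5, 2, 6, 3, 7 for the defaults.
    order = [s * domains_per_socket + d
             for d in range(domains_per_socket)
             for s in range(sockets)]
    return [order[i % len(order)] for i in range(num_threads)]


def threads_per_socket(placement, domains_per_socket=4):
    """Count how many threads landed on each socket."""
    return Counter(domain // domains_per_socket for domain in placement)


if __name__ == "__main__":
    for n in (1, 4, 8, 16, 32, 64):
        placement = place_threads(n)
        print(n, dict(threads_per_socket(placement)), dict(Counter(placement)))
```

With these defaults, 1 thread lands on a single CPU and 4 threads split two and two across the CPUs, matching the cases described above, while 64 threads put 8 on every NUMA domain.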

Figure 1 shows the composite average of the STREAM benchmark Add, Copy, Scale, and Triad test performance results on the 32-core test systems with 1-64 concurrent threads using ~96GB RAM. The 32-core AMD EPYC 9384X processor greatly outperforms the 32-core Intel Xeon CPU Max system, except that a fully loaded Intel Xeon CPU Max 9462 system in HBM mode outperforms the AMD EPYC processor at 64 threads.[5] This occurs when all processor cores are performing simultaneous memory operations and is an edge case because cores normally use a staggered approach when doing memory copies. It's rare for all cores to be doing this at the same time because most hybrid MPI/OpenMP applications use only one thread per thread group for I/O. STREAM threads are evenly distributed between all NUMA domains whenever possible.

Figure 1: STREAM performance (32 cores, varying thread counts)
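To make the crossover concrete, the sketch below takes the normalized results listed in reference [5] (SP5-221) and computes the ratio of the AMD EPYC 9384X result to the Intel Xeon CPU Max 9462 HBM-mode result at each thread count. The data values come from that reference; the variable and function names are hypothetical.

```python
# Normalized STREAM results from the SP5-221 reference
# (Intel Xeon CPU Max 9462 in Cache mode = 1.00x).
# threads: (Xeon Max 9462 in HBM mode, AMD EPYC 9384X)
STREAM_UPLIFT = {
    1: (0.97, 3.60),
    4: (1.32, 3.21),
    8: (1.28, 2.99),
    16: (1.31, 2.32),
    32: (1.24, 1.61),
    64: (1.54, 1.30),
}


def epyc_vs_hbm(results):
    """Ratio of EPYC uplift to HBM-mode uplift per thread count (>1 means EPYC leads)."""
    return {threads: epyc / hbm for threads, (hbm, epyc) in results.items()}


def hbm_leads_at(results):
    """Thread counts at which the HBM-mode system is ahead of the EPYC system."""
    return [threads for threads, ratio in epyc_vs_hbm(results).items() if ratio < 1.0]


if __name__ == "__main__":
    for threads, ratio in epyc_vs_hbm(STREAM_UPLIFT).items():
        print(f"{threads:>2} threads: EPYC / HBM = {ratio:.2f}")
    print("HBM mode leads at:", hbm_leads_at(STREAM_UPLIFT))
```

The ratio falls steadily as threads are added and drops below 1.0 only at 64 threads, the fully loaded case discussed above.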

Workloads Tested

The workloads I’m presenting fall into these categories:

- FEA Explicit

- Computational Fluid Dynamics

- Molecular Dynamics

- Quantum Chemistry with CP2K

- Weather Forecasting with WRF

- Seismic Exploration with Shearwater® Reveal®

- Reservoir Simulation

FEA Explicit

Explicit Finite Element Analysis (FEA) is a numerical simulation technique used to analyze the behavior of structures and materials subjected to dynamic events, such as impact, explosions, or crash simulations. For example, the automotive industry uses FEA to analyze vehicle designs and predict both a car's behavior in a collision and how that collision might affect the car's occupants. Another example is cell phone manufacturers simulating a drop test of their phones to ensure their durability. Using simulations allows manufacturers to save time and expense by testing virtual designs and reducing the need to experimentally test a full prototype.

These simulations start with a very complex digital model of the device to be tested (e.g., a car or a cell phone) and then simulate the physics of a dynamic event (e.g., an impact) by solving a series of differential equations over a period of time. Each stress or strain on one part of the model can create heat, movement, torque, etc. in other parts of the model, and the simulation looks for areas where the model might deform or fail. These calculations can require high levels of compute and memory bandwidth on a compute node. Further, since an impact on one part of the model can cause changes in a distant part of the model, there can be high communication demands between compute nodes, which have to share information with each other about how their assigned portions of the model affect, and are affected by, one another.

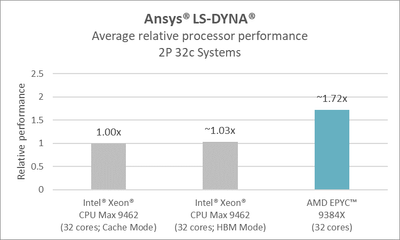

Ansys® LS-DYNA®

Ansys® LS-DYNA® is a widely used explicit simulation program. It is capable of simulating complex real-world short-duration events in the automotive, aerospace, construction, military, manufacturing, and bioengineering industries.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.72x.[6]

Figure 2: Ansys LS-DYNA performance (32-core)

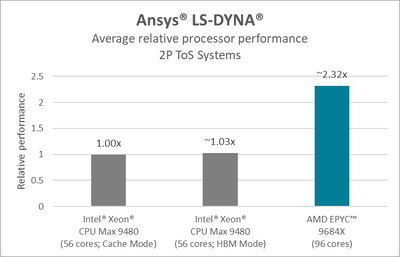

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~2.32x.[7]

Figure 3: Ansys LS-DYNA performance (top-of-stack)
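The headline uplifts can be related to the per-benchmark elapsed times listed in the references. The sketch below uses the 32-core LS-DYNA times from reference [6] (SP5-194), computes each uplift as baseline time divided by candidate time, and averages them. Treating the headline number as an arithmetic mean of per-benchmark uplifts is an assumption that happens to agree with the published figure; the names are hypothetical.

```python
from statistics import mean

# Average elapsed times from the SP5-194 reference (32-core systems).
# benchmark: (Xeon Max Cache mode, Xeon Max HBM mode, AMD EPYC 9384X)
LS_DYNA_ELAPSED = {
    "3 Cars": (884.00, 861.67, 424.00),
    "Neon": (73.33, 71.67, 44.00),
    "ODB 10m": (17424.33, 16721.00, 12383.00),
}


def time_uplift(baseline_time, candidate_time):
    """Elapsed time is lower-is-better, so uplift is baseline / candidate."""
    return baseline_time / candidate_time


def mean_uplift(elapsed, column):
    """Arithmetic mean of per-benchmark uplifts versus Cache mode (column 0)."""
    return mean(time_uplift(row[0], row[column]) for row in elapsed.values())


if __name__ == "__main__":
    print(f"HBM mode vs Cache mode:   {mean_uplift(LS_DYNA_ELAPSED, 1):.2f}x")
    print(f"EPYC 9384X vs Cache mode: {mean_uplift(LS_DYNA_ELAPSED, 2):.2f}x")
```

Under that assumption the AMD EPYC 9384X average comes out at about 1.72x, in line with the 32-core figure quoted above.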

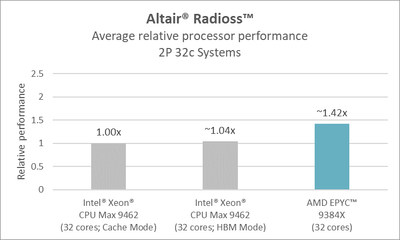

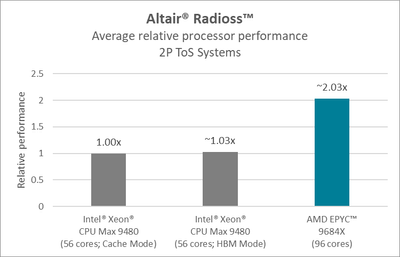

Altair® Radioss™

Altair® Radioss™ is used to perform structural analyses under impact or crash conditions. Its benchmarks provide hardware performance data measured using sets of benchmark problems selected to represent typical usage.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.42x.[8]

Figure 4: Altair Radioss performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~2.03x.[9]

Figure 5: Altair Radioss performance (top-of-stack)

Computational Fluid Dynamics

Computational Fluid Dynamics (CFD) uses numerical analysis to simulate and analyze fluid flow and how that fluid (liquid or gas) interacts with solids and surfaces, such as the water flow around a boat hull or the aerodynamics of a car body or aircraft fuselage, as well as a wide variety of less obvious uses, including industrial processing and consumer packaged goods. These workloads can be computationally intensive and require substantial resources; however, most CFD workloads are limited by memory bandwidth.

AMD EPYC 9004 processors with AMD 3D V-Cache technology can significantly improve the performance of CFD workloads. With up to 1,152MB of L3 cache, more of the workload’s total working dataset can fit into ultra-fast L3 cache memory situated near the compute cores.

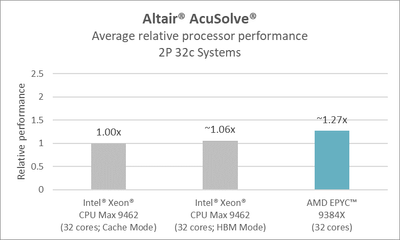

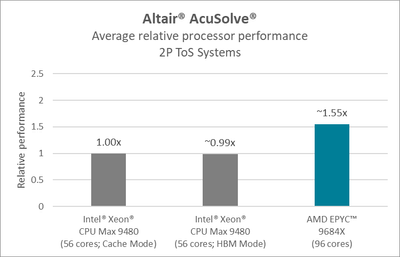

Altair® AcuSolve®

Altair® AcuSolve® is a proven asset for companies looking to explore designs by applying a full range of flow, heat transfer, turbulence, and non-Newtonian material analysis capabilities without the difficulties associated with traditional CFD applications.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.27x.[10]

Figure 6: Altair AcuSolve performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.55x.[11]

Figure 7: Altair AcuSolve performance (top-of-stack)

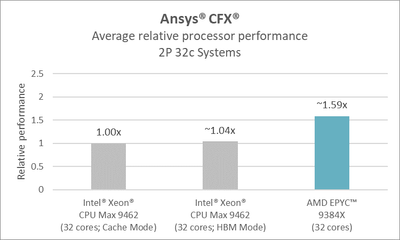

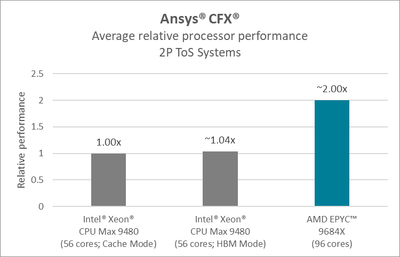

Ansys® CFX®

Ansys® CFX® is a high-performance computational fluid dynamics (CFD) software tool that delivers robust, reliable, and accurate solutions quickly across a wide range of CFD and multiphysics applications.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.59x. The Intel Xeon processor running in HBM mode failed on one of the five benchmarks tested because the model did not fit; its performance vs the same Intel Xeon processor running in Cache mode was calculated against the four successful runs. Both the AMD EPYC processor and Intel Xeon processor running in Cache mode completed all five benchmarks, and that uplift is thus calculated using all five benchmarks. Please see Intel Xeon CPU Max Processor Modes to learn more.[12]

Figure 8: Ansys CFX performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~2.00x. The Intel Xeon processor running in HBM mode failed on one of the five benchmarks tested because the model did not fit; its performance vs the same Intel Xeon processor running in Cache mode was calculated against the four successful runs. Both the AMD EPYC processor and Intel Xeon processor running in Cache mode completed all five benchmarks, and that uplift is thus calculated using all five benchmarks. Please see Intel Xeon CPU Max Processor Modes to learn more.[13]

Figure 9: Ansys CFX performance (top-of-stack)
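The averaging rule described in the bullets above, where a benchmark that did not fit in HBM is left out of the HBM comparison but kept in the Cache-mode comparison, can be sketched as follows. The elapsed times are the 32-core values from reference [12] (SP5-197); `None` stands in for the N/A entry, the names are hypothetical, and the use of an arithmetic mean is an assumption.

```python
from statistics import mean

# 32-core Ansys CFX elapsed times from the SP5-197 reference.
# benchmark: (Xeon Max Cache mode, Xeon Max HBM mode, AMD EPYC 9384X); None = N/A
CFX_ELAPSED = {
    "Airfoil 10": (42.22, 40.29, 24.74),
    "Airfoil 100": (456.07, None, 316.04),
    "Airfoil 50": (206.78, 198.27, 126.18),
    "LeMans Car": (15.55, 15.15, 9.67),
    "Automotive pump": (9.92, 9.64, 6.40),
}


def mean_uplift_vs_cache(elapsed, column):
    """Mean of cache_time / candidate_time over the runs the candidate completed.

    Returns (mean uplift, number of benchmarks included).
    """
    ratios = [row[0] / row[column]
              for row in elapsed.values()
              if row[column] is not None]
    return mean(ratios), len(ratios)


if __name__ == "__main__":
    for label, column in (("HBM mode", 1), ("EPYC 9384X", 2)):
        uplift, count = mean_uplift_vs_cache(CFX_ELAPSED, column)
        print(f"{label}: {uplift:.2f}x over {count} benchmarks")
```

The AMD EPYC average uses all five benchmarks and lands at about 1.59x, while the HBM-mode average is taken over the four runs that completed.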

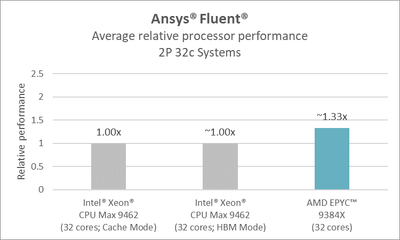

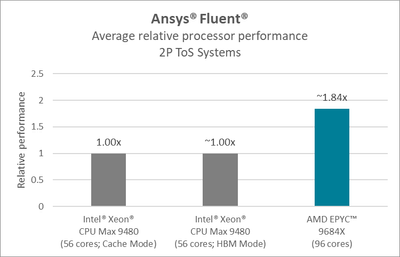

Ansys® Fluent®

Ansys® Fluent® is a fluid simulation application that offers advanced physics modeling capabilities and industry-leading accuracy.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.33x. The Intel Xeon processor running in HBM mode failed on three of the 15 benchmarks tested because the model did not fit; its performance vs the same Intel Xeon processor running in Cache mode was calculated against the 12 successful runs. Both the AMD EPYC processor and Intel Xeon processor running in Cache mode completed all 15 benchmarks, and that uplift is thus calculated using all 15 benchmarks. Please see Intel Xeon CPU Max Processor Modes to learn more.[14]

Figure 10: Ansys Fluent performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.84x. The Intel Xeon processor running in HBM mode failed on three of the 15 benchmarks tested because the model did not fit; its performance vs the same Intel Xeon processor running in Cache mode was calculated against the 12 successful runs. Both the AMD EPYC processor and Intel Xeon processor running in Cache mode completed all 15 benchmarks, and that uplift is thus calculated using all 15 benchmarks. Please see Intel Xeon CPU Max Processor Modes to learn more.[15]

Figure 11: Ansys Fluent performance (top-of-stack)
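One detail worth noting when reading the references: the Fluent results are reported as a Core Solver Rating, where higher is better, whereas most other workloads report elapsed time, where lower is better. A minimal sketch of a direction-aware uplift calculation follows; the function name and its `higher_is_better` parameter are hypothetical, and the sample values come from references [14] (SP5-198) and [12] (SP5-197).

```python
def uplift(baseline: float, candidate: float, higher_is_better: bool) -> float:
    """Return an uplift where >1.0 always means the candidate beat the baseline."""
    if higher_is_better:
        return candidate / baseline   # ratings: a bigger number is faster
    return baseline / candidate       # elapsed time: a smaller number is faster


# aircraft_14m Core Solver Rating (Cache mode vs AMD EPYC 9384X), SP5-198 reference
fluent_uplift = uplift(1949.27, 2584.90, higher_is_better=True)

# Airfoil 10 elapsed time (Cache mode vs AMD EPYC 9384X), SP5-197 reference
cfx_uplift = uplift(42.22, 24.74, higher_is_better=False)

if __name__ == "__main__":
    print(f"Fluent aircraft_14m: {fluent_uplift:.2f}x")
    print(f"CFX Airfoil 10:      {cfx_uplift:.2f}x")
```

Keeping the direction explicit avoids inverting a ratio by accident when mixing rating-based and time-based benchmarks in one comparison.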

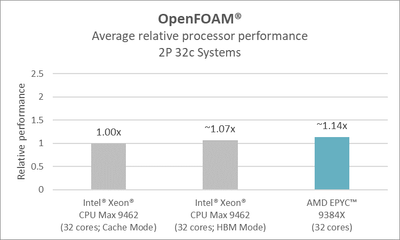

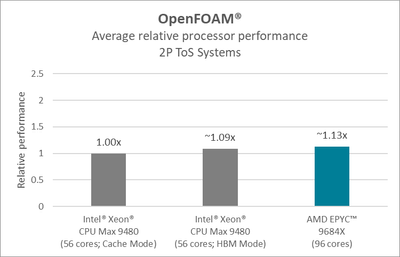

OpenFOAM®

OpenFOAM® is a free, open source CFD software. Its user base includes commercial and academic organizations.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.14x.[16]

Figure 12: OpenFOAM performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.13x.[17]

Figure 13: OpenFOAM performance (top-of-stack)

Molecular Dynamics

Molecular dynamics computationally simulates the physical movements of atoms and molecules. These virtual atoms and molecules interact for a fixed time to show how the system evolves during this period. The most common molecular dynamics method determines these positions by numerically solving Newtonian equations and is most commonly applied to chemistry, materials science, and biophysics problems and experiments.

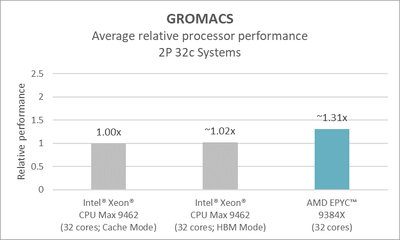

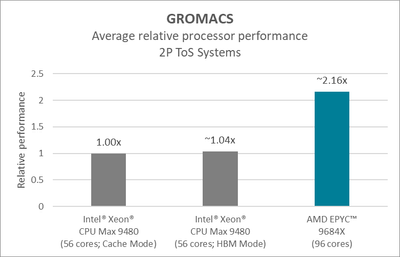

GROMACS

GROMACS is a molecular dynamics application that simulates Newtonian motion equations for systems with anywhere from hundreds to millions of particles. Here again, 4th Gen AMD EPYC processors demonstrate industry-leading performance compared to a rival datacenter processor.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.31x.[18]

Figure 14: GROMACS performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~2.16x.[19]

Figure 15: GROMACS performance (top-of-stack)

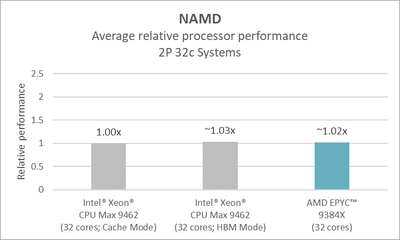

Nanoscale Molecular Dynamics

Nanoscale Molecular Dynamics (NAMD) performs high-performance simulations of large biomolecular systems, including both preparing the systems and analyzing the results. AMD EPYC processors deliver superb performance running NAMD on both bare metal and cloud configurations.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.02x.[20]

Figure 16: NAMD performance (32-core)

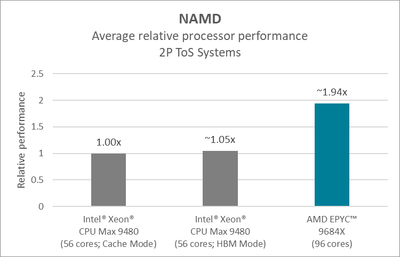

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.94x.[21]

Figure 17: NAMD performance (top-of-stack)

Quantum Chemistry with CP2K

CP2K is a quantum chemistry and solid-state physics application that simulates solid state, liquid, molecular, periodic, material, crystal, and biological systems at an atomic level.

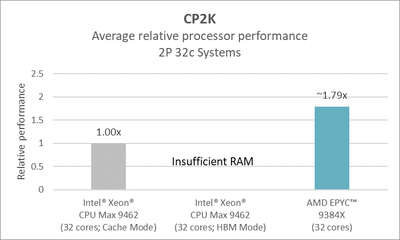

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.79x. This workload was too large to run on the Intel Xeon CPU Max 9462 in HBM mode. I explain why in Intel Xeon CPU Max Processor Modes, above.[22]

Figure 18: CP2K performance (32-core)

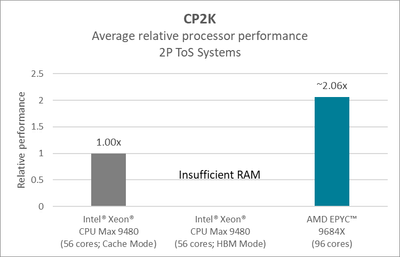

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~2.06x. This workload was too large to run on the Intel Xeon CPU Max 9480 in HBM mode. I explain why in Intel Xeon CPU Max Processor Modes, above.[23]

Figure 19: CP2K performance (top-of-stack)

Weather Forecasting with WRF®

Developed and maintained by the National Center for Atmospheric Research (NCAR), the Weather Research & Forecasting (WRF) model has over 48,000 registered users in over 160 countries. WRF is a flexible and computationally efficient platform for operational forecasting across scales ranging from meters to thousands of kilometers.

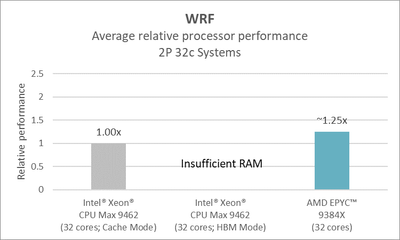

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.25x. This workload was too large to run on the Intel Xeon CPU Max 9462 in HBM mode. I explain why in Intel Xeon CPU Max Processor Modes, above.[24]

Figure 20: WRF performance (32-core)

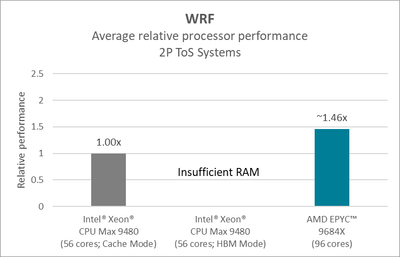

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.46x. This workload was too large to run on the Intel Xeon CPU Max 9480 in HBM mode. I explain why in Intel Xeon CPU Max Processor Modes, above.[25]

Figure 21: WRF performance (top-of-stack)

Seismic Exploration with Shearwater® Reveal®

Seismic exploration consists of sending a “shot” of seismic energy into the earth and then recording how that energy bounces off and is refracted by underground features, such as oil and gas reserves. The shot sends seismic waves into the earth, and a receiving station records the timing and amplitude of the reflected (bounced) and refracted (bent) waves. Elaborate calculations can determine the size, shape, and depth of underground features such as aquifers and oil and gas reservoirs. Seismic surveys are used for a variety of purposes, including scouting landfills, finding underground aquifers, and helping predict how a given area will behave during earthquakes, which can inform building codes that help save lives.

Shearwater® Reveal® is used for time and depth processing and imaging in both land and marine environments. It is primarily licensed to oil and gas companies but is also used to process seismic data for shallow hazard mapping, geothermal projects, carbon capture, deep earth research, water resources, and the nuclear industry.

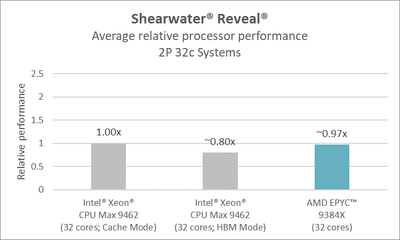

- 32-core performance: A 2P AMD EPYC 9384X system delivered performance roughly on par with that of a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode.[26]

Figure 22: Shearwater Reveal performance (32-core)

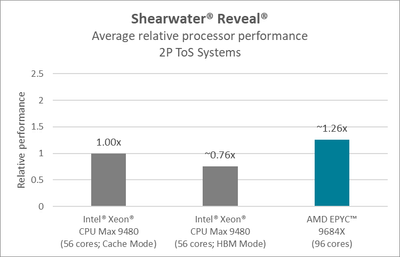

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.26x.[27]

Figure 23: Shearwater Reveal performance (top-of-stack)

Reservoir Simulation

As described above, seismic exploration sends waves into the earth to identify and characterize underground features, such as oil and gas reservoirs. Reservoir engineers must then employ additional computational tools to determine how these underground fluids will move and interact. Proper reservoir simulation promotes the efficient use of energy resources by maximizing the energy extracted over the productive lifespan of the reservoir.

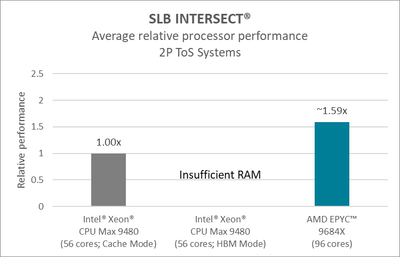

SLB INTERSECT®

SLB INTERSECT® is a high-resolution reservoir simulator that combines physics and performance for reservoir models. It offers accurate, efficient field development planning and reservoir management, including black oil waterflood models, thermal SAGD injection schemes, and handling of unstructured grids.

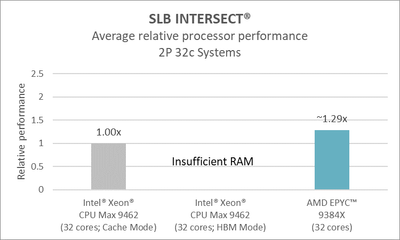

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.29x. This workload was too large to run on the Intel Xeon CPU Max 9462 in HBM mode. I explain why in Intel Xeon CPU Max Processor Modes, above.[28]

Figure 24: SLB INTERSECT performance (32-core)

- Top-of-stack performance: A 2P AMD EPYC 9684X system outperformed a comparable 2P Intel Xeon CPU Max 9480 system running in Cache mode by ~1.59x. This workload was too large to run on the Intel Xeon CPU Max 9480 in HBM mode. I explain why in Intel Xeon CPU Max Processor Modes, above.[29]

Figure 25: SLB INTERSECT performance (top-of-stack)

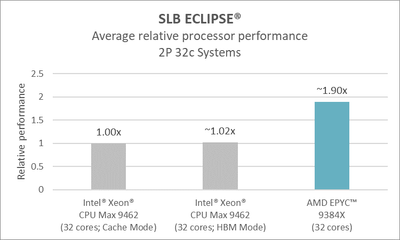

SLB ECLIPSE®

SLB ECLIPSE® is a feature-rich, comprehensive industry-reference reservoir simulator. ECLIPSE offers a complete and robust set of numerical solutions for fast and accurate prediction of dynamic behavior for all types of reservoirs and development schemes thanks to its extensive capabilities, robustness, speed, parallel scalability, and superb platform coverage.

- 32-core performance: A 2P AMD EPYC 9384X system outperformed a comparable 2P Intel Xeon CPU Max 9462 system running in Cache mode by ~1.90x.[30]

Figure 26: SLB ECLIPSE performance (32-core)

Conclusion

As presented above, the performance impact that AMD EPYC 9004 processors with AMD 3D V-Cache technology can deliver for technical computing workloads is impressive. If you are looking to minimize your time to solution, the highest core-count processors deliver exceptional performance per compute node. All these workloads are very complex and solve very challenging problems. Further, the software licensing costs can be high, especially because software is often licensed on a 32-core basis. Those looking to maximize the value of a 32-core software license should consider mid-core count AMD EPYC 9004 processors with AMD 3D V-Cache technology to deliver a balance of exceptionally high 32-core and per-node performance.

4th Gen AMD EPYC processors deliver the performance and efficiency needed to tackle today’s most challenging workloads. 4th Gen AMD EPYC processors with AMD 3D V-Cache technology extend the proven performance of AMD 3D V-Cache technology to this generation, delivering exceptional performance for many memory-bandwidth-bound workloads.

AMD offers guidance around the best CPU tuning practices to achieve optimal performance on these key workloads when deploying 4th Gen AMD EPYC processors for your environment. Please visit the AMD Documentation Hub to learn more.

The launch of 4th Gen AMD EPYC processors in November of 2022 marked the debut of the world’s highest-performance server processor that delivers optimal TCO across workloads, industry-leading x86 energy efficiency[3][4] to help support sustainability goals, and Confidential Computing across a rich ecosystem of solutions. The advent of AMD EPYC 97x4 processors and AMD EPYC 9004 processors with AMD 3D V-Cache™ technology expands the line of 4th Gen AMD EPYC processors with new processor models optimized for cloud infrastructure and memory-bound workloads, respectively.

Other key AMD technologies include:

- AMD Instinct™ accelerators are designed to power discoveries at exascale to enable scientists to tackle our most pressing challenges.

- AMD Pensando™ solutions deliver highly programmable software-defined cloud, compute, networking, storage, and security features wherever data is located, helping to offer improvements in productivity, performance and scale compared to current architectures with no risk of lock-in.

- AMD FPGAs and Adaptive SoCs offer highly flexible and adaptive FPGAs, hardware adaptive SoCs, and the Adaptive Compute Acceleration Platform (ACAP) processing platforms that enable rapid innovation across a variety of technologies from the endpoint to the edge to the cloud.

Raghu Nambiar is a Corporate Vice President of Data Center Ecosystems and Solutions for AMD. His postings are his own opinions and may not represent AMD’s positions, strategies, or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

References

- GD-204: “Technical Computing” or “Technical Computing Workloads” as defined by AMD can include: electronic design automation, computational fluid dynamics, finite element analysis, seismic tomography, weather forecasting, quantum mechanics, climate research, molecular modeling, or similar workloads.

- GD-183: AMD Infinity Guard features vary by EPYC™ Processor generations. Infinity Guard security features must be enabled by server OEMs and/or Cloud Service Providers to operate. Check with your OEM or provider to confirm support of these features. Learn more about Infinity Guard at https://www.amd.com/en/technologies/infinity-guard.

- SP5-072: As of 1/11/2023, a 4th Gen EPYC 9654 powered server has highest overall scores in key industry-recognized energy efficiency benchmarks SPECpower_ssj®2008, SPECrate®2017_int_energy_base and SPECrate®2017_fp_energy_base. See details at https://www.amd.com/en/claims/epyc4#SP5-072.

- SPCTCO-002A: To deliver 10,000 units of integer performance, a server powered by 2P AMD EPYC 96 core 9654 CPUs takes an estimated 59% fewer servers (7 AMD servers vs 17 Intel servers), 46% less power, and a 48% lower 3-yr TCO than a 2P server based on the 40 core Intel Xeon Platinum 8380 CPUs. The 2P EPYC 96 core CPU solution also provides estimated greenhouse gas emissions savings equivalent to 145,443 pounds of coal not burned in the USA over 3 years and carbon sequestration equivalent to 53 acres of forest annually in the USA.

- SP5-221: AMD testing as of 11/03/2023 shows that a 2P AMD EPYC™ 9384X system outperforms a 2P Intel® Xeon® 9462 Max (Cache mode) by the following aggregate average amounts running the STREAM 5.10 add, copy, scale, and triad micro-benchmarks in scaling tests on under-subscribed 32-core systems where not all cores are performing memory operations at the same time. Scaling tests require manually setting the number of threads and placement to ensure even distribution of resources when all cores aren't allocated to a job. AMD tested various thread combinations, equally distributing them between NUMA domains wherever possible. Each system tested has 8 NUMA domains, 1 thread ran on a single CPU, and 4 threads were evenly split between 2 CPUs. Performance uplifts are normalized to the Intel® Xeon® 9462 MAX in Cache mode = 1.00x:

- 1 thread: 9462 (HBM) = ~0.97x, 9384X = ~3.60x

- 4 threads: 9462 (HBM) = ~1.32x, 9384X = ~3.21x

- 8 threads: 9462 (HBM) = ~1.28x, 9384X = ~2.99x

- 16 threads: 9462 (HBM) = ~1.31x, 9384X = ~2.32x

- 32 threads: 9462 (HBM) = ~1.24x, 9384X = ~1.61x

- 64 threads: 9462 (HBM) = ~1.54x, 9384X = ~1.30x

See footnote 31 for 32-core system configuration information.

- SP5-194: AMD testing as of 10/24/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select tests on Ansys® LS-DYNA® 2022 R2. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- 3 Cars: 884.00, 861.67, 424.00 (1.00x, ~1.03x, ~2.09x)

- Neon: 73.33, 71.67, 44.00 (1.00x, ~1.02x, ~1.67x)

- ODB 10m: 17424.33, 16721.00, 12383.00 (1.00x, ~1.04x, ~1.41x)

See footnote 31 for 32-core system configuration information.

- SP5-208: AMD testing as of 10/25/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select Ansys® LS-DYNA® 2022 R2 benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- 3 Cars: 693.00, 668.00, 234.67 (1.00x, ~1.04x, ~2.95x)

- Neon: 59.33, 56.67, 28.67 (1.00x, ~1.05x, ~2.07x)

- ODB 10m: 12855.67, 12903.67, 6656.67 (1.00x, ~1.00x, ~1.93x)

See footnote 32 for top-of-stack system configuration information.

- SP5-195: AMD testing as of 10/24/2023. The detailed results show average elapsed times for 2P Intel Xeon 9462 Max in Cache mode, 2P Intel Xeon 9462 Max in HBM mode, and 2P AMD EPYC 9384X running select tests of the Altair® Radioss™ 2022.2 benchmark. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- rad-t10m: 8942.33, 8600.03, 7550.45 (1.00x, ~1.04x, ~1.18x)

- rad-venza: 3965.51, 3804.18, 2927.74 (1.00x, ~1.04x, ~1.35x)

- rad-neon: 1423.47, 1362.90, 892.62 (1.00x, ~1.04x, ~1.60x)

- rad-dropsander: 134.28, 129.74, 86.71 (1.00x, ~1.04x, ~1.55x)

See footnote 31 for 32-core system configuration information.

- SP5-209: AMD testing as of 10/25/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select Altair® Radioss™ 2022.2 benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- rad-t10m: 6971.18, 6822.81, 4060.65 (1.00x, ~1.02x, ~1.72x)

- rad-venza: 3126.03, 2958.59, 1571.85 (1.00x, ~1.06x, ~1.99x)

- rad-neon: 1190.64, 1182.02, 458.57 (1.00x, ~1.01x, ~2.60x)

- rad-dropsander: 112.54, 107.95, 61.62 (1.00x, ~1.04x, ~1.83x)

See footnote 32 for top-of-stack system configuration information.

- SP5-196: AMD testing as of 10/24/2023. The detailed results show average elapsed times for 2P Intel Xeon 9462 Max in Cache mode, 2P Intel Xeon 9462 Max in HBM mode, and 2P AMD EPYC 9384X running the acus-in benchmark of Altair® AcuSolve® 2022.2. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- acus-in: 2303.93, 2181.25, 1819.19 (1.00x, ~1.06x, ~1.27x)

See footnote 31 for 32-core system configuration information.

- SP5-210: AMD testing as of 10/21/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running the Altair® AcuSolve® 2022.2 acus-in benchmark. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- acus-in: 2194.8653, 2213.2167, 1412.0037 (1.00x, ~0.99x, ~1.55x)

See footnote 32 for top-of-stack system configuration information.

- SP5-197: AMD testing as of 10/25/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select tests on Ansys® CFX® 2023 R1. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- Airfoil 10: 42.22, 40.29, 24.74 (1.00x, ~1.05x, ~1.71x)

- Airfoil 100: 456.07, N/A*, 316.04 (1.00x, N/A*, ~1.44x)

- Airfoil 50: 206.78, 198.27, 126.18 (1.00x, ~1.04x, ~1.64x)

- LeMans Car: 15.55, 15.15, 9.67 (1.00x, ~1.03x, ~1.61x)

- Automotive pump: 9.92, 9.64, 6.40 (1.00x, ~1.03x, ~1.55x)

*The Airfoil 100 benchmark did not fit in the available RAM when running the Intel Xeon 9462 MAX processor in HBM mode and was not included when calculating average HBM performance. Airfoil 100 was omitted from Xeon 9462 Cache results when comparing average performance to Xeon 9462 HBM.

See footnote 31 for 32-core system configuration information.

- SP5-211: AMD testing as of 10/25/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select Ansys® CFX® 2023 R1 benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- Airfoil 10: 31.31, 29.69, 16.69 (1.00x, ~1.05x, ~1.88x)

- Airfoil 100: 339.30, N/A*, 203.47 (1.00x, N/A*, ~1.67x)

- Airfoil 50: 156.07, 149.69, 92.02 (1.00x, ~1.04x, ~1.70x)

- LeMans Car: 11.35, 10.91, 4.49 (1.00x, ~1.04x, ~2.53x)

- Automotive pump: 8.04, 7.82, 3.61 (1.00x, ~1.03x, ~2.23x)

*The Airfoil 100 benchmark did not fit in the available RAM when running the Intel Xeon 9480 Max processor in HBM mode and was not included when calculating average HBM performance. Airfoil 100 was omitted from Xeon 9480 Cache results when comparing average performance to Xeon 9480 HBM.

See footnote 32 for top-of-stack system configuration information. - SP5-198: AMD testing as of 10/25/2023. The detailed results show the average Core Solver Rating for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select tests on Ansys® Fluent® 2023 R1. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- aircraft_14m: 1949.27, 2022.67, 2584.90 (1.00x, ~1.04x, ~1.33x)

- aircraft_2m: 14706.40, 15091.90, 21159.90 (1.00x, ~1.03x, ~1.44x)

- combustor_12m: 428.93, 436.90, 540.47 (1.00x, ~1.02x, ~1.26x)

- combustor_71m: 53.63, N/A*, 67.47 (1.00x, N/A*, ~1.26x)

- exhaust_system_33m: 652.10, 533.23, 790.33 (1.00x, ~0.82x, ~1.21x)

- f1_racecar-140m: 116.07, N/A*, 145.53 (1.00x, N/A*, ~1.25x)

- fluidized_bed_2m: 7053.13, 7215.10, 8705.43 (1.00x, ~1.02x, ~1.23x)

- ice_2m: 562.00, 568.77, 772.60 (1.00x, ~1.01x, ~1.38x)

- landing_gear_15m: 703.97, 617.80, 832.40 (1.00x, ~0.88x, ~1.18x)

- LeMans_6000_16m: 796.00, 830.13, 1030.80 (1.00x, ~1.04x, ~1.30x)

- oil_rig_7m: 1811.57, 1891.67, 2198.50 (1.00x, ~1.04x, ~1.21x)

- f1_racecar_280: 78.97, N/A*, 106.33 (1.00x, N/A*, ~1.35x)

- rotor_3m: 10835.20, 11245.23, 14664.87 (1.00x, ~1.04x, ~1.35x)

- sedan_4m: 12674.83, 13041.50, 18614.50 (1.00x, ~1.03x, ~1.47x)

- pump_2m: 8429.90, 8801.37, 14481.53 (1.00x, ~1.04x, ~1.72x)

*The combustor_71m, f1_racecar-140m, and f1_racecar_280 benchmarks did not fit in the available RAM when running the Intel Xeon 9462 Max processor in HBM mode and were not included when calculating average HBM performance. The combustor_71m, f1_racecar-140m, and f1_racecar_280 benchmarks were also omitted from Xeon 9462 Cache results when comparing average performance to Xeon 9462 HBM.
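For rating-style metrics such as Core Solver Rating (and ns/day in the molecular dynamics footnotes), a higher value is better, so the uplift is the candidate value divided by the Cache-mode baseline rather than the reverse. Below is a minimal sketch of that calculation with N/A entries passed through unchanged; the helper name is a hypothetical chosen for illustration.

```python
from typing import Optional


def uplift_from_rating(baseline: float, candidate: Optional[float]) -> Optional[float]:
    """Uplift for a higher-is-better metric; None (N/A) stays None."""
    if candidate is None:
        return None
    if baseline <= 0:
        raise ValueError("baseline rating must be positive")
    return round(candidate / baseline, 2)


# (Cache, HBM, EPYC) Core Solver Ratings quoted in SP5-198; None marks N/A.
ratings = {
    "rotor_3m": (10835.20, 11245.23, 14664.87),
    "combustor_71m": (53.63, None, 67.47),
}

for test, (cache, hbm, epyc) in ratings.items():
    print(test, uplift_from_rating(cache, hbm), uplift_from_rating(cache, epyc))
# rotor_3m 1.04 1.35
# combustor_71m None 1.26
```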

See footnote 31 for 32-core system configuration information. - SP5-212: AMD testing as of 10/25/2023. The detailed results show the average Core Solver Rating for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select Ansys® Fluent® 2023 R1 benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- aircraft_14m: 2618.90, 2755.27, 4278.97 (1.00x, ~1.05x, ~1.63x)

- aircraft_2m: 19489.27, 20369.50, 43200.00 (1.00x, ~1.05x, ~2.22x)

- combustor_12m: 597.53, 614.90, 1124.53 (1.00x, ~1.03x, ~1.88x)

- combustor_71m: 76.93, N/A*, 97.00 (1.00x, N/A*, ~1.26x)

- exhaust_system_33m: 914.17, 602.67, 1353.00 (1.00x, ~0.66x, ~1.48x)

- f1_racecar-140m: 153.20, N/A*, 196.40 (1.00x, N/A*, ~1.28x)

- fluidized_bed_2m: 9216.40, 9573.57, 18288.77 (1.00x, ~1.04x, ~1.98x)

- ice_2m: 685.27, 705.77, 1280.03 (1.00x, ~1.03x, ~1.87x)

- landing_gear_15m: 966.07, 901.07, 1453.73 (1.00x, ~0.93x, ~1.51x)

- LeMans_6000_16m: 1114.57, 1163.50, 2245.43 (1.00x, ~1.04x, ~2.02x)

- oil_rig_7m: 2513.47, 2608.30, 5166.00 (1.00x, ~1.04x, ~2.06x)

- f1_racecar_280: 99.23, N/A*, 147.37 (1.00x, N/A*, ~1.49x)

- rotor_3m: 13571.30, 14281.00, 30052.20 (1.00x, ~1.05x, ~2.21x)

- sedan_4m: 17814.40, 18647.60, 40501.20 (1.00x, ~1.05x, ~2.27x)

- pump_2m: 11677.90, 12328.23, 29042.00 (1.00x, ~1.06x, ~2.49x)

*The combustor_71m, f1_racecar-140m, and f1_racecar_280 benchmarks did not fit in the available RAM when running the Intel Xeon 9480 Max processor in HBM mode and were not included when calculating average HBM performance. The combustor_71m, f1_racecar-140m, and f1_racecar_280 benchmarks were omitted from Xeon 9480 Cache results when comparing average performance to Xeon 9480 HBM.

See footnote 32 for top-of-stack system configuration information. - SP5-199: AMD testing as of 10/25/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select tests on OpenFOAM® 2212. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- ofoam-1305252: 1113.3067, 1041.2533, 1056.7133 (1.00x, ~1.07x, ~1.05x)

- ofoam-1084646: 737.43, 688.7133, 638.26 (1.00x, ~1.07x, ~1.16x)

- ofoam-1004040: 528.35, 497.5567, 439.0633 (1.00x, ~1.06x, ~1.20x)

See footnote 31 for 32-core system configuration information. - SP5-213: AMD testing as of 10/25/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select OpenFOAM® 2212 benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- ofoam-1305252: 841.0733, 760.71, 857.7833 (1.00x, ~1.11x, ~0.98x)

- ofoam-1004040: 415.5233, 380.85, 329.2267 (1.00x, ~1.09x, ~1.26x)

- ofoam-1084646: 587.4633, 543.97, 507.9167 (1.00x, ~1.08x, ~1.16x)

See footnote 32 for top-of-stack system configuration information. - SP5-201: AMD testing as of 10/24/2023. The detailed results show average ns/day (nanoseconds per day) for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select tests on GROMACS 2021.20. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- benchpep: 1.0197, 1.0367, 1.271 (1.00x, ~1.02x, ~1.25x)

- gmx_water1536k_pme: 9.724, 9.807, 13.1857 (1.00x, ~1.01x, ~1.36x)

See footnote 31 for 32-core system configuration information. - SP5-215: AMD testing as of 10/25/2023. The detailed results show average ns/day (nanoseconds per day) for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running the GROMACS 2021.20 benchpep and gmx_water1536k_pme benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- benchpep: 1.435, 1.4973, 2.9677 (1.00x, ~1.04x, ~2.07x)

- gmx_water1536k_pme: 12.9913, 13.43, 29.2613 (1.00x, ~1.03x, ~2.25x)

See footnote 32 for top-of-stack system configuration information. - SP5-202: AMD testing as of 10/24/2023. The detailed results show average ns/day (nanoseconds per day) for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select tests on NAMD 2.15alpha1. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- namd-stmv20m: 0.1423, 0.1487, 0.1683 (1.00x, ~1.05x, ~1.18x)

- namd-stmv: 2.123, 2.1917, 2.124 (1.00x, ~1.03x, ~1.00x)

- namd-f1atpase: 7.1463, 7.312, 6.475 (1.00x, ~1.02x, ~0.91x)

- namd-apoa1: 23.018, 23.2053, 22.5977 (1.00x, ~1.01x, ~0.98x)

See footnote 31 for 32-core system configuration information. - SP5-216: AMD testing as of 10/24/2023. The detailed results show average ns/day (nanoseconds per day) for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running select NAMD 2.15alpha1 benchmarks. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- namd-stmv20m: 0.1873, 0.2027, 0.5287 (1.00x, ~1.08x, ~2.82x)

- namd-stmv: 2.8557, 3.009, 3.792 (1.00x, ~1.05x, ~1.33x)

- namd-f1atpase: 9.515, 9.9377, 15.3293 (1.00x, ~1.04x, ~1.61x)

- namd-apoa1: 29.7133, 30.7457, 59.1917 (1.00x, ~1.04x, ~1.99x)

See footnote 32 for top-of-stack system configuration information. - SP5-205: AMD testing as of 10/24/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode and the AMD EPYC running the CP2K h2o-dft-ls benchmark. The Intel Xeon processor in HBM mode was not able to run this benchmark because the model was too large to fit into available memory. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- h2o-dft-ls: 2499.3333, 1393.3333 (1.00x, ~1.79x)

See footnote 31 for 32-core system configuration information. - SP5-218: AMD testing as of 10/18/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode and the AMD EPYC running the CP2K 7.1 h2o-dft-ls benchmark. The Intel Xeon processor in HBM mode was not able to run this benchmark because the model was too large to fit into available memory. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- h2o-dft-ls: 1896.3333, 922.3333 (1.00x, ~2.06x)

See footnote 32 for top-of-stack system configuration information. - SP5-207: AMD testing as of 10/24/2023. The detailed results show average Mean Time/Step for the Intel Xeon in Cache mode and the AMD EPYC running the WRF 4.2.1 conus-2.5km model. The Intel Xeon processor in HBM mode was not able to run this benchmark because the model was too large to fit into available memory. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- wrf-2.5: 2.266, 1.8193 (1.00x, ~1.25x)

See footnote 31 for 32-core system configuration information. - SP5-220: AMD testing as of 10/17/2023. The detailed results show average Mean Time/Step for the Intel Xeon in Cache mode and the AMD EPYC running the WRF 4.2.1 conus-2.5km model. The Intel Xeon processor in HBM mode was not able to run this benchmark because the model was too large to fit into available memory. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- wrf-2.5: 1.754, 1.2027 (1.00x, ~1.46x)

See footnote 32 for top-of-stack system configuration information. - SP5-206: AMD testing as of 10/21/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running the Shearwater® Reveal® 5.1 rtm_reveal benchmark. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- rtm_reveal: 4239.12, 5315.51, 4381.93 (1.00x, ~0.80x, ~0.97x)

See footnote 31 for 32-core system configuration information. - SP5-219: AMD testing as of 10/24/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running the Shearwater® Reveal® 5.1 rtm_reveal benchmark. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- rtm_reveal: 3676.9433, 4865.6667, 2908.7133 (1.00x, ~0.76x, ~1.26x)

See footnote 32 for top-of-stack system configuration information. - SP5-204: AMD testing as of 10/24/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode and the AMD EPYC running the SLB INTERSECT® 2021.4 rsm_ix benchmark. The Intel Xeon processor in HBM mode was not able to run this benchmark because the model was too large to fit into available memory. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- rsim_ix: 2039, 1578.6667 (1.00x, ~1.29x)

See footnote 31 for 32-core system configuration information. - SP5-217: AMD testing as of 10/06/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode and the AMD EPYC running the SLB INTERSECT® 2021.4 rsm_ix benchmark. The Intel Xeon processor in HBM mode was not able to run this benchmark because the model was too large to fit into available memory. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- rsim_ix: 1805, 1136.3333 (1.00x, ~1.59x)

See footnote 32 for top-of-stack system configuration information. - SP5-203: AMD testing as of 10/24/2023. The detailed results show average elapsed times for the Intel Xeon in Cache mode, Intel Xeon in HBM mode, and the AMD EPYC running the SLB ECLIPSE® 2021.4 comp_2mm benchmark. Uplifts normalized to the Intel Xeon in Cache mode appear in parentheses.

- comp_2mm: 1847.3267, 1805.12, 970.0333 (1.00x, ~1.02x, ~1.90x)

See footnote 31 for 32-core system configuration information. - 32-core system configurations: AMD: 2 x 32-core AMD EPYC 9384X; Memory: 1.5 TB RAM (24 x 64 GB DDR5-4800); NIC: 25Gb (MT4119 – MCX512A-ACAT) CX512A -ConnectX-5 fw 16.35.2000; Infiniband: 200Gb HDR (MT4123 – MCX653106A-HDAT) ConnectX-6 VPI fw 20.35.2000; Storage: 1 x 1.9 TB SAMSUNG MZQL21T9HCJR-00A07 NVMe; BIOS: 1007D; BIOS options: SMT=OFF, NPS=4, Determinism=Power; OS: RHEL 9.2 kernel 5.14.0-284.11.1.el9_2.x86_64; Kernel options: amd_iommu=on iommu=pt mitigations=off; Runtime options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor – Performance, Disable C2 States. Intel: 2 x 32-core Intel Xeon 9462 Max; Memory (Cache mode): 1.0 TB RAM (16 x 64 GB DDR5-4800); Memory (HBM mode): none; NIC: 25Gb (MT4119 – MCX512A-ACAT) CX512A -ConnectX-5 fw 16.35.2000; Infiniband: 200Gb HDR (MT4123 – MCX653106A-HDAT) ConnectX-6 VPI fw 20.35.2000; Storage: 1 x 1.9 TB SAMSUNG MZQL21T9HCJR-00A07 NVMe; BIOS: 1.3; Hyperthreading=OFF, Profile=Maximum Performance; OS: RHEL 9.2 kernel 5.14.0-284.11.1.el9_2.x86_64; Kernel options: processor.max_cstate=1 intel_idle.max_cstate=0 iommu=pt mitigations=off; Runtime options: NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor=Performance. Results may vary based on system configurations, software versions and BIOS settings.

- Top-of-stack system configurations: AMD: 2 x 96-core AMD EPYC 9684X; Memory: 1.5 TB RAM (24 x 64 GB DDR5-4800); NIC: 25Gb (MT4119 - MCX512A-ACAT) CX512A -ConnectX-5 fw 16.35.2000; Infiniband: 200Gb HDR (MT4123 - MCX653106A-HDAT) ConnectX-6 VPI fw 20.35.2000; Storage: 1 x 1.9 TB SAMSUNG MZQL21T9HCJR-00A07 NVMe; BIOS: 1007D; BIOS options: SMT=OFF, NPS=4, Determinism=Power; OS: RHEL 9.2 kernel 5.14.0-284.11.1.el9_2.x86_64; Kernel options: amd_iommu=on iommu=pt mitigations=off; Runtime options: Clear caches, NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor - Performance, Disable C2 States. Intel: 2 x 56-core Intel Xeon 9480 Max; Memory (Cache mode): 1.0 TB RAM (16 x 64 GB DDR5-4800); Memory (HBM mode): none; NIC: 25Gb (MT4119 - MCX512A-ACAT) CX512A -ConnectX-5 fw 16.35.2000; Infiniband: 200Gb HDR (MT4123 - MCX653106A-HDAT) ConnectX-6 VPI fw 20.35.2000; Storage: 1 x 1.9 TB SAMSUNG MZQL21T9HCJR-00A07 NVMe; BIOS: 1.3; Hyperthreading=OFF, Profile=Maximum Performance; OS: RHEL 9.2 kernel 5.14.0-284.11.1.el9_2.x86_64; Kernel options: processor.max_cstate=1 intel_idle.max_cstate=0 iommu=pt mitigations=off; Runtime options: NUMA Balancing 0, randomize_va_space 0, THP ON, CPU Governor=Performance. Results may vary based on system configurations, software versions and BIOS settings.