- AMD Community

- Support Forums

- PC Drivers & Software


Rx 6950xt low GPU usage in games at 1080p (50-65% usage)

I own an ASRock RX 6950 XT OC Formula, a Ryzen 9 5900X CPU, an MSI MPG B550 GAMING PLUS, 2 x G.Skill Ripjaws V DDR4 4266 MHz CL19 and a Corsair 850 W Gold PSU. The problem is that in some games I get the same or worse performance than with my previous GPU, a GTX 1080 Ti. In game at 1080p I get low GPU usage (40-70%), low CPU usage (50% max) and low to normal RAM usage. This happens in most of the games I play, but not all of them.

For example, in The Witcher 3, Assassin's Creed Origins and Odyssey the GPU usage sits at 60% in game with 70-90 FPS, and the GPU draws around 150-180 W. Once I pause the game, usage goes up to 85-95% with 240 FPS and the card draws 250-320 W, which is the performance I would expect to be getting in game. This happens in Halo MCC as well, and my GTX 1080 Ti outperformed my RX 6950 XT in those games, which makes no sense. However, in games like Halo Infinite and Days Gone the performance is amazing: both run at over 200 FPS with 90-97% usage and 250-320 W power draw.

What is going on? I need to know how I can fix this, as my GPU is greatly underutilized. I really hope this is a driver issue, since the RX 6950 XT is a new GPU, and that they'll release better drivers that make full use of this card in these games. Otherwise it's such a waste buying this GPU if I can't use it to its full potential.

---

The issue is twofold.

1080P is generally known as a CPU-dependent resolution, so in some games your performance at 1080P may be no better than with an older card: the CPU can't feed the GPU fast enough, which results in low GPU usage and, in turn, lower than optimal FPS.
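A simplified way to picture the CPU/GPU relationship described above: each frame needs some CPU time (game logic plus draw-call submission) and some GPU time, and because the two mostly overlap, the slower side sets the frame rate while the faster side waits. The sketch below assumes perfect overlap and uses made-up millisecond figures purely for illustration (the names `FrameCost` and `estimate` are hypothetical); real engines and drivers are messier.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FrameCost:
    cpu_ms: float  # CPU time per frame (game logic + draw-call submission)
    gpu_ms: float  # GPU time per frame (rendering the image)


def estimate(frame: FrameCost) -> tuple[float, float, str]:
    """Return (fps, gpu_usage_percent, bottleneck) under a simple
    pipelined model where CPU and GPU work fully overlap (an assumption)."""
    frame_ms = max(frame.cpu_ms, frame.gpu_ms)  # the slower side sets the pace
    fps = 1000.0 / frame_ms
    gpu_usage = 100.0 * frame.gpu_ms / frame_ms  # share of each frame the GPU is busy
    bottleneck = "CPU" if frame.cpu_ms > frame.gpu_ms else "GPU"
    return fps, gpu_usage, bottleneck


if __name__ == "__main__":
    # Hypothetical numbers, not measurements from this thread.
    scenarios = {
        "in game, heavy CPU load": FrameCost(cpu_ms=12.5, gpu_ms=7.0),
        "light CPU load (e.g. pause menu)": FrameCost(cpu_ms=3.0, gpu_ms=4.0),
    }
    for name, cost in scenarios.items():
        fps, usage, limit = estimate(cost)
        print(f"{name}: {fps:.0f} FPS, GPU usage {usage:.0f}%, {limit}-bound")
```

Under this model, a lighter CPU load raises GPU usage because it removes CPU time per frame, not because the GPU gets faster: in the CPU-bound case the GPU sits idle for part of every frame, waiting for the next one to be prepared.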

There are usually two ways around this. The first is having a CPU fast enough to "feed" your GPU. While the 5900X you have is a really good CPU, it will still struggle at lower resolutions with any high-end GPU, whether it's a 6950XT or a 3090. I was in a similar situation with a 5800X that left my 6950XT with low GPU usage in most games when I played at 1080P, mainly competitively on my 240Hz monitor. I switched to a 5800X3D and my GPU usage went up massively in most games, which in turn meant much higher FPS.

The second "fix" is running a game in DX12 or Vulkan wherever possible, as both tend to make much better use of the available hardware.

Outside of that, some games are just poorly coded and won't take advantage of the available hardware.

---

I have the same problem at 3440x1440 and at 4K in DX11 games.

---

The issue is not the CPU, I am 100% sure. I used the same CPU with my GTX 1080 and the performance was better with that. After some digging I found out that the issue is AMD's poor optimization for anything lower than DirectX 12. My GPU gets 150 FPS at Ultra in Assassin's Creed Valhalla, which uses DirectX 12, but in AC Origins and Odyssey, which use DirectX 11, I get 75 FPS at 40% usage. Using DXVK in DX11 games seemed to boost performance by 45 FPS. I hope AMD optimizes better for their new graphics card, since it's such a strong card and is so underutilized in some older games. And if the CPU was the issue, then why do I get 250 FPS in Halo Infinite, which uses DX12, and 150 FPS in Halo MCC, which uses DX11?

---

Same boat, man. I race in GTA 5 and my 1080 Ti was better. DX12 games run very well, but I play GTA every day, so it's very sad.

---

Coldshot, could you do me a favour, please? Run the in-game benchmarks of Origins and Odyssey, both completely maxed out at 1080P, and report your numbers. When I get home from work tonight I'll be able to compare and let you know what's what: I have a 5800X in my HTPC, which should have the same gaming performance as your 5900X, so I'll be able to compare those numbers with the 5800X3D.

---

Well, I just ran AC: Origins and AC: Odyssey, both maxed out at 1080P. Origins gave me a 133 FPS average in the benchmark and Odyssey gave me a 129 FPS average.

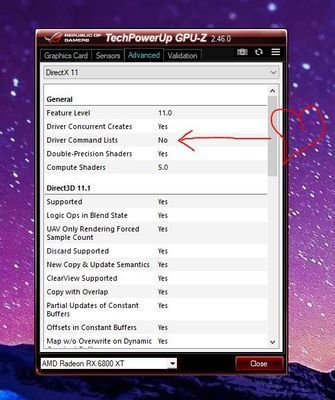

GPU usage was bouncing around between 50% and 80%+. While the CPU and its cache do play a part, as Psyxeon mentioned in his other thread, this could be helped by the AMD driver team implementing "driver command lists", which are completely missing from the DX11 portion of AMD's driver for some reason.

EDIT

Just reading around, especially Hardware Unboxed on YouTube: they did a driver comparison between AMD and Nvidia last year and found that Nvidia does in fact use driver command lists to schedule draw calls and get the best performance possible under DX11 on their hardware. Not the best overall performance versus other vendors, but it allows their hardware to stretch its legs fully.

It would be nice if someone from AMD (hint hint, cough cough) could clarify why this is left out of AMD's drivers when it does result in better performance on the competition's hardware, or whether it would result in lower or negligible performance gains on AMD hardware.

---

They know!

It's an old problem, but AMD decided that DX12 is the future, WOOOOOW, and forgot about DX9, DX10 and DX11. AMD drivers are missing support for driver command lists.

Check this topic!

This is why I'm disappointed.

My previous build (Nvidia 1080 Ti + 5900X + 32 GB Ballistix) didn't have any problems with GTA at night.

After upgrading my GPU (now 6800 XT + 5900X + 32 GB Ballistix): low FPS and low GPU usage when looking at the city at nighttime.

This video illustrates the issue perfectly (Not my video)

https://www.youtube.com/watch?v=x21bQ18-Uos&ab_channel=RezaNauma

My issue is here.

---

I'll do that and upload the results.

---

Dicehunter has summed this up perfectly. Without a 5800X3D, you will be CPU bound in many scenarios at 1080P with a 6950 XT. The GPU is simply too fast for most current-generation CPUs to keep its shaders busy with work at 1080P.

That said, the games you referenced are all older games, built long before this hardware was even available to purchase. The two games you cite as working very well with no bottlenecks were both released recently.

I am using a 5800X3D and an RX 6950 XT, and even the 5800X3D, which is the best gaming CPU out there, cannot always keep the RX 6950 XT pegged at 99% utilisation at 1080P. However, it offers a significant performance increase at 1080P over the 5950X it replaced. This is not the case at higher resolutions, where you move from being CPU/API/game bound to GPU bound.

Full disclosure: I'm actually running a 4K monitor (1440P/4K is the sweet spot for the 6950 XT), but I like to test games like Warzone at 1080P on the 6950 XT, since Warzone has always been somewhat CPU limited (with low GPU utilisation) when using the 5950X. The 5800X3D gave a nice uplift in performance in this scenario, and the combination is now the fastest hardware available for Warzone.

I have a video of GTA V running on a 6950 XT and a 5800X3D; FPS is locked at around 188 for the most part at 1440P (https://www.youtube.com/watch?v=qqL43E5Srow). It seems to be a game engine limitation that FPS will not go higher than 188. I might have to redownload GTA V and test performance at 1080P to see how it runs on the 5800X3D.

In the meantime, here are a few suggestions that may help in those games:

- Use the highest image quality presets and options to mitigate the CPU limitation as much as possible.

- Use VSR (Virtual Super Resolution) in AMD Software to render the desktop and game at a higher-than-native resolution.

- Use the 22.6.1 driver, as it includes the DX11 performance improvements.

- You could try disabling SMT to see if that adds any extra performance in those few games.

- In AMD Software > Performance > Tuning, set the minimum GPU frequency to 100 MHz below whatever your maximum frequency is set to.
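The first two suggestions work by giving the GPU more work per frame while the CPU cost stays roughly the same, so the GPU fills the time it would otherwise spend waiting. A rough sketch, assuming GPU frame time scales linearly with the number of rendered pixels (only approximately true in real games), that CPU and GPU work fully overlap, and using hypothetical timings; `pixel_scale` and `estimate` are illustrative names, not anything from AMD Software:

```python
from __future__ import annotations

NATIVE = (1920, 1080)  # the 1080p monitor's native resolution


def pixel_scale(render: tuple[int, int], native: tuple[int, int] = NATIVE) -> float:
    """Ratio of rendered pixels to native pixels."""
    return (render[0] * render[1]) / (native[0] * native[1])


def estimate(cpu_ms: float, gpu_ms_native: float, render: tuple[int, int]) -> tuple[float, float]:
    """Return (fps, gpu_usage_percent), assuming GPU time grows linearly
    with pixel count and the slower of CPU/GPU sets the frame time."""
    gpu_ms = gpu_ms_native * pixel_scale(render)
    frame_ms = max(cpu_ms, gpu_ms)
    return 1000.0 / frame_ms, 100.0 * gpu_ms / frame_ms


if __name__ == "__main__":
    cpu_ms, gpu_ms_native = 12.5, 7.0  # hypothetical CPU-bound 1080p case
    for render in [(1920, 1080), (2560, 1440), (3840, 2160)]:
        fps, usage = estimate(cpu_ms, gpu_ms_native, render)
        print(f"{render[0]}x{render[1]}: {fps:.0f} FPS, GPU usage {usage:.0f}%")
```

With these made-up numbers, rendering at 2560x1440 through VSR keeps the same 80 FPS while GPU usage climbs to almost 100%, so the extra image quality is roughly free until the GPU becomes the limit; at 3840x2160 the GPU becomes the bottleneck and FPS drops. None of this raises FPS in a CPU-bound game; it only puts the idle GPU time to use.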

---

Rig: 6800 XT + 5900X on X570 + 32 GB 3600 CL14 + Samsung 980 Pro 2 TB

As you can see in my topic, GPU usage is LOW and I have FPS drops to 60 and below (only at nighttime).

---

At nighttime your card is rendering the game world with less lighting... the game only draws what the light touches...

So you should have higher FPS if there's less light.

Maybe you should consider light culling, or you've set something very, very wrong... I think it's your PC or the game, not your card. You should be able to get thousands of FPS in that game... well, in every game... on an AMD mobile phone from the last few years to a decade...

---

It depends on the game; in GTA Online it's the opposite at nighttime: "2 of my 12 cores are at 100%".

6800 XT + 5900X on X570 + 32 GB 3600 CL14 + Samsung 980 Pro 2 TB

The AMD driver is missing support for driver command lists, which allow work that would otherwise run on a single thread to be distributed across multiple threads.
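Why spreading that work across threads matters in a CPU-bound DX11 game can be pictured with a toy model. Everything in it is an assumption for illustration: a fixed slice of game-logic time stays on one thread, the per-draw-call CPU cost divides evenly across worker threads with zero synchronization overhead, and the timings are invented, not measured on any driver. The function names are hypothetical. (For reference, a Direct3D 11 application can ask whether the driver supports command lists natively by calling `ID3D11Device::CheckFeatureSupport` with `D3D11_FEATURE_THREADING` and reading the `DriverCommandLists` field of `D3D11_FEATURE_DATA_THREADING`; when it is FALSE, the runtime emulates them.)

```python
from __future__ import annotations


def cpu_frame_ms(game_ms: float, draw_calls: int, us_per_draw: float, threads: int) -> float:
    """Toy CPU cost per frame: game logic on one thread plus draw-call
    processing split evenly across `threads` (no sync overhead assumed)."""
    if threads < 1:
        raise ValueError("threads must be at least 1")
    submit_ms = draw_calls * us_per_draw / 1000.0  # microseconds -> milliseconds
    return game_ms + submit_ms / threads


def cpu_fps_cap(frame_ms: float) -> float:
    """Highest frame rate the CPU side allows in this model."""
    return 1000.0 / frame_ms


if __name__ == "__main__":
    # Hypothetical inputs: 4 ms of game logic, 5000 draws at 1.6 us each.
    for threads in (1, 2, 4):
        ms = cpu_frame_ms(game_ms=4.0, draw_calls=5000, us_per_draw=1.6, threads=threads)
        print(f"{threads} thread(s): {ms:.1f} ms CPU per frame -> cap {cpu_fps_cap(ms):.0f} FPS")
```

In this model, going from one to four threads takes the CPU-side cap from about 83 to about 167 FPS, but the fixed single-threaded part means it can never pass 250 FPS (1000 / 4 ms). That is essentially Amdahl's law, and it is one reason a faster single core (or more cache, as with the 5800X3D) still helps even when the submission work is parallelized.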

---

Very weird. I installed the 6800 XT in my old 3900X + B450 system and the problem in GTA is solved. LOL

---

Maybe your CPU isn't enough for your mighty RX 6950 XT.

---

Then why do I have no CPU problems when I use my old GTX 1080 Ti in DirectX 11 games, but as soon as I use an RX 6950 XT I have problems in older games? Maybe the issue is AMD's driver optimization for DirectX 11 games?

---

Your CPU is totally fine. AMD is great for DX12, but Nvidia is better for older DX9, DX10 and DX11 games.

---

Hi! Just out of interest, try reinstalling Windows and the drivers for everything you need: motherboard, sound, graphics card, chipset, everything as it should be (for DirectX, don't use the online installer; download the full package from Microsoft, plus all versions of Microsoft Visual C++ or the full package), and disable the TPM module in the motherboard BIOS. Then check whether anything has changed.

I can say with confidence that my previous GTX 1080 Ti worked well at 1080p but choked at 2K, while the RX 6900 XT behaves perfectly at 2K. Well, far from perfect, but good, and in Vulkan and DirectX 12 games it just flies! Some DirectX 11 games (Fallout 4 with 4K textures and all that, Far Cry 5, New Dawn) are also not bad at around 100 FPS at 2K. Not ideal, of course, but the Ryzen 5800X3D helps here; with an i9 9900K I couldn't fully load the graphics card, which still manages to sit idle in DirectX 11 even at 2K, in the same Origins and Odyssey (though it gives around 100 FPS, depending on the workload of the scene). I won't even talk about a game like Skyrim SE; it's generally a mess there ((( and can only be played on Nvidia... for now.

Far Cry 6 flies, which is not surprising: the developers finally figured out that it was high time to move the game to DirectX 12. I would say this is more to the credit of the DirectX 12 API itself than of optimization, since as far as I know the game still uses only some of the CPU cores (I'm talking about Far Cry 6). In short, not much has changed: they only ported the game to a modern API, and the engine was single-threaded and remained so, but oddly enough this is enough to load the graphics card to the eyeballs)). I currently have Odyssey, Immortals Fenyx Rising, Far Cry New Dawn and Far Cry 6, which all have a benchmark. Send me your graphics settings, I'll set mine to match and check how these games run at 1080p; they're ones I don't really play.)

---

If you believe the CPU is the problem, then why do I get more stable and almost double the performance of the RX 6950 XT with my old GTX 1080 Ti, especially in Halo MCC? Nvidia cards perform way, way better in DirectX 11 and older games. There needs to be a fix in the DirectX 11 drivers, at least for the high-end RX 6950 XT and 6900 XT.

---

The issue is that we're forgetting the part where my GTX 1080 Ti outperforms my RX 6950 XT at 1080p. These two cards, used with the same CPU, do not give the same results. My GTX 1080 Ti holds a stable 300+ FPS in Halo MCC, while my RX 6950 XT gets 180 FPS and drops to 80 FPS many times, which is very bad. When you buy the best of the best from a brand, you expect it to be better than your seven-year-old card.

The CPU is not the problem. The problem is AMD's performance in DirectX 11 and older games. I hope they improve their drivers in the near future. Did you even read that my RX 6950 XT gets 260 FPS in Halo Infinite but much lower in Halo MCC, which is a very old game?

---

The problem is that the games are sabotaged and running 1950's code in them, and are 16-bit or 32-bit or barely 64-bit, while my AMD anything GPU is 2096-bit or higher in many newer models. So you see... your single-thread, single-core games are going to struggle. My RX 5700 XT says it's 40 compute units, which you could think of as CPU cores. When you run DirectX 12, if you've an 8-core CPU or whatever... it will be millions of times faster mathematically, especially so with Vulkan and modern code: thousands of FPS vs a few hundred... but often it's limited by the app and game developers being fake hardware enthusiasts for Nvidia and Intel junk. So the latest Intel graphics, or Arc or whatever they call it, is 1024-bit... the stunning sci-fi 70's, 80's and 90's Voodoo 3dfx graphics tech from AMD that Nvidia has somehow stolen away and shoved up their rears for decades, somehow forcing it to be disabled in all code, known as ray tracing and realtime rendering, when every RAY DEON card has been a ray tracer and renderer since forever, and RTX is just a chip that lets it do that 10% faster, when Intel and Nvidia could finally manage to fake it by using decades-old (10, 20 or 50 year old) AMD technology that becomes cheaper or is licensed for cheaper goods like mobile phones, so they can then buy it up or get it made by Samsung for peanuts, or it's just so old the copyrights expired so it goes to universities and things for educational purposes, which the university then owns and can sell to Nvidia and Intel cheaply whenever a student submits an assignment... it's how Intel and Nvidia and everybody else got hold of AMD's 70's overlay technology, and how uni students managed to make reshade.me and ReShade filters with open-sourced AMD code... and also why FidelityFX = open source = Nvidia finefx. So AMD just makes all their code run on Nvidia and Intel because they're all just borrowed AMD tech from years ago.
Though Nvidia and Intel are like the dumpster-dived doorbells that made up 80's Nintendo consoles and old TVs, and how the Commodore 64 was just a reboxed Atari st2000 and stuff... well, their cheapest, fakest nonsense ever, pretending to be computers with cheap Nokia mobile phone parts that are identical to an Intel i9 for about 20 years... has now been replaced and upgraded with more modern multicore mobile phone parts... and doesn't use AMD's military supercomputer hardware, like, at all...

So to be honest, the more octillions of render resolution and the more ray traced and stuff you set your game, the better it looks and the faster it goes and the less of the GPU it will begin to use, because once the bit depth exceeds all of Nvidia and Intel's fake software and spyware and sabotage... only the core AMD display driver will be left, so some fake screen effects and enhancement sparkles will switch off, but the game will become a hyper-realistic reality simulation... it just might look really boring... probably the sabotage again. But well, the more correctly and realistically you set your AMD graphics card on your mobile phone or your desktop PC, the less GPU/CPU it will use... games like Mortal Kombat 11 Ultimate Aftermath in all ultra with the quality upped thousands of times should use no more than about 8% CPU on average and about 5-20% GPU in maybe 6 million or billion resolution rendering? The less, umm, shackled your hardware is and the more it can just copy the files into the GPU and use its hardware... the less it's going to use resource-wise. You need to enable the hardware!

But games like Raid: Shadow Legends on PC often say 230 FPS, ray tracing, 1080p, whatever, in maybe just 64k or whatever... and because it's not well optimized and doesn't perform the best, it might say about 45% GPU usage... but once in a while, maybe depending on driver settings, it will start running faster and say 400 FPS and 60-80% GPU usage.

Then it slows down again... it will often just randomly occur if you leave the FPS shown up in the corner. Again, it's about how much of the hardware you toggle on and how low your latency is and... mostly whether or not the game is coded to use a single year-2000s line of code like add.mGPU (multicore/card graphics) or whatever DirectX 12 OS flag the developer types in to toggle it on, or you make a config.ini text file and enable support and features and display driver functionality that way... such as ultrarealityrendering dualaugmentedenhancedhyperultramaxtracedraylightpathways, or the same but for reflections, or the same but for diffused lighting... you get the point: you must type stuff in to use your supercomputer, or it runs dumpster-parts code from the 50's, the same as the dumpster parts... Enabling Infinity Cache and then using values to the infinite and, say, setting them to be simultaneously instantaneous is super awesome... such as the new wave matrix multiply add? Something in the latest 3D V-Cache CPUs from AMD, announced, was it called WMMA? If you turn that on, everything loses like half your FPS... but if you infinitely stack it, whatever it does (maybe I don't have the hardware for it, maybe I do with my 5700G Ryzen and 5700 XT Radeon)... well, you can just type in instantaneously and it will do its thing, but with maybe 1 FPS loss or 0? Games same as before... I don't know if it works though, as I'm not sure what it's supposed to do, and I believe zero games are coded for it...

You just have to use more of the quantum supercomputer technology type stuff like SIMD... you can apply an operation to a single pixel on screen and have it do it instantly to the entire screen without taxing performance; it's a hardware function... single instruction, multiple data... when you're direct transport or direct transfer storage or to display output... you wanna hope it's getting rid of some of those bottlenecks, and you may have to use memory culling and other stuff... I mean, AMD runs the entire Windows registry, every letter, every second, and it fits every video game's entire world map loaded in at once; you can see end to end if there's a huge mountain on the other side of the world map... unless the atmosphere is too thick with smoke particles and fog and mist and breezes and air... it's neat how your game characters inhale through their little nostrils, hahaha... and their skin can sweat... like, ever since the PS3 it's had true atmospheric rendering... and true hair and tobacco-smoke-level quality rendering... you can get titles like Tekken 6 from PS3 and run them on PC with the realtime sweat and stuff... it's cool. Nice skin cell graphics... you drop their character models into 3D software and instantly it's super hot adult content if you, uhh, search the web for, say, PS3's Final Fantasy Lightning Returns or 13, Serah Farron or Lightning, or the Dead or Alive 6 game that was banned in most countries worldwide for being absurdly realistic graphics that terrified people, except in Argentina where it was normal or whatever, and search like Honoka or Marie Rose or Nyotengu, whatever... and type in the words to enable AMD hardware features... well, then your game graphics from PS3 become absurdly hot... just the octillions of render resolution make it look fantastic.

So when you see an old PS2 or PS3 game and they've all got the same green phones in them as Ghostwire: Tokyo... it looks identical because it is the same game graphics and phone, I guess. Find the phone, figure out how old AMD is and how awesome they are.

Try my config.ini text file and see if it helps... you may have to heavily edit and customize it. The folder one directory up on MediaFire might have some of my screenshots of BIOS, Windows and AMD Adrenalin driver settings to teach you how to configure your PC better.

https://www.mediafire.com/folder/prpl1rbp1o8h1/COMPUTERSYSTEMGLOBALDIRECTKERNELMODE

Ghostwire: Tokyo looks a bit like 60's Japan or something was completely 3D mapped and playable in its entirety, wouldn't you agree? I mean, what are the chances AMD graphics looked like this in the 60's, back when ray tracing and rendering were used for photographic imaging for commercials and things rather than gaming? As it was expensive, they didn't make cheap ray tracing gaming cards till maybe the 90's? It's a shame Nvidia and Intel still "CAN NOT ANYTHING".

---

I only have this issue with GTA 5

Rig: 5900X + 6800 XT