Over the last few years, AI has moved from the realm of science fiction to being deployed in a range of disparate systems and software applications. In many cases, these applications live in the cloud or run best on large-scale computing systems. AMD's goal with Ryzen™ AI and the AMD XDNA™ architecture is to expand access to AI and AI software development by bringing advanced AI processing to local PCs.

Shifting AI workloads out of the cloud and onto local hardware offers improved latency, helps keep sensitive data out of the cloud, and can speed processing compared to waiting in a queue to use a cloud-based service. The Ryzen AI engine built into select Ryzen 7000 Series mobile processors provides a dedicated, on-die accelerator to run these workloads, but we haven't talked as much about the software side of the equation.

The Ryzen AI Software Platform, launching widely later this year, will provide developers with the tools they need to add AI to existing applications, as well as to create all-new programs that take advantage of this nascent field in new and exciting ways.

We began the public phase of our software rollout in May, with demos and code samples that developers can experiment with. This summer, we are expanding on that engagement with interim releases that add support for new operators running on the IPU and deliver quantization support for ONNX, PyTorch, and TensorFlow models. To get started, please visit the Ryzen AI software documentation page.

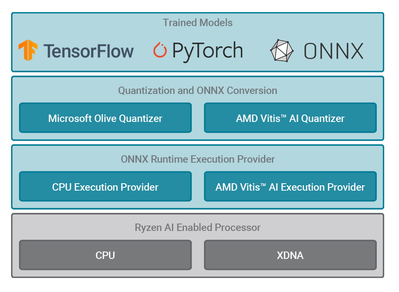

These early previews and limited developer partnerships will expand into broader support for AI developers and related ecosystems when the full Ryzen AI Software Platform debuts later this year. Future releases will support more operators running on AMD XDNA. ONNX Runtime (ONNXRT), with upstreamed support for the Vitis™ AI Execution Provider (EP), will automatically decide whether to schedule workloads on the CPU or the AMD XDNA AI Engine depending on the characteristics of the workload.
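To make the CPU-or-accelerator choice concrete, here is a minimal sketch of how an application might request the Vitis AI Execution Provider through ONNX Runtime's Python API while keeping the CPU as a fallback. It assumes an ONNX Runtime build that registers the provider under the name `VitisAIExecutionProvider`; the helper names `choose_providers` and `create_session` and the model path are hypothetical, and the actual partitioning of the model between the AI Engine and CPU happens inside the provider, not in this code.

```python
# Sketch only: provider names are assumptions about the installed
# ONNX Runtime build; the helpers below are illustrative, not an AMD API.
PREFERRED_PROVIDERS = ["VitisAIExecutionProvider", "CPUExecutionProvider"]


def choose_providers(available):
    """Return the preferred providers that this runtime actually offers,
    in priority order (accelerator first, CPU as fallback)."""
    return [name for name in PREFERRED_PROVIDERS if name in available]


def create_session(model_path):
    """Create an inference session for a (hypothetical) quantized model."""
    # Imported here so choose_providers() can be used without onnxruntime.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

On a machine without the accelerator provider, `choose_providers` would simply return the CPU provider, so the same application code could run in both environments.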

Developers can take trained models in PyTorch, TensorFlow, and ONNX formats, quantize them to INT8 with either the AMD Vitis AI Quantizer or the Microsoft Olive Quantizer, and deploy them using ONNX Runtime with the Vitis AI Execution Provider. The Execution Provider will partition and compile the model to run on either the AMD XDNA AI Engine or the CPU.
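For readers new to INT8 quantization, the arithmetic behind it can be illustrated in a few lines. The sketch below shows generic symmetric per-tensor quantization in plain Python; it is not the algorithm used by the Vitis AI Quantizer or Olive, which this post does not describe, and the function names are hypothetical.

```python
# Illustrative only: generic symmetric INT8 quantization, not the
# method implemented by any specific AMD or Microsoft tool.
def quantize_int8(values):
    """Map floats to integers in [-128, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-128, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 representation."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.3, 1.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
```

Each restored value differs from the original by at most about half of `scale`, which is the precision traded away in exchange for smaller, faster integer arithmetic.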

Later this year, AMD will release additional toolchains, libraries, and guides for easier AI development. These are all part of our strategy for simplifying AI at every level, from training models to locally deploying them on Ryzen AI-powered systems. We also intend to add support for Generative AI models.

AMD's goal for the Ryzen AI Software Platform is to shrink the gap between hardware debut and end-user software availability to the greatest extent possible, allowing programmers and end-users to see the benefits of AI processing even at this relatively nascent stage of development. The interim release this summer and the expanded functionality AMD will provide later this year will speed the development of an AI ecosystem and bring the benefits of this new type of processing to developers.