How to get an AI coding assistant on your AMD Ryzen™ AI PC or Radeon Graphics Card

Did you know that GPT-based large language models (LLMs) running locally on your AMD Ryzen™ AI PC or Radeon™ 7000 Series graphics card can help you code with confidence? They do not require an internet connection, and your conversations stay private on your local machine. You can use them to brainstorm new code ideas or ask them to review existing code. To configure a local instance of an AI chatbot to work with code, follow the instructions below:

1. Download and set up LM Studio using the instructions on this page.

2. In the search tab, copy and paste one of the following search terms, depending on the model you want to run:

a. If you would like to run Mistral 7B, search for: “TheBloke/Mistral-7B-Instruct-v0.2-GGUF”. We use Mistral in this example.

b. If you would like to run Code Llama 7B, search for: “TheBloke/CodeLlama-7B-Instruct-GGUF”.

c. You can also experiment with other models here.

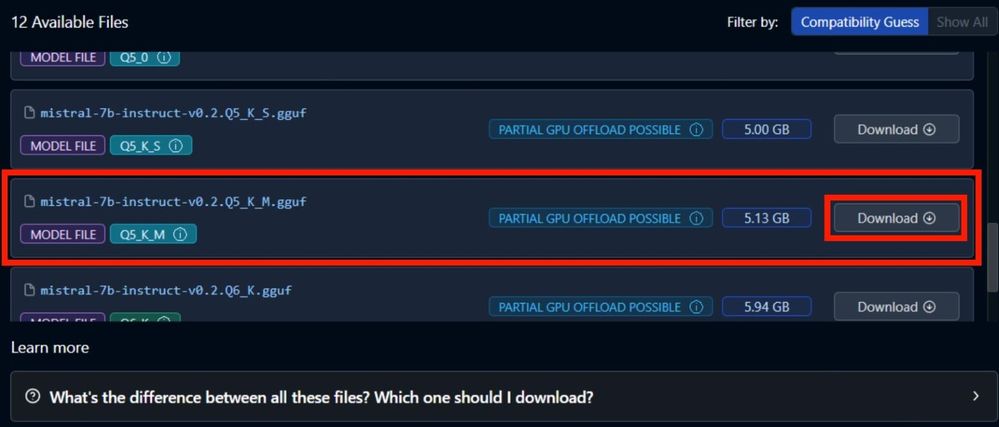

3. In the right-hand panel, scroll down until you see the Q5_K_M model file, then click Download.

a. We recommend Q5_K_M for most models on Ryzen AI.

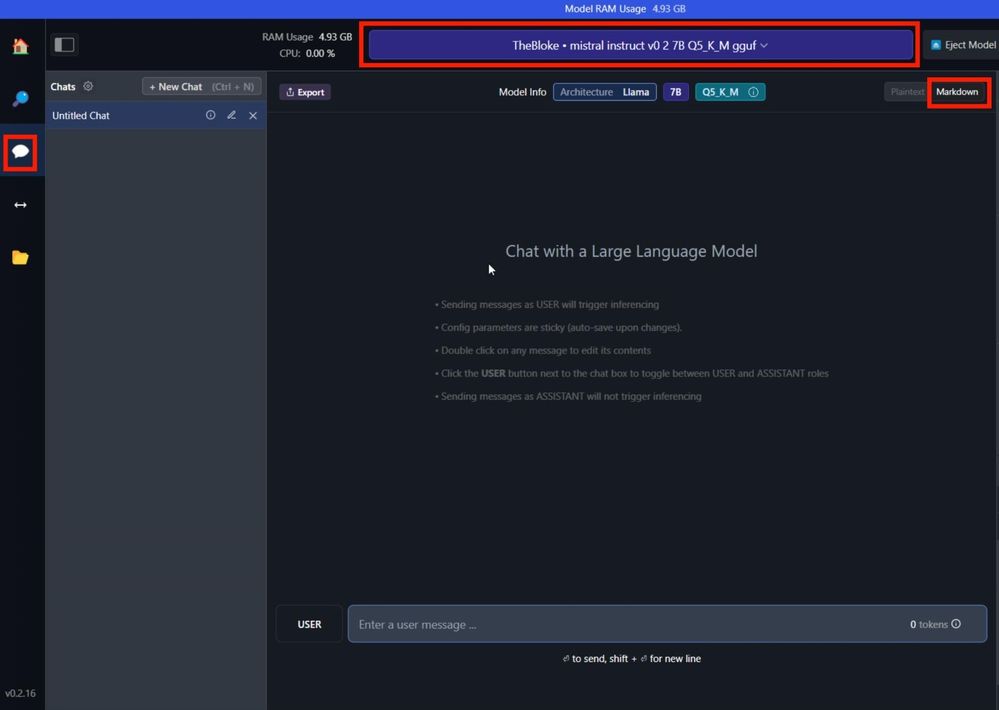
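To get a feel for how large the Q5 K M file (written Q5_K_M in GGUF file names) will be before downloading it, the size can be roughly estimated from the parameter count and the average number of bits stored per weight. The sketch below is only a back-of-the-envelope calculation: the function name is hypothetical, the 7 billion parameter count is a round figure, and the value of about 5.7 bits per weight for Q5_K_M is an approximate assumption that is not taken from this article. Real files also carry metadata and may differ somewhat.

```python
def estimate_model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of model weights in gigabytes (1 GB = 10**9 bytes).

    Hypothetical helper for illustration; it ignores file metadata and
    any tensors stored at a different precision.
    """
    if n_params <= 0 or bits_per_weight <= 0:
        raise ValueError("n_params and bits_per_weight must be positive")
    return n_params * bits_per_weight / 8 / 1e9


# Assumed values (not from the article):
ASSUMED_PARAMS = 7e9          # a "7B" model, rounded
ASSUMED_Q5_K_M_BITS = 5.7     # approximate average bits per weight
FP16_BITS = 16                # unquantized half-precision weights

if __name__ == "__main__":
    fp16 = estimate_model_size_gb(ASSUMED_PARAMS, FP16_BITS)
    q5 = estimate_model_size_gb(ASSUMED_PARAMS, ASSUMED_Q5_K_M_BITS)
    print(f"16-bit weights: ~{fp16:.1f} GB")
    print(f"Q5_K_M weights: ~{q5:.1f} GB")
```

Under these assumptions, the quantized file comes out at roughly a third of the 16-bit size, which is why a quantized download fits more comfortably in the memory of a laptop or a consumer graphics card.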

4. Select the model from the drop-down menu in the top center and wait for it to finish loading.

5. Go to the chat tab and click the “Markdown” option in the top right.

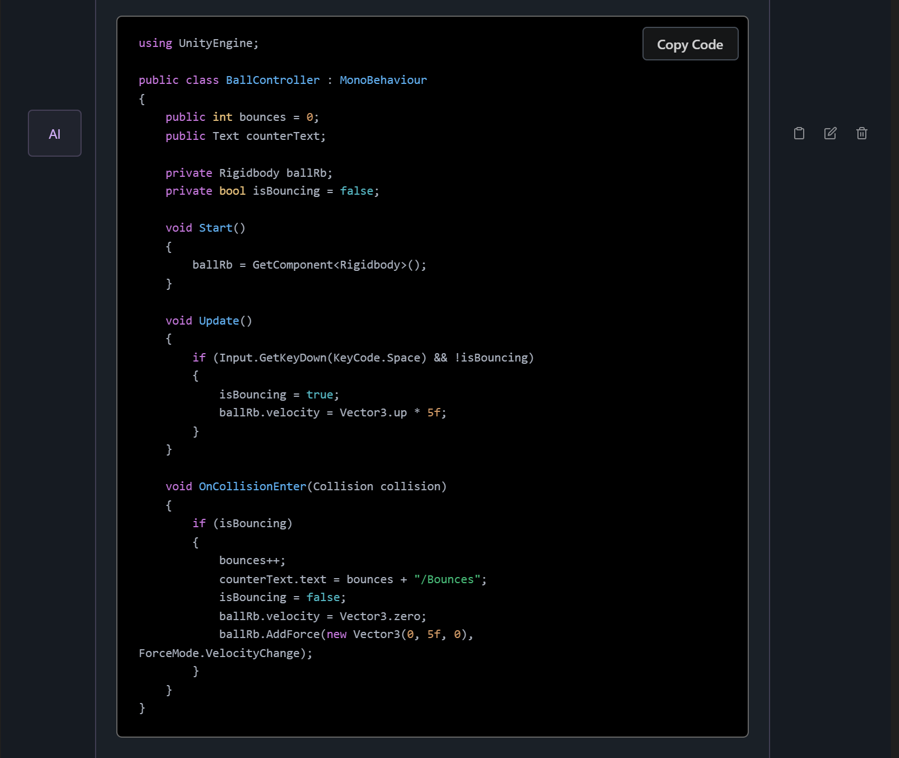

6. You can now ask your coding assistant anything, and its responses will be displayed with properly formatted syntax. Example: