Did you know that you can run your very own instance of a GPT-based, LLM-powered AI chatbot on your Ryzen™ AI PC or Radeon™ 7000 series graphics card? AI assistants are quickly becoming essential tools for increasing productivity and efficiency, or even for brainstorming ideas. A local AI chatbot does not need an internet connection, and your conversations never leave your machine.

To spool up your very own AI chatbot, follow the instructions given below:

1. Download the correct version of LM Studio:

2. Run the downloaded file.

3. In the search tab, copy and paste one of the following search terms, depending on which model you want to run:

a. If you would like to run Mistral 7B, search for: “TheBloke/OpenHermes-2.5-Mistral-7B-GGUF” and select it from the results on the left. It will typically be the first result. We are going with Mistral in this example.

b. If you would like to run Llama 2 7B, search for: “TheBloke/Llama-2-7B-Chat-GGUF” and select it from the results on the left. It will typically be the first result.

c. You can also experiment with other models here.

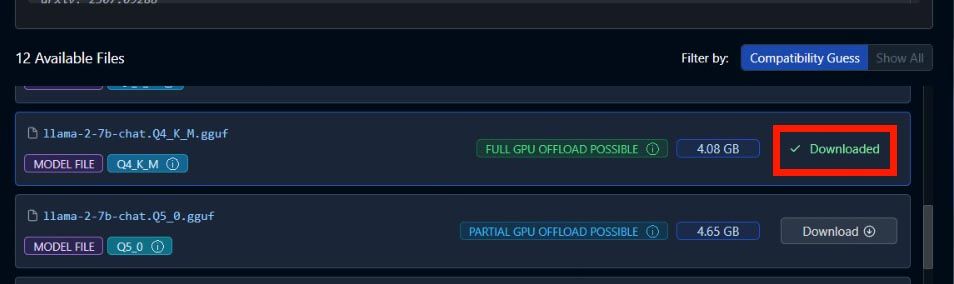

4. In the right-hand panel, scroll down until you see the Q4_K_M model file. Click Download.

a. We recommend Q4_K_M for most models on Ryzen AI. Wait for the download to finish.

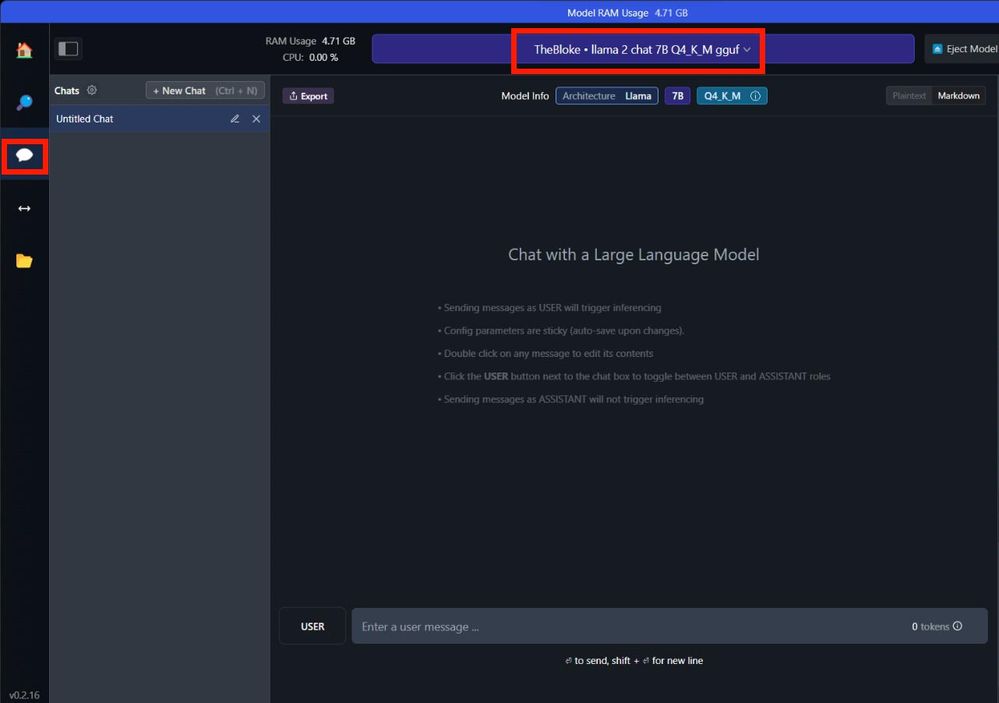

5. Go to the chat tab. Select the model from the drop-down menu at the top center and wait for it to finish loading.

6. If you have an AMD Ryzen AI PC, you can start chatting!

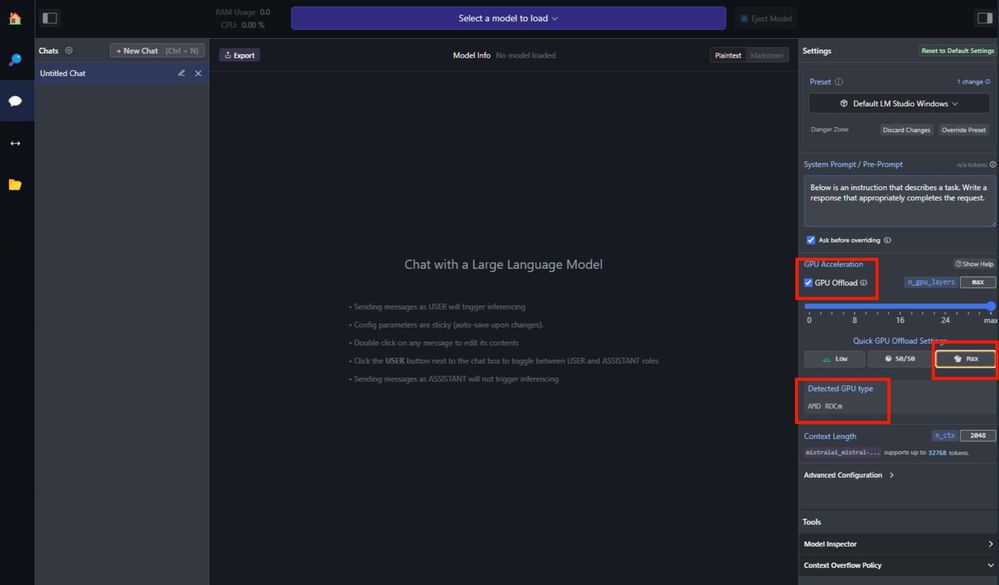

a. If you have an AMD Radeon™ graphics card, please:

i. Check “GPU Offload” on the right-hand side panel.

ii. Move the slider all the way to “Max”.

iii. Make sure AMD ROCm™ is shown as the detected GPU type.

iv. Start chatting!
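Two of the steps above come down to memory: the Q4_K_M recommendation keeps a 7B model small, and the GPU Offload slider (in llama.cpp-based apps such as LM Studio) controls how many of the model's layers are placed in graphics memory. The Python sketch below gives a rough, back-of-the-envelope estimate of both. It is not part of LM Studio, and the helper names are hypothetical. The 4.85 bits-per-weight figure for Q4_K_M, the even split of weights across layers, and the 1 GB reserved for context and buffers are all assumptions.

```python
"""Rough sizing sketch for a quantized 7B model (all constants are assumptions)."""
import math

# ASSUMPTION: approximate average bits per weight for a Q4_K_M GGUF file.
# The real figure varies by model because some tensors keep higher precision.
Q4_K_M_BITS_PER_WEIGHT = 4.85


def model_size_gb(num_params: float, bits_per_weight: float = Q4_K_M_BITS_PER_WEIGHT) -> float:
    """Approximate size of the quantized weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def layers_that_fit(vram_gb: float, model_gb: float, num_layers: int,
                    reserve_gb: float = 1.0) -> int:
    """Hypothetical estimate of how many layers could be offloaded to the GPU.

    ASSUMPTION: weights are split evenly across layers, and `reserve_gb` of
    VRAM is held back for the context (KV cache) and other buffers.
    """
    per_layer_gb = model_gb / num_layers
    budget_gb = max(vram_gb - reserve_gb, 0.0)
    return min(num_layers, math.floor(budget_gb / per_layer_gb))


if __name__ == "__main__":
    # Mistral 7B has roughly 7.24 billion parameters and 32 transformer layers.
    size = model_size_gb(7.24e9)
    print(f"Estimated Q4_K_M size: {size:.2f} GB")
    for vram in (4, 8, 16):
        print(f"{vram} GB VRAM -> about {layers_that_fit(vram, size, 32)} of 32 layers")
```

When the estimate reaches the full layer count, moving the slider to "Max" should keep the whole model on the GPU; when it does not, a partial offload splits the work between the GPU and CPU. Treat the numbers as a starting point and check actual memory use in LM Studio.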