GPT-based large language models (LLMs) can be helpful AI assistants that boost your productivity and streamline your workflow. Running an AI chatbot on your AMD Ryzen™ AI-powered AI PC or Radeon™ 7000 Series graphics card is easier than ever, but did you know that you can also customize it with your own content?

Retrieval-augmented generation (RAG) is a technique that lets you give your personal LLM instance extra context to draw on when it answers. This context can be local documents on your PC or a text-based URL. And the best part? Once everything is set up, it runs 100% locally, with no need for an internet connection or a subscription fee.
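To make the idea concrete, here is a minimal Python sketch of the retrieve-then-augment loop behind RAG. It is only an illustration, not how the tools in this guide work internally: they use a neural embedding model and a vector database, whereas this sketch swaps in simple word-count vectors and cosine similarity so it runs with the standard library alone. The function names, the 40-word chunk size, and the prompt wording are all illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(document, size=40):
    """Split a document into chunks of roughly `size` words (size is an assumption)."""
    words = document.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return the k chunks most similar to the question.

    Assumption: word counts stand in for the neural embeddings a real
    RAG setup would compute and store in a vector database.
    """
    chunks = [c for doc in documents for c in chunk(doc)]
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Augment the question with the retrieved context before it reaches the LLM."""
    context = "\n".join("- " + c for c in retrieve(question, documents, k))
    return f"Use this context to answer.\nContext:\n{context}\n\nQuestion: {question}"
```

For example, given one document about a project deadline and one about a cafeteria menu, `build_prompt("When is the Zephyr deadline?", docs, k=1)` places only the deadline document in the context, so the model answers from your data rather than from its training set alone.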

You can use RAG with a single document or with many, giving you an LLM that is truly customized to your own data.

Step-by-step instructions for enabling RAG on your PC are given below. If you followed our previous guide and already have LM Studio installed, you can skip step 1:

1. Download and set up LM Studio using the instructions on this page.

2. Select the model you want to use with RAG from the drop-down menu at the top center of the window, and wait for it to finish loading.

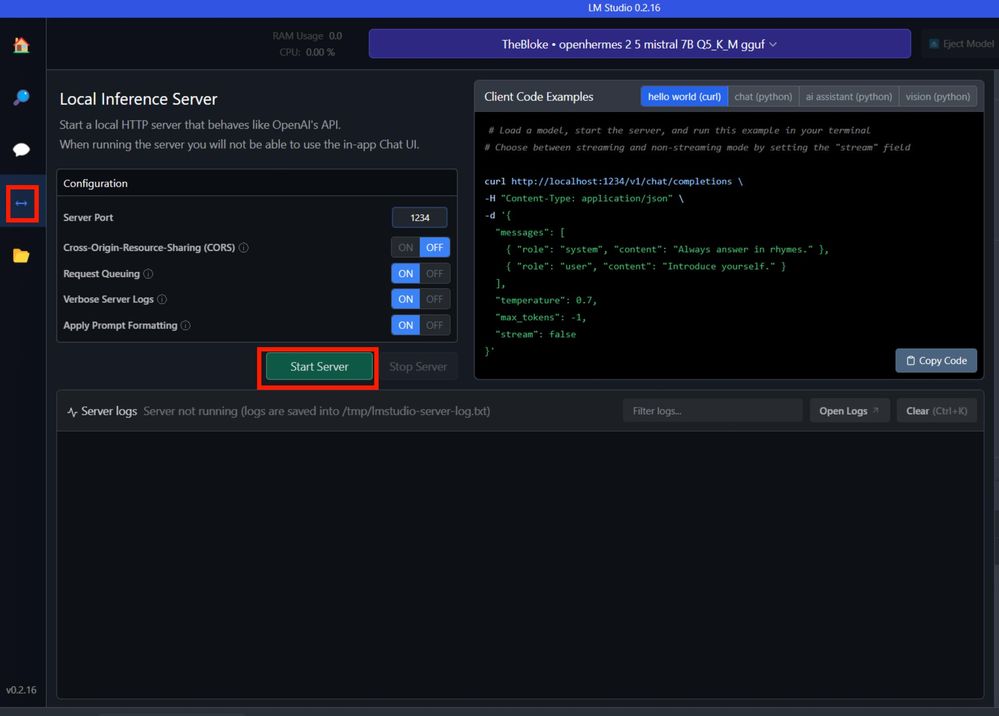

3. Go to the Server tab and click Start Server.

4. Note the base URL; it is typically http://localhost:1234/v1.

5. Download AnythingLLM for Windows.

6. Run the downloaded installer.

7. In AnythingLLM, click “Get Started”.

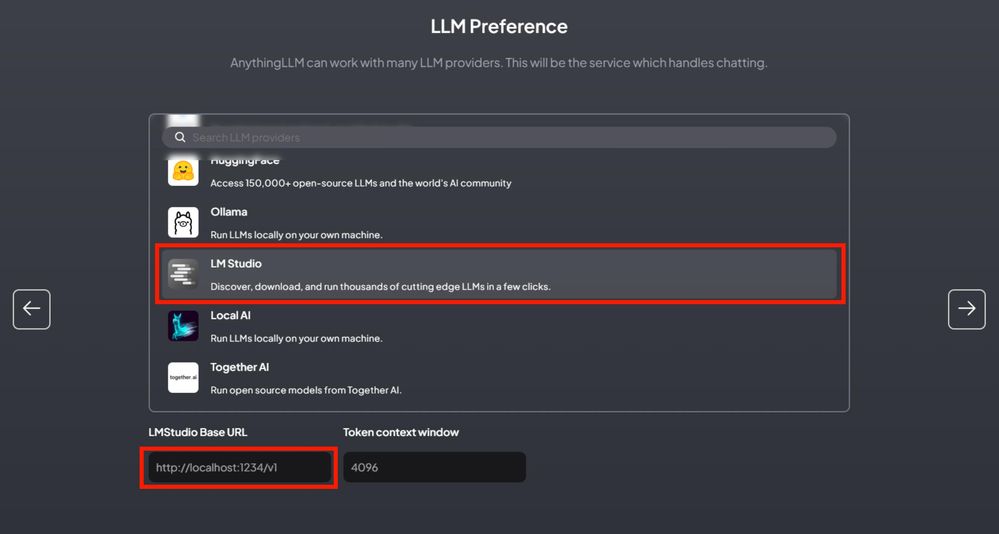

8. Select LM Studio as the LLM provider and enter the base URL you noted in step 4 (typically http://localhost:1234/v1).

9. For the embedder, select AnythingLLM Embedder for a 100% local experience.

10. For the vector database, select LanceDB, which also keeps everything local.

11. Review the privacy and summary screen, then click the next arrow.

12. AnythingLLM will then show an optional survey. You can either fill it in or click “Skip Survey”.

13. Give your workspace a name and click the next arrow.

14. Click your workspace name. We named ours “Test”.

15. Click the gear icon to open the workspace settings.

16. Click Upload Document to add your files (you can upload as many as you like; we used just one), or enter a URL to retrieve text from a web page.

17. Once your documents are uploaded, tick their checkboxes in the left-hand pane of this window and click Move to Workspace.

18. Click Save and Embed. AnythingLLM converts your documents into embeddings and stores them in the LanceDB vector database.

19. Close this window.

20. You can now ask the LLM a question about a specific term mentioned in your documents, and it will be able to answer. With RAG working, AnythingLLM also shows a citation naming the local document it used to answer your question.
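LM Studio's local server exposes an OpenAI-compatible API at the base URL from step 4, so you can also query the loaded model from your own scripts. The minimal Python sketch below, using only the standard library, sends a chat completion request with some context pasted into the system prompt. The `/chat/completions` path and the `choices[0].message.content` response shape follow the OpenAI convention that LM Studio mirrors; the model name `local-model`, the system prompt, and the temperature are placeholder assumptions, and the context chunks are supplied by hand rather than retrieved from AnythingLLM's database.

```python
import json
import urllib.request

# Base URL shown on LM Studio's server tab (step 4); adjust if yours differs.
BASE_URL = "http://localhost:1234/v1"
# Placeholder (assumption): if the server rejects it, use the identifier
# LM Studio shows for your loaded model.
MODEL = "local-model"


def build_chat_request(question, context_chunks, base_url=BASE_URL, model=MODEL):
    """Build an OpenAI-style chat completion request that places the
    context in the system prompt (the "augmented" part of RAG)."""
    context = "\n\n".join(context_chunks)
    payload = {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Answer using only the context below.\n\n" + context,
            },
            {"role": "user", "content": question},
        ],
        "temperature": 0.2,  # assumption: a low value for factual answers
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, context_chunks, base_url=BASE_URL, model=MODEL, timeout=120):
    """Send the request to the running local server and return the reply text."""
    request = build_chat_request(question, context_chunks, base_url, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        data = json.loads(response.read().decode("utf-8"))
    # OpenAI-compatible responses put the generated text here.
    return data["choices"][0]["message"]["content"]
```

With the server from step 3 running, calling `ask("When is the project deadline?", ["The project deadline is March 3."])` should return the model's answer as a string. Building the request separately from sending it keeps the payload easy to inspect before anything goes over the network.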