This article was authored by Niles Burbank, director of product marketing at AMD.

-----------------------------------------------------------------------------------------------------------------------

The PyTorch deep-learning framework is a foundational technology for machine learning and artificial intelligence. In the just-released PyTorch Documentary, I was privileged to provide an AMD perspective on the evolution of this enormously successful open-source project. As a complement to the AMD observations in the documentary, I would like to share some of my personal history with machine learning. I hope it offers some insight into the factors behind the unprecedented success of PyTorch.

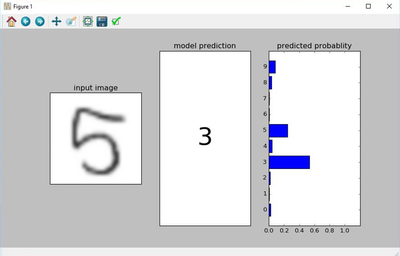

The history of machine learning goes back much further, but my professional awareness of it dates back about a decade. At that point, there was a surge of interest in using GPUs to accelerate DNNs (deep neural networks). To get familiar with how all this worked, I started with the simplest possible tutorial – image classification using the MNIST dataset. This dataset is a collection of images of handwritten digits from 0 to 9, and the classification problem consists of correctly identifying which of the ten possible digits a given image represents. Back around 2015, this was a pretty trivial problem. PyTorch didn’t exist at that point, but I managed to modify the tutorial to display images and the corresponding predictions while a single-layer network – essentially just a matrix multiplication – was being trained on the dataset. It was a revelation that such a simple network could “learn” to identify these images with impressive accuracy.

Figure 1: A snapshot of MNIST predictions during the training process (at this point the network is still struggling to distinguish between 5 and 3)
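
For readers curious what “essentially just a matrix multiplication” looks like in practice, here is a minimal sketch in plain Python. It is not the tutorial I used back then. The three 3x3 patterns stand in for MNIST digits, and the function names, noise level, learning rate, and epoch count are all assumptions chosen for illustration.

```python
import math
import random

# Three made-up 3x3 "images", flattened to 9 pixels each (a stand-in for MNIST).
PATTERNS = [
    [0, 1, 0, 0, 1, 0, 0, 1, 0],  # class 0: vertical bar
    [0, 0, 0, 1, 1, 1, 0, 0, 0],  # class 1: horizontal bar
    [1, 0, 0, 0, 1, 0, 0, 0, 1],  # class 2: diagonal
]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(weights, bias, pixels):
    """The whole network: one matrix-vector product plus a bias, then softmax."""
    scores = [sum(w * x for w, x in zip(row, pixels)) + b
              for row, b in zip(weights, bias)]
    return softmax(scores)

def make_samples(count, rng):
    """Noisy copies of the patterns, paired with their class labels."""
    samples = []
    for _ in range(count):
        label = rng.randrange(len(PATTERNS))
        pixels = [p + rng.uniform(-0.2, 0.2) for p in PATTERNS[label]]
        samples.append((pixels, label))
    return samples

def train(samples, epochs=20, lr=0.1):
    """Plain stochastic gradient descent on the cross-entropy loss."""
    num_classes, num_pixels = len(PATTERNS), len(PATTERNS[0])
    weights = [[0.0] * num_pixels for _ in range(num_classes)]
    bias = [0.0] * num_classes
    for _ in range(epochs):
        for pixels, label in samples:
            probs = predict(weights, bias, pixels)
            for c in range(num_classes):
                # Gradient of the loss with respect to the score of class c.
                error = probs[c] - (1.0 if c == label else 0.0)
                bias[c] -= lr * error
                for i, x in enumerate(pixels):
                    weights[c][i] -= lr * error * x
    return weights, bias

rng = random.Random(0)  # fixed seed so the run is repeatable
weights, bias = train(make_samples(60, rng))
for label, pattern in enumerate(PATTERNS):
    probs = predict(weights, bias, pattern)
    print(label, probs.index(max(probs)))  # true class, predicted class
```

The only “learning” here is nudging the numbers in `weights` and `bias` after each example. A real MNIST version would use 784 input pixels and ten classes, but the structure would be the same.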

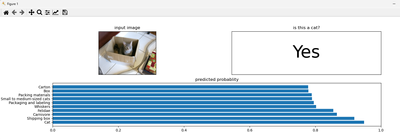

With that inspiration, I was spurred to consider what the simplest approach to solving a more complex image classification problem would be. At that time, the answer seemed to be the API-based ML tools provided by services such as AWS, Azure, and Google Cloud Platform. In early 2018, two of those three platforms were able to correctly identify my cat (looking more svelte in those days than she does now).

Figure 2: API-based classification of a photo of my cat

These APIs are still great tools, but they don’t offer the same freedom to modify and experiment that comes from using an ML framework directly. I spent a bit of time trying to replicate the cat classifier on a local system, but it wasn’t my main job focus and I never got very far. I still periodically did small ML experiments, but found myself struggling with some of the programming structures involved.

When I first encountered PyTorch, shortly after its initial release, it seemed almost magically simple in comparison. Because computations run in “eager mode” by default, with each operation executing as soon as its line of Python is reached, PyTorch behaved very much like any other Python library. For anyone with even a rudimentary competence in Python – like me and millions of other students, scientists, and hobbyists – there is almost no incremental learning curve to start using PyTorch to apply the power of machine learning to all manner of problems.
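
As a small illustration of what eager mode feels like, here is a minimal sketch that assumes PyTorch is installed. The tensor values are made up. The point is only that each line runs immediately, that results can be inspected like any Python value, and that ordinary `if` statements work on computed results.

```python
import torch

# A tiny tensor that tracks gradients (the values are arbitrary).
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# This runs right away; there is no separate graph-building or compile step.
y = (x ** 2).sum()
print(y.item())  # 1 + 4 + 9 = 14.0

# Ordinary Python control flow, driven by a value that was just computed.
if y.item() > 10:
    y = y * 2

# Automatic differentiation follows whatever path the code actually took.
y.backward()
print(x.grad)  # gradient of y with respect to x: 4, 8 and 12
```

Because the `if` branch was taken, `y` ends up as twice the sum of squares, so the gradient is four times each element of `x`.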

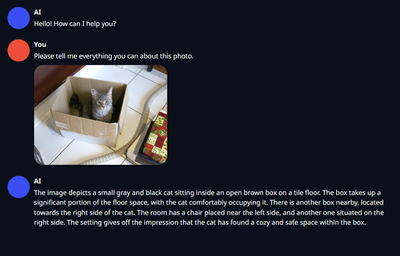

Today, it’s possible to use a multi-billion-parameter multimodal PyTorch model to provide a far more nuanced analysis of my cat image, even on a typical GPU-equipped PC. The 13-billion-parameter Llava v1.5 model running on an AMD Radeon™ PRO W7900 graphics card provides a detailed and generally accurate summary of what’s going on in the photo.

Figure 3: Chat session with Llava v1.5 13B

I share all this to make a more general point about the potential for AI and ML. As pervasive as these technologies are today, there is still huge untapped potential to apply the power of AI to new kinds of problems. The individuals best positioned to do that are experts in those fields, who generally aren’t professional programmers or computer scientists. That audience needs tools that are accessible and intuitive. PyTorch is certainly one of those tools, and the AMD GPUs that support PyTorch make that tool even more powerful.

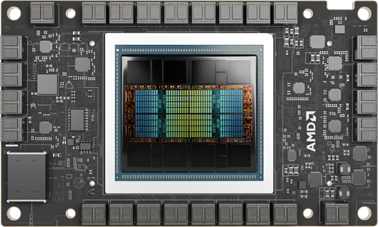

Figure 4: AMD Instinct™ MI300X Accelerator

Figure 5: AMD Radeon™ Pro W7900

Of course, hardware isn’t useful without software, and the AMD ROCm™ software stack serves as the bridge between AMD GPU hardware and higher-level tools like PyTorch. ROCm includes programming models, tools, compilers, libraries, and runtimes for AI and HPC solution development on AMD GPUs. It’s also open-source, like PyTorch, making it a natural fit for AI workflows that aim to be as transparent as possible.

The PyTorch Documentary isn’t intended as a training resource, but there is a wealth of material on using PyTorch from both AMD and the wider community. For anyone who wants to dig in further, here are a few starting points:

More Resources: