Developer Blog: Build a Chatbot with Ryzen™ AI Processors

AMD Ryzen™ AI processors and software bring the power of personal computing closer to you on an AI PC, unlocking a whole new level of efficiencies for work, collaboration, and innovation. Generative AI applications such as AI chatbots usually live in the cloud because of their high processing requirements. In this blog, we explore the building blocks of Ryzen™ AI technology and demonstrate how easy it is to use them to build an AI chatbot that runs with optimal performance, solely on a Ryzen AI laptop.

Full-Stack Ryzen™ AI Software

Ryzen AI comes with a dedicated Neural Processing Unit (NPU) for AI acceleration, integrated on-chip with the CPU cores. The AMD Ryzen AI software development kit (SDK) enables developers to take machine learning models trained in PyTorch or TensorFlow and run them on PCs powered by Ryzen AI. Offloading AI workloads to the NPU frees up CPU and GPU resources and delivers optimal performance at lower power consumption. Learn more about the Ryzen AI product here.

The SDK includes tools and runtime libraries for optimizing and deploying AI inference on an NPU. Installation is simple, and the kit comes with various pre-quantized ready-to-deploy models on the Hugging Face AMD model zoo. Developers can start building their applications in minutes, unleashing the full potential of AI acceleration on Ryzen AI PCs.

Building an AI Chatbot

AI chatbots require a lot of processing power, so much so that they usually live in the cloud. We can run ChatGPT on a PC, but the local application only sends the prompts over the Internet to a server for LLM processing and displays the response once it is received.

In this case, however, a local and efficient AI chatbot does not require cloud support. You can download the open-source pre-trained OPT-1.3B model from Hugging Face and deploy it on a Ryzen AI laptop with a pre-built Gradio Chatbot app in a simple three-step process.

Step-1: Download the pre-trained opt-1.3b model from Hugging Face

Step-2: Quantize the downloaded model from FP32 to INT8

Step-3: Deploy the Chatbot app with the model
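For readers who prefer to script the flow, here is a minimal sketch of how the three steps could be assembled in Python. The `run.py` and `opt_demo_gui.py` flags are the ones used in the commands later in this post; the `build_steps` helper and the `INT8_DIR` constant are hypothetical names introduced only for this illustration, and the sketch prints the commands rather than running them.

```python
# Sketch only: assembles the command lines used later in this post.
# build_steps and INT8_DIR are illustrative names, not part of the repo.

# Folder where the INT8 model is saved after quantization (from the post).
INT8_DIR = r".\opt-1.3b_smoothquant\model_onnx_int8"


def build_steps(model_name, int8_dir):
    """Return (step name, argument list) pairs in the order they are run."""
    run = ["python", "run.py", "--model_name", model_name]
    return [
        ("download", run + ["--download"]),
        ("quantize", run + ["--quantize"]),
        ("evaluate", run + ["--target", "aie", "--local_path", int8_dir]),
        ("deploy", ["python", r"gradio_app\opt_demo_gui.py",
                    "--model_file", int8_dir]),
    ]


for name, cmd in build_steps("opt-1.3b", INT8_DIR):
    # To execute a step instead of printing it, one could call
    # subprocess.run(cmd, check=True) from inside the activated conda env.
    print(f"{name}: {' '.join(cmd)}")
```

Keeping each command as an argument list makes it straightforward to hand to `subprocess.run` later without worrying about quoting the Windows paths.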

Prerequisites

First, you need to make sure that the following prerequisites are met.

- AMD Ryzen AI laptop with Windows® 11 OS

- Anaconda, install if needed from here

- Latest Ryzen AI AIE driver and Software. Follow the simple single-click installation here

Collateral materials for this blog are posted on the AMD GitHub repository.

Next, clone the repo or download and extract Chatbot-with-RyzenAI-1.0.zip into the root directory where you installed the Ryzen AI software. In this case, it is C:\Users\ahoq\RyzenAI\

cd C:\Users\ahoq\RyzenAI\

git clone alimulh/Chatbot-with-RyzenAI-1.0

# Activate the conda environment created when RyzenAI was installed. In my case, it was ryzenai-1.0-20231204-120522

conda activate ryzenai-1.0-20231204-120522

# Install the gradio package using the requirements.txt file. The browser app for the Chatbot is created with Gradio

pip install -r requirements.txt

# Initialize PATHs

setup.bat

Now you are ready to create the Chatbot in 3 steps:

Step-1 Download the pre-trained model from Hugging Face

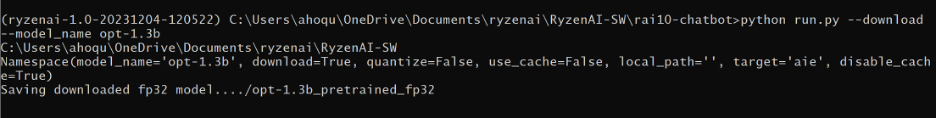

In this step, download the pre-trained OPT-1.3B model from Hugging Face. You can modify the run.py script to download a pre-trained model from your own or your company's repository. OPT-1.3B is a large model, ~4 GB, so the download time depends on your internet speed. In this case, it took ~6 minutes.

cd Chatbot-with-RyzenAI-1.0\

python run.py --model_name opt-1.3b --download

The downloaded model is saved in the subdirectory \opt-1.3b_pretrained_fp32\ as shown below.

Step-2 Quantize the downloaded model from FP32 to INT8

Once the download is complete, quantize the model using the following command:

python run.py --model_name opt-1.3b --quantize

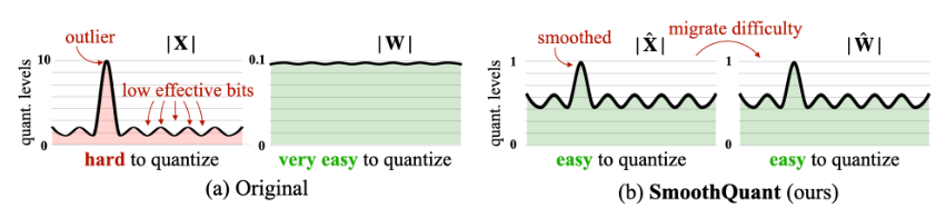

Quantization is a two-step process. First, the FP32 model is “smooth quantized” to reduce accuracy loss during quantization. This step identifies the outliers in the activations and rescales the weights to compensate, so that dropping those outliers during quantization introduces negligible error. SmoothQuant was invented by one of AMD's pioneer researchers, Dr. Song Han, who is a professor in the MIT EECS department. Below is a visual presentation of how the smooth quantization technique works.

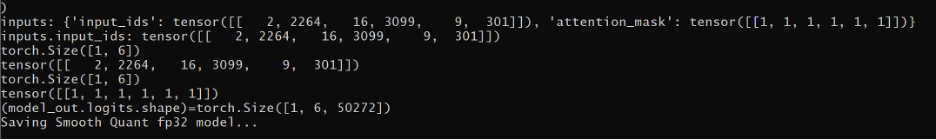
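To make the idea concrete, here is a toy sketch in plain Python. It is not the code the Ryzen AI flow runs. It assumes the per-channel scale from the published SmoothQuant formulation, s_j = max|X_j|^α / max|W_j|^(1-α), with α = 0.5, and a simple symmetric per-tensor INT8 round trip. The matrices and all function names are made up for illustration, and the weights stay in floating point so that only the effect on the activations is visible.

```python
# Toy illustration of SmoothQuant-style rescaling (not the SDK implementation).

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*b)]
            for row in a]


def smooth(x, w, alpha=0.5):
    """Divide activation channel j by s_j and multiply weight row j by s_j."""
    x_s = [list(row) for row in x]
    w_s = [list(row) for row in w]
    for j in range(len(w)):
        act_max = max(abs(row[j]) for row in x)
        w_max = max(abs(v) for v in w[j])
        # Small floor avoids division by zero for all-zero channels.
        s = max(act_max, 1e-8) ** alpha / max(w_max, 1e-8) ** (1 - alpha)
        for row in x_s:
            row[j] /= s
        w_s[j] = [v * s for v in w[j]]
    return x_s, w_s


def fake_quant_int8(m):
    """Symmetric per-tensor INT8 round trip: quantize, then dequantize."""
    scale = max(abs(v) for row in m for v in row) / 127
    return [[max(-127, min(127, round(v / scale))) * scale for v in row]
            for row in m]


def max_abs_diff(a, b):
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


# Activations (tokens x channels); channel 1 carries large outliers.
x = [[0.5, 40.0, -0.2],
     [-0.3, -60.0, 0.1]]
# Weights (channels x outputs).
w = [[0.2, -0.1],
     [0.05, 0.02],
     [-0.3, 0.4]]

reference = matmul(x, w)
x_s, w_s = smooth(x, w)

err_plain = max_abs_diff(matmul(fake_quant_int8(x), w), reference)
err_smooth = max_abs_diff(matmul(fake_quant_int8(x_s), w_s), reference)
print(f"max output error without smoothing: {err_plain:.4f}")
print(f"max output error with smoothing:    {err_smooth:.4f}")
```

The rescaling leaves the FP32 product unchanged, because dividing a channel of X by s_j and multiplying the matching row of W by s_j cancel out. What changes is the dynamic range of the activations: the outlier channel no longer dictates the INT8 step size, so the small values in the other channels survive rounding.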

You can learn more about the smooth quantization (SmoothQuant) technique here. After the smooth quantization process, the conditioned model and its config.json files are saved in the 'model_onnx' folder inside the 'opt-1.3b_smoothquant' folder. Here is a screen capture of the smooth quantization log:

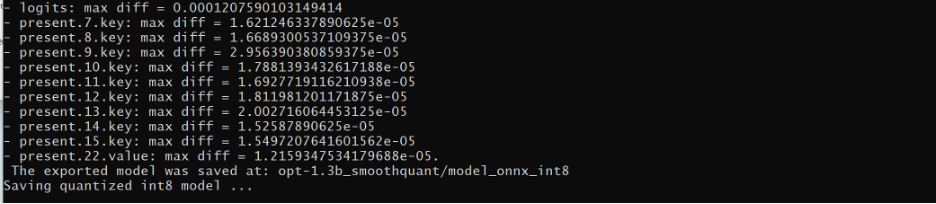

Smooth quantization takes ~30 seconds to complete. Once it is done, the Optimum quantizer is used to quantize the model to INT8. The INT8 quantized model is then saved in the 'model_onnx_int8' folder inside the 'opt-1.3b_smoothquant' folder. Quantization is an offline process. It takes about 2-3 minutes to complete and only needs to be done once. Here is a screen capture of the INT8 quantization log:

Step-3 Evaluate the model and deploy it with the Chatbot App

Next, evaluate the quantized model and run it targeting the NPU with the following command. Notice that the model path is set to the location where we saved the INT8 quantized model in the last step.

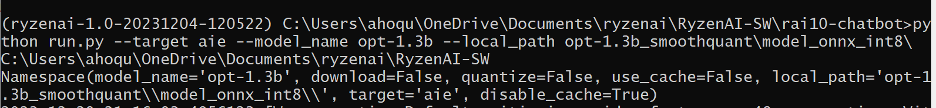

python run.py --model_name opt-1.3b --target aie --local_path .\opt-1.3b_smoothquant\model_onnx_int8\

During the first run, the model is automatically compiled by an inline compiler. Compilation is also a two-step process. First, the compiler identifies the layers that can be executed on the NPU and the ones that need to be executed on the CPU, and groups them into two sets of subgraphs, one for the NPU and the other for the CPU. Second, it creates instruction sets for each of the subgraphs, targeting the respective execution unit. These instructions are executed by two ONNX Runtime Execution Providers (EPs), one for the CPU and one for the NPU. After the first compilation, the compiled model is saved in the cache, so subsequent deployments skip compilation. Here is a screen capture where the model information was printed during the compilation flow.

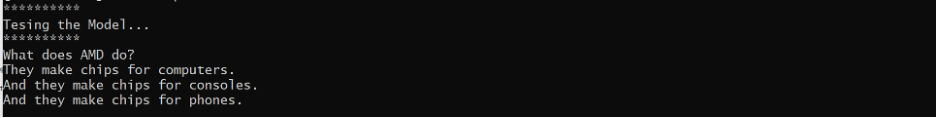
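The partitioning step can be pictured with a small sketch. This is not the Ryzen AI compiler: it assumes a model that is a simple linear chain of operators (a real ONNX graph is a DAG), and the `NPU_SUPPORTED` set and the operator list are hypothetical values chosen only to show how consecutive layers end up grouped into NPU and CPU subgraphs.

```python
from itertools import groupby

# Hypothetical set of operator types the NPU can execute. In the real flow
# this decision is made by the compiler, not by a hand-written list.
NPU_SUPPORTED = {"MatMul", "Add"}


def partition(ops, npu_supported):
    """Group consecutive operators by target device.

    Returns a list of (device, [operators]) subgraphs in execution order.
    """
    def device(op):
        return "NPU" if op in npu_supported else "CPU"

    return [(dev, list(group)) for dev, group in groupby(ops, key=device)]


# Made-up operator sequence loosely resembling a transformer block.
ops = ["Gather", "LayerNorm", "MatMul", "Add",
       "Softmax", "MatMul", "MatMul", "LayerNorm"]

for dev, subgraph in partition(ops, NPU_SUPPORTED):
    print(f"{dev}: {subgraph}")
```

Each run of NPU-capable operators becomes one NPU subgraph, and everything in between falls back to the CPU. Fewer, larger NPU subgraphs generally mean fewer hand-offs between the two execution units.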

After compilation, the model runs on both the NPU and the CPU, and a test prompt is applied. The response from the OPT-1.3B model shows correct answers. Keep in mind that we downloaded and deployed a publicly available pre-trained model, so its accuracy may not always be as expected. We strongly recommend fine-tuning publicly available models before production deployment. Here is the screen capture of the test prompt and the response:

Now let’s launch the Chatbot with the INT8 quantized model saved in the path \opt-1.3b_smoothquant\model_onnx_int8\

python gradio_app\opt_demo_gui.py --model_file .\opt-1.3b_smoothquant\model_onnx_int8\

As shown in the command prompt, the chatbot app runs on localhost on port 1234.

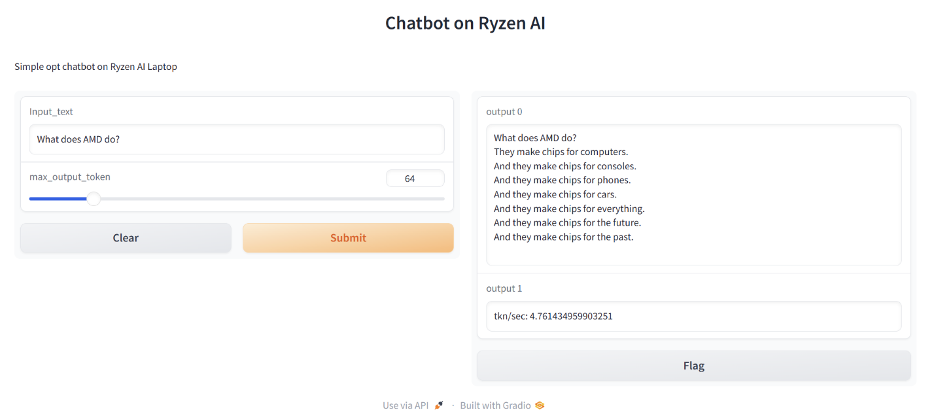

Open a browser and go to http://localhost:1234.

In the browser app, set max_output_token=64 and enter the prompt "What does AMD do?" in the Input_text box. The chatbot outputs the response as shown below. It also calculates the KPI (Key Performance Indicator) in tokens per second. In this case, it was ~4.7 tokens per second.
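If you want to reproduce a tokens-per-second figure in your own scripts, a measurement along the following lines is one option. This is a sketch, not the calculation used by the Gradio app: `tokens_per_second` is a hypothetical helper, and `fake_generate` is a stand-in that only simulates a model so the example runs anywhere.

```python
import time


def tokens_per_second(generate, prompt, max_new_tokens):
    """Time one generation call and return generated tokens per second."""
    start = time.perf_counter()
    tokens = generate(prompt, max_new_tokens)
    elapsed = time.perf_counter() - start
    return len(tokens) / elapsed if elapsed > 0 else float("inf")


def fake_generate(prompt, max_new_tokens):
    """Stand-in for a real model call; sleeps briefly and returns dummy tokens."""
    time.sleep(0.01)
    return ["tok"] * max_new_tokens


rate = tokens_per_second(fake_generate, "What does AMD do?", 64)
print(f"{rate:.1f} tokens/sec")
```

With a real model, `generate` would wrap the model's generation call and return only the newly generated tokens, so the prompt length does not inflate the number.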

Congratulations, you have successfully built a private AI chatbot. It runs entirely on the laptop with OPT-1.3B, a large language model (LLM).

Conclusion

AMD Ryzen™ AI full-stack tools empower users to easily create experiences on an AI PC that were not possible before - a developer with an AI application, a creator with innovative and engaging content, a business owner with the tools to optimize workflow and efficiency.

We are excited to bring this technology to our customers and partners. If you have any questions or need clarification, we would love to hear from you. Check out our GitHub repo for tutorials and example designs, join us in our discussion forum, or email us at amd_ai_mkt@amd.com.

Endnote(s):

- Ryzen™ AI is defined as the combination of a dedicated AI engine, AMD Radeon™ graphics engine, and Ryzen processor cores that enable AI capabilities. OEM and ISV enablement is required, and certain AI features may not yet be optimized for Ryzen AI processors. Ryzen AI is compatible with: (a) AMD Ryzen 7040 and 8040 Series processors except Ryzen 5 7540U, Ryzen 5 8540U, Ryzen 3 7440U, and Ryzen 3 8440U processors; and (b) all AMD Ryzen 8000G Series desktop processors except the Ryzen 5 8500G/GE and Ryzen 3 8300G/GE. Please check with your system manufacturer for feature availability prior to purchase. GD-220b.

- Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied. GD-97.
