Generative AI is changing the way software engineers work. Did you know that you can build your own coding Copilot locally with nothing more than an AMD Radeon™ graphics card? That's right. The latest AMD RDNA™ architecture accelerates large-model inference, so it powers not just cutting-edge gaming but also high-performance AI experiences. With the open software development platform AMD ROCm™, software developers can now run GPT-like code generation on their desktop machines. This blog shows how to build your personal coding Copilot with a Radeon graphics card, Continue (an open-source extension for VSCode and JetBrains that lets developers easily create their own modular AI software development system), LM Studio, and the latest open-source large model, Llama3.

Here is the recipe to set up the environment:

| Item | Version | Role | URL |
| --- | --- | --- | --- |
| Windows | Windows 11 | Host | |
| VSCode | | Integrated Development Environment | |
| Continue | | Copilot Extension | https://www.continue.dev/ |
| LM Studio | v0.2.20 ROCm | LLM inference server | Supports Llama3: https://lmstudio.ai/rocm |
| AMD Radeon 7000 Series | | LLM Inference Accelerator | |

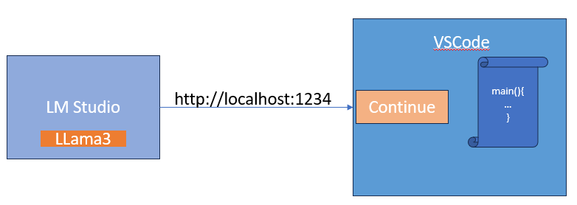

In this implementation, LM Studio deploys Llama3-8B as an inference server. The Continue extension connects to the LM Studio server and acts as the copilot client in VSCode.

A Brief Structure of the Coding Copilot System

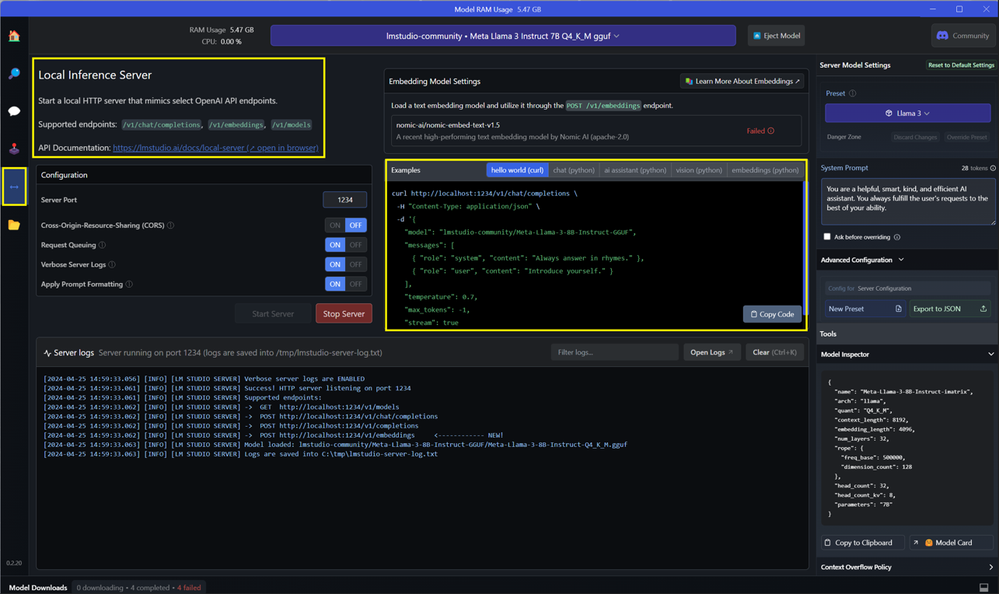

The latest version of LM Studio ROCm, v0.2.22, supports AMD Radeon 7000 Series graphics cards (gfx1030/gfx1100/gfx1101/gfx1102) and has added Llama3 to its support list. It also runs other state-of-the-art LLMs, such as Mistral, accelerated by AMD ROCm.

Step1: Follow "Experience Meta Llama 3 with AMD Ryzen™ AI and Radeon™ 7000 Series Graphics" to set up LM Studio with Llama3.

Besides working as a standalone chatbot, LM Studio can also act as an inference server. As shown in the picture below, select an LLM such as Llama3-8B, then click the Local Inference Server button on the left-hand side of the LM Studio user interface. This launches an OpenAI-compatible HTTP inference service. The default address is http://localhost:1234.

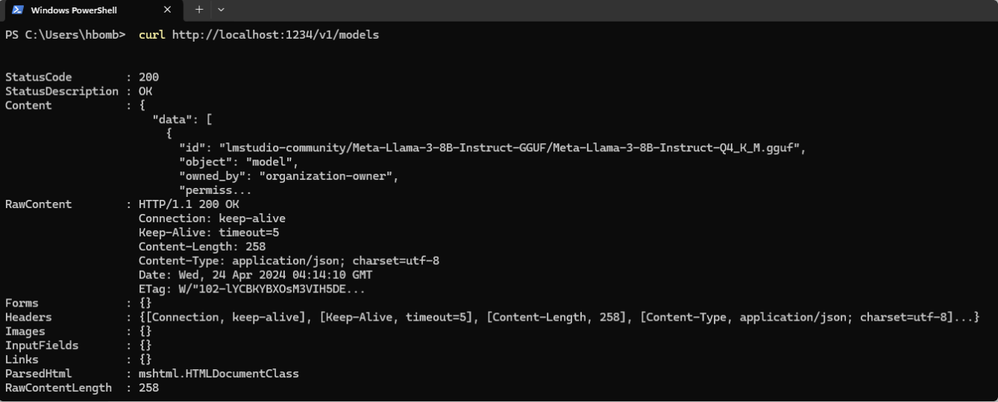

You may use the curl example code to verify the service with PowerShell.

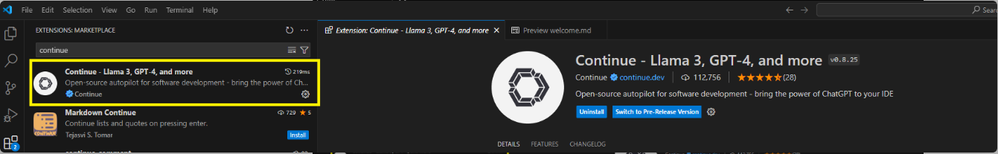

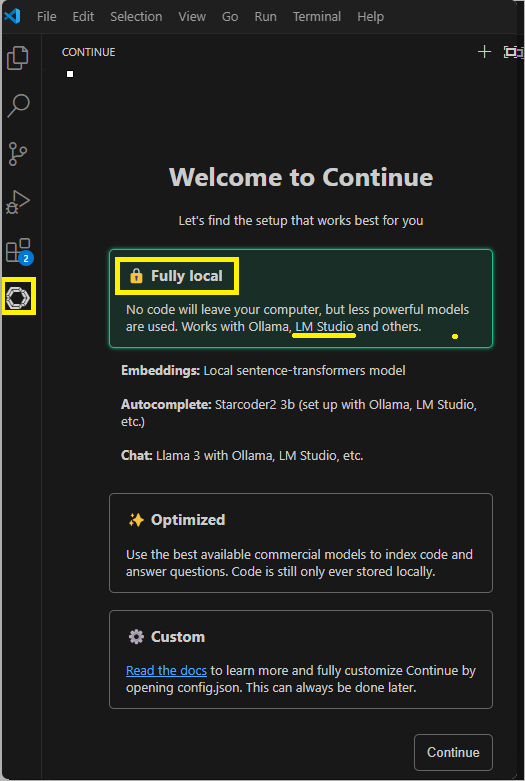
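For readers who prefer a script to curl, the same check can be sketched in Python using only the standard library. This is a minimal sketch, assuming the server exposes the OpenAI-style `/v1/chat/completions` route at the default address mentioned above. The `model` value, the system prompt, and the helper names `build_chat_request` and `ask` are illustrative assumptions, not something taken from LM Studio itself.

```python
import json
import urllib.request

# Default LM Studio server address from this post; the /v1 prefix is the
# OpenAI-style route layout (an assumption if your version differs).
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request.

    "local-model" is a placeholder name chosen for this sketch.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the text of the first reply."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the inference server running, calling `print(ask("Write a Python function that reverses a string."))` should print the model's reply. If the server is not running, `ask` raises a connection error, which is itself a quick way to tell that the service is not up.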

Step2: Set up Continue in VSCode

Search for and install the Continue extension in VSCode.

You will see that Continue now works with LM Studio and other inference frameworks.

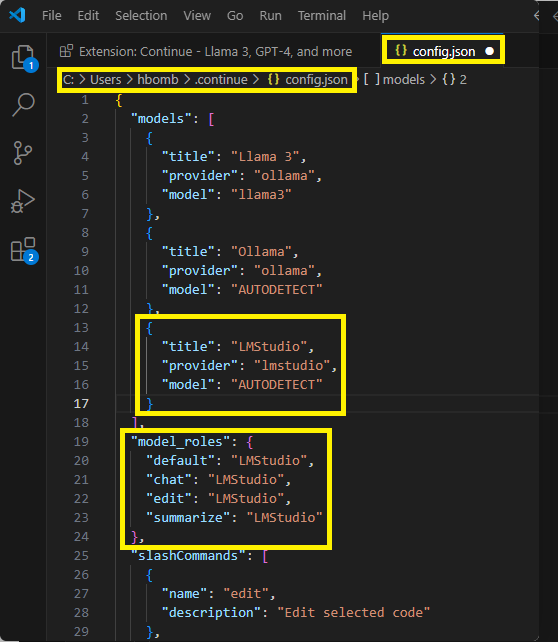

Refer to https://continuedev.netlify.app/model-setup/configuration to modify Continue's config.json so that LM Studio is the default model provider. Locate config.json and add the content highlighted in the picture below:

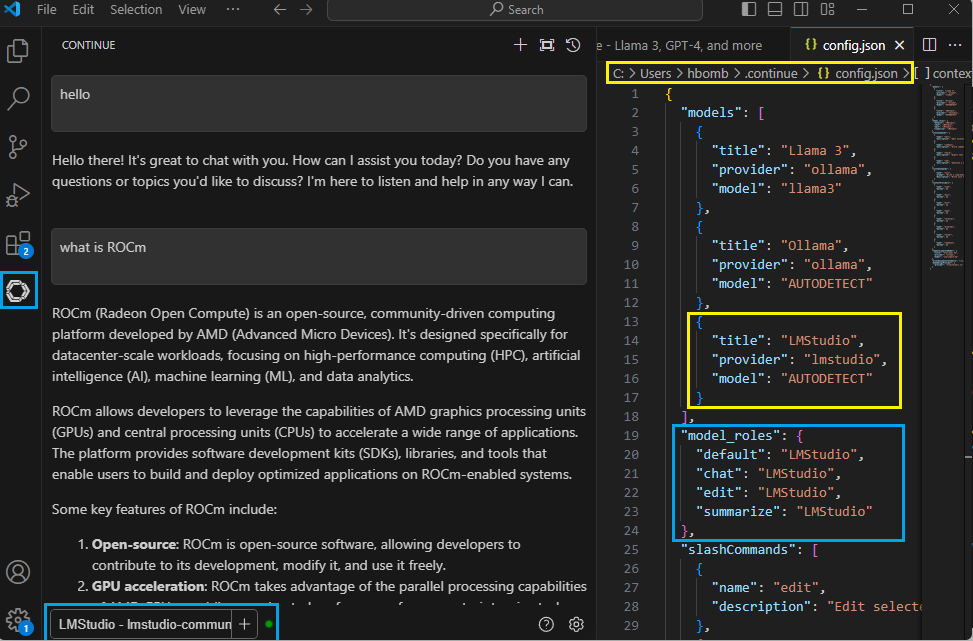
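As a text version of this step, the sketch below builds the kind of model entry that goes into config.json and merges it into an existing configuration. It is a hedged illustration, not the exact content of the picture: the field names (`title`, `provider`, `model`) and the `lmstudio` provider value are assumptions based on Continue's config.json format at the time of writing, the `model` label `llama3-8b` is a placeholder, and `add_lm_studio_model` is a hypothetical helper. Treat the highlighted picture and the linked documentation as authoritative.

```python
import json

# Assumed shape of a Continue model entry for LM Studio.
# "model" is a placeholder label chosen for this sketch.
LM_STUDIO_MODEL = {
    "title": "LM Studio",
    "provider": "lmstudio",
    "model": "llama3-8b",
}


def add_lm_studio_model(config, entry=LM_STUDIO_MODEL):
    """Return a copy of `config` with `entry` placed first in "models".

    An existing entry with the same title is replaced, so running the
    function twice does not create duplicates. All other keys are kept.
    """
    updated = dict(config)
    others = [
        model for model in config.get("models", [])
        if model.get("title") != entry["title"]
    ]
    updated["models"] = [dict(entry)] + others
    return updated


# Print the result for an empty configuration so it can be compared
# with, or pasted into, the real config.json by hand.
print(json.dumps(add_lm_studio_model({"models": []}), indent=2))
```

The function works on a plain dictionary instead of writing to disk, so nothing is overwritten by accident. Placing the entry first is a choice made for this sketch; whether list order affects which model Continue selects by default is not confirmed here.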

Then choose LM Studio as the copilot backend (at the lower-left corner of the UI). You can now chat with Llama3 through Continue in VSCode.

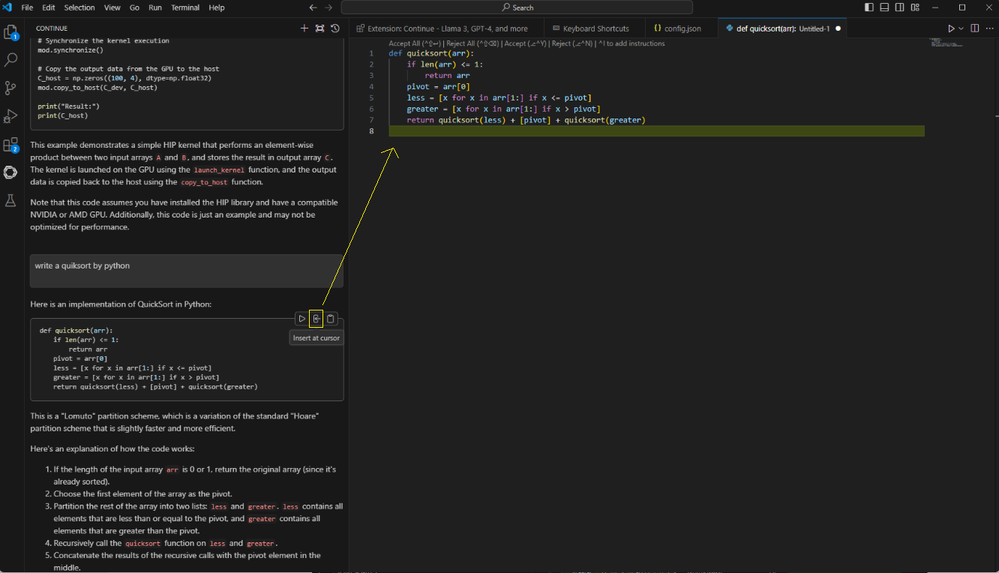

Continue provides a button to copy code from the chat into your code file.

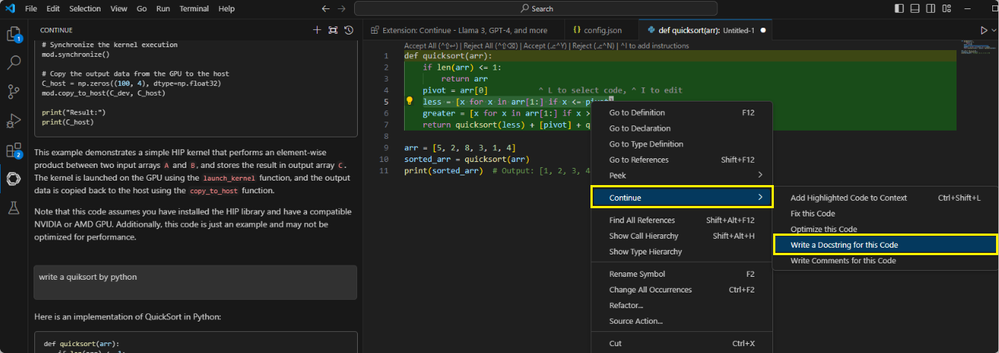

Right-click in the code editing window to open Continue's quick menu.

At this point, automatic coding with the Llama3 model in LM Studio through Continue is up and running. Continue lets users select the right AI model for each task, whether open-source or commercial, running locally or remotely, and used for chat, autocomplete, or embeddings. You can find more usage details at https://continuedev.netlify.app/intro/.

Now you have your own AI Copilot running on an AMD Radeon graphics card. This is a simple, easy-to-use setup for individual developers, especially those who do not yet have access to cloud instances for large-scale AI inference.

The AMD ROCm open ecosystem is developing rapidly, with AMD GPUs supporting the latest LLMs and with excellent software applications such as LM Studio. If you need more information on AMD AI acceleration solutions and developer ecosystem plans, please email amd_ai_mkt@amd.com.