AMD Radeon™ PRO GPUs and ROCm™ Software for LLM Inference

Large Language Models (LLMs) are no longer the preserve of large enterprises with dedicated IT departments, running services in the cloud. Combined with the power of AMD hardware, new open-source LLMs like Meta's Llama 2 and 3, including the just-released Llama 3.1, mean that even small enterprises can run their own customized AI tools locally, on standard desktop workstations, without the need to store sensitive data online.

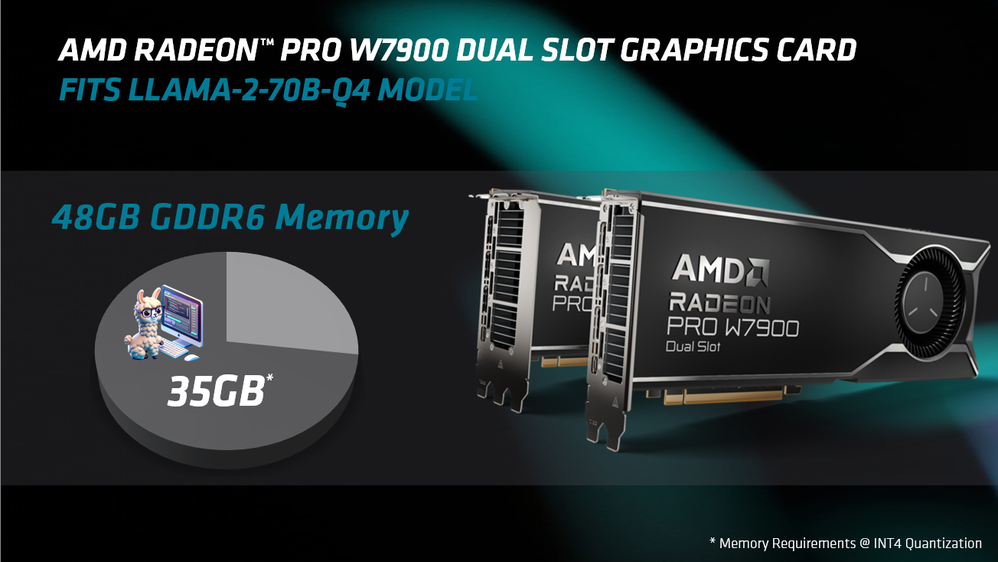

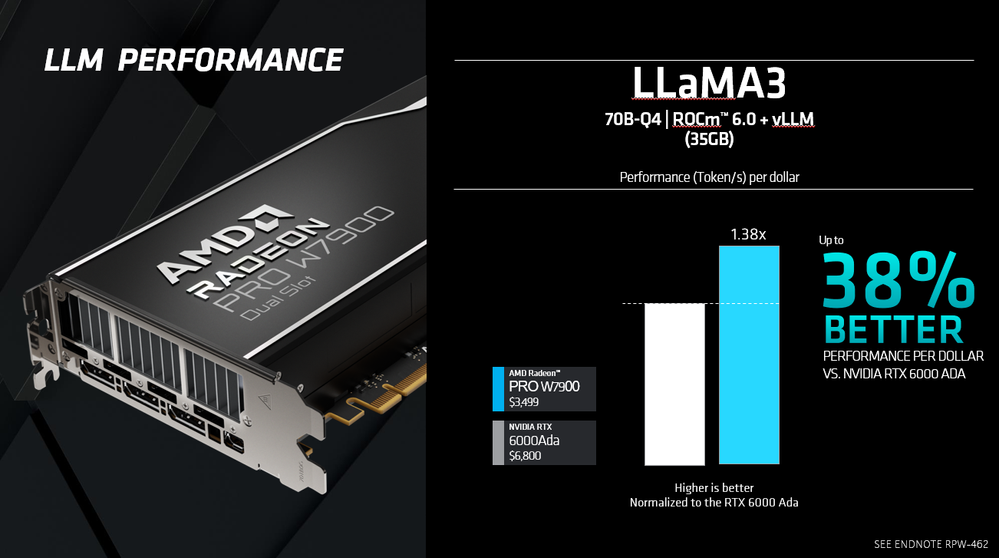

With their dedicated AI accelerators and enough on-board memory to run even the larger language models, workstation GPUs like the new AMD Radeon™ PRO W7900 Dual Slot provide market-leading performance per dollar with Llama. That makes it affordable for small firms to run custom chatbots, retrieve technical documentation or create personalized sales pitches, while the more specialized Code Llama models enable programmers to generate and optimize code for new digital products.1

And with ROCm™ 6.1.3, the latest release of AMD's open software stack, now supporting AI workloads on multiple Radeon™ PRO GPUs, small and medium-sized enterprises (SMEs) and developers can run larger and more complex LLMs, and serve more users, than ever before.

New uses for LLMs in Enterprise AI

Although AI techniques are widely used in technical fields like data analysis and computer vision, and generative AI tools are increasingly being adopted in the design and entertainment markets, the potential use cases for AI are much broader.

Specialized LLMs like Meta's open-source Code Llama enable programmers, app developers and web designers to generate working code from simple text prompts, or to debug existing code bases, while its parent model, Llama, has a huge range of potential uses for 'Enterprise AI', particularly for customer service, information retrieval and product personalization.

Off-the-shelf models are optimized for the widest possible range of users rather than for specialized small businesses such as medical practices, engineering firms or law firms. To close that gap, SMEs can use retrieval-augmented generation (RAG) to make existing AI models aware of their own internal data, for example product documentation or customer records, or can even fine-tune the models with that data, resulting in more accurate AI-generated output with less need for manual editing.
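To make the RAG idea concrete, here is a minimal retrieval sketch in Python. Production RAG systems typically use a neural embedding model and a vector database; this sketch swaps in plain bag-of-words cosine similarity so that it runs with the standard library alone. The function names and sample documents are hypothetical. Internal documents are scored against the user's question, and the best matches are prepended to the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents standing in for a firm's own data.
SAMPLE_DOCS = [
    "The X100 pump has a maximum flow rate of 40 litres per minute.",
    "Our support line is open 9am to 5pm on weekdays.",
    "The X100 pump warranty lasts two years.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents whose word counts are most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine_similarity(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_augmented_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved internal documents to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the internal context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_augmented_prompt("What is the X100 pump warranty?", SAMPLE_DOCS, k=1))
```

The model itself is unchanged; only the prompt carries the firm's data, which is why RAG is usually the quicker first step before considering fine-tuning.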

How can small enterprises make use of LLMs?

So how could an SME make use of a custom Large Language Model? Let's look at a few examples. Using an LLM customized on its own internal data:

- A local store could use a chatbot to answer customer enquiries, even out of work hours.

- A larger store could enable helpline staff to retrieve customer information more quickly.

- A sales team could use AI tools in its CRM system to generate tailored client pitches.

- An engineering firm could generate documentation for complex technical products.

- A solicitor could generate the first drafts of contracts.

- A doctor could summarize calls to patients and add the information to their medical records.

- A mortgage broker could populate application forms with data from clients' documents.

- A marketing agency could generate specialist copy for blogs and social media posts.

- An app development agency could generate and optimize code for new digital products.

- A web developer could look up documentation on syntax and online standards.

Quite a long list already – and that's only scratching the surface of the true potential of Enterprise AI.

Why run LLMs locally, not in the cloud?

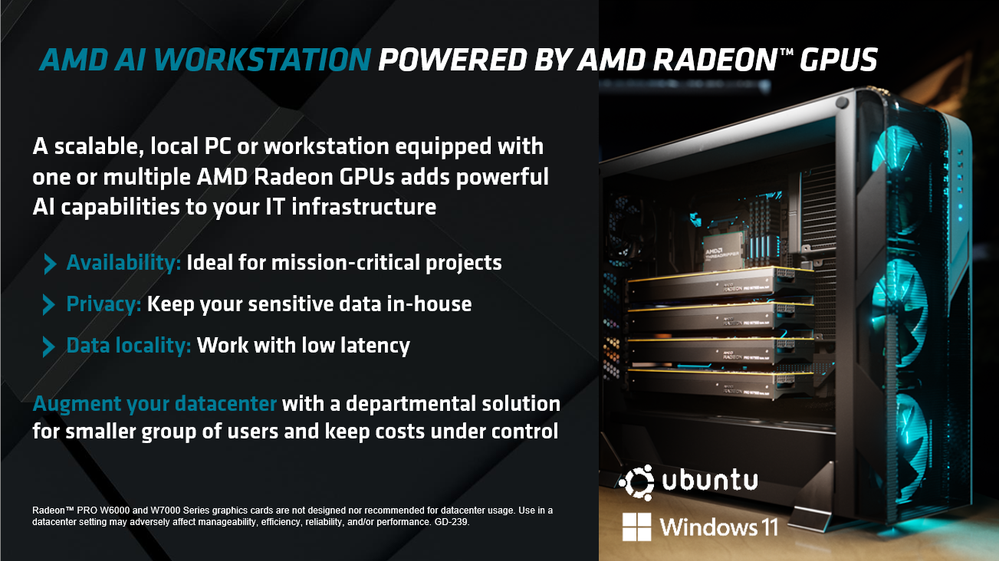

Although the technology industry provides a range of cloud-based options for deploying AI services, there are compelling reasons for small businesses to want to host LLMs locally.

Data security

Research from Predibase suggests that concern about sharing sensitive data is the largest single reason for enterprises not adopting LLMs in production.2 Running AI models on a local workstation removes the need to upload confidential client details, product documentation or code to the cloud.

Lower latency

Running LLMs locally rather than on a remote server reduces lag in use cases where instant feedback is important, like operating a chatbot, or searching product documentation in order to provide real-time support to customers calling in to a helpline.

More control over mission-critical tasks

By running LLMs locally, technical staff can troubleshoot problems or deploy updates directly, without having to wait on a service provider located in another time zone.

Ability to sandbox experimental tools

Using a local workstation as a sandbox environment makes it possible for IT departments to prototype and test new AI tools before deploying them at scale within an organization.

How can AMD GPUs help small enterprises to deploy LLMs?

For an SME, hosting its own custom AI tools need not be a complex or expensive business, as apps like LM Studio make it easy to run LLMs on standard Windows laptops and desktop systems. Enabling retrieval-augmented generation to customize the output is a straightforward process, and since LM Studio is optimized to run on AMD GPUs via the HIP runtime API, it can use the dedicated AI accelerators in current AMD graphics cards to boost performance.
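As a rough illustration of how an in-house tool might talk to a model hosted this way, the sketch below sends a question plus retrieved context to LM Studio's local server, which exposes an OpenAI-compatible HTTP API. It assumes the server is running on its default address, `http://localhost:1234`; the `model` value `"local-model"` and the helper names are hypothetical placeholders. Only the Python standard library is used.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port with
# OpenAI-compatible endpoints; change this URL if you configured another port.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(question: str, context: str, model: str = "local-model") -> dict:
    """Build an OpenAI-style chat completion payload.

    `model` is a hypothetical placeholder: use the identifier of the model
    you have loaded in LM Studio.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system",
             "content": "Answer using only this internal context:\n" + context},
            {"role": "user", "content": question},
        ],
        "temperature": 0.2,  # low temperature for factual, repeatable answers
    }


def ask_local_llm(payload: dict, url: str = LMSTUDIO_URL, timeout: float = 120.0) -> str:
    """POST the payload to the local server and return the generated reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-compatible responses place the generated text here.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request(
        "How long is the X100 pump warranty?",
        "- The X100 pump warranty lasts two years.",
    )
    print(ask_local_llm(payload))
```

Because the request goes to `localhost`, neither the question nor the internal context leaves the workstation.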

The memory capacity of consumer GPUs like the 24GB Radeon™ RX 7900 XTX is sufficient to run smaller models like the 7-billion-parameter Llama-2-7B, but the extra on-board memory in professional GPUs like the 32GB Radeon™ PRO W7800 and 48GB Radeon™ PRO W7900 makes it possible to run larger and more accurate models like Llama-2-30B-Q8, a 30-billion-parameter model quantized to 8 bits per weight.
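The link between parameter count, quantization and GPU memory is simple arithmetic: each parameter occupies `bits_per_weight / 8` bytes. The Python sketch below applies that rule; the 16-bit precision assumed for the 7B model and the 20% allowance for the KV cache, activations and runtime buffers are assumptions, not figures from AMD's testing.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory taken by the model weights alone, in decimal GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits_per_weight / 8) bytes, divided by 1e9.
    """
    return params_billion * bits_per_weight / 8


def fits_on_gpu(params_billion: float, bits_per_weight: float,
                vram_gb: float, overhead: float = 0.2) -> bool:
    """Rough check that weights plus an assumed fractional overhead fit in VRAM.

    The 20% default overhead is an assumption; real usage grows with context
    length and the number of concurrent requests.
    """
    return weight_memory_gb(params_billion, bits_per_weight) * (1 + overhead) <= vram_gb


if __name__ == "__main__":
    print(weight_memory_gb(7, 16))   # 7B model at 16 bits per weight
    print(weight_memory_gb(30, 8))   # 30B model at 8 bits per weight (Q8)
    print(fits_on_gpu(7, 16, 24), fits_on_gpu(30, 8, 24), fits_on_gpu(30, 8, 48))
```

Under these assumptions a 7B model at 16 bits needs about 14 GB for its weights, comfortably within a 24GB card, while a 30B Q8 model needs about 30 GB for weights alone, more than any 24GB card holds. Quantized formats also store scaling factors, so real model files are somewhat larger than this estimate.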

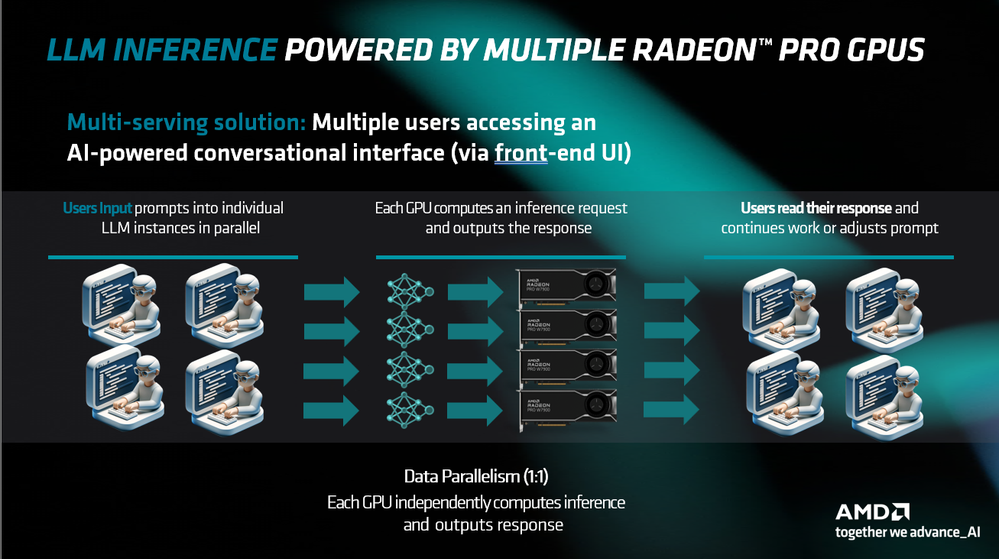

For more demanding tasks, users can host their own fine-tuned LLMs directly. ROCm™ 6.1.3, the latest version of the open-source software stack that includes HIP, introduces support for multiple Radeon PRO GPUs, so an IT department within an organization could deploy a Linux-based system with four Radeon PRO W7900 cards to serve requests from multiple users simultaneously.
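One way to serve many users from a four-GPU workstation is to run one copy of the model per card and hand out requests in turn. The Python sketch below assumes that data-parallel design; a model too large for a single card would instead have to be split across GPUs, which this does not cover. It relies on ROCm's `HIP_VISIBLE_DEVICES` environment variable to pin each worker process to one GPU. The `RoundRobinDispatcher` class is a hypothetical helper, and the inference server each worker would run is deliberately left out.

```python
import itertools
import os

NUM_GPUS = 4  # e.g. a workstation with four Radeon PRO W7900 cards


def worker_env(gpu_index: int) -> dict[str, str]:
    """Copy of the current environment that exposes only one GPU to a process.

    HIP_VISIBLE_DEVICES limits which GPUs a HIP/ROCm process can see, so a
    worker launched with this environment sees card `gpu_index` as device 0.
    """
    env = dict(os.environ)
    env["HIP_VISIBLE_DEVICES"] = str(gpu_index)
    return env


class RoundRobinDispatcher:
    """Hypothetical helper: assign incoming requests to GPU workers in turn."""

    def __init__(self, num_gpus: int) -> None:
        self._cycle = itertools.cycle(range(num_gpus))

    def assign(self) -> int:
        """Return the index of the GPU worker that should take the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    dispatcher = RoundRobinDispatcher(NUM_GPUS)
    for user in ["alice", "bob", "carol", "dave", "erin"]:
        print(user, "-> GPU", dispatcher.assign())
    # Each worker would be started with env=worker_env(i), e.g. via
    # subprocess.Popen, running whichever inference server you deploy.
```

Round-robin ignores how long each request takes; a shared queue from which idle workers pull the next request would balance uneven workloads better.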

For SMEs, AMD hardware provides unbeatable AI performance for the price: in AMD testing with Llama 3 70B, the performance-per-dollar of the Radeon PRO W7900 is up to 38% higher than that of the current competing top-of-the-range card, the NVIDIA RTX™ 6000 Ada Generation.1
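Performance-per-dollar is a ratio of throughput to price, and an "X% higher" figure compares two such ratios. The Python sketch below spells out that arithmetic. Footnote 1 describes the test systems but not the throughput metric or the prices used, so the tokens-per-second interpretation and the sample numbers are hypothetical and do not reproduce AMD's result.

```python
def perf_per_dollar(throughput: float, price_usd: float) -> float:
    """Throughput (e.g. tokens per second) delivered per dollar of card price."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return throughput / price_usd


def relative_advantage(throughput_a: float, price_a: float,
                       throughput_b: float, price_b: float) -> float:
    """Fractional performance-per-dollar advantage of card A over card B.

    A return value of 0.38 corresponds to "38% higher".
    """
    return perf_per_dollar(throughput_a, price_a) / perf_per_dollar(throughput_b, price_b) - 1


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not AMD's measured results.
    # Card A delivers 90% of card B's throughput at half the price.
    print(f"{relative_advantage(9.0, 1000.0, 10.0, 2000.0):.0%}")
```

A card can therefore lead on performance-per-dollar while being slower in absolute terms, so both numbers matter when sizing a deployment.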

AMD GPUs: powering a new generation of AI tools for small enterprises

With the deployment and customization of LLMs becoming more straightforward than ever before, even SMEs can now run their own AI tools, tailored to a wide range of business and coding tasks.

Thanks to their dedicated AI hardware and high on-board memory capacity, professional desktop GPUs like the AMD Radeon PRO W7900 are ideally suited to run open-source LLMs like Llama 2 and 3 locally, avoiding the need to upload sensitive data to the cloud. And with ROCm making it possible to share inferencing across multiple Radeon PRO GPUs, enterprises now have the capacity to host even larger AI models – and serve more users – for a fraction of the cost of rival solutions1.

David Diederichs is Product Marketing Manager for AMD. His postings are his own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied. GD-5

© 2024 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, AMD ROCm, CDNA, Radeon, and combinations thereof are trademarks of Advanced Micro Devices, Inc. Linux® is the registered trademark of Linus Torvalds in the U.S. and other countries. Microsoft and Windows are registered trademarks of Microsoft Corporation in the US and/or other countries. PyTorch, the PyTorch logo and any related marks are trademarks of The Linux Foundation. TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc. Ubuntu and the Ubuntu logo are registered trademarks of Canonical Ltd. Other product names used in this publication are for identification purposes only and may be trademarks of their respective owners.

1 Testing as of May 10, 2024 by AMD Performance Labs on a test system consisting of an AMD Ryzen Threadripper PRO 5975WX, 64GB DDR4-2133MHz RAM, Ubuntu OS, 64-bit, with AMD Radeon™ PRO W7900 Dual Slot, vs. a similar system with NVIDIA RTX 6000 Ada using Llama 3 70B GPTQ. Performance may vary based on factors including driver version and system configuration. RPW-462
