AMD Composable Kernel library: efficient fused kernels for AI apps with just a few lines of code

Graph optimization plays an important role in reducing the time and resources needed for training and inference of AI models. One of its most important functions is identifying opportunities to fuse various combinations of tensor operators, which can improve computational efficiency by reducing memory allocation and traffic. However, rapidly delivering high-performance backend kernels for a wide range of fused tensor operators is a big challenge. Without such a solution, AI applications could previously enable only a limited set of graph optimizations on real hardware.

The AMD Composable Kernel (CK) library aims to address these challenges for AMD's current and future generations of GPUs [2]. CK uses the general-purpose HIP C++ language and is completely open source. CK was created with the following goals in mind:

- Performant and productive: At the core of CK is a set of carefully defined, highly optimized, and reusable primitives. These primitives are used to implement all conventional and fused operators in CK. Reusing them shortens the backend development cycle while reliably delivering high performance.

- Proficient for today's problems and flexible for future problems: CK aims to provide a self-contained backend solution for all AI operators. This makes advanced fused operators possible, since the entire backend can be implemented within CK without requiring an external library. The reusable primitives in CK are proficient for the popular AI models seen today (machine vision, natural language processing, etc.). New primitives can be defined in the future if new problems emerge.

- Simple but powerful for AI system experts: All the AI operators in CK are implemented as HIP C++ templates. AI system developers can customize attributes of the operators, such as data type, arithmetic, memory layout, and number of operands, by instantiating the C++ template in various ways with a few lines of code.

- Approachable C++ Interface: CK aims to be inviting and productive for HPC algorithm experts, who play a crucial role in pushing the performance boundary of AI acceleration. CK's reusable primitives and the operators built on top of them are all implemented as HIP C++ APIs, instead of Intermediate Representations (IRs). HPC algorithm experts can add features and optimize performance by simply developing a better algorithm, without also building a customized compiler pass that accompanies the algorithm.

- Portable: Graph optimizations on the CK backend will run on current and future generations of GPUs, and will eventually be portable to other architectures, like CPUs.
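To make the "few lines of code" goal more concrete, here is a minimal host-side sketch in plain C++ (not HIP, and not CK's actual API). The names `FusedGemm`, `RowMajor`, `ColMajor`, and `AddRelu` are hypothetical and exist only for this illustration. The sketch only mimics the pattern of selecting data type, memory layout, and element-wise arithmetic through template arguments.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical layout tags: map a (row, col) coordinate to a linear offset.
struct RowMajor {
    static std::size_t offset(std::size_t r, std::size_t c,
                              std::size_t /*rows*/, std::size_t cols) {
        return r * cols + c;
    }
};
struct ColMajor {
    static std::size_t offset(std::size_t r, std::size_t c,
                              std::size_t rows, std::size_t /*cols*/) {
        return c * rows + r;
    }
};

// Hypothetical element-wise arithmetic applied to each GEMM result: relu(acc + d).
struct AddRelu {
    template <typename T>
    T operator()(T acc, T d) const {
        const T v = acc + d;
        return v > T(0) ? v : T(0);
    }
};

// Hypothetical fused operator template: E = Arithmetic(A * B, D).
// A is M x K, B is K x N; D and E are M x N and stored row-major.
template <typename DataType, typename ALayout, typename BLayout, typename Arithmetic>
struct FusedGemm {
    static std::vector<DataType> run(const std::vector<DataType>& a,
                                     const std::vector<DataType>& b,
                                     const std::vector<DataType>& d,
                                     std::size_t M, std::size_t N, std::size_t K) {
        assert(a.size() == M * K && b.size() == K * N && d.size() == M * N);
        std::vector<DataType> e(M * N);
        const Arithmetic arithmetic{};
        for (std::size_t m = 0; m < M; ++m) {
            for (std::size_t n = 0; n < N; ++n) {
                DataType acc = DataType(0);
                for (std::size_t k = 0; k < K; ++k) {
                    acc += a[ALayout::offset(m, k, M, K)] *
                           b[BLayout::offset(k, n, K, N)];
                }
                // The extra operand is consumed while the result is still local.
                e[m * N + n] = arithmetic(acc, d[m * N + n]);
            }
        }
        return e;
    }
};

// The "few lines of code": each alias is a differently customized operator.
using FloatGemmAddRelu = FusedGemm<float, RowMajor, ColMajor, AddRelu>;
using IntGemmAddRelu   = FusedGemm<int, RowMajor, RowMajor, AddRelu>;
```

Calling `IntGemmAddRelu::run(a, b, d, M, N, K)` and `FloatGemmAddRelu::run(...)` exercises two different instantiations of the same source. In a real GPU library the body would be a tuned kernel, but the customization still happens at the alias lines.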

Core Concepts

CK uses two concepts to increase backend developers' productivity:

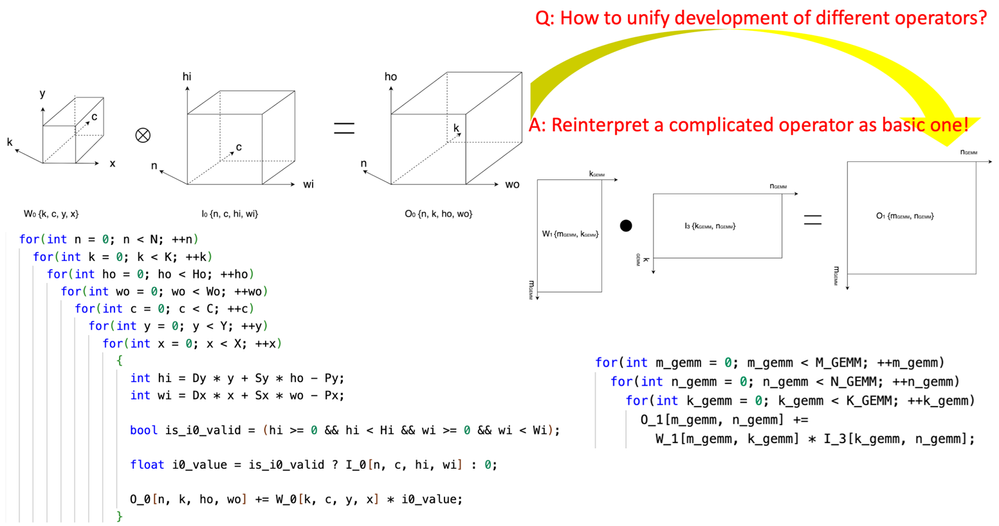

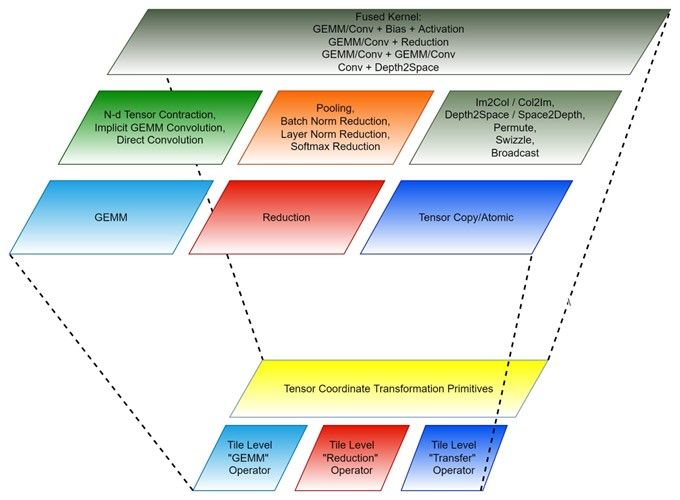

- Algorithm complexity reduction for AI tensor operators, using an innovative technique we call "tensor coordinate transformation." We generalized and defined a set of tensor coordinate transformation primitives, which are used to reinterpret complex operators (such as Convolution, Grouped Norm Reduction, and Depth2Space) as simpler operators (such as GEMM, 2D reduction, and tensor transfer). This technique allows algorithms written for a simpler operator to be reused by all of its complex counterparts.

- A tile-based programming model: Developing a fused operator can be thought of as breaking down each of the corresponding conventional operators into "smaller pieces," which can then be combined into the fused operator. Each of the "smaller pieces" is a tensor operator that operates on small tiles of the original tensors, and we call it a "tile tensor operator." CK contains a set of highly optimized tile tensor operator primitives, and all the AI operators in CK are implemented on top of these primitives. Currently, these primitives include "tile GEMM," "tile reduction," and "tile tensor transfer," and each primitive has implementations for a block, a warp, and a thread.

The tensor coordinate transformation primitives and tile tensor operator primitives together form CK's reusable primitives.
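As a rough illustration of the coordinate transformation idea, the sketch below reinterprets a 2D convolution as a GEMM in plain C++. It is not CK code. The `ConvProblem` struct, the function names, and the tensor layouts (NHWC input, KYXC weights, NHoWoK output) are assumptions made for this example. The key piece is `input_offset`, which turns a GEMM coordinate `(m, k)` into an input tensor offset, so the GEMM loop itself knows nothing about convolution.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical problem description (NHWC input, KYXC weights, NHoWoK output).
struct ConvProblem {
    int N, Hi, Wi, C;  // input batch, height, width, channels
    int K, Y, X;       // output channels, filter height, filter width
    int stride, pad;
    int Ho() const { return (Hi + 2 * pad - Y) / stride + 1; }
    int Wo() const { return (Wi + 2 * pad - X) / stride + 1; }
};

// Coordinate transformation: GEMM coordinate (m, k) -> input tensor offset.
// m is split into (n, ho, wo), k is split into (y, x, c), and the spatial
// coordinates are recombined as hi = ho * stride + y - pad (same for wi).
// Returns -1 when the coordinate falls into the zero padding.
long input_offset(const ConvProblem& p, int m, int k) {
    const int wo = m % p.Wo();
    const int ho = (m / p.Wo()) % p.Ho();
    const int n  = m / (p.Wo() * p.Ho());
    const int c  = k % p.C;
    const int x  = (k / p.C) % p.X;
    const int y  = k / (p.C * p.X);
    const int hi = ho * p.stride + y - p.pad;
    const int wi = wo * p.stride + x - p.pad;
    if (hi < 0 || hi >= p.Hi || wi < 0 || wi >= p.Wi) return -1;
    return ((static_cast<long>(n) * p.Hi + hi) * p.Wi + wi) * p.C + c;
}

// A plain GEMM loop (M x K times K x N) that reads A through the transformation.
std::vector<float> conv_as_gemm(const ConvProblem& p, const std::vector<float>& in,
                                const std::vector<float>& wei) {
    const int M = p.N * p.Ho() * p.Wo();
    const int N = p.K;
    const int K = p.Y * p.X * p.C;
    std::vector<float> out(static_cast<std::size_t>(M) * N, 0.0f);
    for (int m = 0; m < M; ++m) {
        for (int n = 0; n < N; ++n) {
            float acc = 0.0f;
            for (int k = 0; k < K; ++k) {
                const long off = input_offset(p, m, k);
                const float a = off < 0 ? 0.0f : in[static_cast<std::size_t>(off)];
                acc += a * wei[static_cast<std::size_t>(n) * K + k];
            }
            out[static_cast<std::size_t>(m) * N + n] = acc;
        }
    }
    return out;
}

// Reference: direct convolution with explicit spatial loops.
std::vector<float> conv_direct(const ConvProblem& p, const std::vector<float>& in,
                               const std::vector<float>& wei) {
    std::vector<float> out(static_cast<std::size_t>(p.N) * p.Ho() * p.Wo() * p.K, 0.0f);
    for (int n = 0; n < p.N; ++n)
    for (int ho = 0; ho < p.Ho(); ++ho)
    for (int wo = 0; wo < p.Wo(); ++wo)
    for (int k = 0; k < p.K; ++k) {
        float acc = 0.0f;
        for (int y = 0; y < p.Y; ++y)
        for (int x = 0; x < p.X; ++x)
        for (int c = 0; c < p.C; ++c) {
            const int hi = ho * p.stride + y - p.pad;
            const int wi = wo * p.stride + x - p.pad;
            if (hi < 0 || hi >= p.Hi || wi < 0 || wi >= p.Wi) continue;
            acc += in[((n * p.Hi + hi) * p.Wi + wi) * p.C + c] *
                   wei[((k * p.Y + y) * p.X + x) * p.C + c];
        }
        out[((n * p.Ho() + ho) * p.Wo() + wo) * p.K + k] = acc;
    }
    return out;
}
```

With these layouts the weight and output tensors need no transformation at all: KYXC weights are already a K x (Y·X·C) matrix, and the NHoWoK output is already an (N·Ho·Wo) x K matrix. Only the input tensor is reinterpreted, which is why an optimized GEMM algorithm can be reused unchanged.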

Fig. 1 Use CK’s tensor coordinate transformation primitives to reinterpret convolution as GEMM

Fig. 2 CK components (bottom: reusable primitives, top: conventional and fused operators)
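The tile-based programming model can be sketched on the host in plain C++ as follows. This is an illustration under assumptions, not CK source: the helper names `tile_gemm`, `tile_bias_relu`, and `tile_store`, the fixed 2x2 tile size, and the choice of GEMM + bias + ReLU as the fused operator are all made up for the example. The point is that the fused operator is assembled from small per-tile pieces, and the intermediate GEMM result lives only in a small local tile instead of a full-size buffer.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

constexpr int TileM = 2;
constexpr int TileN = 2;
using Tile = std::array<float, TileM * TileN>;  // stands in for registers / local memory

// "Tile GEMM": accumulate one TileM x TileN tile of A (M x K) times B (K x N).
void tile_gemm(const std::vector<float>& a, const std::vector<float>& b,
               int N, int K, int m0, int n0, Tile& acc) {
    for (int i = 0; i < TileM; ++i)
        for (int j = 0; j < TileN; ++j)
            for (int k = 0; k < K; ++k)
                acc[i * TileN + j] += a[(m0 + i) * K + k] * b[k * N + (n0 + j)];
}

// Tile element-wise step: bias add and ReLU while the tile is still local.
void tile_bias_relu(const std::vector<float>& bias, int n0, Tile& acc) {
    for (int i = 0; i < TileM; ++i)
        for (int j = 0; j < TileN; ++j)
            acc[i * TileN + j] = std::max(acc[i * TileN + j] + bias[n0 + j], 0.0f);
}

// "Tile tensor transfer": write the finished tile to the output exactly once.
void tile_store(const Tile& acc, std::vector<float>& out, int N, int m0, int n0) {
    for (int i = 0; i < TileM; ++i)
        for (int j = 0; j < TileN; ++j)
            out[(m0 + i) * N + (n0 + j)] = acc[i * TileN + j];
}

// Fused operator built from the tile pieces; no full-size intermediate exists.
std::vector<float> fused_gemm_bias_relu(const std::vector<float>& a,
                                        const std::vector<float>& b,
                                        const std::vector<float>& bias,
                                        int M, int N, int K) {
    assert(M % TileM == 0 && N % TileN == 0);  // sketch: no partial tiles
    std::vector<float> out(static_cast<std::size_t>(M) * N);
    for (int m0 = 0; m0 < M; m0 += TileM) {
        for (int n0 = 0; n0 < N; n0 += TileN) {
            Tile acc{};  // zero-initialized accumulator
            tile_gemm(a, b, N, K, m0, n0, acc);
            tile_bias_relu(bias, n0, acc);
            tile_store(acc, out, N, m0, n0);
        }
    }
    return out;
}

// Unfused reference: the full GEMM result is materialized, then re-read.
std::vector<float> unfused_gemm_bias_relu(const std::vector<float>& a,
                                          const std::vector<float>& b,
                                          const std::vector<float>& bias,
                                          int M, int N, int K) {
    std::vector<float> c(static_cast<std::size_t>(M) * N, 0.0f);
    for (int m = 0; m < M; ++m)
        for (int n = 0; n < N; ++n)
            for (int k = 0; k < K; ++k)
                c[m * N + n] += a[m * K + k] * b[k * N + n];
    for (int m = 0; m < M; ++m)
        for (int n = 0; n < N; ++n)
            c[m * N + n] = std::max(c[m * N + n] + bias[n], 0.0f);
    return c;
}
```

Both functions return the same values. The difference is that the unfused version writes and re-reads an M x N intermediate, which on a GPU would be a round trip through device memory.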

Code Structure

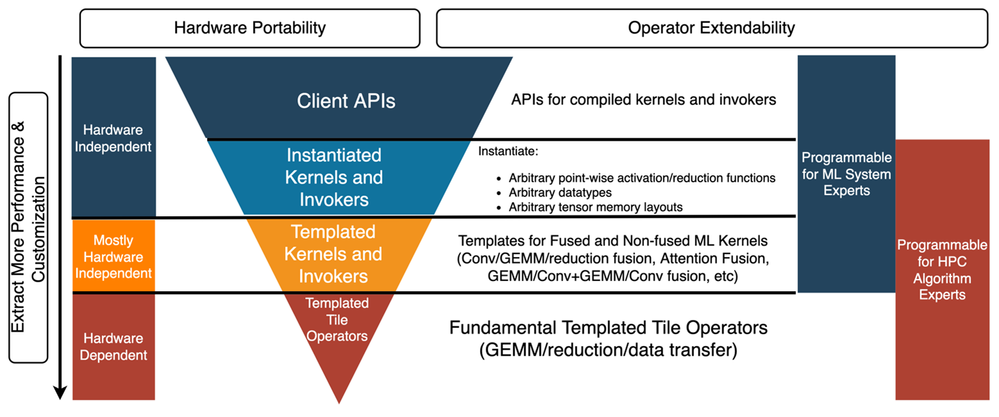

The current CK library is structured into four layers, from bottom to top: "Templated Tile Operator," "Templated Kernel and Invoker," "Instantiated Kernel and Invoker," and "Client API" [3]. The layers serve different groups of developers:

- AI system expert: "I want high-performance kernels for conventional and fused AI operators out of the box." A great option for this person would be the "Client API" and "Instantiated Kernel and Invoker" layers, as in this client example [4], where a GEMM + Add + Add + FastGeLU fused operator is provided to the user as a pre-instantiated and pre-compiled object.

- AI system expert: "I do state-of-the-art model-level graph optimizations for an open-source ML framework. I need a self-contained solution that provides a wide range of highly optimized fused kernels. I also need to customize these kernels, so a 'take it or leave it' situation of a black-box solution doesn't fit my needs." A great option for this person would be the "Templated Kernel and Invoker" layer, as in this example [5], where an FP16 GEMM + Add + Add + FastGeLU fused operator is instantiated by the user from a fused operator template.

- HPC algorithm expert: "My team does in-house development of a performance-critical backend for my company's constantly evolving AI models. We have HPC algorithm experts, but we still want to reuse and modify optimized source code provided by vendors to increase our productivity and get performance portability across multiple generations of hardware. And we want to do this without sharing our source code or workloads." A great option for this person would be the "Templated Tile Operator" layer, as in this code [6], where "Templated Tile Operators" are used to write a GEMM pipeline.

Fig. 3 Multiple Layers of CK library
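For readers unfamiliar with the GEMM + Add + Add + FastGeLU operator mentioned above, the following plain C++ sketch shows what it computes and how a template can accept a variable number of extra operands. It is not the CK example from [4] or [5]. The function `gemm_with_extra_operands` and the functor `AddAddFastGelu` are hypothetical, and the tanh-based GELU approximation used here is an assumption about what "FastGeLU" denotes.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Assumed element-wise arithmetic: FastGeLU(c + d0 + d1), using the common
// tanh approximation of GELU (an assumption, not taken from CK source).
struct AddAddFastGelu {
    float operator()(float c, float d0, float d1) const {
        const float x = c + d0 + d1;
        const float u = 0.7978845608f * (x + 0.044715f * x * x * x);  // sqrt(2/pi) * (...)
        return 0.5f * x * (1.0f + std::tanh(u));
    }
};

// Hypothetical fused template: E = Op(A * B, D0, D1, ...).
// A is M x K, B is K x N, every extra operand in ds is M x N (all row-major).
// The number of extra operands is deduced from the parameter pack.
template <typename Op, typename... Ds>
std::vector<float> gemm_with_extra_operands(const std::vector<float>& a,
                                            const std::vector<float>& b,
                                            std::size_t M, std::size_t N, std::size_t K,
                                            Op op, const Ds&... ds) {
    assert(a.size() == M * K && b.size() == K * N);
    std::vector<float> e(M * N);
    for (std::size_t m = 0; m < M; ++m) {
        for (std::size_t n = 0; n < N; ++n) {
            float acc = 0.0f;
            for (std::size_t k = 0; k < K; ++k) {
                acc += a[m * K + k] * b[k * N + n];
            }
            // Every extra operand is read once, at the same output coordinate.
            e[m * N + n] = op(acc, ds[m * N + n]...);
        }
    }
    return e;
}
```

A call such as `gemm_with_extra_operands(a, b, M, N, K, AddAddFastGelu{}, d0, d1)` yields the fused result in one pass. Passing a different functor with no extra operands gives a plain GEMM from the same template.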

End-to-End Inference with AITemplate + CK

Meta’s AITemplate [7] (AIT) is a unified inference system with separate acceleration backends for AMD and NVIDIA GPUs. AITemplate utilizes CK as the backend on AMD GPUs. It interacts with CK's "Templated Kernel and Invoker" layer.

The AITemplate + CK approach establishes state-of-the-art inference performance for several important models on the AMD Instinct MI250. Most of the advanced fused operators that CK supports in these models are driven by the vision of the AITemplate team, and many fused kernels are co-designed by the CK and AITemplate teams.

Here we compare end-to-end model performance on AMD Instinct™ MI250 accelerator and the alternative offerings [8]. All the benchmark models running on AMD Instinct MI250 here use AITemplate [9] + CK [10].

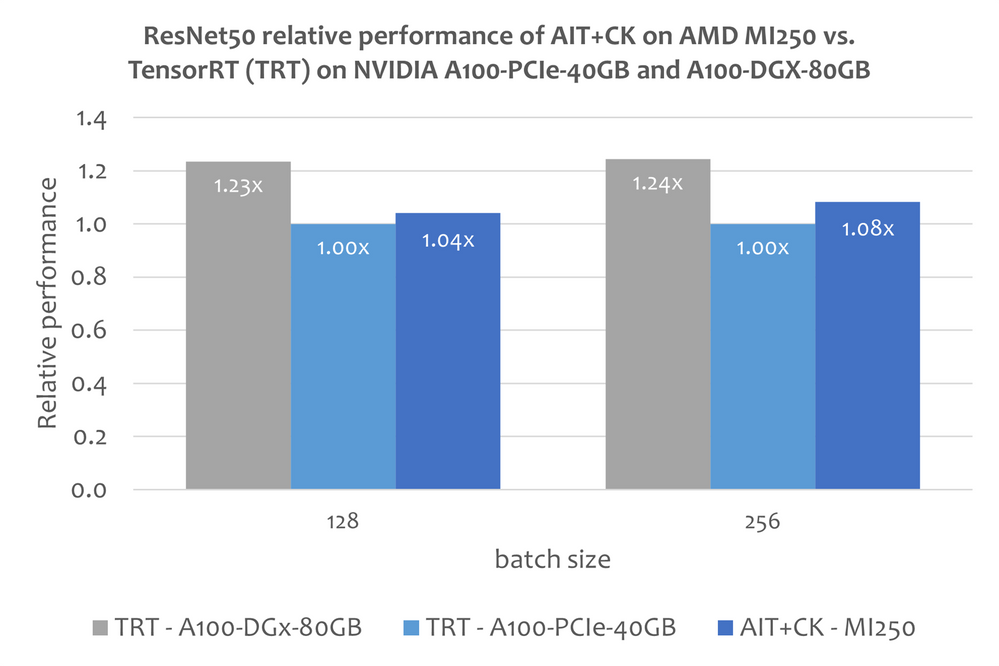

ResNet-50

The following benchmark results show ResNet-50 performance of AIT+CK on the AMD Instinct MI250 and TensorRT v8.5.0.12 [11] (TRT) on NVIDIA A100-PCIe-40GB and A100-DGX-80GB. The results show that AIT+CK on the MI250 can deliver up to 1.08x speedup over TRT on NVIDIA A100-PCIe-40GB.

BERT

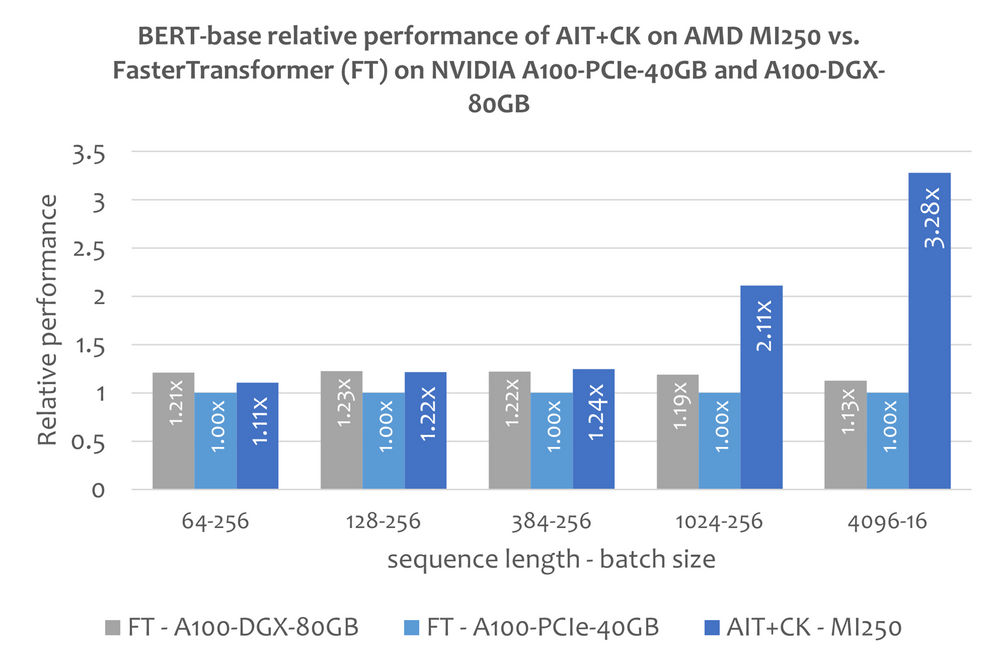

A Batched GEMM + Softmax + GEMM back-to-back fused operator template for the attention layer, implemented using CK, completely removes the data traffic between the compute units and HBM for the intermediate result. With this template, many workloads in the attention layer that used to be bandwidth-bound become compute-bound, which utilizes GPU compute much more efficiently. This algorithm is heavily inspired by FlashAttention [12], with improvements that greatly reduce memory traffic compared with the original FlashAttention.
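The sketch below illustrates why such a fusion can avoid storing the intermediate result. It is a single-head, host-side C++ example of the running-maximum ("online") softmax technique described in the FlashAttention paper, not the CK kernel and not its improved variant. All names (`attention_reference`, `attention_fused`, `max_abs_diff`) and the row-major S x D layout for Q, K, and V are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Matrix = std::vector<float>;  // row-major storage

// Reference: materializes the full S x S score matrix between the two GEMMs.
Matrix attention_reference(const Matrix& q, const Matrix& k, const Matrix& v, int S, int D) {
    const float scale = 1.0f / std::sqrt(static_cast<float>(D));
    Matrix p(static_cast<std::size_t>(S) * S), out(static_cast<std::size_t>(S) * D, 0.0f);
    for (int i = 0; i < S; ++i) {
        float row_max = -std::numeric_limits<float>::infinity();
        for (int j = 0; j < S; ++j) {
            float s = 0.0f;
            for (int d = 0; d < D; ++d) s += q[i * D + d] * k[j * D + d];
            p[i * S + j] = s * scale;
            row_max = std::max(row_max, p[i * S + j]);
        }
        float sum = 0.0f;
        for (int j = 0; j < S; ++j) {
            p[i * S + j] = std::exp(p[i * S + j] - row_max);
            sum += p[i * S + j];
        }
        for (int j = 0; j < S; ++j)
            for (int d = 0; d < D; ++d)
                out[i * D + d] += p[i * S + j] / sum * v[j * D + d];
    }
    return out;
}

// Fused: walks over key/value tiles and keeps only a running max, a running
// sum, and one output row, so no S x S intermediate is ever stored.
Matrix attention_fused(const Matrix& q, const Matrix& k, const Matrix& v,
                       int S, int D, int tile) {
    assert(tile > 0);
    const float scale = 1.0f / std::sqrt(static_cast<float>(D));
    Matrix out(static_cast<std::size_t>(S) * D, 0.0f);
    std::vector<float> scores(tile);
    for (int i = 0; i < S; ++i) {
        float running_max = -std::numeric_limits<float>::infinity();
        float running_sum = 0.0f;
        float* o = &out[static_cast<std::size_t>(i) * D];
        for (int j0 = 0; j0 < S; j0 += tile) {
            const int jn = std::min(tile, S - j0);
            // First GEMM on this tile: scores = q_i * K_tile^T.
            float new_max = running_max;
            for (int t = 0; t < jn; ++t) {
                float s = 0.0f;
                for (int d = 0; d < D; ++d) s += q[i * D + d] * k[(j0 + t) * D + d];
                scores[t] = s * scale;
                new_max = std::max(new_max, scores[t]);
            }
            // Rescale earlier partial results to the new maximum.
            const float correction = std::exp(running_max - new_max);
            running_sum *= correction;
            for (int d = 0; d < D; ++d) o[d] *= correction;
            // Softmax numerators and second GEMM on this tile.
            for (int t = 0; t < jn; ++t) {
                const float w = std::exp(scores[t] - new_max);
                running_sum += w;
                for (int d = 0; d < D; ++d) o[d] += w * v[(j0 + t) * D + d];
            }
            running_max = new_max;
        }
        for (int d = 0; d < D; ++d) o[d] /= running_sum;
    }
    return out;
}

// Helper for comparing the two results.
float max_abs_diff(const Matrix& a, const Matrix& b) {
    assert(a.size() == b.size());
    float m = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) m = std::max(m, std::fabs(a[i] - b[i]));
    return m;
}
```

For any tile size, `attention_fused` should agree with `attention_reference` up to floating-point rounding. On a GPU the per-tile scores would stay in registers or on-chip memory, which is where the HBM traffic savings come from.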

The benchmark results shown below compare BERT base model (uncased) performance of AIT+CK on the AMD Instinct MI250 GPU and FasterTransformer v5.1.1 bug fix [13] (FT) on NVIDIA A100-PCIe-40GB and A100-DGX-80GB. With a sequence length of 4096, FT runs out of memory on both the A100-PCIe-40GB and the A100-DGX-80GB at batch size 32. Therefore, for sequence length 4096, we only show results for batch size 16, which FT supports on both A100-PCIe-40GB and A100-DGX-80GB. The results show that AIT+CK on the MI250 can provide up to 3.28x speedup over FT on NVIDIA A100-PCIe-40GB and up to 2.91x speedup over FT on NVIDIA A100-DGX-80GB.

Vision Transformer (ViT)

The benchmark results below show Vision Transformer base model (image size 224x224) performance of AIT+CK on the Instinct MI250 and TensorRT v8.5.0.12 (TRT) on NVIDIA A100-PCIe-40GB and A100-DGX-80GB. The results show that AIT+CK on Instinct MI250 can provide up to 1.8x speedup over TRT on NVIDIA A100-PCIe-40GB and up to 1.4x speedup over TRT on NVIDIA A100-DGX-80GB.

Stable Diffusion

Stable Diffusion End-to-End

The following benchmark results show end-to-end Stable Diffusion performance of AIT+CK on the AMD Instinct MI250 using batch sizes 1, 2, 4, and 6. Note that for batch size 1, only a single GCD of the MI250 is used, while for batch sizes 2, 4, and 6 both GCDs are used.

| Batch size | Average latency (ms) of AIT + CK on MI250 |
|---|---|
| 1 | 2604 |
| 2 | 2604 |
| 4 | 3951 |
| 6 | 5368 |

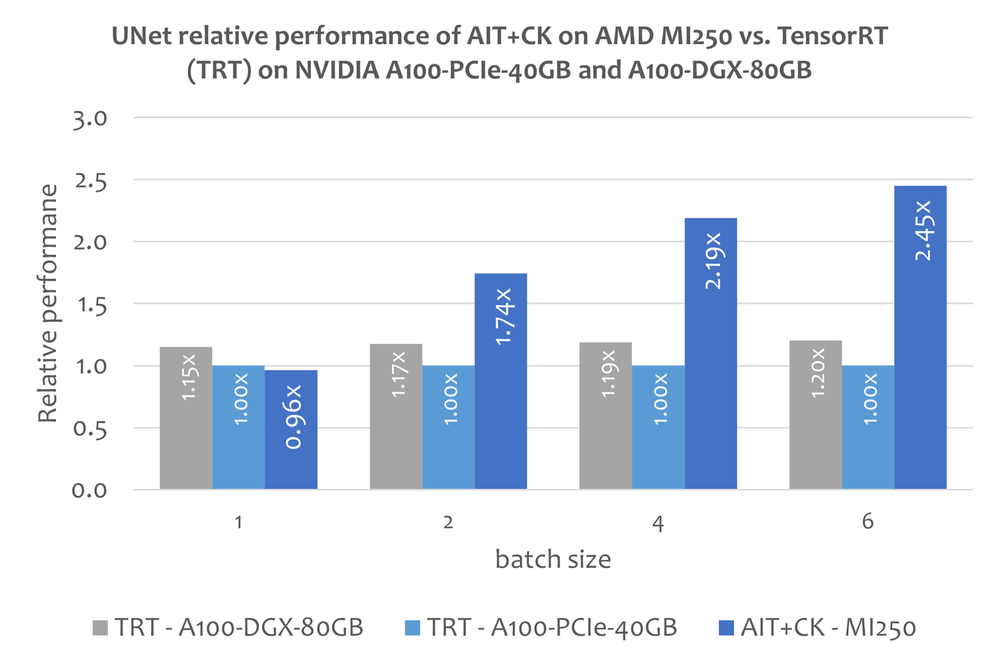

UNet in Stable Diffusion

As of this writing, there is no publicly available information on how to run Stable Diffusion end-to-end using TensorRT. However, the article "Making stable diffusion 25% faster using TensorRT" [14] shows how to use TensorRT to accelerate the UNet part of Stable Diffusion. Since UNet is the most critical and time-consuming part of Stable Diffusion, the performance of UNet largely reflects that of Stable Diffusion.

The benchmark results below show UNet performance of AITemplate + CK on the AMD Instinct MI250 and TensorRT v8.5.0.12 (TRT) on NVIDIA A100-PCIe-40GB and A100-DGX-80GB. The results show that AITemplate + CK on the AMD Instinct MI250 can deliver up to 2.45x speedup over TRT on NVIDIA A100-PCIe-40GB and up to 2.03x speedup over TRT on NVIDIA A100-DGX-80GB.

Final Thought on End-to-End Optimization Landscape

Two popular approaches for end-to-end performance optimization of AI models exist today:

- Vendor-provided proprietary software that handles everything from graph optimization to the backend as a black box. We think the black-box nature and limited customizability of this approach create an artificial boundary between the AI framework and the backend, and limit what can be optimized.

- Compiler-based approaches, which come in two forms. For application-specific AI chips without a general-purpose language, vendors build a compiler for an AI-specific backend programming language. For architectures that already have general-purpose languages, the goal is sometimes to streamline the entire process, from model-level graph optimization to last-mile kernel generation, using Intermediate Representations (IRs); other times it is to implement a Domain Specific Language (DSL) that aims to make programming the hardware simple. The challenge with these endeavors is that the need for architecture- and workload-specific HPC algorithms is not removed; it just turns into the need to write architecture- and workload-specific compiler passes. That makes it hard for most HPC algorithm developers, who play a crucial role in pushing the performance boundary of AI acceleration, to contribute.

AITemplate + CK demonstrates a third option that combines the best of the current approaches: a graph-optimizing framework identifies kernel fusion opportunities and constructs kernels using a flexible and efficient backend library. The performance results show that this approach performs well on GPUs and provides an agility that is difficult for the existing approaches to achieve. Through good library design, this approach serves the needs of AI system developers well, while also inviting HPC algorithm developers to contribute. Additionally, the general-purpose nature of the backend language means this approach is likely to adapt more quickly to new AI problems and architectural changes.

Get CK code

https://github.com/ROCmSoftwarePlatform/composable_kernel

Multiple teams at AMD have contributed to CK development, including Chao Liu, Jing Zhang, Letao Qin, Qianfeng Zhang, Liang Huang, Shaojie Wang, Anthony Chang, Chunyu Lai, Illia Silin, Adam Osewski, Poyen Chen, Rosty Geyyer, Hanwen Chang [15], Tejash Shah [15], Xiaoyan Zhou [15], Jianfeng Yan [15], Dan Yao, Guangzhao Lu, Raman Jana, Jehandad Khan, Wen-Heng (Jack) Chung, Austin Kerbow, Stanislav Mekhanoshin, Vang Thao, Jeff Byrnes, and Chaitanya Sankis.

More Information

ROCm webpage: AMD ROCm™ Open Software Platform | AMD

ROCm Information Portal: AMD Documentation - Portal

AMD Instinct Accelerators: AMD Instinct™ Accelerators | AMD

AMD Infinity Hub: AMD Infinity Hub | AMD

Endnotes:

1. Chao Liu is PMTS Software Development Engineer at AMD. Jing Zhang is SMTS Software Development Engineer at AMD. Their postings are their own opinions and may not represent AMD’s positions, strategies, or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied. GD-5

2. CK for CPU is in early development phase.

3. C++ APIs for now, Python APIs are under planning.

4. Example of CK "Client API" for GEMM + Add + Add + FastGeLU fused operator. https://github.com/ROCmSoftwarePlatform/composable_kernel/blob/685860c2a9483c9e909d2f8bfb95056672491...

5. Example of CK "Templated Kernel and Invoker" of GEMM + Add + Add + FastGeLU fused operator. https://github.com/ROCmSoftwarePlatform/composable_kernel/blob/685860c2a9483c9e909d2f8bfb95056672491...

6. Example of using CK "Templated Tile Operator" primitives to write a GEMM pipeline. https://github.com/ROCmSoftwarePlatform/composable_kernel/blob/685860c2a9483c9e909d2f8bfb95056672491...

7. Meta’s AITemplate GitHub repository. https://github.com/facebookincubator/AITemplate

8. MI200-71: Testing Conducted by AMD MLSE 10.23.22 using AITemplate (https://github.com/ROCmSoftwarePlatform/AITemplate, commit f940d9b) + Composable Kernel (https://github.com/ROCmSoftwarePlatform/composable_kernel, commit 40942b9) with ROCm™ 5.3 running on 2x AMD EPYC 7713 64-Core Processor server with 4x AMD Instinct MI250 OAM (128 GB HBM2e) 560W GPU with AMD Infinity Fabric™ technology vs. TensorRT v8.5.0.12 and FasterTransformer (v5.1.1 bug fix) with CUDA® 11.8 running on 2x AMD EPYC 7742 64-Core Processor server with 4x NVIDIA A100-PCIe-40GB (250W) GPU and TensorRT v8.5.0.12 and FasterTransformer (v5.1.1 bug fix) with CUDA® 11.8 running on 2x AMD EPYC 7742 64-Core Processor server with 8x NVIDIA A100 SXM 80GB (400W) GPU. Server manufacturers may vary configurations, yielding different results. Performance may vary based on factors including use of latest drivers and optimizations.

9. https://github.com/ROCmSoftwarePlatform/AITemplate/tree/f940d9b7ac8b976fba127e2c269dc5b368f30e4e

10. https://github.com/ROCmSoftwarePlatform/composable_kernel/tree/40942b909801dd721769834fc61ad201b5795...

11. TensorRT GitHub repository. https://github.com/NVIDIA/TensorRT

12. FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness. https://arxiv.org/abs/2205.14135

13. FasterTransformer GitHub repository. https://github.com/NVIDIA/FasterTransformer

14. Making stable diffusion 25% faster using TensorRT. https://www.photoroom.com/tech/stable-diffusion-25-percent-faster-and-save-seconds/

15. During their time in AMD
