A Discussion of Terms: From Artificial Intelligence to Generative AI

Artificial intelligence has enjoyed a meteoric rise in popularity, from science fiction to real-world use within just a few years. But how does AI work, behind the buzzwords? If you are confused about how terms like “artificial intelligence,” “deep learning,” and “neural net” relate to each other or want to better understand what sets generative AI apart from other types of AI, you’ve come to the right place.

I’ll start by explaining what terms like "AI", "machine learning," “neural net” and "deep learning" mean at a high level and work my way down. All these different words are connected to each other, in a hierarchical relationship, as shown here:

Figure 1: Image via Wikipedia, modified by Joel Hruska, CC BY-SA 4.0 DEED.

Broadly speaking, artificial intelligence is the use of models or algorithms to solve a problem in a way that echoes how intelligent beings might solve it. These models and algorithms are then integrated into software applications to make the insights they extract readily accessible to end users. Some, but not all, implementations of artificial intelligence involve machine learning. Machine learning is a subfield of artificial intelligence concerned with building logical or mathematical models that can do inductive reasoning: drawing general conclusions from the specific data the model has seen.

Similarly, some (but not all) AIs have a specialized type of logical structure called a neural net, designed to resemble the networked information flow between neurons in the brain. Some particularly sophisticated neural nets can perform deep learning (I’ll get into what that means in a few paragraphs), and some neural nets capable of deep learning can use what they have learned to generate passages of text, works of art, and even video. The relatively recent ability of deep learning neural nets to create these kinds of works has given rise to the name we use for this class of software: Generative AI.

Now that I’ve sketched out the overview, let’s talk more about each subfield.

Machine Learning:

Machine learning, neural networks, and deep learning have all become popular in recent years because they each offer performance advantages in specific scenarios, especially compared to other, earlier types of AI. While another AI might be able to accomplish the same task, each type of AI has its own strengths, and fits into its own niche.

One such strength is the capacity to learn, often in response to patterns in the data. Artificial intelligence does not necessarily involve learning; even a powerful AI may not be designed with the capacity to adjust its own model on the fly. For example, one of the most famous early examples of AI was the IBM Deep Blue supercomputer that made history by defeating Garry Kasparov in 1997. Deep Blue could not adjust its own gameplay based on its performance against Kasparov. Instead, a team of experts tweaked the supercomputer’s strategy between games.

Machine learning models often require human intervention, in the form of sanitizing, labeling, and structuring data, to guide training along a specific path. Models that need this kind of guidance perform supervised learning. However, some models are designed to do unsupervised learning: to discern patterns in a dataset and then report what they find without the need for human intervention or data labeling. There is also semi-supervised learning, which blends the two approaches.
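To make the distinction concrete, here is a minimal sketch in plain Python. The data, the labels "small" and "large", and the function names are all invented for illustration, and the two techniques shown (a nearest-mean classifier and a two-cluster k-means) are just simple stand-ins for the much larger models used in practice. The supervised function reads the human-provided labels; the unsupervised one never sees them.

```python
# Toy 1-D data: hypothetical measurements, invented for illustration.
labeled = [(1.0, "small"), (1.2, "small"), (0.8, "small"),
           (5.0, "large"), (5.3, "large"), (4.7, "large")]


def train_supervised(examples):
    """Supervised: use human-provided labels to compute one mean per class."""
    groups = {}
    for value, label in examples:
        groups.setdefault(label, []).append(value)
    return {label: sum(vals) / len(vals) for label, vals in groups.items()}


def predict(centroids, value):
    """Assign the label whose class mean is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def cluster_unsupervised(values, rounds=10):
    """Unsupervised: 2-means clustering; no labels are ever consulted."""
    centers = [min(values), max(values)]  # simple deterministic start
    clusters = [[], []]
    for _ in range(rounds):
        clusters = [[], []]
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if empty).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return clusters


centroids = train_supervised(labeled)
print(predict(centroids, 1.1))          # label for a new, unseen value

unlabeled = [value for value, _ in labeled]
print(cluster_unsupervised(unlabeled))  # two groups found without labels
```

Both approaches end up separating the same two groups here, but only the supervised model can name them, because only it was given names to learn from.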

Neural Nets:

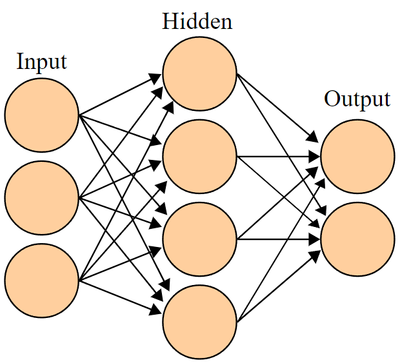

Figure 2: Image by Wikipedia user CBurnett, CC-BY-SA 3.0 DEED

Just as machine learning is one method of implementing AI, an artificial neural network (often abbreviated to "neural net") is one method of implementing machine learning. A neural net is a computing structure designed to resemble the neurons that make up the brain (typically the human brain) and nervous system. Neural networks can be implemented in software, hardware, or a combination of the two. Why mimic the brain? Because brains can perform a huge number of diverse tasks in parallel, within a power envelope smaller than that of the average notebook computer.

Neural networks consist of a set of inputs, at least one hidden layer where input is processed, and then an output layer. Nodes in the network are often referred to as neurons. For the purpose of this example, we'll assume our neural net is feed forward, meaning it only sends information in one direction: toward the output layer. There are also more sophisticated designs, known as recurrent neural networks, that can feed information back to earlier layers. This is distinct from backpropagation, which is a training technique: error measured at the output is passed backward through the network to adjust each neuron's weights, and it is commonly used to train feed-forward nets as well.
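The one-directional flow of a feed-forward net can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not a trained model: the weights and biases below are arbitrary made-up numbers, the sigmoid activation is one common choice among many, and the function names are hypothetical.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each neuron: weighted sum of its inputs plus a bias, then sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
            for neuron_weights, b in zip(weights, biases)]


# Hypothetical, untrained weights: 3 inputs -> 2 hidden neurons -> 1 output.
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]


def feed_forward(inputs):
    """Data moves strictly input -> hidden -> output, never backward."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)


result = feed_forward([1.0, 0.0, -1.0])
print(result)  # a list holding the single output neuron's activation
```

Training would consist of adjusting the numbers in `hidden_weights`, `hidden_biases`, `output_weights`, and `output_biases` until the output matches known examples; the forward pass itself stays exactly as shown.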

Deep Learning:

Neural nets with more than one hidden layer can be capable of deep learning, where multiple layers of processing can enable progressively higher-level output.

The diagram below shows one example of what a deep learning neural net can look like. Data moves through this neural net from the input layer at far left (the big end) to the output layer, a single neuron at the far right. Different configurations of input, hidden, and output layers will result in different network topologies.

Deep neural nets use larger data sets and rely on higher-end hardware to train models in a reasonable period of time. Adding more layers to the underlying AI model allows the machine to perform more sophisticated tasks, like training itself on data sets that have not been parsed and structured by humans first. Deep learning models can create more sophisticated outputs than simpler machine learning methods, but they also take longer to train.
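One rough way to see why deeper topologies demand more data and hardware is to count the adjustable numbers (weights and biases) a network contains. The sketch below assumes a fully connected feed-forward net, where every neuron connects to every neuron in the next layer; the layer sizes are hypothetical and far smaller than anything used in practice.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feed-forward net.

    layer_sizes lists the neurons per layer, input first, output last.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between layers
        total += n_out         # one bias per receiving neuron
    return total


shallow = [8, 4, 1]      # 8 inputs, one hidden layer, 1 output neuron
deep = [8, 6, 4, 2, 1]   # same inputs and output, three hidden layers

print(count_parameters(shallow))  # 41
print(count_parameters(deep))     # 95
```

Every one of those parameters has to be tuned during training, so parameter count is a useful first approximation of how much work training involves, though it is not the only factor.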

Generative AI:

Deep learning can be used in many different ways, but one rising-star application of deep learning is so-called generative AI. A generative AI model is a model that can take user input as a prompt and generate new output, possibly in an entirely different format, style, or tone than the initial input.
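The prompt-in, new-output-out loop can be illustrated with a deliberately tiny stand-in. To be clear, the sketch below is not a neural net and not how any production generative AI works: it is a simple word-pair (bigram) frequency model, chosen only because it fits in a few lines. The training sentence and function names are invented for illustration. What it shares with real generative models is the basic loop of learning from example text and then extending a prompt one piece at a time.

```python
from collections import Counter, defaultdict

# Tiny invented training text; real models train on vastly more data.
corpus = "the cat sat on the mat and the cat sat on the rug"


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    output = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


model = train_bigrams(corpus)
print(generate(model, "the"))  # the cat sat on the cat
```

Always picking the single most frequent next word makes the output repetitive; real systems typically sample among likely continuations and condition on far more context than one word.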

There are a number of generative AI models in use today, including ChatGPT and GPT-4 (both developed by OpenAI), Llama 2 (Meta), and Prometheus (Microsoft). Many such generative AIs are powered by large language models: very large deep learning models that are trained on huge datasets and are notable for their ability to parse natural language, both in user input and in training data. The collective goal of these new products and services is to create bots and assistants far more capable than anything that has previously existed.

Generative AI hasn’t become a hot topic in technology solely because tech companies are looking for the Next Big Thing. Despite AI’s recent popularity, the basic conceptual principles of neural nets and deep learning were worked out a long time ago. Over the last decade, data storage costs, compute efficiency, and raw performance have all improved to the point that deep learning and generative AI became practical to deploy at commercial scale.

From high-end Radeon™ RX 7000 GPUs to the integrated, on-die AI acceleration engines in select Ryzen™ Mobile 7040, Ryzen Mobile 8040, and Ryzen 8000 desktop CPUs, AMD is a leader in this nascent market. Integrating artificial intelligence processing on-die, supporting it via specialized instructions on the CPU, and building graphics processors that excel in these workloads are all components of a larger Ryzen AI initiative, with the goal of supporting one of the most interesting technological debuts of the past 40 years.

While the specific goals of any given generative AI service or hardware manufacturer will vary, I’d argue that much of the computer industry’s interest in the topic is driven by genuine excitement. Throughout the 20th and 21st centuries, science-fiction authors have envisioned computers and androids that could understand and respond to people far more adroitly than computers of their own eras. Deep learning and generative AI may not singlehandedly bridge the gap between science fiction and science fact, but they offer an enormous improvement over previous AI approaches. Both may prove vital in the ongoing effort to build artificial intelligences that solve problems less like conventionally programmed computers and a bit more like us.

Joel Hruska is a marketing manager for AMD. His posts are his own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied. GD-5

© 2024 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, Radeon, Ryzen, and combinations thereof are trademarks of Advanced Micro Devices, Inc. Other product names used in this publication are for identification purposes only and may be trademarks of their respective owners.
