On-Chip AI Integration is the Future of PC Computing


In January 2023, AMD unveiled select Ryzen™ 7040 Mobile Series processors with an AMD integrated Ryzen AI engine¹. This dedicated engine is built using the AMD XDNA™ architecture and is designed to offer lower latency, better battery life, and a secure environment for running AI workloads compared to sending data to the cloud. The launch of Ryzen AI was a first for x86 processors and an investment in the future of computing, but why integrate an AI engine in the first place?

There has been an explosion of interest in AI over the past year as businesses and the general public have seen what ChatGPT, Stable Diffusion, and other generative AIs can do. AI deployment is in its infancy, but the field is maturing rapidly as companies like Microsoft, Google, and Adobe enter the space.

The computing industry has seen workloads move from research labs and supercomputing settings to personal computing devices before. The inclusion of the floating-point unit (FPU) in consumer CPUs and the advent of affordable consumer 3D graphics cards are both examples of this type of migration. Historically, integration of these new technologies has led to periods of high growth, often in unexpected ways.

Before CPU manufacturers began adding on-die floating point co-processors in the early 1990s, games like Doom were lauded for their use of fixed-point math. The wider availability of FPUs made the leap to fully 3D games like Quake possible just a few years later by driving significantly better visual quality and higher frame rates.
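For readers unfamiliar with fixed-point math, the sketch below shows the basic idea: fractional values are stored as scaled integers so that all arithmetic can run on integer hardware. The 16.16 layout (16 integer bits, 16 fractional bits) and the helper names are assumptions chosen for illustration, not code taken from any particular game.

```python
# Minimal fixed-point sketch. FRAC_BITS and all function names are
# hypothetical, chosen only to illustrate the technique.
FRAC_BITS = 16
ONE = 1 << FRAC_BITS  # the fixed-point representation of 1.0


def to_fixed(x: float) -> int:
    """Convert a float to a scaled integer."""
    return int(round(x * ONE))


def to_float(f: int) -> float:
    """Convert a scaled integer back to a float."""
    return f / ONE


def fixed_mul(a: int, b: int) -> int:
    """Multiply two fixed-point values using only integer operations.

    The raw product carries 2 * FRAC_BITS fractional bits, so it is
    shifted right to return to the original scale.
    """
    return (a * b) >> FRAC_BITS


product = fixed_mul(to_fixed(1.5), to_fixed(2.25))
print(to_float(product))
```

The trade-off is range and precision: a fixed layout cannot represent very large and very small values at the same time, which is one reason hardware floating point opened the door to more demanding 3D workloads.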

The same step forward brought the productivity benefits of sophisticated parametric CAD applications from $50,000 workstations down to $5,000 PCs, democratizing the technology by making it affordable to more companies. Over time, the addition of AVX SIMD instructions in CPUs dramatically increased floating-point compute performance, enabling the ray-traced rendering and images that we are now used to seeing in Hollywood movies.

Another example of how technologies have driven growth in unexpected ways is the introduction of the first consumer 3D graphics cards. These devices found immediate application in drawing shaded and textured triangles to display 3D objects. As the gaming industry drove increased performance demands on these early graphics cards, device makers began to add limited programmability, and the cards eventually evolved into the general-purpose SIMD compute engines that they are today. These same GPU engines added high-precision 64-bit floating-point capabilities and were adopted for simulation and scientific analysis applications, before the recent shift to AI models that operate well with lower-precision datatypes.

In all these instances, both consumer and commercial, new use cases emerged as adoption rose and the accelerators became more capable. AI is poised to make a similar leap. Select Ryzen 7040 Series processors are designed to support the processing needs of early adopters who prize the additional compute capability and unique features of a dedicated AI engine, but who also require support for software optimized for more conventional processing.

The Right Tool for the Right Task

Ryzen AI includes the ability to run AI workloads on a number of different compute engines in the processor. The best path for execution depends on the type of task, software support, model optimization, and the relative capability of each compute engine in the system. Ryzen 7040 Mobile Series processors are unique in their class because they combine leadership CPU, GPU, and AMD XDNA architecture-based computing capabilities. This provides developers and end-users unprecedented flexibility in workload execution, even as AI workloads evolve over time.

CPU vs. AMD XDNA Architecture Execution

Ryzen 7040 processors feature “Zen 4” based CPU cores, which include support for the AVX-512 instruction set architecture (ISA) extension. This specialized instruction set allows the CPUs to execute AI workloads much more quickly in applications that support it. CPUs are not typically used for training an AI model, but they can perform well in certain inference workloads. While the “Zen 4” architecture offers some unique capabilities for processing AI workloads, there are still architectural advantages to the AMD XDNA AI engine.

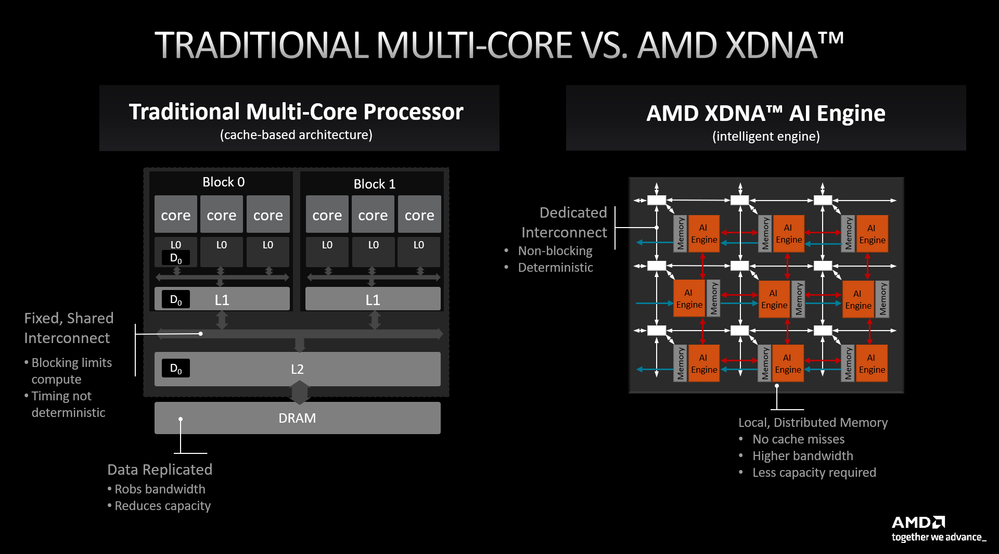

The left-hand side of the image above shows a conventional multi-core CPU with a mixture of individual and shared caches as well as the CPU's link to main memory. The right side shows a block diagram of the AMD XDNA AI Engine and its fundamentally different memory hierarchy.

Traditional CPUs rely on a mixture of private and shared caches to reduce memory access latency and improve performance. Communication between CPU cores is handled by shared interconnects or via shared caches. This arrangement works well when executing the types of workloads CPUs excel at, but it isn't the best solution for an AI engine. AI engines run optimally when they can deterministically schedule memory operations, but a typical CPU's memory latency varies depending on whether information is found within a cache or if the chip must retrieve it from main memory.

GPU vs. AMD XDNA Architecture Execution

More recently, GPUs have been the compute engine of choice for executing AI workloads due to their programmable shader architecture, high degree of parallelism, and efficient floating point compute capabilities. Ryzen 7040 Series processors also include an AMD RDNA™3-based graphics processor, which offers another powerful engine to execute AI workloads.

In many AI workloads, GPUs can offer higher performance than CPUs can deliver. However, even GPUs may have limitations which make them less optimized than dedicated hardware for AI processing. They often contain hardware blocks vital for 3D rendering that aren't used when running AI code, making them potentially less efficient than a dedicated accelerator. Additionally, GPUs emphasize executing operations across hundreds or thousands of cores. They use their own sophisticated memory architectures (not shown above) and hide cache misses by leveraging the intrinsic parallelism of graphics workloads. These capabilities are critical in graphics rendering, but they don't necessarily enhance the performance of an AI processor.

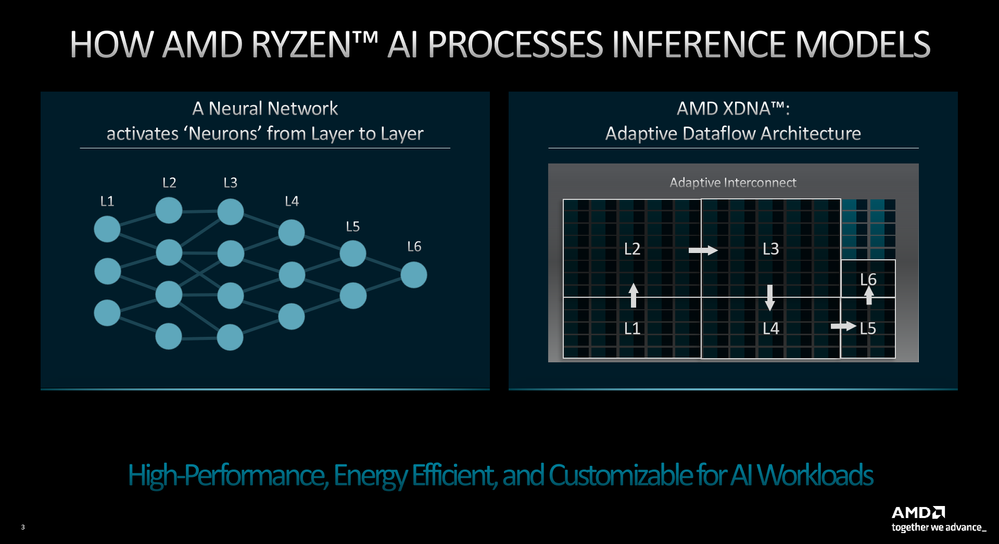

The image above shows an archetypal neural network on the left and the AMD XDNA adaptive dataflow architecture at the heart of the AMD Ryzen AI engine on the right. The connections running from L1 to L6 simulate the way neurons are connected in the human brain. The Ryzen AI engine is flexible and can allocate resources differently depending on the underlying characteristics of the workload, but the example above works as a proof-of-concept.

Imagine a workload in which each neural layer performs a matrix multiply or convolution operation against incoming data before passing the new values to the next neuron(s) down the line. The AMD XDNA architecture is a dataflow architecture, designed to move data from compute array to compute array without the need for large, power-hungry, and expensive caches. One of the goals of a dataflow architecture is to avoid unexpected latency caused by cache misses by not needing a cache in the first place. This type of design emphasizes high performance without incurring latency penalties while fetching data from a CPU-style cache. It also avoids the increased power consumption associated with large caches.
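The layer-to-layer flow described above can be sketched conceptually in software. The example below chains Python generators so that each layer consumes an input, applies a small matrix multiply, and hands the result directly to the next stage. It is only an analogy for the dataflow idea: the function names, the ReLU activation, and the pure-Python matrix math are all assumptions for illustration, and this is not how the AMD XDNA architecture is actually programmed.

```python
# Conceptual dataflow sketch. All names here are hypothetical; this
# illustrates streaming data between stages, not real AI engine code.
from typing import Iterable, Iterator, List

Vector = List[float]
Matrix = List[List[float]]


def layer(weights: Matrix, inputs: Iterable[Vector]) -> Iterator[Vector]:
    """One pipeline stage: matrix-vector multiply, then ReLU.

    Each result is yielded as soon as it is computed, so the next
    stage can start working without an intermediate shared buffer.
    """
    for x in inputs:
        y = [sum(w * v for w, v in zip(row, x)) for row in weights]
        yield [max(0.0, e) for e in y]


def run_pipeline(layers: List[Matrix], inputs: Iterable[Vector]) -> List[Vector]:
    """Connect the stages so data streams from one layer to the next."""
    stream: Iterator[Vector] = iter(inputs)
    for weights in layers:
        stream = layer(weights, stream)
    return list(stream)


layers = [
    [[1.0, 0.0], [0.0, 1.0]],  # first layer: 2x2 identity weights
    [[1.0, 1.0]],              # second layer: sums the two inputs
]
print(run_pipeline(layers, [[1.0, 2.0], [-1.0, 3.0]]))
```

In this model every value moves directly from the stage that produced it to the stage that consumes it, which mirrors the goal of passing data from compute array to compute array rather than through a shared cache.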

Advantages of Executing AI on the AMD XDNA Architecture

High-performance CPU and GPU technologies are important pillars of AMD's long-term AI strategy, but they aren't as transformative as integration of an AI engine on-die could be. Today, AI engines are already being used to offload certain processing tasks from the CPU and GPU. Tasks like background blur, facial detection, and noise cancellation can all be performed more efficiently on a dedicated AI engine, freeing CPU and GPU cycles for other work and improving power efficiency at the same time.

Integrating AI into the APU has several advantages. First, it tends to reduce latency and increase performance compared to attaching a device via the PCIe® bus. When an AI engine is integrated into the chip it can also benefit from shared access to memory and greater efficiency through more optimal data movement. Finally, integrating silicon on-die makes it easier to apply advanced power management techniques to the new processor block.

An external AI engine attached via a PCI Express® slot or an M.2 slot is certainly possible, but integrating this capability into our most advanced “Zen 4” and AMD RDNA™ 3 silicon was a better way to make it available to customers without sacrificing the advantages above. Applications that leverage this local processor can benefit from the faster response times and more consistent performance it enables.

It's an exciting time in AI development. Today, customers, corporations, and manufacturers are evaluating AI at every level and power envelope. The only certainty in this evolving space is that if we could look ahead 5-7 years, we wouldn't "just" see models that do a better job at the same tasks that ChatGPT, Stable Diffusion, or Midjourney perform today. There will be models and applications for AI that nobody has even thought of yet. The AI performance improvements AMD has integrated into select Ryzen 7040 Mobile Series processors give developers and end-users the flexibility and support they need to experiment, evaluate, and ultimately make that future happen.

1. Ryzen™ AI technology is compatible with all AMD Ryzen 7040 Series processors except the Ryzen 5 7540U and Ryzen 3 7440U. OEM enablement is required. Please check with your system manufacturer for feature availability prior to purchase. GD-220.

Use or mention of third-party marks, logos, products, services, or solutions herein is for informational purposes only and no endorsement by AMD is intended or implied. GD-83.