This article was originally published on January 19, 2022.

Editor’s Note: This content is contributed by Bingqing Guo, Sr. Product Marketing Manager for Vitis AI.

Vitis AI 2.0 is now available! As the most comprehensive software-based AI acceleration solution for Xilinx FPGAs and adaptive SoCs, Vitis AI continues to bring value and competitiveness to users’ AI products. With this release, the Vitis AI solution is easier to use and delivers additional performance improvements at the edge and in the data center. This webinar introduces the new product features, including models, software tools, deep learning processing units, and the latest performance information.

Key features for Vitis AI 2.0 release

- Ease-of-use breakthrough with CPU ops to support wider model coverage

- Additional state-of-the-art AI models in the AI Model Zoo for sensor fusion, video analytics, super-resolution, and sentiment estimation applications in CNN and NLP

- Enhanced DPU scalability and IP features on edge devices and cloud cards

To get a glimpse of all the new features, check out the full release notes.

Breakthrough in Ease-of-Use

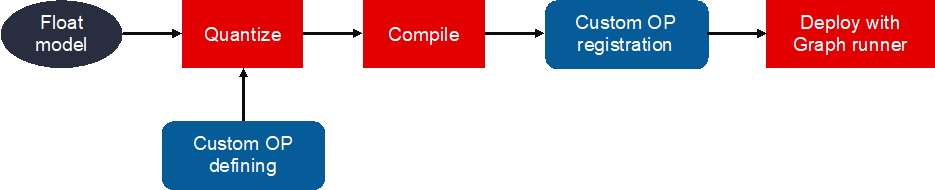

Users familiar with Vitis AI know that the tools and IP sometimes encounter unsupported network layers, causing deployment failures. Previously, layers not yet supported by the Vitis AI tools and DPU IP had to be assigned to the CPU one by one, and users had to handle the data exchange between the DPU and CPU manually. This process significantly degraded the user experience.

In Vitis AI 2.0, the custom OP flow provides an easier way to deploy models that contain OPs the DPU does not support: users define those OPs in the quantization flow, then register and implement them before deploying with Graph Runner. This way, users can deploy complete models without errors in the process.
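The register-then-implement idea can be illustrated with a minimal, framework-agnostic Python sketch. It does not use the Vitis AI API: `CUSTOM_OPS`, `register_op`, `run_custom_op`, and the `leaky_relu` example are all hypothetical names chosen for illustration, and the actual custom OP interface is described in the Vitis AI documentation.

```python
from typing import Callable, Dict, List

# Hypothetical registry: maps an op type name to a CPU implementation.
# This is an illustration of the concept, not the Vitis AI custom OP API.
CUSTOM_OPS: Dict[str, Callable[[List[float]], List[float]]] = {}


def register_op(op_type: str):
    """Return a decorator that registers a function under `op_type`."""
    def decorator(fn):
        CUSTOM_OPS[op_type] = fn
        return fn
    return decorator


@register_op("leaky_relu")
def leaky_relu(values: List[float], slope: float = 0.1) -> List[float]:
    """CPU implementation of an op assumed to be unsupported on the accelerator."""
    return [v if v >= 0 else slope * v for v in values]


def run_custom_op(op_type: str, values: List[float]) -> List[float]:
    """Look up the registered implementation and run it on the CPU."""
    if op_type not in CUSTOM_OPS:
        raise KeyError(f"no CPU implementation registered for op '{op_type}'")
    return CUSTOM_OPS[op_type](values)


result = run_custom_op("leaky_relu", [1.0, -2.0])
```

Failing early when an op has no registered implementation mirrors the goal stated above: problems surface at registration time rather than as a deployment error.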

WeGO TensorFlow Inference Flow

Another key ease-of-use breakthrough in this release is the WeGO (Whole Graph Optimizer) flow, which enables inference directly from TensorFlow by integrating the Vitis AI development kit into the framework. In Vitis AI 2.0, WeGO is available for the TensorFlow 1.x framework and for inference on cloud DPUs.

WeGO automatically performs subgraph partitioning for models quantized by the Vitis AI quantizer and applies optimizations and accelerations to the cloud DPU subgraphs. The parts of the graph that the DPU does not support are dispatched to TensorFlow for CPU execution. The whole process is completely transparent to users, which takes ease of use to the next level with native in-framework support for all layers and better end-to-end performance on cloud DPUs.
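As a rough illustration of what subgraph partitioning means, the sketch below groups a linear, topologically ordered list of ops into contiguous DPU and CPU segments. It is a simplified assumption, not the WeGO implementation: real graphs are not linear chains, and the `DPU_SUPPORTED` set, the op names, and the `partition` function are hypothetical.

```python
from itertools import groupby
from typing import List, Tuple

# Hypothetical set of op types the accelerator can run.
# Illustrative only; this is not the actual DPU support list.
DPU_SUPPORTED = {"conv2d", "relu", "maxpool"}


def partition(ops: List[str]) -> List[Tuple[str, List[str]]]:
    """Group an ordered op list into contiguous per-device segments."""
    def device(op: str) -> str:
        return "DPU" if op in DPU_SUPPORTED else "CPU"

    # groupby merges only adjacent items with the same key, so each
    # segment is a run of consecutive ops bound for the same device.
    return [(dev, list(group)) for dev, group in groupby(ops, key=device)]


model = ["conv2d", "relu", "custom_nms", "conv2d", "softmax"]
segments = partition(model)
for dev, segment in segments:
    print(dev, segment)
```

Each boundary between segments implies a data transfer between devices, which is why handling this automatically matters for both usability and end-to-end performance.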

The AI Model Zoo has been one of the most popular components of the Vitis AI stack. It provides free, open, retrainable, and optimized models that cover many vision scenarios. In the Vitis AI 2.0 release, the number of free models has been expanded to 130 across PyTorch, TensorFlow, TensorFlow 2, and Caffe.

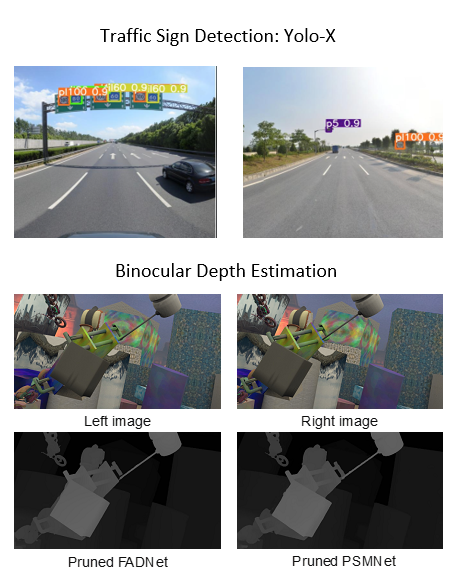

Newly added models include SOLO, Yolo-X, UltraFast, CLOCs, SESR, DRUNet, SSR, FADNet, PSMNet, and FairMOT. They can be used for traffic detection, Lidar-image sensor fusion, medical image processing, 2D and 3D depth estimation, and re-identification. The release also adds NLP models for sentiment detection, customer satisfaction, and open information extraction. In addition to these trained models, the new release contains OFA-searched models that deliver better model accuracy and performance on hardware.

For more AI model information, as well as the deployed performance on the hardware officially supported by Vitis AI, see the AI Model Zoo on GitHub.

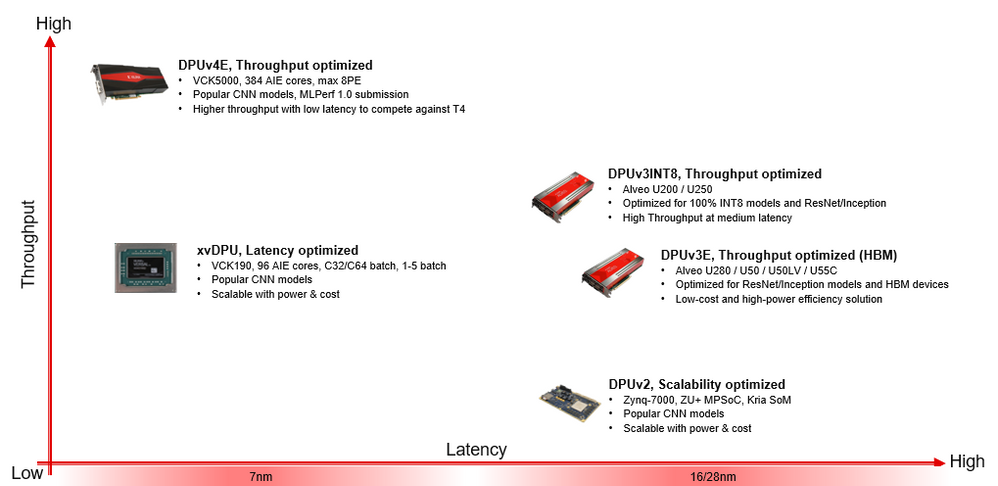

DPU Scalability and New Hardware Platforms

Vitis AI 2.0 adds support for the VCK190 and VCK5000 production boards and for a new hardware platform, the Alveo U55C. Vitis AI now fully supports all the major devices and accelerator cards, including Zynq UltraScale+ MPSoC, Versal ACAP, and Alveo cards, from embedded to data center.

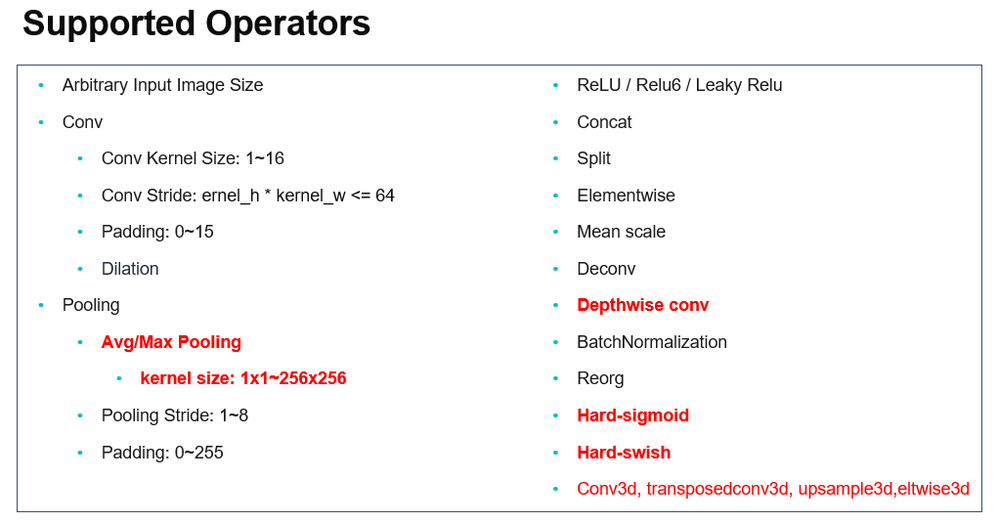

The DPU IPs on both edge and cloud platforms have been upgraded to enable more features, such as Conv3D, Depthwise Conv, h-sigmoid, h-swish, and more. The Versal edge DPU supports C32 and C64 modes with batch sizes from 1 to 5, which enables even more flexible DPU integration with custom applications.

See PG338 and PG389 for more IP information.

In addition to the new features above, Xilinx has improved the functionality and performance of the Vitis AI toolchain. The AI Quantizer and Compiler both support custom OPs and have been upgraded to support newer versions of PyTorch (v1.8-1.9) and TensorFlow (v2.4-2.6). The AI Optimizer adds a new pruning algorithm, OFA. The AI Library, VART, AI Profiler, and WAA have all been improved to support the new models and the CPU OP implementation.

Log in to Xilinx.com and get started! Download the latest Docker image and see what Vitis AI 2.0 can do.