High Performance Linpack (HPL) is a portable implementation of the Linpack benchmark that is used to measure a system's floating-point computing power. The HPL benchmark solves a (random) dense linear system in double-precision (64-bit) arithmetic on distributed-memory computers, measuring the floating-point execution rate of the underlying hardware.
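To make the measurement concrete, here is a minimal single-process sketch of the idea in pure Python: build a random dense system, solve it with Gaussian elimination and partial pivoting, and divide a nominal operation count by the elapsed time. It is not HPL or rocHPL code. The function names are hypothetical, and the operation count of 2/3·N³ + 3/2·N² is the figure commonly credited for an LU-based solve; treat the lower-order term as an assumption.

```python
import random
import time


def hpl_flop_count(n):
    """Nominal operation count for an LU-based solve of an n x n system (assumed formula)."""
    return (2.0 / 3.0) * n**3 + (3.0 / 2.0) * n**2


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting, working on copies."""
    n = len(a)
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: move the largest remaining entry of column k onto the diagonal.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k from every row below the pivot.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i] - sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / a[i][i]
    return x


def run_benchmark(n, seed=0):
    """Time one solve of a random n x n system; return (GFLOP/s, max-norm residual)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    # Verify the answer: the largest entry of |a x - b| should be near rounding error.
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return hpl_flop_count(n) / elapsed / 1e9, residual
```

Calling `run_benchmark(200)` returns a rate and a residual for a 200 x 200 system. A real HPL run differs in scale and in almost every implementation detail (blocked algorithms, distributed memory, tuned BLAS), but the reported number has the same shape: nominal operations divided by wall-clock time, accepted only if the residual check passes.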

In a complex HPC system, no single computational task can accurately reflect overall performance. To provide a standard set of computational operations for measuring an HPC system's capabilities, the HPL benchmark has become an industry standard and has been widely adopted by leading supercomputing centers.

HPL is often one of the first programs run on large computer installations to produce a result that can be submitted to TOP500. It serves as the reference benchmark for the TOP500 list, which ranks the system against supercomputers worldwide.

The June 2022 TOP500 list showcased the Frontier supercomputer at Oak Ridge National Laboratory, which uses AMD Instinct™ accelerators and AMD EPYC™ processors, reaching the historic milestone of exceeding 1 exaflop of performance on HPL. AMD is now open sourcing the rocHPL code branch used in the exascale run on Frontier, giving the industry access to rocHPL code that runs on a broad range of AMD Instinct accelerator-powered platforms. Together with the previously open sourced rocHPCG code, rocHPL continues AMD's commitment to the open-source philosophy. AMD is also the only HPC vendor to have open sourced both its HPL and HPCG codes rather than releasing only binaries.

rocHPL and rocHPCG are benchmark ports based on the HPL and HPCG benchmark applications, implemented on top of the AMD ROCm™ platform, runtime, and toolchains. Both are written in the HIP programming language and optimized for the latest AMD Instinct™ GPUs. HIP (Heterogeneous-computing Interface for Portability) is an open programming paradigm that not only runs on AMD GPUs but is also portable to other vendors' GPUs and CPUs.

AMD rocHPL is an innovative implementation that highlights the unique advantages of a system powered by 3rd Gen AMD EPYC processors and AMD Instinct MI250 accelerators. rocHPL performs the panel factorization on the CPUs, while performing most of the compute work on the Instinct accelerators. The unique system design, which unifies these EPYC processors and Instinct accelerators over the cache-coherent AMD Infinity Fabric™ interconnect, is what enabled Frontier to attain the performance necessary to achieve exascale.
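The division of labor between panel factorization and the bulk of the compute work can be illustrated with a blocked, right-looking LU factorization. The sketch below is a single-process, pure-Python illustration under stated assumptions, not rocHPL's implementation: the function names and the block size `nb` are hypothetical, nothing runs on a GPU, and the comments only mark which step would stay on the CPU and which would be offloaded in a split of this kind.

```python
def factor_panel(a, k, nb):
    """Unblocked LU with partial pivoting on columns k .. k+nb-1 (the panel).

    This narrow, pivot-search-heavy step is the one that would stay on the CPU.
    Row swaps are applied to whole rows so the rest of the matrix stays consistent.
    """
    n = len(a)
    end = min(k + nb, n)
    pivots = []
    for j in range(k, end):
        p = max(range(j, n), key=lambda i: abs(a[i][j]))
        if a[p][j] == 0.0:
            raise ValueError("matrix is singular")
        a[j], a[p] = a[p], a[j]
        pivots.append(p)
        for i in range(j + 1, n):
            a[i][j] /= a[j][j]          # multiplier, stored in place as part of L
            for c in range(j + 1, end):  # update only the remaining panel columns
                a[i][c] -= a[i][j] * a[j][c]
    return pivots


def update_trailing(a, k, nb):
    """Apply a finished panel to every column to its right.

    This is where nearly all of the floating-point work sits (a triangular solve
    followed by a matrix multiply), so it is the step that would be offloaded.
    """
    n = len(a)
    end = min(k + nb, n)
    # Triangular solve: finish the block row of U using the panel's unit-lower L.
    for j in range(k, end):
        for i in range(j + 1, end):
            for c in range(end, n):
                a[i][c] -= a[i][j] * a[j][c]
    # Matrix multiply: subtract L21 * U12 from the trailing submatrix.
    for i in range(end, n):
        for j in range(k, end):
            lij = a[i][j]
            for c in range(end, n):
                a[i][c] -= lij * a[j][c]


def blocked_lu(a, nb):
    """Return (lu, perm): L and U packed in one matrix, and the row order used.

    Row i of the permuted matrix is row perm[i] of the input.
    """
    n = len(a)
    lu = [row[:] for row in a]
    perm = list(range(n))
    for k in range(0, n, nb):
        for offset, p in enumerate(factor_panel(lu, k, nb)):
            j = k + offset
            perm[j], perm[p] = perm[p], perm[j]
        update_trailing(lu, k, nb)
    return lu, perm
```

For an N x N matrix with a small block size, the panel step touches only a thin strip of columns per iteration, while the trailing update touches everything to the right of it. That imbalance is why a design can keep the panel on the host and still spend most of its time on the accelerators.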

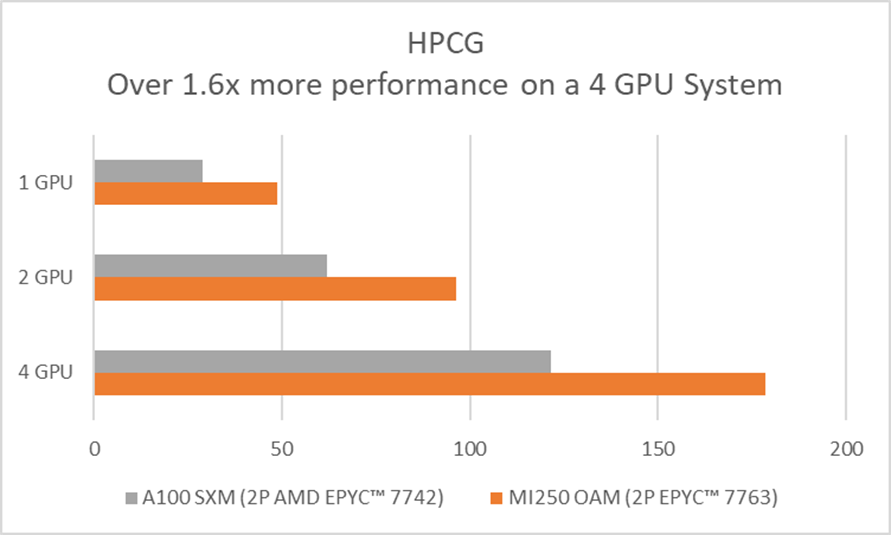

The plots show a performance advantage of over 2.8X on rocHPL and over 1.6X on rocHPCG for four AMD Instinct MI250 accelerators running the ROCm software stack, compared with A100 GPUs from another GPU vendor.

Figure 1: HPL Performance on AMD Instinct™ MI250 accelerators.

Figure 2: HPCG Performance on AMD Instinct™ MI250 accelerators.

The open source code is available at the links below.

rocHPL: https://github.com/ROCmSoftwarePlatform/rocHPL

rocHPCG: https://github.com/ROCmSoftwarePlatform/rocHPCG

The containers for HPL and HPCG are available at AMD Infinity Hub along with instructions.

Making the ROCm platform even easier to adopt

For ROCm users and developers, AMD is continually looking for ways to make ROCm easier to use, easier to deploy on systems and to provide learning tools and technical documents to support those efforts.

Helpful Resources:

- Learn more about our latest AMD Instinct accelerators, including the new Instinct MI210 PCIe® form factor GPU recently added to the family of AMD Instinct MI200 series of accelerators and supporting partner server solutions in our AMD Instinct Server Solutions Catalog.

- The ROCm web pages provide an overview of the platform and what it includes, along with markets and workloads it supports.

- ROCm Information Portal is a new one-stop portal for users and developers that posts the latest versions of ROCm along with API and support documentation. This portal also now hosts the ROCm Learning Center to help introduce the ROCm platform to new users, as well as to provide existing users with curated videos, webinars, labs, and tutorials to help in developing and deploying systems on the platform. It replaces the former documentation and learning sites.

- AMD Infinity Hub gives you access to HPC applications and ML frameworks packaged as containers and ready to run. You can also access the ROCm Application Catalog, which includes an up-to-date listing of ROCm enabled applications.

- AMD Accelerator Cloud offers remote access to test code and applications in the cloud, on the latest AMD Instinct™ accelerators and ROCm software.

Bryce Mackin is in the AMD Instinct™ GPU product Marketing Group for AMD. His postings are his own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

Endnotes:

- Testing Conducted by AMD performance lab 8.22.2022 using HPL comparing two systems. 2P EPYC™ 7763 powered server, SMT disabled, with 1x, 2x, and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, ROCm 5.1.3 rocHPL: internal AMD repository: http://gitlab1.amd.com/nchalmer/rocHPL branch bugfix/public-hostmem, rev 92bbf94 plus AMD optimizations to HPL that are not yet available upstream. Vs. 2P AMD EPYC™ 7742 server with 1x, 2x, and 4x Nvidia Ampere A100 80GB SXM 400W GPUs, CUDA 11.6 and Driver Version 510.47.03. HPL Container (nvcr.io/nvidia/hpc-benchmarks:21.4-hpl) obtained from https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-69

- MI200-70: Testing Conducted by AMD performance lab 8.22.2022 using HPCG 3.0 comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, with 1x, 2x, and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, ROCm 5.0.0.50000-49, HPCG 3.0 Container: docker pull amdih/rochpcg:3.1.0_97 at https://www.amd.com/en/technologies/infinity-hub/hpcg vs. 2P AMD EPYC™ 7742 server with 1x, 2x, and 4x Nvidia Ampere A100 80GB SXM 400W GPUs, CUDA 11.6. HPCG 3.0 Container: nvcr.io/nvidia/hpc-benchmarks:21.4-hpcg at https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-70