AMD to Add ROCm Support on Select RDNA™ 3 GPUs this Fall

AI is the defining technology shaping the next generation of computing. In recent months, we have all seen how the explosion in generative AI and LLMs is revolutionizing the way we interact with technology and driving significantly more demand for high-performance computing in the data center, with GPUs at the center.

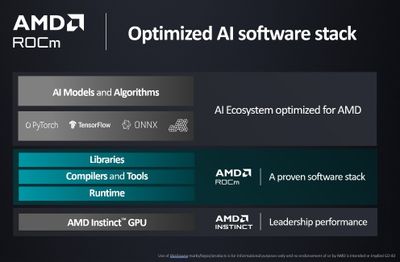

ROCm is an open software platform allowing researchers to tap the power of AMD Instinct accelerators, promoting portability of HPC and AI innovation across platforms. ROCm 5 features a comprehensive suite of optimizations for AI and HPC workloads. These include fine-tuned kernels for large language models, support for new data types, and support for new technologies like the OpenAI Triton programming language.

ROCm validation now includes hundreds of thousands of framework tests nightly, and validation across thousands of models and operators. This includes support for leading frameworks like PyTorch, TensorFlow, ONNX, and JAX, enabling an optimal out-of-the-box developer experience for all the AI models that have been built on these frameworks.

Today, I am excited to announce the latest release of ROCm 5.6.

ROCm 5.6 has enhanced capabilities with new AI software add-ons for large language (and other) models, including many performance optimizations across the ROCm portfolio of libraries. It also reflects continuous improvements supporting the AI community, including:

- integration of the Hugging Face unit test suite into ROCm QA

- incremental support for OpenAI Triton in PyTorch 2.0 inductor mode

- support for the broader community to enable OpenXLA support through ROCm for PyTorch, TensorFlow and JAX
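For readers who want to try the PyTorch items above, here is a minimal sketch of how one might check whether the installed PyTorch is a ROCm build and opt a function in to inductor mode. The helper names `rocm_build_info` and `compile_with_inductor` are hypothetical and used only for illustration. The sketch assumes PyTorch 2.0 or later for `torch.compile`. It relies on ROCm builds of PyTorch reporting a HIP version in `torch.version.hip`, which is `None` on other builds.

```python
"""Sketch: detect a ROCm build of PyTorch and opt in to inductor mode."""


def rocm_build_info():
    """Describe the installed PyTorch build (hypothetical helper).

    Returns a dict with three keys. 'hip_version' is a string on ROCm
    builds of PyTorch and None otherwise.
    """
    try:
        import torch
    except ImportError:
        # PyTorch is not installed at all.
        return {"torch_installed": False, "hip_version": None, "gpu_available": False}
    return {
        "torch_installed": True,
        "hip_version": getattr(torch.version, "hip", None),
        # ROCm builds expose AMD GPUs through the torch.cuda namespace.
        "gpu_available": torch.cuda.is_available(),
    }


def compile_with_inductor(fn):
    """Wrap fn with torch.compile using the inductor backend.

    Assumes PyTorch 2.0 or later (hypothetical helper).
    """
    import torch

    return torch.compile(fn, backend="inductor")


if __name__ == "__main__":
    info = rocm_build_info()
    print(info)
    if info["hip_version"] and info["gpu_available"]:
        import torch

        # Compile a small function and run it on the GPU.
        fused = compile_with_inductor(lambda x: torch.sin(x) + torch.cos(x))
        print(fused(torch.randn(8, device="cuda")))
```

On a machine without a ROCm build or a supported GPU, the script only prints the build information and skips the compile step.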

ROCm 5.6 also introduces improvements to several math libraries, such as FFT, BLAS, and solvers, that form the basis for HPC applications. It also brings enhancements to ROCm development and deployment tools, including installation, ROCgdb (the CPU-GPU integrated debugger), the ROCm profiler, and documentation.

As the industry moves towards an open ecosystem that supports a broad set of accelerators, we will continue to optimize frameworks and backend compilers for performance. This work includes MLIR infrastructure improvements, which underpin AMD support for the OpenAI Triton and OpenXLA compilers. We will also continue to add open-source AI models optimized for AMD solutions to the AMD hub on Hugging Face.

For our HPC users, we recently published a number of recipes at AMD Infinity Hub to enable customers to build HPC application containers. Future ROCm releases will add to the number of HPC applications supported on AMD Instinct solutions.

We’ve also seen tremendous interest from developers wanting to run the ROCm open software platform for AI and ML on our Radeon™ consumer and Radeon™ Pro workstation GPUs, and we have heard about the challenges the community has faced with specific driver issues on unsupported GPUs. I’m pleased to say that we have fixed the reported issues in ROCm 5.6, and we are committed to expanding our support going forward.

We plan to expand ROCm support from the currently supported AMD RDNA 2 workstation GPUs, the Radeon PRO V620 and W6800, to select AMD RDNA 3 workstation and consumer GPUs. Formal support for RDNA 3-based GPUs on Linux is planned to begin rolling out this fall, starting with the 48GB Radeon PRO W7900 and the 24GB Radeon RX 7900 XTX, with additional cards and expanded capabilities to be released over time.

For more information about ROCm 5.6, please visit: https://www.amd.com/en/graphics/servers-solutions-rocm