x570 raid in bios is hardware based or software?

I have someone trying to convince me that all motherboard RAID solutions are software, even though I bought a KR7A-Raid 133 about 20 years ago and RAID was a feature/selling point. I've ordered a UPS so I can enable write caching. Is this inherently inferior to a NAS from Synology or QNAP? TBH I pretty much don't care either way (my 32GB of Patriot Viper 4400 B-die + 3900X can handle this), but I want to know one way or the other.

I set my array up in the BIOS and installed directly to the array; I had issues with installing to a non-RAID disk. I have installed RAIDXpert2, but right now it is just a monitoring program.

Hardware RAID died long ago. Today NAS storage servers all use SATA/SAS port expanders to connect upwards of 24 hard disks in a standard 3U chassis.

Linux has ZFS which can span disks into a volume with fault tolerance. Windows has Storage Spaces.

So in general today, all redundancy is software based.
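To make "software based redundancy" a bit more concrete, here is a minimal sketch of the XOR parity idea behind RAID 5 style fault tolerance, where the host CPU does the work. It is not how ZFS or Storage Spaces are actually implemented; the function names and the three-disk stripe are made up purely for illustration.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recover the single missing data block from the survivors plus parity."""
    return xor_blocks(surviving_blocks + [parity])


# Hypothetical stripe: one block per data disk (illustrative data only).
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)  # computed by the CPU on every write

# Simulate losing the second disk and rebuilding its block from the rest.
survivors = [data[0], data[2]]
recovered = rebuild_missing(survivors, parity)
print(recovered == data[1])
```

A dedicated RAID controller does the same kind of calculation on its own processor instead of the host CPU.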

If you have a specific NAS appliance in mind, post a link to the unit and I can provide some advice.

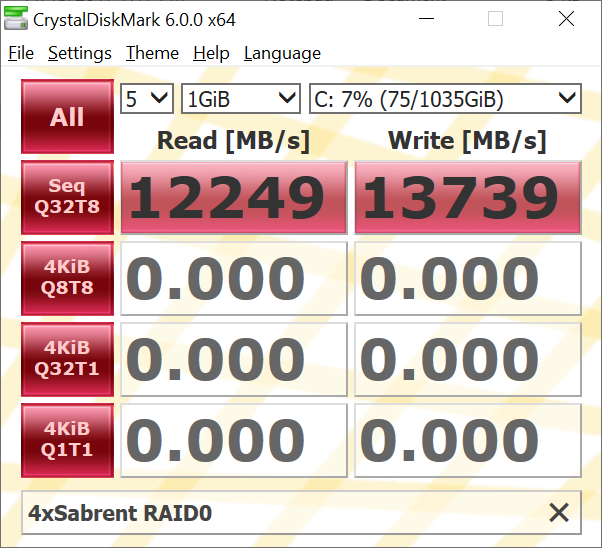

sgtsixpack, I do not have a clue what hardcoregames™ is talking about, I do not know what you want to do and I do not know what KR7A-Raid 133 is. What I do know is that I have two hardware RAIDs (0 and 5) currently running in my 3970X - RAIDs running in Ryzen SOC (Hardware). I will post my RAID0 boot disk performance. If you will tell us what you want to do, I will try to make an intelligent recommendation. Please post all your parts. Be sure you have the latest drivers for AMD not your MB vendor. Thanks and enjoy, John.

EDIT:

First off, the board supports raid, but the implementation is software, not hardware based. It's more of a feature support than dedicated hardware.

It really boils down to what level of raid you are going to run, and how fast you need to rebuild if things fail. For raid 0 or 1 there really is no need for a hardware raid. For most home NAS users a software raid is adequate and much cheaper than going with a hardware raid.

While software raids have replaced many traditional raid setups that once really needed hardware controllers, hardware raids are far from dead. In fact, in data centers hardware is still very much king. When things go south, the bandwidth and CPU power a software raid has to work with pale in comparison to what a hardware raid can do. Many hardware controllers can cause a bit of a slow bootup if they are set to check the raid at startup. Also, a hardware raid controller from a couple of years back may be slower than what comes on your board and is controlled by software today.

Honestly, I am not totally sure what you are asking. The raid you mentioned is an 18-year-old on-motherboard solution that is long dead. If you were mentioning it by comparison, it would be more akin to a software raid today than a hardware raid. If you are a home user then I would not fear a software raid, and it will certainly be cheaper. One of the caveats of software raid, however, is that I have seen many times that if a motherboard fails and you can't get the same board, often the raid will not be recognized by a new, different board.

Raids are often risky, so one might even question whether you really need a raid at all or just a good regular backup. With the size of some single hard drives today and the size and speed of SSDs, many have no need for raid at this point.

Not many consumers are going to spend the money for an enterprise grade SAN appliance.

True, that's why I already made that point.

I have long used RAID cards so I have lots of experience with them. I started with SCSI cards for 16-bit ISA slots before moving to PCI. SCSI cards were favored in the old days as they could handle 7 hard disks. The SCSI spec changed fast before SATA surfaced and changed everything.

SATA could almost be called a meld of the two technologies, IDE and SCSI. The big thing with SCSI, beyond supporting raid arrays that nothing else would, was its speed. More importantly, SCSI drives were the enterprise class drives of their day and they would last forever. Non-SCSI drives back then had high failure rates. You paid a high price for SCSI though. SCSI was also the only option initially for removable media such as optical disk and tape drives. In fact you still see plenty of SCSI hard drives in older proprietary systems going strong today. Interesting too is that many proprietary systems used to use SCSI in a daisy chain configuration for networking. It was far faster than any other option at the time, which was the early 90s. It was very common in graphics intensive environments.

SCSI was anything but backwards compatible. At least the low cost IDE was scaled without changes, whereas SCSI elected to change cables and connectors repeatedly.

I was into digital video long before it became common. The need for frame accurate tape decks was obvious. SDI cards are still expensive to this day.

Hard disks lagged tape for a long time and as an example miniDV tapes are about 13GB per hour. D5 are about 44GB per hour.

Back when hard disks were 100MB, you can see the problem. This is what gave birth to RAID. Now that hard disks and SSD products are so gargantuan, it's not needed.
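As a rough worked example of that gap, using only the figures quoted above (13GB and 44GB per hour of tape, 100MB per disk) and assuming 1GB = 1000MB, this is how many disks one hour of footage would have needed. The function name is just for illustration.

```python
import math

DISK_MB = 100  # hard disk size quoted above
TAPE_GB_PER_HOUR = {"miniDV": 13, "D5": 44}  # figures quoted above


def disks_needed(gb, disk_mb=DISK_MB):
    """Number of disks needed to hold `gb` gigabytes (1 GB = 1000 MB assumed)."""
    return math.ceil(gb * 1000 / disk_mb)


for fmt, gb in TAPE_GB_PER_HOUR.items():
    print(f"{fmt}: {disks_needed(gb)} disks per hour of footage")
```

That works out to 130 disks for an hour of miniDV and 440 for an hour of D5, before any redundancy.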

I never had that issue. In fact I don't think I ever had a problem that a readily available adapter would not fix. Honestly though anyone paying for expensive SCSI interfaces, didn't really fret over having to buy a cable anyway. When it came to removable media IDE products they had their fair share of proprietary cables too. I will tell you if you have old cables and adapters there is a market for that old stuff. Don't just trash it.

pokester wrote:

I never had that issue. In fact I don't think I ever had a problem that a readily available adapter would not fix. Honestly though anyone paying for expensive SCSI interfaces, didn't really fret over having to buy a cable anyway. When it came to removable media IDE products they had their fair share of proprietary cables too. I will tell you if you have old cables and adapters there is a market for that old stuff. Don't just trash it.

You should see my junk box. Hardware that is obsolete but it cost a mint when it was new.

Yah, I have had the same. I held onto a bunch of old Syquest and Zip disks for years because the thought of what I paid for them made me want to cry when it came to trashing them. I have gotten better about not holding onto as much junk though.

Only thing I have divested of is old crap cases. My old Cooler Master HAF 932 has stood up as the best of them all so far.

I have a 9700pro (actually, I just went to check the box and I think that might have gone to the charity shop). I did the same with an Epox motherboard, since I cut the screws to attach the stock retention mechanism and never sourced more. I currently have a 280x which was used for mining, but I used it with a Kraken G10 and a significant memory underclock. The only reason I never shifted it is that I never flashed the stock BIOS back, which would be better since the one it has is +0.5v GPU IIRC. I'm sitting on a lot of spare hard drive capacity too right now.

This is my thread where I got the idea of software vs hardware raid from:

[SOLVED] Raid 10 stripe size & Performance bottoming out in HD tune - Spiceworks

Currently I'm sitting next to a UPS worth £350 new that I got from eBay, waiting for additional cables. It looks like I bought from an asset recovery firm, but there was no way of knowing that from the seller's eBay profile.

With my torrent client set to use 128MB of virtual cache (they said never go above 32MB in the wiki), I was seeing about 60MB/s greater read speed than a single drive alone is capable of (280MB/s) on checking a particularly large torrent. Raid10 is perfect in this use case: my 100MB/s download speed is the bottleneck, not writing to the array, which should be 2x a single drive's performance (theoretically roughly 400MB/s for large files).
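A back-of-envelope sketch of that bottleneck reasoning, assuming a four-drive RAID 10 (two mirrored pairs, striped) and a single-drive sequential speed of 200MB/s, which is simply half of the ~400MB/s figure above. Real arrays will come in under this idealised number, and the function name is only illustrative.

```python
def raid10_write_mbps(single_drive_mbps, drives=4):
    """Idealised sequential write speed: one stripe member per mirrored pair."""
    if drives < 4 or drives % 2:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    return single_drive_mbps * (drives // 2)


SINGLE_DRIVE_MBPS = 200  # assumed: half of the ~400MB/s figure quoted above
DOWNLOAD_MBPS = 100      # download speed quoted above

array_write = raid10_write_mbps(SINGLE_DRIVE_MBPS)
bottleneck = "download" if DOWNLOAD_MBPS < array_write else "array"
print(f"array write ~{array_write}MB/s, bottleneck: {bottleneck}")
```

With these assumed numbers the array has roughly four times the write headroom the download needs.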

All cache disabled (even onboard cache of single disk array SSDs):

Use Macrium Reflect and the SSDs go offline. I successfully cloned when booted from a clone (it must have worked before too), but on hitting restart I got a BSOD anyway.

I am into computer chess and I use an old laptop as a distribution source. The machine supports mSATA so I use a small one for the operating system and a hard disk for the primary storage.

EGTB are retrograde analysis for up to 6 pieces in the end game (chess programs are notoriously weak in the end game). EGTB are perfect endgame analysis with every position calculated by brute force.

EGTB is 1.23TB with PAR2 error recovery to deal with flaky hard disks etc.
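For anyone unfamiliar with retrograde analysis, here is a minimal sketch of the idea on a toy game rather than chess: a pile of counters, each player removes 1, 2 or 3, and whoever cannot move loses. It starts from the finished position and works backwards, which is the same principle a tablebase generator applies to chess endings. The game, names and pile size are all made up for illustration.

```python
from collections import deque

MOVES = (1, 2, 3)  # toy rule: remove 1, 2 or 3 counters
MAX_PILE = 12      # size of the toy position space


def build_tablebase(max_pile=MAX_PILE):
    """Return {pile: (result, plies)} for the side to move, solved backwards."""
    table = {0: ("loss", 0)}  # no counters left: side to move has lost
    # Number of legal moves from each pile not yet shown to lose.
    unresolved = {p: sum(1 for m in MOVES if m <= p) for p in range(1, max_pile + 1)}
    queue = deque([0])
    while queue:
        pos = queue.popleft()
        result, plies = table[pos]
        for m in MOVES:  # predecessors: piles that can move to pos
            prev = pos + m
            if prev > max_pile or prev in table:
                continue
            if result == "loss":  # moving into a lost position wins
                table[prev] = ("win", plies + 1)
                queue.append(prev)
            else:
                unresolved[prev] -= 1  # one more move shown to lose
                if unresolved[prev] == 0:  # every move hands the opponent a win
                    table[prev] = ("loss", plies + 1)
                    queue.append(prev)
    return table


table = build_tablebase()
for pile in sorted(table):
    print(pile, *table[pile])
```

Every position ends up with an exact result and distance to the end, which is why lookups are perfect and why the tables get so large once the position space is chess-sized.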

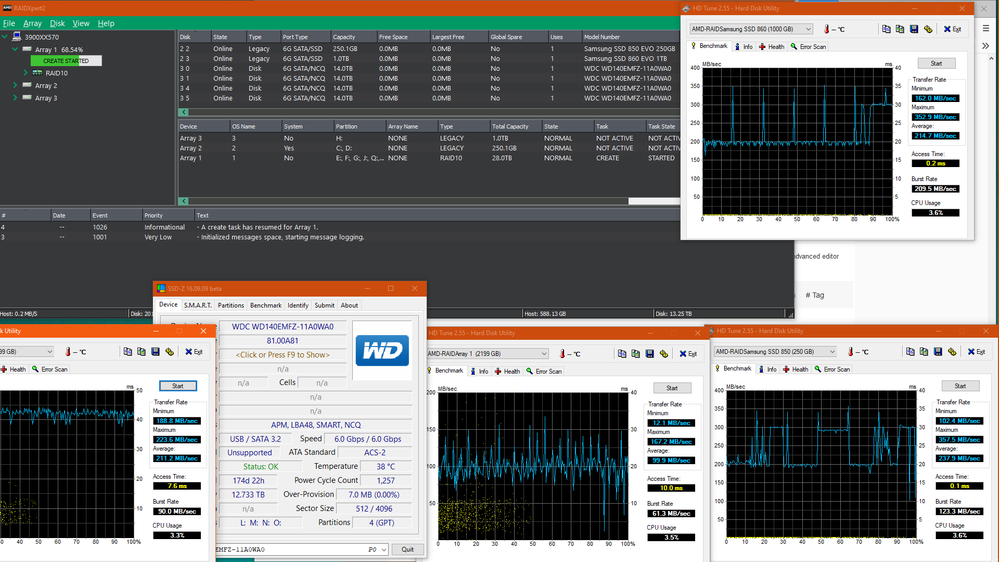

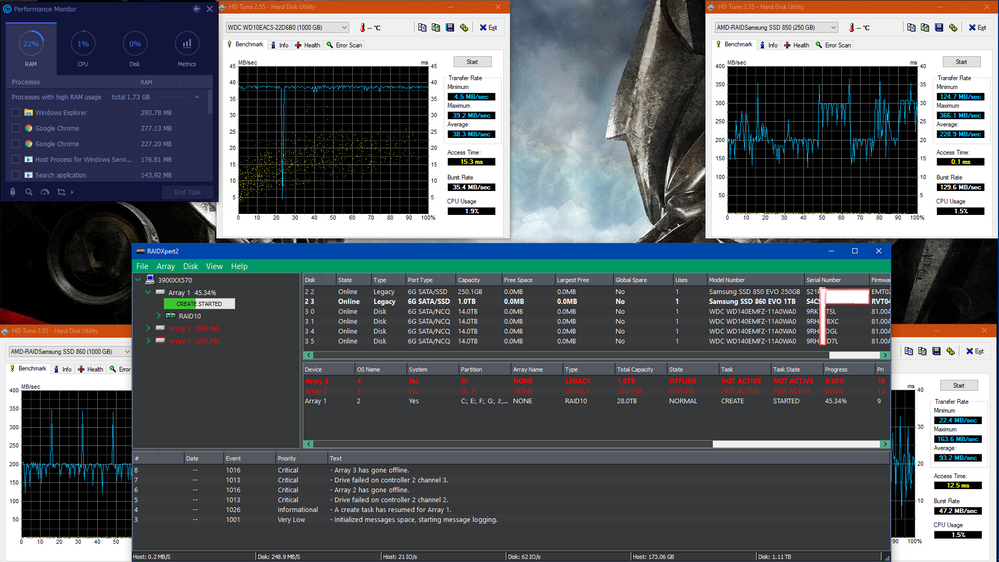

I've played my Uncle Barry at chess, although it's not really my bag. Have you any idea what the RAIDXpert2 software is doing when it says "create started"? It has progressed from 65% at the time of the screenshot to 75%, but when idle I see no disk activity.

I would like it if AMD fixed their RAID driver so it worked with hard drive utilities. Surely they cannot be unaware of its incompatibilities? Or does it take RAID 10 or 28TB to make the programs fail? What Martin of HWiNFO thinks about AMD's driver:

Only CrystalDiskInfo and RAIDXpert2 don't have any issues. I have not tried using Paragon Hard Disk Manager yet to compact and defragment my MFT (that's what I bought it for). At least one MFT on my array has 4 fragments, as seen with UltraDefrag. Come to think of it, I've not had any issues with UltraDefrag yet, but it's not as nimble as Smart Defrag (which also works flawlessly) or as precise as Defraggler.

As for the rest of your post, that was pretty confusing (EGTB = Endgame Tablebases):

Nalimov Tablebases - Chessprogramming wiki

I didn't read all of that but I get the gist.

I use JBOD in my rig. Over time smaller disks have been yanked in favor of larger ones. Over time 1GB disks became 2GB... and now 8 TB.

My Cooler Master HAF 932 has 5 hard disk bays. I only have 4 disks installed however at present.

As for EGTB, work on that goes back to Ken Thompson with the Belle project in the 1970s. Nalimov used Edwards' EGTB and extended it to 6 pieces.

Retrograde analysis of chess is much older than most realise. It goes back before computers.

I have a 100% charged UPS and 3 additional cables. I'm just about to set it up, but the USB cable for auto-shutdown is in the post. I already have a USB cable I bought before seeing the UPS, but it has two type A ends and an A to B is needed (I bought an A to B adapter too). I have downloaded the auto-shutdown software, but I am waiting on a support ticket about which firmware (of 2) I need. I tested the UPS by unplugging it and it gave a beep every 5 seconds until I plugged it back in. I set it to 230v output because the box of my Corsair HX 850 claims higher efficiency at 230v vs 110v (it switches between the two on the fly, without a manual switch).

I'll plug it in but won't enable the write cache until I get the type A to B USB cable.

Windows is tolerant of not needing a UPS. I use a Corsair HX1000i which is very efficient.

UPS systems are not readily available at local suppliers. Shipping costs are steep for a heavy unit.