- AMD Community

- PC Graphics

RX 6800 XT Low GPU Usage

GPU: Sapphire Pulse RX 6800 XT (fresh from the store)

CPU: Ryzen 7 3800X

RAM: 16GB 2666 MHz DDR4

Motherboard: ASRock B550AM Gaming

PSU: Seasonic 1000W

I'm playing at 1080p and getting very low performance out of this card; I got more FPS with my old RX 5700 XT.

My GPU usage jumps around a LOT, mostly between 10% and 60% when playing games. I understand it's not always supposed to be at full usage, but I'm not getting high FPS at all. In Skyrim I'm getting 30 FPS in cities, and in Assassin's Creed Origins I'm getting 40 FPS. Lowering graphics settings does literally nothing. I'm even getting stutters in Minecraft and truck simulators. People playing at 4K get more FPS than me.

Nothing is overheating: GPU temps never really exceed 70°C, and CPU temps never exceed 70°C either. The CPU is not bottlenecking anything, as its usage never exceeds 60%.

I already set my PC's displays to High Performance, overclocked my GPU in AMD Software, and I'm running my RAM with XMP in dual channel. I've tested every possible GPU driver, and I've messed around in the BIOS but couldn't find any GPU settings. I've triple-checked all the PSU cabling and everything is connected properly, but no matter what, I can't get my GPU usage to be consistent.

When I run the Kombustor stress test, everything's perfect: GPU usage holds a consistent 99-100%. In some games it only reaches 99% in menu screens, not in actual gameplay. In others it reaches 99% when I Alt+Tab out of the game, but drops again when I go back in.

I ran the UserBenchmark test: my CPU is performing above expectations, the card is performing WAY above expectations, and the SSD and HDD are working normally. But every time I run it, the RAM performs way below its potential, and it warns me to enable XMP and use dual channel, even though I already do.

Could this be a problem with the RAM? Or did I waste $1300 on a faulty card?

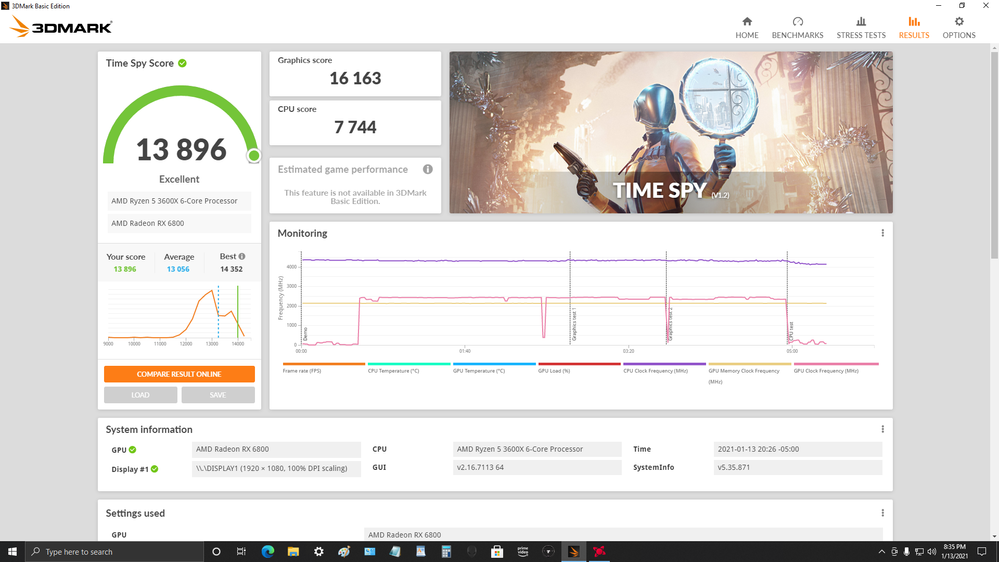

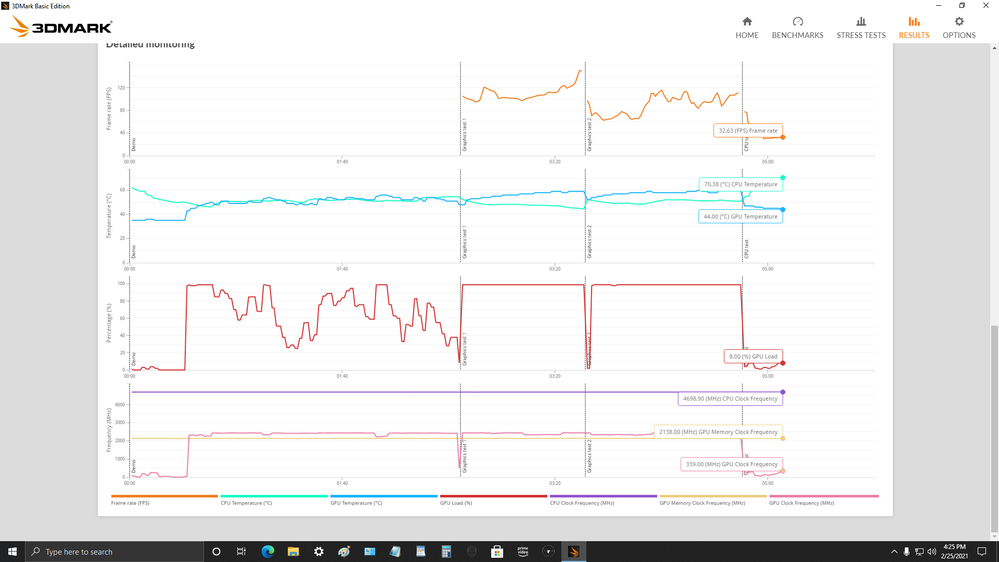

Try running a memory test, or just upgrade that RAM to 3600 MHz. 2666 MHz is kind of low; it will do the job, but if you're going to spend all that on the GPU/CPU combo, why skimp on RAM? That speed can cause a CPU bottleneck now that you have a high-end card. Also, every game you mentioned is CPU intensive; the 3800X itself isn't so much the problem. You said you OC'd the card: did you undervolt it and slide the minimum clock to within 100 MHz of the maximum clock? Set the VRAM to "Fast" and slide the power limit to max as well. Doing all that might help, but that RAM is holding you back. Games should be on the SSD as well (always, these days). And yes, the FPS difference between 2666 and 3600 is huge.

These are my results using the RX 6800, so don't copy the settings exactly, but you'll get the idea. I use 16GB DDR4 3600 CL16. Notice how my usage line is fairly flat through the test. One final point: people who play those games found that moving to a 5600X or 5900X improved their performance by tons. You're saying you run at 1080p, so the RAM should fix you up. At 1440p, the RAM plus a move to a 5000 series CPU will fix you up.

OK, I'll look into buying faster RAM; I'm also getting a 2K monitor at the same time. I did all that performance tuning, but sadly it didn't change much. Another thing I forgot to mention: this was a prebuilt PC that came with that slow memory. I only changed the GPU and PSU.

Yes, the "AM" on the board is the giveaway that it's a prebuilt unit; those boards are sold to OEMs. I'm not sure what games you're playing, but I suspect they're games like Warzone, Fortnite, Decay, Red Dead Redemption 2, the Far Cry series, Cyberpunk 2077, and PUBG: basically games written in C++ or known to be CPU intensive. Going to a 2K monitor will make the problem worse because of even more load on the CPU. The only way to actually get out of the CPU bottleneck would be to upgrade to a 5000 series CPU. That could be the lower-cost 5600X, or if you need more cores for work or whatever, the 12-core 5900X is the top choice. The 5950X is overkill and not the best gaming CPU compared with the other two.

These newer cards, whether AMD or Nvidia, require CPU upgrades to run some of the newer titles optimally. There's a thread on here where the OP says they literally went from 40-50 FPS at 1440p to the 200 FPS area by moving to the AMD 5900X; that OP had the RX 6900 XT. I mention this because you remarked that changing your settings gave very little increase in performance. That's a clear indication of a CPU bottleneck. I think the 5600X is the best choice, since it will beat any 3000 series CPU and costs ~$300 less than a 5900X, at around the $400 mark currently.

That said, it's still worth the effort to do the RAM upgrade. However, you might want to wait on the 2K until you can get into a 5000 series CPU.
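The reasoning in this reply (settings changes barely move FPS, so the GPU probably isn't the limit) can be written down as a rough check. This is a hypothetical sketch, not a diagnostic from AMD or any tool: `Run`, `likely_cpu_bound`, and the 10% / 90% thresholds are assumptions chosen for illustration. You would feed it average FPS and GPU usage from two of your own passes, one at high settings and one at low.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One benchmark pass: average FPS and average GPU usage in percent."""
    fps: float
    gpu_usage: float


def likely_cpu_bound(high: Run, low: Run,
                     min_fps_gain: float = 0.10,
                     full_load_pct: float = 90.0) -> bool:
    """Rough heuristic with hypothetical thresholds.

    If dropping settings/resolution raises FPS by less than `min_fps_gain`
    (as a fraction) while the GPU still sits below `full_load_pct`, the GPU
    is probably waiting on something else: CPU, engine, or driver overhead.
    """
    fps_gain = (low.fps - high.fps) / high.fps
    return fps_gain < min_fps_gain and low.gpu_usage < full_load_pct


# Hypothetical numbers, not measurements from this thread.
print(likely_cpu_bound(Run(fps=30, gpu_usage=60), Run(fps=31, gpu_usage=40)))   # True
print(likely_cpu_bound(Run(fps=60, gpu_usage=99), Run(fps=100, gpu_usage=99)))  # False
```

A `True` only says the GPU isn't what's holding FPS back; as later replies in this thread show, the real cause can still be a driver, the game engine, or a faulty part.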

I did upgrade my RAM today to 32GB 3600 MHz. My FPS did go up, but GPU usage is still low. In Skyrim I'm getting 40 FPS instead of 30, at a consistent 10-20% usage. Are you absolutely sure this could be a CPU bottleneck? My CPU usage never really exceeds 60% and mostly hangs around 20-30%.

No, the CPU is not the problem. My 8700K is keeping up just fine with my 6900 XT.

Make sure you use 2 separate power cables from your PSU to the GPU. Do NOT daisy-chain one power cable. You can also download GPU-Z and make sure you are running at PCIe x16.

What happens when you daisy-chain the power cable to the GPU? I have done this and am having the same problems with a 6800 XT and an R5 3600 on a B450 Tomahawk Max with 3200 MHz RAM. The card performs as expected in sponsored games such as AC Valhalla, but in Warzone my performance is 60 FPS lower than it should be.

I would use 2 x 8-pin connectors on separate power cables, with no dongle split or "daisy chain". The card pulls more than the rated 9 A load of a single cable. Warzone in general seems to have issues with FPS; poorly optimized game? Here's a good thread on that game:

[SOLVED] - 100 % CPU usage Modern Warfare, Warzone | Tom's Hardware Forum
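The daisy-chain advice comes down to how much current a single cable carries. A back-of-the-envelope sketch, assuming the PCIe x16 slot supplies up to 75 W (the PCIe CEM slot limit, treated here as if it were all 12 V, which is an approximation), the rest arrives over the 12 V auxiliary cables split evenly, and a 300 W board power (AMD's reference figure for the RX 6800 XT; partner cards can draw more). The even split is a simplification, and `amps_per_cable` is a hypothetical helper.

```python
def amps_per_cable(board_power_w: float, cables: int,
                   slot_w: float = 75.0, rail_v: float = 12.0) -> float:
    """Approximate 12 V current per PSU cable feeding the card's 8-pin inputs.

    Assumes the slot delivers up to `slot_w` and the remainder is split
    evenly across `cables` separate runs from the PSU (a simplification).
    """
    if cables < 1:
        raise ValueError("need at least one cable")
    aux_w = max(board_power_w - slot_w, 0.0)
    return aux_w / cables / rail_v


# Assumed 300 W board power (RX 6800 XT reference spec).
print(amps_per_cable(300, cables=1))  # 18.75 -> one daisy-chained cable feeds both 8-pins
print(amps_per_cable(300, cables=2))  # 9.375 -> two separate cables
```

Halving the per-cable current is the point of running two separate cables; what a given cable is actually rated for depends on the PSU and its cabling, so check the PSU's documentation.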

Yes, the CPU is causing it. The poster who replied "no" is running an Intel CPU, and those work quite differently. Ryzens have poorer single-thread performance than something like his 8700K, and there are other disadvantages when pairing the RX 6800 XT with 3000 series CPUs. That game is very CPU intensive; the fact that your CPU usage ever hits 60% tells me that. Your FPS tells me that too. You're probably running at 1440p or 4K as well, which makes things worse, since the CPU/GPU need to work harder to draw more pixels.
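A low overall CPU percentage doesn't rule out a CPU limit. The overall figure is essentially an average across all logical threads, so one saturated game thread barely moves it on a 16-thread chip like the 3800X. A minimal sketch with hypothetical per-thread numbers (not measured from anyone's system); `overall_usage` is an illustrative helper, not a monitoring API.

```python
from typing import Sequence


def overall_usage(per_thread_pct: Sequence[float]) -> float:
    """Overall CPU usage as the average across logical threads."""
    return sum(per_thread_pct) / len(per_thread_pct)


# Hypothetical 8-core/16-thread CPU: one game thread pegged, the rest lightly loaded.
threads = [100.0] + [20.0] * 15
print(overall_usage(threads))  # 25.0 overall
print(max(threads))            # 100.0 on the busiest thread
```

That 25% overall figure sits in the 20-30% range reported earlier even though the main thread is maxed out, which is why per-thread graphs (HWiNFO, or Task Manager's logical-processor view) say more than the single overall number.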

Check out a few Warzone posts on here about low FPS and see what most people did. Many went to the 5950X (extreme, and more for work), others went to the 5900X (great for work and play), and most go with the 5600X for pure gaming and light workloads.

In my opinion, and that of many others, you cannot go wrong with the ~$370 5600X for a gaming rig. If you add video editing, Adobe-type stuff, or folding, you want the 5900X or 5950X. The 5800X is just not worth the money when the others outperform it or come really close to it, as the 5600X does. The 5800X is ~$180 more than the 5600X, putting you in the 5900X's price range, and that's a far better CPU if you're spending the money.

FYI, I looked at my GPU usage in BF4 on Ultra at 1080p on a 32" 185 Hz monitor, and it doesn't go much above 40%/130 W, averaging 189 FPS. That seems to be the case with most games I have, so I wouldn't go by the usage as much as by the experience. I may go to the 5600X eventually, but 130 FPS is my worst in Far Cry 5/New Dawn, so I'm not complaining. Skyrim isn't a game I have, but it's heavy with scripts, which slow it down. Here's a read on that, and on why Intel would run it better in the other poster's rig: Stupid question, can the skyrim engine be a bottleneck? : skyrimmods (reddit.com)

An Intel 8700K would get through scripts faster, and the GPU you have can't make up for that by itself. You need more IPC, and that is something that pairing a 5000 series CPU with that GPU would solve. The 5000 series' larger unified L3 cache, plus SAM (Smart Access Memory), does amazing things.

This is absolute, total, utter garbage. The 3800X is a superb CPU and will run any game very well.

I saw you helping this fellow out - I was wondering if you could possibly do the same for me?

I'm having the identical situation, but with a 6900 XT and a 5950X (so no CPU bottleneck). I'm getting extremely low GPU usage that fluctuates anywhere from 5% to 89% (according to Radeon Software). My FPS in Battlefield V has been around 130+ on ultra settings at 1440p; however, I've been getting tons of stuttering. COD: Cold War? Virtually unplayable. I'm topping out at roughly 80 FPS on ultra settings, and the stuttering is 10x worse than in Battlefield.

This is honestly kind of upsetting, because I purchased the 6900 XT as a backup for my blown RTX 3090, and I was expecting virtually the same performance (if not better in some instances). However, I'm not feeling the hype too much.

My build is as follows:

ASUS TUF RX 6900 XT

Ryzen 9 5950X

ROG Crosshair VIII (Wi-Fi) X570

G.Skill Trident Z Royal (32GB) OC'd to 3200 MHz

EVGA SuperNOVA 1000W Gold

Any advice would help out tremendously. Everything is running stock except the RAM OC.

Same here, I need some help as well. I have the same issue with a Sapphire Nitro+ 6800 XT and a 5800X (so no CPU bottleneck either). I get good performance at 1080p in games like Red Dead, Ark, even Cyberpunk. But in GTA V, Battlefront 2, COD, and some other titles, my GPU usage bounces between 30% and 90%, resulting in very poor performance, around 50 FPS. I have the same problem with AC Odyssey and Origins: terrible performance. This is upsetting and frustrating when you spend this kind of money. I already ran RAM tests and they came back fine.

My build

Sapphire Nitro+ RX 6800 XT

Ryzen 7 5800X

ROG B550-F Gaming

Crucial Ballistix DDR4 3600 MHz (16GB)

NZXT 850W Gold

@Joelacosta15p , @WallabyMaster

So it could well be the CPU. I just got the 5600X, and my Far Cry 5 performance went down, way down. Far Cry New Dawn goes way up, then eventually drops to 90, then levels off in the 160 area. I'm still experimenting, but for starters, make sure both of your CPUs are set to PBO Disabled, not Auto. There may be some CPU-related issues to iron out, and I will be spending the next few days trying to figure out the enigma.

In my testing so far, my 5600X will drop core 5 in the middle of a heavy all-core load. Some cores fail to boost like they should, and it sticks to boosting only the 2 best cores. Weird stuff. So stay de-tuned or try jumping into the fray! I found SAM and the Curve Optimizer to be pretty useless as well. It might be the new BIOS 1.2.0.0, so I may try going back to 1.0.8.0, pre-curve, lol.

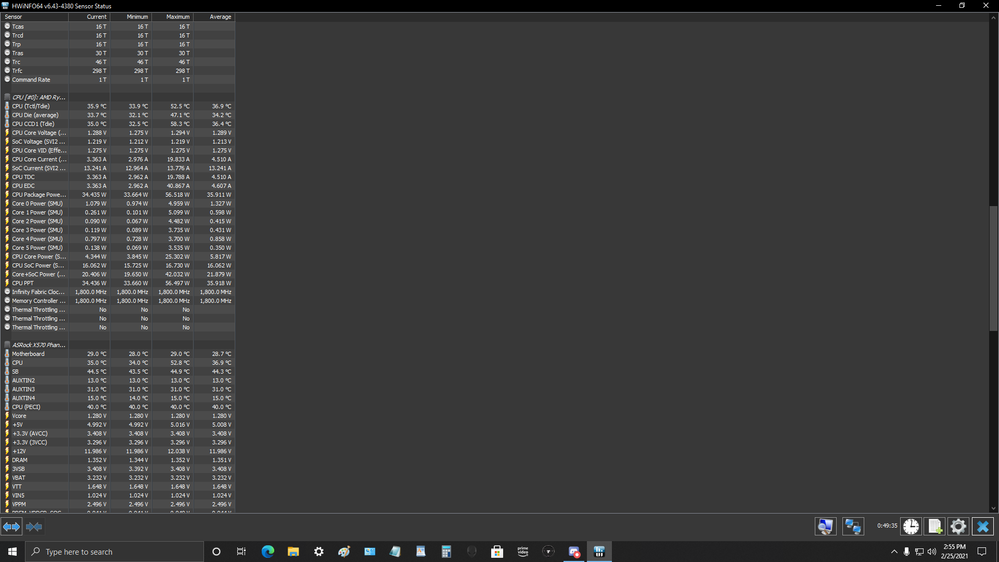

You can test whether cores are dropping by getting the 4.63 Beta version of HWiNFO, since it's patched to not cause shutdowns with the RX 6000 cards. Run the game(s) in question; when FPS tanks, close the game or Alt+Tab to view HWiNFO and look at the max and min clock speeds per core. You'll probably find one, or even three, cores that just stop or "sleep" at the time of the slowdown.

How to fix that, is the question.
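The per-core check described in this post can also be done on a logged run instead of by eye. A sketch assuming you have logged sensors to a CSV (HWiNFO has a logging option) and already pulled the per-core clock columns into a dict; column names differ between versions, so parsing is left out, and `sleeping_cores` plus the 50% drop threshold are hypothetical choices. Idle Ryzen cores legitimately clock down, so a flag is a lead to investigate, not proof of a fault.

```python
from statistics import median
from typing import Dict, List


def sleeping_cores(clocks_mhz: Dict[str, List[float]],
                   drop_ratio: float = 0.5) -> List[str]:
    """Flag cores whose lowest logged clock falls below `drop_ratio`
    times that core's own median clock during the session."""
    flagged = []
    for core, samples in clocks_mhz.items():
        if samples and min(samples) < drop_ratio * median(samples):
            flagged.append(core)
    return flagged


# Hypothetical log excerpt covering a slowdown.
log = {
    "Core 0": [4600, 4550, 4600, 4575],
    "Core 5": [4500, 4450, 800, 4500],
}
print(sleeping_cores(log))  # ['Core 5']
```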

Appreciate the response!

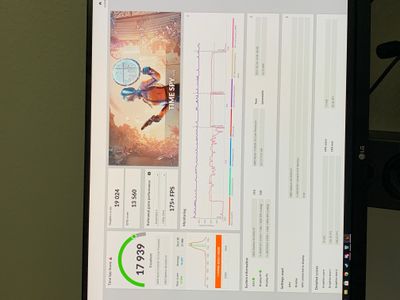

Update: I “think” I figured out what my problem was. I still had pre-existing NVIDIA drivers, so I ended up removing all drivers and reinstalling the Adrenalin driver. My FPS seems solid (180-200) on ultra settings in Battlefield. However, I still sometimes get stuttering (I'm not sure if it's because the map itself is extremely populated). When I run the Halo 4 remaster, there are no stuttering issues, and FPS is still about 150+. The biggest issue I've noticed, though, is that my GPU usage is still fairly low, now about 70-80%. Sometimes on an idle screen it'll run at 90%. If I run a benchmark program, it holds a steady 98-99%.

I disabled PBO, ran HWiNFO, and ran 3DMark (results attached). My cores seemed normal (I'm kind of ignorant about this type of stuff, so please take it with a grain of salt lol). My cores fluctuated: some boosted up to almost 5000 MHz, while others stayed around the 3600 MHz mark. Sometimes all cores were in sync. But none of them ever went below 2800 MHz while running the test. I've also noticed my VRAM usage is typically low (according to Radeon Software, it runs around 30-40%). I was wondering if maybe my PSU isn't putting out enough power, so I ordered an EVGA 1000W Platinum to see if it helps. More to follow on those findings.

Appreciate the help though, brother. I'm going to take a look at HWiNFO while playing BFV and see what happens.

I think PBO is broken, so I went to an all-core OC of 4700 MHz at 1.27 V. Temps are around 40°C at idle and 60°C in games, with no FPS drops. To do it, you need to turn off CPB and PBO and manually set the bus clock to 100 MHz. Start at 4700 MHz (a 47x multiplier) and a manual Vcore of 1.256 V. Test with the HWiNFO 4.63 Beta, because the stable version will crash your system due to a GPU compatibility problem, and use Cinebench R23 for the basic stability check.

Run the multi-core test first with HWiNFO on top to monitor your temps. While testing, it might crash at that voltage. If so, go back into the BIOS and add a small amount to Vcore; the next step is roughly 1.270 V. This might even be the best setting. Re-run the test, and if you pass 2 times and temps stay below 85°C (I get 73-75°C multi-core), I'd say you're good at 4.7 GHz solid. If temps are low, like 60°C, you might try for 4.8 GHz. That would be exceptional; some have gotten to 4.9 or even 5 GHz, but let's keep things real.

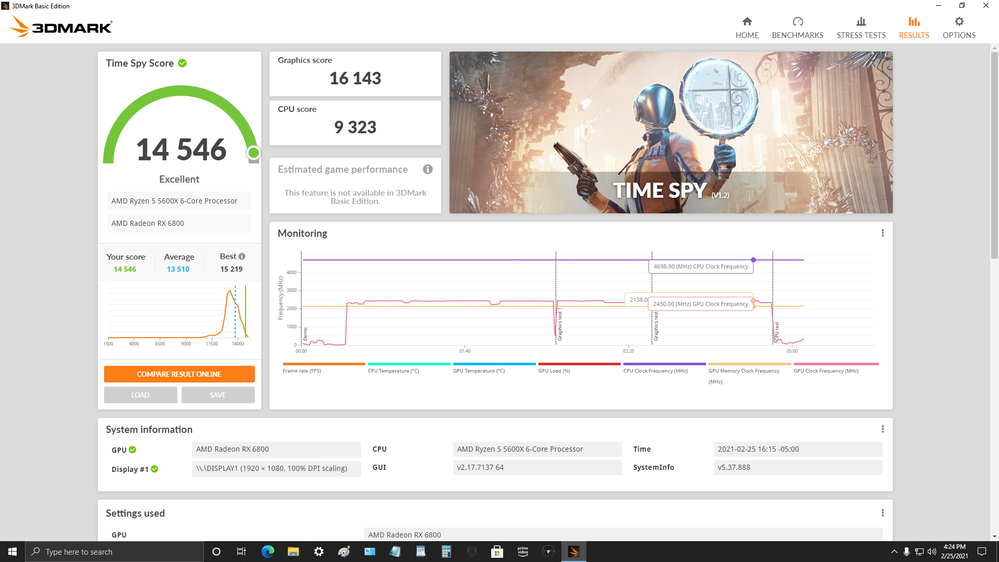

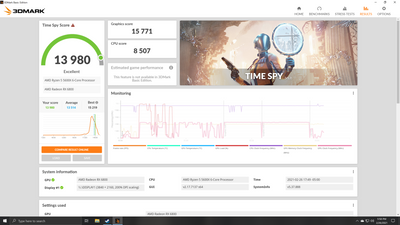

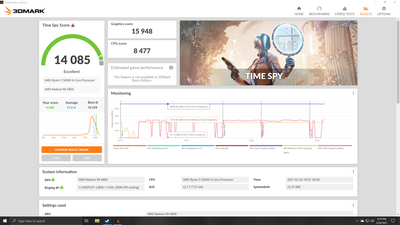

Next, once you've found a good all-core OC at the lowest possible voltage (not over 1.3 V, because your multi-core temps will most likely hit the 90°C mark and that's no good), do a single-core pass twice. Lastly, if all is stable and temps are down, I would run 3DMark Time Spy (free version). It should pass with flying colors, and your CPU line will be flat, not rippling.

Do it right and you wind up right around my voltage with the same or higher clocks on all cores. There's no single-core performance reduction; it goes up. Temps should be roughly 60°C in the toughest games, with no FPS drops, CPU usage loss, or GPU usage dropping. At idle you should be around 40°C or less. Keep in mind I'm using a Corsair H110i AIO; air coolers will work, but the clocks will be lower.

PBO and all the tricks couldn't get me to the 4.6 GHz the box says the CPU can do. Disabling PBO got me closer. Manual tuning hit the mark without Ryzen Master, though Ryzen Master gave me a clue as to where to go. I researched it, and many have tried this and it works great. Silicon degradation? I doubt it's any worse than PBO blasting 3 cores at a time with 1.45 V and temps sticking at 75-80°C in game.

It took me from my last post until yesterday to dial this in. Your journey will be much shorter if you try this, but just go slow. No big voltage swings; nice and easy. It may reboot 10 times as you get it perfect. Hopefully my "hinted" voltages help guide you to a faster result. Check out my results.
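The trial-and-error loop in this post (start low, bump Vcore one step after a crash, accept a voltage only after two clean passes under the temperature limit, never go past 1.3 V) can be written out to make the stopping rules explicit. This only models the manual process; nothing here talks to the BIOS or Cinebench. `run_test` is a hypothetical stand-in for you running the benchmark and reporting whether it was stable plus the peak temperature, and the 0.014 V step is an assumption picked to match the 1.256 V to ~1.270 V example; real BIOS step sizes vary by board.

```python
from typing import Callable, Optional, Tuple

# (stable, peak_temp_c) reported after one manual stress-test run.
TestResult = Tuple[bool, float]


def find_stable_vcore(run_test: Callable[[float], TestResult],
                      start_v: float = 1.256,
                      step_v: float = 0.014,      # assumed step size
                      max_v: float = 1.30,        # ceiling from the post
                      temp_limit_c: float = 85.0,
                      passes_needed: int = 2) -> Optional[float]:
    """Return the lowest Vcore that passes `passes_needed` runs in a row
    under `temp_limit_c`, or None if none does within the ceiling."""
    vcore = start_v
    while vcore <= max_v + 1e-9:
        for _ in range(passes_needed):
            stable, peak_c = run_test(vcore)
            if peak_c >= temp_limit_c:
                # More voltage only adds heat: lower the clock target instead.
                return None
            if not stable:
                break  # crashed: try the next voltage step
        else:
            return round(vcore, 3)  # every pass succeeded
        vcore += step_v
    return None


def fake_chip(vcore: float) -> TestResult:
    """Hypothetical chip: needs at least 1.265 V for 4.7 GHz, peaks at 74 C."""
    return vcore >= 1.265, 74.0


print(find_stable_vcore(fake_chip))  # 1.27
```

Returning `None` on a temperature failure (rather than stepping up) mirrors the post's point that the answer to heat is a lower clock, not more voltage.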

The first image is from before your overclock and the second is after. Usage is still all over the place, and Fortnite and Warzone have really bad frame rate drops down to the teens, with the game micro-freezing. I have an AMD RX 6800 with a 5600X, 16GB of 3200 MHz memory, a B550 Tomahawk board, and an 850 W Gold PSU. I have uninstalled the drivers with DDU, reinstalled Windows, and updated the BIOS and chipset drivers. I don't know what else to do. This is a new build, and it seems to be getting worse, with random system resets in both games.

What did you do to the CPU settings? It dropped below the base score. In the BIOS there are a few settings that help stabilize things. One is not using XMP or DOCP and instead entering your RAM timings and IF speed manually (3200 RAM / 1600 IF, or FCLK). It can be what's on the box, nothing custom; the IF is half the RAM speed. Manually set the SOC/Uncore voltage to 1.10 V; it tends to run at 1.2 V or higher, and that can cause problems. Set the bus speed manually to 100 MHz; boards tend to run 99.8 MHz, and believe it or not, it makes a difference. Look in the hardware info area of the BIOS and make sure the DRAM voltage is at spec. If it's lower, go back to the RAM voltage setting and enter it manually, adding about 0.02 V (1.350 V becomes 1.370 V). Reboot back into the BIOS and recheck the voltages in the hardware info area; the DRAM voltage should be dead on or slightly high, which is better than too low. Oh, and make sure the RAM is in slots A2/B2 if you're using 2 sticks.

It's important to list what you OC'd and how. Was it the GPU only, or did you go for a manual all-core OC, PBO, etc.? The resets need to be handled. FPS drops in those titles are common, since both are poorly optimized in general; Fortnite has its own issues that the devs have allegedly been trying to work on. All Epic games are sort of not up to par these days, but total crashes aren't one of the complaints.
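The two bits of arithmetic in that paragraph, sketched under its own rules of thumb. DDR transfers data twice per clock, so the number on the box (3200) is twice the real memory clock (1600 MHz), and running FCLK 1:1 means matching that clock. The +0.02 V DRAM offset is this poster's suggestion (1.350 V to 1.370 V), not an AMD spec. Both helper names are hypothetical.

```python
def fclk_for_1to1(ddr_rating_mts: int) -> int:
    """FCLK (MHz) for 1:1 mode: half the DDR transfer rate printed on the kit."""
    return ddr_rating_mts // 2


def dram_voltage_setting(spec_v: float, offset_v: float = 0.02) -> float:
    """Manual DRAM voltage: the kit's rated voltage plus a small offset."""
    return round(spec_v + offset_v, 3)


print(fclk_for_1to1(3200))          # 1600
print(fclk_for_1to1(3600))          # 1800
print(dram_voltage_setting(1.350))  # 1.37
```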

I just overclocked the GPU. I have tried with PBO on and off; nothing seems to matter. I was able to get the random resets to stop after I repaired Windows with SFC /scannow. But Time Spy is unstable with any CPU overclock, with the GPU at stock. It seems most stable with a negative 20 undervolt. My CPU temps never even reach 70°C, and the GPU max is 82°C. I know Fortnite is not optimized well, but I can put all settings on low, get 400 to 500 FPS, and still get drops into the teens. Valorant runs fine and Apex is also fine; Warzone is hit or miss, but Fortnite is a stuttering mess.

My only guess would be a bad CPU cooler; because of the safeguards, the only way to know is to switch to another cooler with good paste and see. Also make sure you have 2 PCIe cables on the GPU and not just a split cable; I think it uses too many watts for a daisy chain. The GPU should always be at high usage unless it is bottlenecked in a game or just not receiving enough power.

DO NOT set PBO to Disabled unless you don't have the option to set it to Advanced. If you can set PBO to Advanced, then set the EDC limits to 'Motherboard'. That should solve issues with your CPU; that fix solved every issue I had with my 5800X. As for my 6900 XT, in Skyrim it has GPU usage that bounces between 30% and 60%, with constant frame dips into the 30s. It's playable, but very irritating, and it's still an issue for me.

My Specs:

Ryzen 7 5800X (280mm AIO, OC'd to 4.775 GHz all-core)

G.Skill 4x16GB (64GB total) @ 3600 MHz

MSI B550 Gaming Carbon WiFi

XPG 1TB NVMe SSD Gen 3.0

Inland Premium 2TB NVMe SSD Gen 3.0

AMD Radeon RX 6900 XT

Just be careful: Warzone is a pain in the ass when it comes to AMD GPUs. Don't make rash decisions that won't solve anything. It's more likely the game rather than your PC. My son has a 5700 XT and I have a 6900 XT. It runs awfully on his PC with a 10700K. I'm currently using a 3700X, and it's fine at 200 FPS on high settings with the 6900 XT.

Falling in line with the last two comments here, I'm fairly mystified why anyone's initial advice to folks having massive performance issues while running perfectly capable processors with brand-new graphics cards would be to overclock them, thereby both voiding warranties and adding complications to the end goal of just getting their darned games running smoothly. No CPU listed by anyone having issues here should be considered a "bottleneck" to playable FPS in any modern title at 1080p. Again, my 8-year-old 4-core 4770K was more than capable of supporting a 1070 Ti at better FPS than I was seeing with my jacked 5900X/6800 XT combo, and the OP reported better FPS with their last GPU as well.

Ditto with dropping XMP and messing around with RAM settings. I'm quite sure the people giving the advice know exactly what they're talking about and have seen solid benchmark gains tightening their timings, but if someone's system can't run a basic XMP at 3200 and/or FCLK doesn't automatically sync up at that speed, that's an issue to troubleshoot all on its own.

I love squeezing out that last few FPS to top out a benchmark score as much as anyone else, but I'm seeing a whole lotta pointing to the middle of the tweaking list here, not to the top of the troubleshooting tree where you might want to start, especially while still under warranty with RMA as an option if you discover something hardware related.

As to my own issue... gremlins. It was apparently goddamn gremlins. I was in the midst of building up my 5600X as a spare system to game with until AMD gets back to me, and remembered I still have my old SSD with Steam/TimeSpy/Destiny 2 installed. Tossed that in the 5900X rig and, surprise surprise, my TimeSpy GPU score was back above 18k. Figured at that point it might just be as simple as reinstalling Steam et al. on my main NVMe, but that worked perfectly as well once it was back in. I could say it was reseating the GPU to get to the NVMe, but I'd already done that about 4 times. Currently running a very reasonable 17.5k overall (https://www.3dmark.com/spy/19628330), with GPU usage a nice flat line at 99% like it should be. That CPU isn't running PBO, btw; PBO limits are disabled, with a -15 all-core undervolt curve and max allowed frequency bumped up 200 MHz.

Wish I could say I'd found the smoking gun for everyone...

Hi,

It seems to be more or less the same thing for Destiny 2:

low CPU and GPU usage in game causes FPS drops,

though it seems normal in the game menu...

Check the Bungie forum's PC FPS issues megathread.

Exactly the same for me. I have an AMD 5950X & RX 6900 XT.

But GPU utilization (GPU UTIL) and GPU power (GPU PWR) are always too low,

and the result is low FPS.

I'm having the same issue: a Radeon 6000 series GPU paired with a Ryzen processor performing vastly worse in gaming than it should. Specific symptoms are low GPU scores in TimeSpy (yet normal CPU scores) with erratic GPU load mid-test, low FPS in games, and stuttering.

I honestly think it's a random hardware compatibility problem specifically between the CPU and GPU rather than a CPU bottleneck or RAM issue. The chip I'm having this problem with is a 5900X running perfectly stable at 1:1 with 4 sticks of single-rank 3800 MHz G.Skill 14-16-16-36 RAM with XMP. The motherboard VRM is pretty well overkill for anything but heavy overclocking, the cooler is a Noctua NH-D15 that keeps the chip below 40°C at idle, and my PSU is a Seasonic Prime 850W. I've done some pretty extensive hardware, temperature, driver, and BIOS troubleshooting to rule out anything else.

With my 5600X the RX 6800XT will score in the low 18k range (GPU only, 15,500 combined) in TimeSpy. Destiny 2 will run smoothly at 1440p at highest settings between 120-144 FPS all day without anything ever breaking 70C. That's basically what I'd expect without really tweaking things.

Yet when I swapped in a 5900X fresh out of the box from AMD RMA, with an identical system and settings, my TimeSpy GPU score dropped to the low 13k range, and Destiny 2 can't even hold a steady 70 FPS at medium settings: worse than the i7-4770K/GTX 1070 Ti setup I just retired a few months ago. Every other aspect of the system is stable, and the TimeSpy CPU score is perfectly reasonable for a non-overclocked 5900X (13,200ish).

I tried 3 different releases of Adrenalin drivers and two different BIOS versions, and tested both chips with a different RAM kit, motherboard, and PSU. It doesn't matter: this seems to be independent of everything else and specific to my 5900X + 6800 XT running together. Wish I had a spare 6000 series GPU to try, but don't we all...

I bounced this off the GPU's tech support and they were completely stumped- couldn't think of anything to try that I hadn't. Waiting now on AMD's reply to all this. I'll keep y'all updated on what I hear.

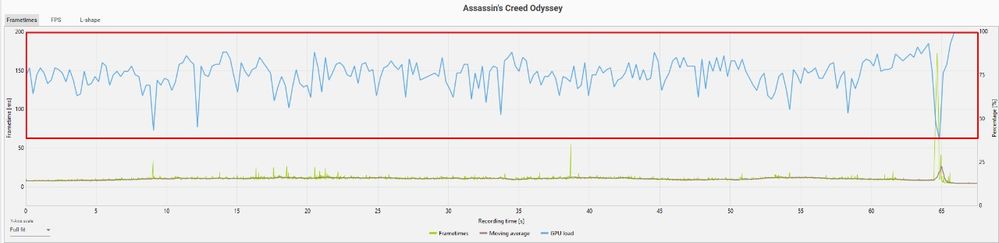

For those wondering whether the low GPU usage is due to a card fault or a component bottleneck, given the results in AC Origins or Odyssey: don't worry, it is not. These two games only run well at 4K, because at that resolution the cards are forced to work hard. At lower resolutions, due to a driver fault, any AMD card running these games will show poor performance.

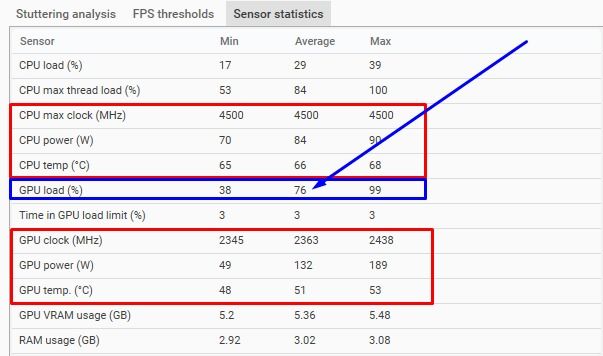

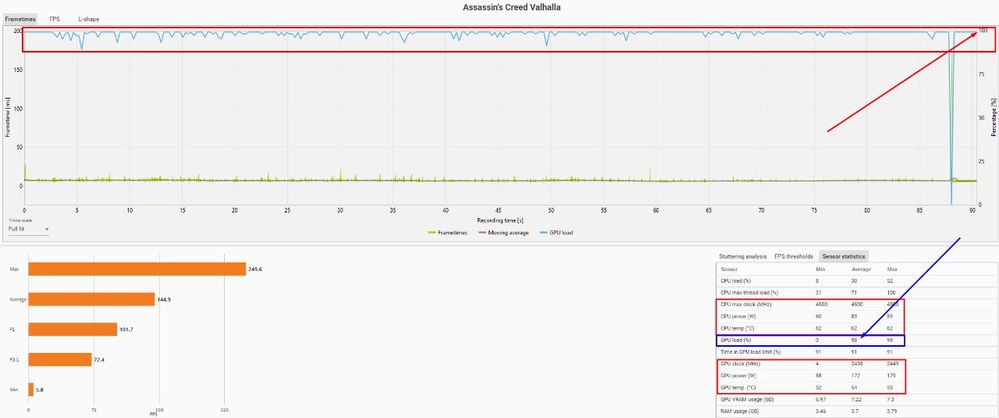

I'm attaching 3 images showing the GPU usage comparison between AC Odyssey and Valhalla. In the first, the average usage was 76%, against 98% in the second.

The system was the same for both games: a 5900X @ 4650 MHz + 6900 XT @ 2500 MHz.

AC Odyssey GPU Low Usage (Average 76%)

AC Odyssey GPU Low Usage (Average 76%)

AC Valhalla GPU Correct Usage (Average 98%)

Based on this data, it is clear that this issue is due to a driver fault; AMD knows it and does nothing (as always). So don't worry about your systems; they're probably fine.
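The 76% vs 98% comparison is just an average over logged GPU usage samples. A small sketch for doing the same with your own logs; the sample values are made up, and `usage_summary` and the 95% "full load" cutoff are assumptions. Reporting the share of time below full load alongside the average helps, because a 76% average can hide a lot of deep dips.

```python
from statistics import mean
from typing import Sequence, Tuple


def usage_summary(samples_pct: Sequence[float],
                  full_load_pct: float = 95.0) -> Tuple[float, float]:
    """Return (average usage, fraction of samples below `full_load_pct`)."""
    avg = float(mean(samples_pct))
    below = sum(1 for s in samples_pct if s < full_load_pct) / len(samples_pct)
    return avg, below


# Hypothetical samples, not the poster's data.
print(usage_summary([60.0, 80.0, 90.0, 74.0]))  # (76.0, 1.0)
print(usage_summary([98.0, 99.0, 97.0, 98.0]))  # (98.0, 0.0)
```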

Cards from AMD do not work well under DirectX 11. Run any game on Vulkan or DirectX 12 and it will look the same as in your screenshot: 98%+ load on the GPU. AC Valhalla uses DirectX 12, and there is no bottleneck. In DirectX 11 games, for example Days Gone, the GPU load will drop when you are in settlements or large cities, because DirectX 11 cannot work with a large number of processor threads. In simple scenes outside the city in Days Gone or similar games, where the scene isn't overloaded with objects, DirectX 11 will be top! As soon as the scene is oversaturated with small details, NPCs, objects, etc., DirectX 12 or Vulkan will pull ahead.

Hey guys,

I've had the same problems since I built my new PC.

- Ryzen 5800X

- Sapphire Nitro+ 6800 XT SE

- 16GB Corsair Platinum 3600

- SSD and all the new stuff, 2500 EUR in total.

Some games run well (CS:GO, Star Wars Jedi: Fallen Order, COD, Horizon Zero Dawn), but some important games like Destiny 2 and the new Mass Effect Legendary Edition are so bad. Resolution doesn't matter (1080p/1440p); changing it changed nothing. The utilization swings are incredible: within 10 seconds it goes from 5% to 80% to 40%, etc. FPS drops all the time, and it's not playable: FPS ranges from 10 to 150 in Destiny and from 10 to 240 in Mass Effect.

I don't know why utilization can't stay above 90%.

All benchmarks are great, and I have tried all the common fixes. I'm spending more hours looking for a solution than enjoying gaming.

I fixed my problem. I returned the card to AMD. The new one works great. Fortnite and COD and all other games run without all the micro freezing.